User-experience (UX) problems in course design can be challenging for students because the web interface mediates most online learning. Yet UX is often underemphasized in e-learning, and instructional designers rarely receive training in UX methods. In this chapter, the authors first establish the importance of UX in the contexts of both learner experience and pedagogical usability. Next, the authors describe their study using think-aloud observations (TAOs) with 19 participants. This TAO study identified the following design principles for maximizing the UX of online courses offered within a learning management system (LMS): (a) avoid naming ambiguities, (b) minimize multiple interfaces, (c) design within the conventions of the LMS, (d) group related information together, and (e) consider consistent design standards throughout the university. Finally, the authors offer specific guidelines and directions to enable others to conduct similar TAO testing within their own university contexts.

Author's Note

Two earlier manuscripts are the basis for much of this chapter. The first, a practitioner report, was shared through a university project site to inform internal university audiences about the study's findings and the resulting suggestions for best UX design practices in Canvas.

Gregg, A. (2017). Canvas UX think aloud observations report [Report written to document the Canvas user experience study]. https://edtechbooks.org/-kZrk

A second, published piece was written as a guide to help others in the instructional design field conduct UX testing within their own institutions. It is reprinted here with modifications and with the approval of the Journal of Applied Instructional Design.

Gregg, A., Reid, R., Aldemir, T., Garbrick, A., Frederick, M., & Gray, J. (2018, October). Improving online course design with think-aloud observations: A “how-to” guide for instructional designers for conducting UX testing. Journal of Applied Instructional Design, 7(2), 17–26.

This chapter includes condensed versions of the initial university report and the JAID article. In this way, readers of this chapter can read about the study itself, including details on methods and findings, as well as access a practical guide for conducting their own UX testing. Additionally, this chapter, as opposed to the two previous manuscripts, includes a more thorough review of the learner experience literature to properly situate it in the context of this book.

1. Introduction

User-experience (UX) research puts the emphasis on the perspectives of users: what they value, what they need, and how they actually work (U.S. Department of Health & Human Services, 2016). This emphasis is important as misalignment between designer intention and actual user experience can result in unintended consequences (Nielsen, 2012). Figure 1 illustrates a commonplace example of this misalignment when a number of users do not follow the designer’s intended path.

Figure 1


Desire Path

Note. Photograph by Wetwebwork, 2008. Creative Commons license on Flickr.com.

UX testing provides a crucial bridge between designer intentions and user interaction. UX testing ultimately “reminds you that not everyone thinks the way you do, knows what you know, and uses the Web the way you do” (Krug, 2014, p. 114). Given that the web interface mediates nearly all of online learners' experiences in their courses, online learning seems to be a natural, if not critical, area for UX research (Crowther et al., 2004). Indeed, as Koohang and Paliszkiewicz (2015) argued, “The sound instruction that is delivered via the e-learning courseware cannot alone guarantee the ultimate learning. It is the usability properties of the e-learning system that pair with the sound instruction to create, enhance, and secure learning in e-learning environments” (p. 60). UX in e-learning contexts matters and can affect learning: the time that learners spend on non-intuitive course navigation is time taken away from more important learning activities (Ardito et al., 2006; Nokelainen, 2006). Intuitive design has been defined as design in which “users can focus on a task at hand without stopping, even for a second” (Laja, 2019, para. 2) and which does not require training (Butko & Molin, 2012).

While the merits of UX testing in online learning have been recognized, such efforts have typically lagged behind other fields (de Pinho et al., 2015; Fisher & Wright, 2010; Nokelainen, 2006). This relative lack of emphasis on UX in e-learning may be in part due to some educators’ aversion to the conflation of “learners” with “users” (or “customers”) that a UX perspective can suggest (e.g., Rapanta & Cantoni, 2014). Another potential explanation for the lack of focus is that, while some instructional design models emphasize evaluation of the design (e.g., Reigeluth, 1983; Smith & Ragan, 2005), very little of the feedback designers receive about online courses is specifically focused on UX (Rapanta & Cantoni, 2014). Therefore, even if designers are interested in UX methods to improve online course design, they may not know where to begin or how to proceed.

This chapter is intended to demonstrate how UX testing, specifically the think-aloud observation (TAO) method, can be used in instructional design and online learning contexts to improve course-design usability. The chapter is organized as follows. First, the importance of UX and online course usability is situated within the contexts of learner experience and pedagogical usability. Second, the methods and findings of a TAO study conducted with 19 participants are described; the study identified both where navigation was easy and where participants faced challenges. Third, specific steps and guidelines based on both the literature and the authors’ reflective experiences are offered to enable others in instructional design contexts to conduct similar UX testing.

2. UX, Learner Experience, and Pedagogical Usability

Prioritizing UX in online course design assumes a learner experience paradigm, which at its core emphasizes the role and importance of the cognitive and affective experiences of learners (Dewey, 1938; Parrish et al., 2011). Dewey described learning as a transaction between internal and external factors or an individual and the environment:

An experience is always what it is because of a transaction taking place between an individual and what, at the time, constitutes his environment . . . The environment, in other words, is whatever conditions interact with personal needs, desires, purposes, and capacities to create the experience which is had. (1938, pp. 43–44)

Dewey’s conceptualization, while put forth well before Internet-enabled technologies, still applies to today’s context. For online learning, the learner’s experience is part of a transaction that takes place between the learner and the learning environment, which is largely mediated by the learning management system (LMS) interface through which learners access their online course. Within this context, educators and designers are responsible for creating “environing conditions” that maximize learning (Dewey, 1938, p. 44). Improving these conditions necessarily requires an understanding of how they affect the learner. UX testing is a way to learn how the LMS interface, a primary “environing condition” in online learning, is experienced by learners in terms of intuitiveness, functionality, and even aesthetics. In online learning, then, some aspects of the learner experience are inseparable from learners’ experiences as users of the course interface.

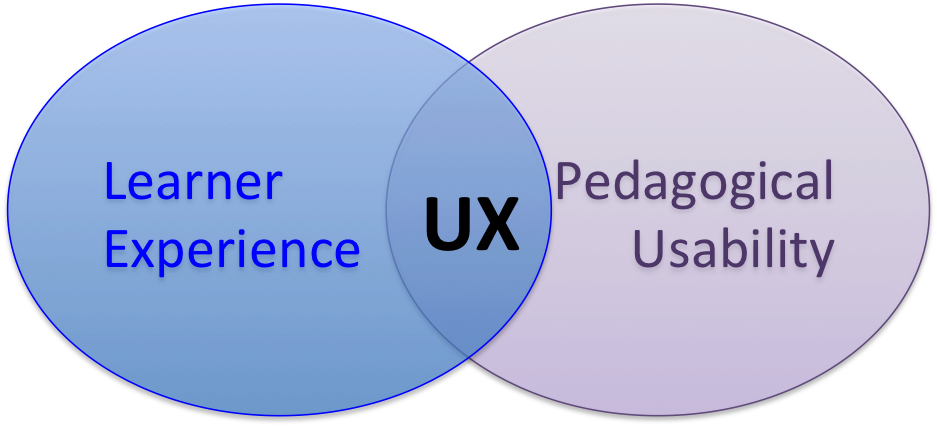

A concept related to but importantly distinct from both learner experience and UX is that of pedagogical usability, which refers to a category of usability strategies meant to operationalize learning-centered design principles in online learning environments (Silius et al., 2003, p. 3). Pedagogical usability is defined as “whether the tools, content, interface and tasks of web-based environments meet the learning needs of different learners in various learning contexts according to particular pedagogical goals” (de Pinho et al., 2015, Section II, “Pedagogical”, para. 2). This concept joins a growing body of literature emphasizing that online learning design requires more than the implementation of technical usability strategies and techniques (Zaharias & Poylymenakou, 2009). For example, Zaharias and Poylymenakou (2009) distinguished the usability of e-learning designs from their pedagogical value: “An e-learning application may be usable but not in the pedagogical sense and vice-versa” (p. 1). Thus, a learning-centered design paradigm, defined as designing with the purpose of meeting the unique needs of learners (e.g., motivational needs, pedagogical needs, metacognitive needs), has gained a significant role within UX studies (Brna & Cox, 1998).

Designing to improve UX will also contribute to improving pedagogical usability as learning-centered design requires an efficient combination of UX strategies and learning theories (Zaharias, 2004; Zaharias & Poylymenakou, 2009). See Figure 2 for a visual depiction of how UX intersects with both learner experience and pedagogical usability. This chapter focuses specifically on UX rather than the broader contexts of learner experience and pedagogical usability.

Figure 2


UX at the Intersection of Learner Experience and Pedagogical Usability

3. Think-Aloud Observations Study

The authors of this chapter were all involved in a university-wide LMS transition from ANGEL to Canvas at a university where instructional design is largely decentralized, and multiple design groups work on online courses. The structure, naming conventions, and functionality of Canvas were distinct enough from ANGEL to require transitional design decisions made by instructors and instructional designers across the university. Therefore, the authors, originating from four organizationally distinct instructional design units, collaborated to investigate online courses through a UX lens and executed a TAO study (Cotton & Gresty, 2006) to answer the following research questions: (1) How are students experiencing the UX of online course designs in Canvas? (2) What UX design principles and best practices do these experiences indicate? See Gregg (2017) for the university whitepaper written on this study.

3.1. Study Design

While not specifically a design-based research (DBR) study, our research was very much in the methodological spirit of DBR (The Design-Based Research Collective, 2003) through both intentionally improving practice—by making specific Canvas course designs more intuitive and easier to navigate—and contributing to the broader literature—by identifying UX design principles and methods that could be relevant to others in the field.

Each instructional design unit participating in the study recruited student participants who had taken that unit’s online courses. The target was five participants per unit based on usability best practices (Nielsen, 2000). The study went through the institutional review board (IRB), and the participants each signed a consent form allowing their data to be analyzed and shared in anonymized ways. Any participant names used in this chapter are pseudonyms. Participants each received a $50 gift card as an incentive on completion of the think-aloud observation; funding for incentives was provided by one of the participating colleges as well as a central instructional technology support department.

In total, 19 participants completed this study. The participant sample included males (12) and females (7); undergraduate (10) and graduate (9) students; and the following age ranges: 18–23 (4), 24–29 (4), 30–39 (4), 40–50 (5), 50–59 (2). Six of the participants had never used Canvas before, while 13 already had some experience in Canvas. While most students were located on or near the university’s main campus, one fully online student drove over eight hours to be able to visit the campus for the first time and participate in the study.

3.2. Data Collection

While each instructional design unit used its own course design and recruited its own participants, the study was conducted so that data collection would be consistent across units. Each unit developed a set of tasks for the participants to complete based on a consistent set of core questions; see Table 1 for examples of core questions.

Table 1

Sample Questions From the Think Aloud Instrument

| Task # | Task Description |
|---|---|
| Task 3 | You would like to receive a weekly email notification regarding course announcements. How would you set-up this notification? |
| Task 4 | You remember you have the Lesson 09 quiz this week but need to check the submission due date. How would you find out when your quiz is due? |

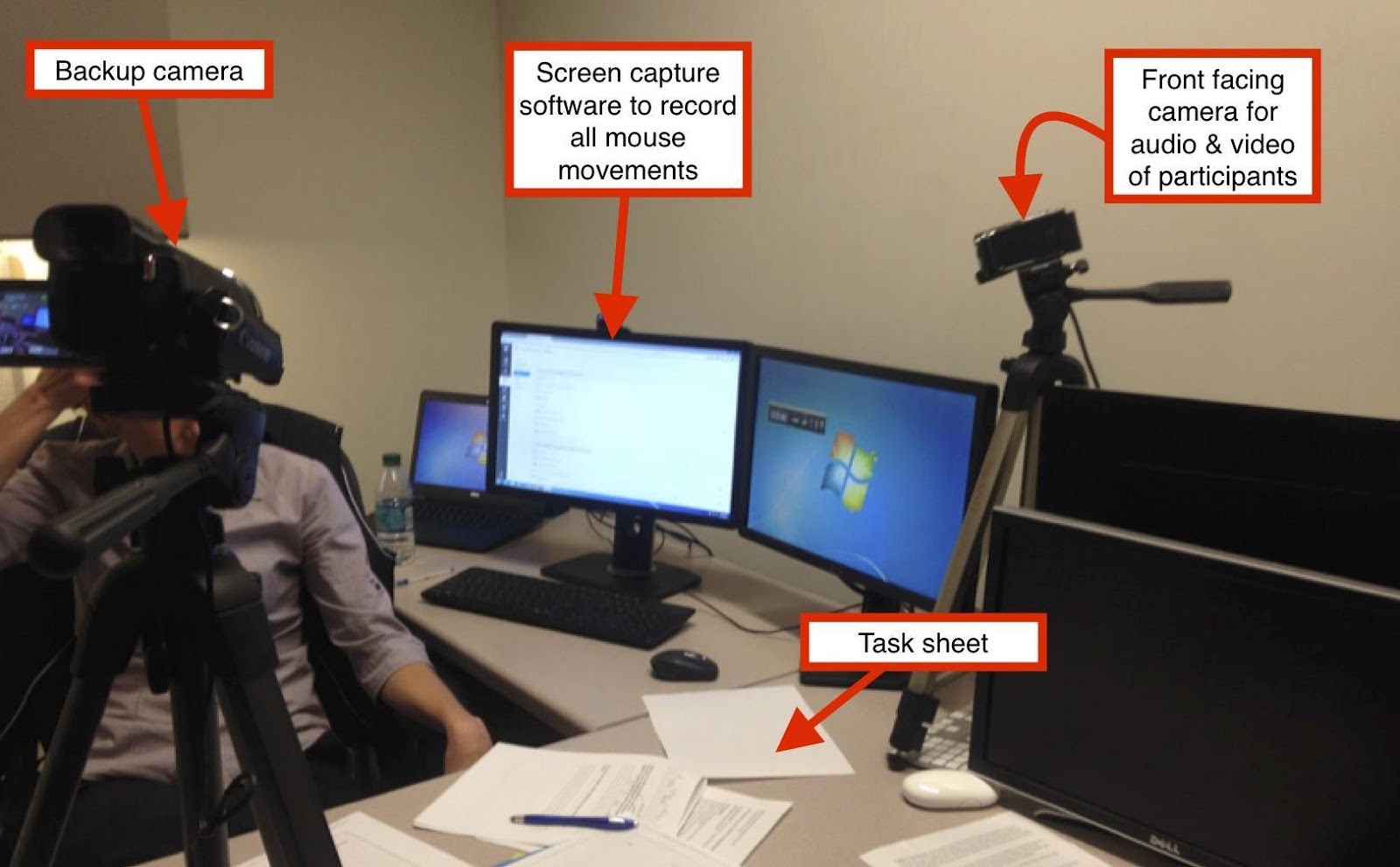

All of the facilitators, except those from the first instructional design unit participating in the study, received the same training in how to conduct a think-aloud observation. To reduce pressure or power differentials that the participants might have experienced, the facilitators explained to participants that there were no “wrong” answers or ways to do things and that the participants themselves were not being tested. As illustrated in Figure 3, each testing room was set up in a similar manner to capture audio, video, and screen recordings of the full observation. In each TAO, the participant was greeted by the facilitator, asked to sign the consent form, given a demonstration of how to “think aloud,” and then asked to complete each of the tasks while thinking aloud, to rate the difficulty of each task, and to respond to a series of open-ended questions. Immediately following each TAO, the facilitators wrote a reflective memo (Saldaña, 2012) capturing their observations of where the participants navigated seamlessly and where they had challenges.

Figure 3


TAO Standard Room Configuration

3.3. Data Analysis

To identify patterns in the learners’ actual behaviors and experiences, two researchers conducted the data analysis. Data considered were both qualitative (the facilitators’ reflective memos, participant comments, and navigation patterns) and quantitative (time on task and difficulty ratings). The researchers separately and collectively reviewed the full transcripts of each TAO, videos, difficulty ratings, open-ended participant comments, and facilitator notes multiple times. One researcher explicated all of the steps for each task for each participant and coded for time, effectiveness, efficiency, satisfaction, and learnability, using a scheme developed by both researchers. Effectiveness pertained to the accuracy of task completion, efficiency to the amount of effort and time, satisfaction to how learners responded to the system, and learnability to efficiency gained over time (Frøkjær et al., 2000; Nielsen, 2012; Tullis & Albert, 2013). These data were then analyzed thematically to identify suggested best-practice design principles (Braun & Clarke, 2006; Clarke & Braun, 2014; Creswell, 2012; Saldaña, 2012).

To ensure quality, both researchers worked individually and collaboratively with all of the data over an extended period of time, discussed and resolved different perspectives, and developed mutually agreed-upon codes, analysis schemes, and final themes. By way of transparency, it should be noted that one of the researchers was also a participant in the study before she was aware that she would be working on the data analysis. This issue was discussed in-depth, and it was decided that her data would remain in the study as her role as a researcher was not established at the time of her participation.
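The effectiveness, efficiency, and satisfaction codes described above lend themselves to simple per-task summaries. The sketch below is not the authors' analysis procedure; it assumes a hypothetical record per participant and task, with field names and a 1-to-5 difficulty scale invented for illustration, and computes a completion rate, mean time on task, and mean difficulty rating for each task. Learnability is not covered, since it would require repeated attempts at comparable tasks.

```python
# Hypothetical sketch: summarizing coded TAO data per task.
# Field names and the 1-5 difficulty scale are assumptions for illustration.
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    participant: str   # pseudonym, e.g. "P01"
    task: str          # task label, e.g. "Task 4"
    completed: bool    # effectiveness: task completed accurately
    seconds: float     # efficiency: time on task
    difficulty: int    # participant's rating (assumed 1 = easy, 5 = hard)


def summarize(attempts: list[TaskAttempt]) -> dict[str, dict[str, float]]:
    """Group attempts by task; report completion rate, mean time, and mean rating."""
    by_task: dict[str, list[TaskAttempt]] = {}
    for attempt in attempts:
        by_task.setdefault(attempt.task, []).append(attempt)

    summary: dict[str, dict[str, float]] = {}
    for task, rows in sorted(by_task.items()):
        summary[task] = {
            "completion_rate": sum(r.completed for r in rows) / len(rows),
            "mean_seconds": mean(r.seconds for r in rows),
            "mean_difficulty": mean(r.difficulty for r in rows),
        }
    return summary


if __name__ == "__main__":
    # Invented example records, not data from the study.
    attempts = [
        TaskAttempt("P01", "Task 4", True, 42.0, 2),
        TaskAttempt("P02", "Task 4", False, 180.0, 4),
        TaskAttempt("P01", "Task 3", True, 95.0, 3),
    ]
    for task, stats in summarize(attempts).items():
        print(task, stats)
```

Numbers like these complement, rather than replace, the thematic reading of transcripts and memos: a low completion rate flags where to look, and the recordings show why.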

3.4. Limitations

Limitations of this study include non-random recruitment: with one exception, all participants lived on or near campus. Additionally, in spite of the training across the facilitators, there were slight differences in how each encouraged thinking aloud. Lastly, some tasks were ambiguous about what specifically was being asked of the participant; these tasks were removed from the data.

3.5. Findings and Implications

The following section briefly highlights findings and implications for design practices, specifically pertaining to improving UX, as suggested by the study. Pedagogical aspects such as learning outcomes and instructional design quality are not within the scope of the study. Participant quotes taken from their open-ended feedback during each of the TAOs are provided below to highlight the themes.

3.5.1. Avoid Naming Ambiguities

Unclear wording in a course, whether resulting from ambiguity, similarities (e.g., “unit,” “lesson”), naming conventions (e.g., “L07,” “Lesson07”), or mismatched terminology (e.g., “groups,” “teams”), can become a barrier to seamless navigation. For instance, calling something a “discussion forum” in one place and a “discussion activity” in another can be confusing. As a participant noted, “I think this is the discussion forum. It doesn't actually say discussion forum on it. But it does have a little text box, so I'm going to guess that's what it is . . . Discussion activity is the same thing as discussion forum. That was a little confusing, but it makes sense to connect those two languages then.” Additionally, terms that appeared similar to participants caused confusion. Maggie demonstrated this confusion among terminology: “The lesson two fundraising scenario assignment, the word assignment seems kind of vague considering there is a discussion forum and an essay. So I don't really—I was confused to which one to choose to submit it in.” To maximize intuitive design, use identical terms for the same item throughout the course and sufficiently distinguish terms for different items.

3.5.2. Minimize Multiple Interfaces

When obligated to use different interfaces or systems outside the LMS, learners must navigate more than one design. David illustrated this: “You're still using two separate systems . . . That's a lot of the confusion and for a lot of students because you're kind of in two different worlds, back and forth . . . to get back to here, you got to go through the Canvas link and go back, and then it jumps you at the top end and—you're not even within your course anymore, it's a little odd.” Therefore, when evaluating whether an interface is needed in addition to the LMS, designers must weigh the additional system’s benefits against the UX costs of requiring students to move between multiple systems.

3.5.3. Design Within the Conventions of the LMS

Instructors and designers often develop workarounds that make systems work differently than intended, which can confuse learners. For instance, some course designs use both “units” and “lessons” to organize content. Units are intended as containers that hold multiple lessons to make content easier to navigate; however, learners do not always perceive them that way. In this study, some learners navigating the system made no logical connection between the two, as Alan noted: “It's a little confusing because I see unit three but then there's lesson five, lesson six. Lesson five activity, lesson six activity, lesson seven activity.” Gary stated, “I'm in 'modules' and I'm just going to Unit Three—no, that's Lesson Five. What was Lesson Three? Lesson Three is Unit Two, content is right there.” To avoid confusion, designers should adhere as closely as possible to the LMS naming conventions and structure.

3.5.4. Group Related Information Together

In this study, students opened multiple browsers to reference information published in different locations and to ensure they weren't missing anything. As Lien put it, “I find sometimes when I was looking for the due time and the assignments of a specific lesson, sometimes I can find part of the information in the Module under each lesson, but sometimes I have also go back to the Course Syllabus to find more . . . I feel like sometimes it's better to put all the assignments, maybe like due time, and what activity, and the name together in one place . . . it's better to save us time and make it more clear.” Whenever possible, put related project information in a single location with references linking to that information throughout the course.

3.5.5. Consider Consistent Online Course Design Across Courses

Because of the distributed nature of higher education, universities can include multiple campuses, colleges, departments, individual programs, and many diverse faculty members with unique and discipline-specific approaches to pedagogy. Additionally, there are new approaches to teaching and learning that might intentionally disrupt more standardized approaches. However, usability benefits when there is consistency in UX design across online courses. One participant emphasized a preference for consistency: “I like the fact that when you come to—when you're looking right here at the list of the lessons, you sort of know that the first link will be the lesson directions. And that's good if it's sort of standard across all the classes because—at least that's what I've seen so far.” If possible, agree to some consistent UX standards across courses.

This section of the chapter has described the TAO study conducted by the authors and identified suggested best practices for intuitive design based on the experiences of the 19 participants. In the next section, the authors provide an abbreviated guide for others interested in conducting their own TAO study.

4. Suggested Steps for Conducting TAOs with Online Courses

From the beginning of the study, the researchers intentionally positioned themselves as reflective practitioners, iteratively improving research instruments and processes. They also spent time researching, creating, reviewing, and piloting information and technologies before bringing actual participants to the facility for testing. The following suggested steps for conducting a TAO study were developed through a systematic synthesis of the literature and the authors’ continuous critical self-reflection on their experiences with the TAO method. A more detailed version of these steps can be found in Gregg et al. (2018).

4.1. Determine, Pilot, and Do: Conducting TAOs for Course Design

The recommendations for conducting a TAO study are organized in three broad, sequential categories: determine, pilot, and do. First, determine foundational elements, next pilot those elements, and, finally, do the UX testing with recruited participants. Some of the individual steps within each category can be conducted simultaneously or in a slightly different order.

4.2. Determine Foundational Elements

This section emphasizes the multiple areas that should be “determined” early in the process to serve as a blueprint for execution (Farrell, 2017). The first step in designing a TAO is identifying what should be evaluated. Practitioners may want to examine a new or existing course design, or even an individual course content element or assessment. After selecting what to test, practitioners need to write specific tasks that test the usability of those elements. These tasks should be adequately complex yet feasible for participants to complete (Boren & Ramey, 2000; Nørgaard & Hornbæk, 2006; Rowley, 1994).

Deciding whom to recruit as participants is a necessary step. In general, research demonstrates that testing five users can help identify roughly 80% of the usability problems in a system (Nielsen, 1993; Virzi, 1992). Additionally, while representative users can be ideal, most important is observing people other than the designers navigating the online course while completing authentic tasks (Krug, 2014).
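The “five users” figure is usually traced to a simple probabilistic model: if each tester independently runs into a given problem with probability p, then n testers are expected to find a share of 1 - (1 - p)^n of the problems. The sketch below assumes p = 0.31, a commonly quoted average from earlier usability studies rather than a value measured in this study; actual values vary with the course, the tasks, and the participants.

```python
# Sketch of the problem-discovery model commonly cited for the
# "five users" guideline. ASSUMPTION: each tester independently
# encounters a given usability problem with probability p_detect;
# 0.31 is an assumed, frequently quoted average, not a value from this study.


def proportion_found(n_users: int, p_detect: float = 0.31) -> float:
    """Expected share of usability problems found by n_users testers."""
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 <= p_detect <= 1.0:
        raise ValueError("p_detect must be between 0 and 1")
    # A problem is missed by every tester with probability (1 - p)^n,
    # so it is found by at least one tester with probability 1 - (1 - p)^n.
    return 1.0 - (1.0 - p_detect) ** n_users


if __name__ == "__main__":
    for n in (1, 3, 5, 10):
        print(f"{n:>2} users -> about {proportion_found(n):.0%} of problems")
```

Under that assumption, five users find about 84% of the problems, in line with the roughly 80% cited above, and each additional tester contributes less than the one before.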

There are multiple options for capturing participant observations and information during testing, including note-taking, audio, video, and screen capture. While important things about the course design will be learned from simple observation, researchers can struggle to simultaneously facilitate, take notes, and monitor the TAOs (Boren & Ramey, 2000; Nørgaard & Hornbæk, 2006; Rankin, 1988). Therefore, researchers advise recording sessions, since a retrospective analysis of the TAO can yield deeper understanding and improve the reliability and validity of the findings (Ericsson & Simon, 1993; Rankin, 1988).

When determining where and when to conduct the test, consider that the technologies used may affect the choice of room or location. For example, the use of external cameras for in-person testing may require more floor space. In this case, a dedicated room is also useful because the equipment will not have to be repeatedly set up and taken down. If conducting a more formal study with IRB approval, participants will need to sign an informed consent form. Even if it is not an IRB study, some form of consent should still be obtained out of respect for the participants, including transparency about how their data will be used. Also, funding and incentives are not required to conduct TAOs, but they can help with recruitment.

4.3. Pilot the TAO Process

Piloting is a very important part of conducting successful TAOs as it provides the opportunity to: (a) rehearse to ensure the study will run smoothly, (b) test the tasks to ensure none are misleading or confusing, (c) establish realistic timing estimations, and (d) validate the data and the wording of the tasks for reliable findings (Schade, 2015).

Do not assume that because a task scenario makes sense to the person who wrote it, it will also make sense to the participants. If a question is worded misleadingly, it becomes more difficult to determine whether participant confusion stems from a non-intuitive interface or from a problematic task question. To avoid this situation in the TAOs, we suggest asking someone who was not involved in writing the questions to read each question and then describe exactly what they understand it to be asking.

As most practitioners know, technology often does not perform as intended. A complete run-through of the technology to be used for UX testing, ideally in the room where it will be used, can help detect any problems with audio and video capture (Rowley, 1994; Schade, 2015). Practicing in the room itself will also reveal any potential issues with noise, temperature, and room configuration.

Important things will be learned from simply watching how the participants navigate and where they seem to get confused, but the “thinking aloud” part of the TAOs is especially revealing. As Rubin and Chisnell (2008) assert, the think-aloud technique allows one to “capture preference and performance data simultaneously” (p. 204). This strategy can also expose participants’ emotions, expectations, and preconceptions (Rubin & Chisnell, 2008).

Thinking aloud while completing prescribed tasks is likely something the participant has not been asked to do before and is a behavior that does not come naturally to most people (Nielsen et al., 2002). Furthermore, asking someone to think aloud, especially while being recorded, can make for a potentially awkward encounter. Therefore, researchers should demonstrate the thinking-aloud process for the participants by viewing a task scenario on a completely different website and then thinking aloud while completing the task. Facilitators should practice helping participants through any nervousness or frustration (Boren & Ramey, 2000; Rowley, 1994).

Finally, in addition to piloting individual elements of the process, we strongly suggest that facilitators practice the entire TAO process. The full pilot will not only reveal elements of the testing process that may need to change but may also highlight areas of the course design that can be improved before actual testing (Boren & Ramey, 2000; Rowley, 1994; Schade, 2015). Two elements to include in a process pilot are a script and a checklist. A script will ensure consistency across participants. The script should welcome the participant, outline the different phases of testing, and explain that the facilitator may not be able to answer navigation-related questions from the participant during the testing phase. Above all, the script must emphasize that the UX of the course, not the participant, is being evaluated. A detailed checklist may include items such as turning on all cameras, making sure the participant has access to the test environment, and having the participant sign any necessary forms.
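The script and checklist can be kept as simple ordered lists so that every facilitator follows the same sequence. The sketch below is one hypothetical way to do this: the phases mirror the session sequence used in the study (greeting, consent, think-aloud demonstration, tasks, difficulty ratings, open-ended questions, and a reflective memo), while the exact wording, the checklist items, and the helper name `outstanding` are assumptions to adapt locally.

```python
# Hypothetical run sheet for one TAO session. The phases follow the
# session sequence described in this chapter; the wording and the
# checklist items are illustrative assumptions to adapt locally.

SESSION_PHASES = [
    "Welcome the participant; stress that the course, not the participant, is being tested",
    "Have the participant sign the consent form",
    "Demonstrate thinking aloud on an unrelated website",
    "Participant completes each task while thinking aloud",
    "Participant rates the difficulty of each task",
    "Participant answers the open-ended questions",
    "Facilitator writes a reflective memo immediately afterward",
]

PRE_SESSION_CHECKLIST = [
    "All cameras, microphones, and screen capture are recording",
    "Participant account can access the test course",
    "Consent and other forms are printed and ready",
]


def outstanding(checklist: list[str], done: list[str]) -> list[str]:
    """Return the checklist items not yet marked done, in their original order."""
    done_set = set(done)
    return [item for item in checklist if item not in done_set]


if __name__ == "__main__":
    remaining = outstanding(PRE_SESSION_CHECKLIST, done=[PRE_SESSION_CHECKLIST[0]])
    for item in remaining:
        print("TODO:", item)
    for number, phase in enumerate(SESSION_PHASES, start=1):
        print(f"{number}. {phase}")
```

A printed sheet or shared document serves the same purpose; the point is a fixed order that the pilot can test and refine.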

4.4. Do the Actual UX Testing

Once all of the key elements of the TAO testing have been both determined and piloted, practitioners shift to recruiting participants and conducting the UX tests. The key steps in conducting the TAOs are highlighted here. With a participant audience identified, the next step is to recruit. If non-students are used as representative testers, the recruiting process may be as simple as asking a few coworkers to assist. When recruitment is complete, the next step is scheduling times for participants to come to the facility for UX testing.

Even when the elements of the TAO testing are determined and piloted, problems may still occur. We suggest keeping Krug’s advice in mind: “Testing one user is 100 percent better than testing none. Testing always works, and even the worst test with the wrong user will show you important things you can do to improve your site” (2014, p. 114). With two facilitators, one can be designated to take notes. However, even if there is only one facilitator, brief notes can still be taken during the test itself. In either scenario, we highly recommend continuing to capture thoughts as reflective memos immediately after the testing is complete.

When capturing reflections, document anything that gives context for the particular participant and anything observed related to UX. Note both what is observed (e.g., “participant struggled with locating his group”) and ideas for design improvements (e.g., “rename groups for consistency”). The more information captured during and immediately after testing, the more data will be available for improving the design.

After conducting the TAOs, the next step is to review the collected data: both the recorded testing and the documented observations. While this chapter has described a more formal data analysis, such analysis is not necessary to make pragmatic UX improvements. Simply having multiple individuals watch the recorded navigation will highlight areas that are not intuitive. Finally, the purpose of conducting TAO testing is to improve course design and the student navigation experience. When deciding what to change based on the TAO testing, Krug (2010) recommends a “path of least resistance” approach, asking, “What’s the smallest, simplest change we can make that’s likely to keep people from having the problem we observed?” (p. 111).
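Before choosing changes, it can help to see which problems recurred across participants. The sketch below is a hypothetical aid, not part of the authors' procedure: it assumes each reflective memo has been reduced to a set of short issue labels (the labels and the function name `rank_issues` are invented here) and counts how many participants encountered each issue.

```python
# Hypothetical sketch: tallying issues noted in reflective memos so the
# most widespread problems can be considered first. Issue labels are
# free-text tags a team might assign; they are assumptions, not study codes.
from collections import Counter


def rank_issues(memos: dict[str, set[str]]) -> list[tuple[str, int]]:
    """Count how many participants hit each issue, most widespread first."""
    counts = Counter()
    for issues in memos.values():
        # Each participant's issues form a set, so a participant counts
        # at most once per issue however often they struggled with it.
        counts.update(issues)
    return counts.most_common()


if __name__ == "__main__":
    memos = {
        "P01": {"ambiguous 'assignment' label", "switching between two systems"},
        "P02": {"ambiguous 'assignment' label"},
        "P03": {"units vs. lessons mismatch", "ambiguous 'assignment' label"},
    }
    for issue, n in rank_issues(memos):
        print(f"{n} participant(s): {issue}")
```

Frequency is only one input: a problem that completely blocked a single participant may still deserve attention, and the fix for each issue can then follow Krug's smallest-simplest-change question.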

5. Conclusion

This chapter has focused on TAO testing as a useful method for improving the UX of online course designs. First, it positioned UX in the broader contexts of learner experience and pedagogical usability. Next, it presented the methods and findings of a TAO study conducted by the authors. Lastly, it provided specific steps for practitioners interested in conducting their own TAO testing.

Ultimately, education involves personal challenge, change, growth, and development, no matter the discipline. Furthermore, students are more than simply “consumers” or “users,” and teaching and learning is far more complex than a simple web transaction or exchange of money for a service or product. At the same time, online students who are lost in a non-intuitive course interface have a lot in common with users who cannot easily navigate a consumer website. Learners may be less likely to leave a course website compared to a consumer website; however, poor UX design still has learning consequences. One of the biggest barriers to learning and learning satisfaction in the online learning environment is related to technical challenges encountered by learners (Song et al., 2004). Time and energy spent on trying to navigate a poorly designed course interface are time and energy not dedicated to the learning itself.

While instructional designers may want course navigation to be seamless from a UX perspective, they may also work in a vacuum, with little if any direct UX feedback from students. Additionally, designers' life experiences may not be representative of those of the students who take their courses (Rapanta & Cantoni, 2014). Consider that many designers enter the field because they like technology; the average student may not share this interest. Student feedback on course-design usability therefore helps designers create environments in which students can focus on the more important elements of the learning experience. As working practitioners who conducted UX testing on our own course designs, we have witnessed firsthand the power of student feedback gathered through the TAO method. We would argue that this form of feedback is a crucial element of course design that can help bridge the gap between designer intention and student execution. As discussed at the beginning of this chapter, UX testing is increasingly becoming the norm in industries that rely on web interfaces to reach their audiences. We believe that UX testing should also play a larger role in e-learning, and the framework and discussion presented here are part of the effort to ensure that it does.

References

Ardito, C., Costabile, M. F., De Marsico, M., Lanzilotti, R., Levialdi, S., Roselli, T., & Rossano, V. (2006). An approach to usability evaluation of e-learning applications. Universal Access in the Information Society, 4(3), 270–283.

Boren, T., & Ramey, J. (2000). Thinking aloud: Reconciling theory and practice. IEEE Transactions on Professional Communication, 43(3), 261–278.

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101.

Brna, P., & Cox, R. (1998). Adding intelligence to a learning environment: Learner-centred design? Journal of Computer Assisted Learning, 14, 268–277.

Butko, A., & Molin, M. (2012). Intuitive design principles: Guidelines for how to build an instinctual web application [Bachelor's thesis, University of Gothenburg]. https://edtechbooks.org/-uRBF

Clarke, V., & Braun, V. (2014). Thematic analysis. In A. C. Michalos (Ed.), Encyclopaedia of quality of life and well-being research (pp. 6626–6628). Springer.

Cotton, D., & Gresty, K. (2006). Reflecting on the think-aloud method for evaluating e-learning. British Journal of Educational Technology, 37(1), 45–54.

Creswell, J. W. (2012). Qualitative inquiry and research design: Choosing among five approaches (3rd ed.). Sage Publications.

Crowther, M. S., Keller, C. C., & Waddoups, G. L. (2004). Improving the quality and effectiveness of computer‐mediated instruction through usability evaluations. British Journal of Educational Technology, 35(3), 289–303.

de Pinho, A. L. S., de Sales, F. M., Santa Rosa, J. G., & Ramos, M. A. S. (2015). Technical and pedagogical usability in e-learning: Perceptions of students from the Federal Institute of Rio Grande do Norte (Brazil) in virtual learning environment. 2015 10th Iberian Conference on Information Systems and Technologies (CISTI 2015) (pp. 524–527). IEEE.

Dewey, J. (1938). Experience and education. Macmillan.

Ericsson, K. A., & Simon, H. A. (1993). Protocol analysis: Verbal reports as data. MIT Press.

Farrell, S. (2017, September 17). From research goals to usability-testing scenarios: A 7-step method. Nielsen Norman Group. https://edtechbooks.org/-UfcA

Fisher, E. A., & Wright, V. H. (2010). Improving online course design through usability testing. Journal of Online Learning and Teaching, 6(1), 228–245.

Frøkjær, E., Hertzum, M., & Hornbæk, K. (2000). Measuring usability: Are effectiveness, efficiency and satisfaction really correlated? CHI '00: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 345–352). ACM.

Gregg, A. (2017). Canvas UX think aloud observations report [Report written to document the Canvas user experience study]. Penn State Canvas UX. https://edtechbooks.org/-kZrk

Gregg, A., Reid, R., Aldemir, T., Garbrick, A., Frederick, M., & Gray, J. (2018). Improving online course design with think-aloud observations: A “how-to” guide for instructional designers for conducting UX testing. Journal of Applied Instructional Design (JAID), 7(2), 17–26.

Koohang, A., & Paliszkiewicz, J. (2015). E-Learning courseware usability: Building a theoretical model. The Journal of Computer Information Systems, 56(1), 55–61.

Krug, S. (2010). Rocket science made easy: The do-it-yourself guide to finding and fixing usability problems. New Riders.

Krug, S. (2014). Don't make me think, revisited: A common sense approach to web usability (3rd ed.). New Riders.

Laja, P. (2019, May 26). Intuitive web design: How to make your website intuitive to use. CXL. https://edtechbooks.org/-ppun

Nielsen, J. (1993). Usability engineering. Academic Press.

Nielsen, J. (2000, March 18). Why you only need to test with 5 users. Nielsen Norman Group. https://edtechbooks.org/-VHX

Nielsen, J. (2012, January 3). Usability 101: Introduction to usability. Nielsen Norman Group. https://edtechbooks.org/-JMKq

Nielsen, J., Clemmensen, T., & Yssing, C. (2002). Getting access to what goes on in people's heads?: Reflections on the think-aloud technique. NordiCHI '02: Proceedings of the second Nordic conference on human-computer interaction (pp. 101–110). ACM.

Nokelainen, P. (2006). An empirical assessment of pedagogical usability criteria for digital learning material with elementary school students. Journal of Educational Technology & Society, 9(2), 178–197.

Nørgaard, M., & Hornbæk, K. (2006). What do usability evaluators do in practice?: An explorative study of think-aloud testing. DIS '06: Proceedings of the 6th conference on designing interactive systems (pp. 209–218). ACM.

Parrish, P. E., Wilson, B. G., & Dunlap, J. C. (2011). Learning experience as transaction: A framework for instructional design. Educational Technology, 51(2), 15–22.

Rankin, J. M. (1988). Designing thinking-aloud studies in ESL reading. Reading in a Foreign Language, 4(2), 119–132.

Rapanta, C., & Cantoni, L. (2014). Being in the users' shoes: Anticipating experience while designing online courses. British Journal of Educational Technology, 45(5), 765–777.

Reigeluth, C. M. (Ed.). (1983). Instructional-design theories and models: An overview of their current status. Lawrence Erlbaum.

Rowley, D. E. (1994). Usability testing in the field: Bringing the laboratory to the user. CHI '94: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 252–257). ACM.

Rubin, J., & Chisnell, D. (2008). Handbook of usability testing: How to plan, design, and conduct effective tests (2nd ed.). John Wiley & Sons.

Saldaña, J. (2012). The coding manual for qualitative researchers (2nd ed.). Sage Publications.

Schade, A. (2015, April 5). Pilot testing: Getting it right (before) the first time. Nielsen Norman Group. https://edtechbooks.org/-oUmB

Silius, K., Tervakari, A. M., & Pohjolainen, S. (2003). A multidisciplinary tool for the evaluation of usability, pedagogical usability, accessibility and informational quality of web-based courses. The Eleventh International PEG Conference: Powerful ICT for Teaching and Learning, 28, pp. 1–10.

Smith, P. L., & Ragan, T. J. (2005). Instructional design (3rd ed.). Wiley.

Song, L., Singleton, E. S., Hill, J. R., & Koh, M. H. (2004). Improving online learning: Student perceptions of useful and challenging characteristics. The Internet and Higher Education, 7(1), 59–70.

The Design-Based Research Collective. (2003). Design-based research: An emerging paradigm for educational inquiry. Educational Researcher, 32(1), 5–8.

Tullis, T., & Albert, W. (2013). Measuring the user experience: Collecting, analyzing, and presenting usability metrics. Elsevier.

U.S. Department of Health & Human Services. (2016). Usability testing. https://www.usability.gov/how-to-and-tools/methods/usability-testing.html

Virzi, R. A. (1992). Refining the test phase of usability evaluation: How many subjects is enough? Human Factors: The Journal of the Human Factors and Ergonomics Society, 34(4), 457–468. https://edtechbooks.org/-AJL

Wetwebwork. (2008, September 11). Desire path [Photograph]. Flickr. https://edtechbooks.org/-kXu

Zaharias, P. (2004). Usability and e-learning: The road towards integration. eLearn Magazine. https://edtechbooks.org/-GvMR

Zaharias, P., & Poylymenakou, A. (2009). Developing a usability evaluation method for e-learning applications: Beyond functional usability. International Journal of Human–Computer Interaction, 25(1), 75–98.

Acknowledgements

The authors would like to thank the College of IST, Teaching and Learning with Technology, the World Campus, the College of the Liberal Arts, and the College of Earth and Mineral Sciences, all of which provided administrative, financial, travel, and/or staff support for this study. The authors would also like to thank the student participants in the study and others throughout the University who offered support.