Quality Assurance (QA) in higher education is a concept that owes its beginnings to quality assurance in the industrial sector. The purpose of this chapter is to describe strategies instructional designers can implement to promote quality assurance within their institutions.

What is Quality Assurance?

Quality Assurance (QA) in higher education is a concept owing its beginnings to quality assurance in the industrial sector. Higher education is changing rapidly in response to the ever-expanding need for skilled individuals across various disciplines, and parents and students are calling for a return on their investment. These are some of the underlying reasons higher education pursues QA (Wilger, 1997).

There are many definitions of quality assurance in higher education. In a literature review for the National Center for Postsecondary Improvement (NCPI), Wilger (1997) identifies the most complete definition, as follows:

“Quality Assurance is a collective process by which the University as an academic institution ensures that the quality of educational process is maintained to the standards it has set itself. Through its quality assurance arrangements the University is able to satisfy itself, its students and interested external persons or bodies that:

- Its courses meet the appropriate academic and professional standards,

- The objectives of its courses are appropriate

- The means chosen and the resources available for delivering those objectives are appropriate and adequate, and

- It is striving continually to improve the quality of its courses”

(Wilger, 1997, pp. 2-3)

What Does Literature Say About Quality Assurance?

A body of research (Ryan, 2015; Wilger, 1997) examines the available literature on quality assurance in higher education. Two major themes emerge across these publications: considerations for building a QA program, and the impact of a QA program on its primary stakeholders, which include students, faculty, and senior leadership. The reviewed literature (Ryan, 2015; Wilger, 1997) also identifies the need to focus on the primary emphasis of a QA process, the process itself, how it operates, and how the information produced is used and reported. When discussing the impact of a QA program, a majority of the literature highlights how the program is perceived among the key entities, how its acceptance depends on institutional culture, and the skepticism involved in choosing one QA model over another, given the lack of a universally agreed-upon QA framework among local, regional, national, and international higher education institutions (Ryan, 2015).

There are myriad quality assurance agencies within the higher education environment. In the United States, regional accreditation is conducted by seven accrediting bodies in six regions. The accrediting bodies are:

In addition, professions that require licensure and certifications mandate their own sets of guidelines that the specific programs have to meet. A review of the literature indicates a variety of QA models that can be adapted to suit a specific need. One of the most prominent in recent years is the Quality Matters program (https://www.qualitymatters.org/), which lays out a systematic QA process with tools, rubrics, and professional development, with a focus on continuous improvement of the design of online programs. However, it does not account for the quality of faculty interaction and delivery in online programs. Such differences in the emphasis of any single QA model, combined with the themes discussed above, show why the higher education community does not have a universally agreed-upon QA framework.

Developing a QA framework that can be universally used requires much collaboration across the various local, regional, national and international agencies. What follows provides instructional designers starting the QA process with some practical considerations based on research (Ryan, 2015; Wilger, 1997) and practical experience irrespective of the model or QA agency utilized. The focus is on practical considerations from the “people” and the “process” perspective – the two critical components that play a significant role in the efficient and effective implementation of QA. Understanding these perspectives allows an instructional designer to map QA processes accordingly.

The People

In the following sections we describe three primary stakeholders of a university’s online learning QA effort: students, faculty, and upper administration. All three should be accounted for if such an effort is to be successful. The rationale is that all three are connected by common themes: quality course design, facilitation, and revision. Our aim is to provide QA-useful insight into each stakeholder.

Students

An instructional designer (ID) typically works directly with faculty but will seldom interact directly with students; rather, interaction occurs via the instructor and the student feedback received. Based on our higher education experience, a challenge that an ID faces is assisting faculty in determining the appropriate method of collecting student feedback, the frequency of its use, and an approach to using that feedback to inform course adjustments.

Student Role in QA

We have yet to encounter a faculty member who denies the role of student feedback in determining course quality. What is noteworthy, however, is that some faculty are not comfortable receiving feedback from students. As one faculty member stated:

It's never a nice email to get when something's goofed up or it's just explained poorly and needs to be improved. So, some people I think are more open to that than others. If you're defensive, then you're going to say, ‘Well, that student just doesn't know what they're doing. They should be more cognizant of what they're doing in the class or more tuned in’ versus really stepping back [and stating] “Wait, I didn't actually communicate what I thought I was communicating or that didn't look as good and intuitive as I thought it should have”

It is important, therefore, for an ID to recognize that some faculty may be hesitant to collect feedback from students. Additionally, faculty may only feel comfortable, at least initially, receiving student feedback in the form of end-of-course evaluations.

Means of Collecting Student Feedback

Most institutions use an end-of-course student evaluation tool; our institution uses the IDEA Student Rating System. In this section, the focus is predominantly on instructor-driven student feedback tools and on the importance of providing instructors with options for feedback collection. Faculty who have taught exclusively in a face-to-face format may be used to gathering student feedback in an informal or ad hoc manner, such as through conversations before or after class, which can provide the instructor with insight into the student’s experience. Such conversations are less likely to occur in an online course; consequently, an instructor will need to be more deliberate in collecting student feedback.

One way to view instructor-driven tools is through the lens of two categories: continuous and time-specific. An example of a continuous tool is a weekly reflective student journal. An example of a time-specific tool is a mid-course survey. Collection tools can additionally be broken down by question type: students’ opinions regarding the course as a whole (e.g., What aspects of the course would you change?), students’ opinions regarding a specific aspect of the course (e.g., What did you find challenging about group assignment X?), and students’ reflective analysis of their own academic performance (e.g., Was the Chapter 5 quiz challenging for you? Why was that the case?). It can be helpful to make faculty aware of their options for collecting student feedback.

Incorporating Feedback

Once faculty have gathered student feedback, they may need assistance classifying the feedback to answer questions such as: Does it address aspects of course design, course facilitation, or neither? For instance, a student may state that the course assessments were quite difficult. An ID may be better positioned than the faculty member to review the course learning activities to determine if students were provided enough opportunities to practice the skills that the assessments required of them.

Additionally, faculty may need assistance with an approach for incorporating student feedback. One approach appropriate for weekly or midpoint feedback is to disclose to students the feedback that they submitted. Faculty can place feedback into two categories (i.e., possible change and not possible change) and define what steps, if any, will be taken to address these changes. Our experiences have shown us that such an approach validates that students’ voices are being heard and that the faculty is addressing students’ needs.
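As an illustration only, the classification and disclosure steps described above could be tracked with something as simple as the following Python sketch. The class name, field names, and sample comments are hypothetical and are not drawn from any particular institution's tooling; a shared spreadsheet would serve the same purpose.

```python
from dataclasses import dataclass


@dataclass
class FeedbackItem:
    """One piece of student feedback (all field names are hypothetical)."""
    comment: str            # paraphrase of what the student wrote
    area: str               # "design", "facilitation", or "neither"
    change_possible: bool   # can it be addressed during the current term?
    planned_step: str = ""  # what the instructor intends to do, if anything


def summarize_for_students(items):
    """Group feedback into the two categories shared back with the class."""
    summary = {"possible change": [], "not possible change": []}
    for item in items:
        key = "possible change" if item.change_possible else "not possible change"
        summary[key].append((item.comment, item.planned_step or "no action planned"))
    return summary


# Invented sample feedback, for illustration only.
items = [
    FeedbackItem("Quiz instructions were unclear", "design", True,
                 "Rewrite the instructions before next week's quiz"),
    FeedbackItem("Too much reading overall", "design", False),
]
summary = summarize_for_students(items)
```

The resulting summary can be posted as a course announcement so that students see which suggestions will be acted on and which will not.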

For changes or adjustments to future iterations of a course (e.g., student feedback on an assignment), it may be helpful to provide faculty with a strategy to incorporate those changes. This may involve creating a system for cataloguing student suggestions and creating a plan that allows for enough time to make alterations. A plan such as addressing one module or unit a day in the semester prior to the one in which the course will run may provide structure not previously considered.
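A revision plan of the kind described above can be drafted in a few lines. The Python sketch below assigns one module per day from a chosen start date; the function name is hypothetical, and skipping weekends is our assumption rather than part of the approach itself.

```python
from datetime import date, timedelta


def plan_revisions(modules, start):
    """Assign one module per weekday, beginning on or after `start`."""
    schedule = []
    day = start
    for module in modules:
        # Assumption: revisions happen on weekdays only (Mon=0 ... Sun=6).
        while day.weekday() >= 5:
            day += timedelta(days=1)
        schedule.append((day, module))
        day += timedelta(days=1)
    return schedule


# Hypothetical course with three modules, starting on a Thursday.
plan = plan_revisions(["Module 1", "Module 2", "Module 3"], date(2024, 3, 7))
for day, module in plan:
    print(day.isoformat(), module)
```

Pairing each date with the catalogued student suggestions for that module gives faculty a concrete checklist to work through before the course runs again.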

Faculty

At institutions where a significant percentage of online courses are facilitated by the faculty who design them, faculty are gatekeepers of course quality. In implementing a QA effort, IDs need to consider general faculty awareness of what constitutes a quality online course and effective and ineffective approaches to achieving faculty buy-in with a QA effort.

Faculty Awareness

Based on our experience, faculty do not need to be convinced of the significant role they play in online course QA. There is a perceived sense of agency. An ID does need to consider faculty’s familiarity with an external validation process. Some programs or schools regularly go through an accreditation process that examines their academic efficacy. For example, because of licensure exams, certification exams, and the need to meet both accreditation and state standards, faculty in some schools are quite familiar with external guidelines. Other faculty may not have any experience with such efforts. Lack of familiarity implies a need to convince such faculty of the validity of the QA effort. Convincing could take the form of testimonials from faculty peers who have successfully implemented QA-informed practices into the design, delivery, or revision of a course. If such faculty cannot be identified, an ID could reach out to other institutions where such faculty may be found.

Another consideration is whether faculty are aware of what constitutes online course quality. The answer to this varies from institution to institution. QA is impacted by factors such as the following:

- the number of staff and faculty who formally support the pedagogical side of online learning,

- the prioritization of online learning by the institution’s upper administration, and

- the number of years the university has offered online programs.

At our institution, quality online courses are those that are formally developed with an ID and reviewed using a rubric similar to the Quality Matters (QM) Higher Education Rubric for Online & Blended Courses, or those that are part of a program seeking QM certification. For example, at one university, current program-level QA efforts require faculty to participate in either the Quality Matters (QM) “Applying the QM Rubric” workshop or an internally developed three-week workshop. Both focus on foundational concepts of course design; the latter also focuses on foundational concepts of course facilitation.

With respect to effective and ineffective approaches to achieving faculty buy-in with a QA effort, it is a fair assumption that QA efforts increase faculty workload. Some suggestions for effectively achieving faculty buy-in follow:

- Define a faculty champion. Some faculty members have expressed to us that strictly top-down efforts are seen as ineffective. Therefore, having a fellow faculty member speak to peers about a QA effort could be a more effective strategy. As Rogers (2003) suggests, a champion’s people skills, as opposed to his or her position in an organizational chart, will be the asset most valuable to achieving buy-in (p. 383). Another consideration is that the champion may need to be positioned to engage with administrators about resources the faculty need, such as course release or stipends, to successfully engage with the QA effort.

- Involve faculty from the beginning. It may be the case that the QA effort is a top-down mandate. Nevertheless, faculty should be involved in the specifics of the QA effort from the outset. A good suggestion is to have the faculty champion lead these conversations. The faculty champion is better positioned to listen to faculty grievances and to effectively applaud the efforts that the faculty are making.

- Establish connections for faculty. Perhaps a faculty member is seeking tenure. It may be helpful to see how the work being done to improve the quality of online course design could be included in a retention, tenure, and promotion packet. Perhaps a faculty member is quite invested in the effectiveness of their teaching. Experience indicates faculty are much more familiar with the phrase teaching effectiveness than they are with the term quality assurance. Our interactions suggest that faculty perceive the latter term as implying that something is currently wrong with the course, a message that faculty may not take well.

Another type of connection deals with the jargon an ID may use. It is important that faculty are able to grasp the concepts related to the QA effort. Terms such as alignment, objectives, formative assessment, and accessibility may be foreign to faculty; thus, there is a need to explain such concepts in a manner that allows faculty to see that reinvention of their pedagogical practices will not be necessary.

Upper Administration

Very few upper administrators would sincerely state that they do not support an institutional QA effort. Yet, there is potentially a significant gap between a chancellor, president, or provost stating “I am for this QA effort” and the allocation of resources to make the effort possible. As one administrator put it to us:

If in any case where the leaders do not fully invest or do not provide full support, it would be difficult to achieve the QA process solely from bottom-up process, as it would be much more difficult to overcome the administrative or functional divisions to get adequate data and resources, and would usually discourage the efforts to end up as status-quo, within a silo.

It is crucial, therefore, that QA efforts have the support, both in word and in resources, of an institution's upper administration. However, the reality is that not all institutions will be able to allocate resources towards the effort. This is especially true during trying economic times. Additionally, an ID may not even have access to the institution’s upper administration. If either or both is the case, an ID could consider leveraging any available resources from peer institutions or reducing the scope of the effort. The template provided (see Appendix) will allow those who do not currently have access to resources and/or senior leadership to make a strong case for resources once they become available.

Getting a Seat at the Table

It is probable that many IDs are not able to directly address their institution’s upper administration. At some universities there is an associate vice chancellor who advances QA efforts, but this may not be the case for all. If the structure of an institution is such that there is not a direct report position who can advance the cause to upper administration (i.e., a champion), one needs to be identified.

Speaking the Same Language

What makes for a quality online course or program? If there have been previous QA efforts regarding online courses or programs, it may not be necessary to have a champion engage the president or provost in an education campaign about what quality means when applied to online courses. The QA champion would need to associate the effort with a topic viewed as important to upper administrators. For example, student enrollment and retention are key considerations for an institution’s administration. What motivates a student to enroll and persist in a face-to-face program can be quite different from what motivates them to enroll in an online program. While the institution’s overall reputation may consistently be a factor, variables such as location, amenities, or a successful athletic team are less likely to attract and retain online students.

Sustainable, Data-driven Efforts

Two important considerations of a QA pitch to upper administration are whether it is data-driven and whether sustainability has been considered. One person who has knowledge of this subject informed us:

I have seen enough cases where misunderstanding and therefore misuse of the QA process from the upper administration end up wasted resources and efforts, and especially closing the door for true opportunity because of the lack of trust in the validity of the process.

This insight lends credence to the template provided (see Appendix), which is informed by institutional data and promotes the sustainability of the QA effort.

By focusing on practical considerations of a QA effort from the “people” and the “process” perspective, we believe an ID will be well-positioned to successfully map and implement a QA effort.

References

Rogers, E. M. (2003). Diffusion of innovations (5th ed.). Free Press.

Ryan, T. (2015). Quality assurance in higher education: A review of literature. Higher Learning Research Communications, 5(4), 1-12. https://doi.org/10.18870/hlrc.v5i4.257

Wilger, A. (1997). Quality assurance in higher education: A literature review. National Center for Postsecondary Improvement, Stanford University. Retrieved from http://web.stanford.edu/group/ncpi/documents/pdfs/6-03b_qualityassurance.pdf

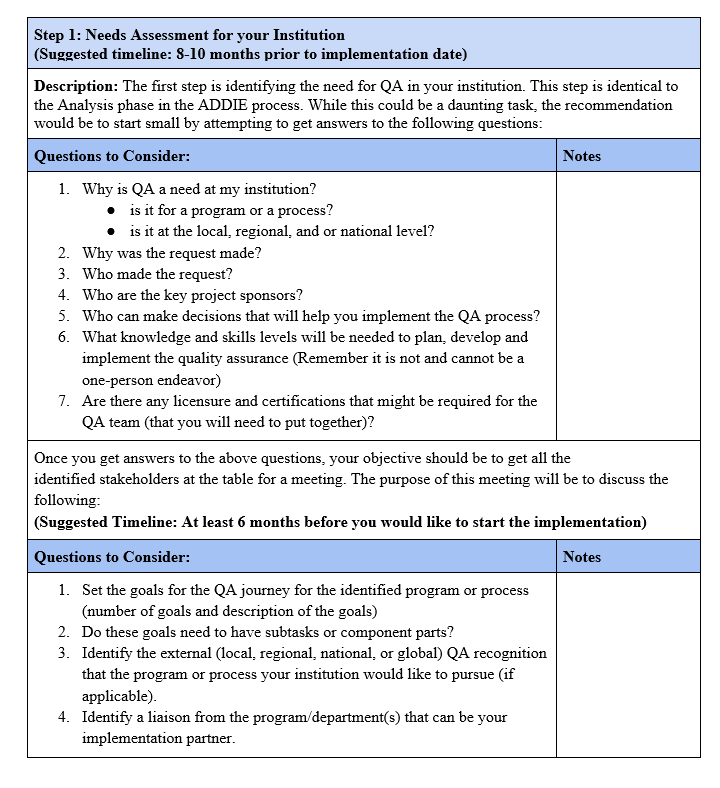

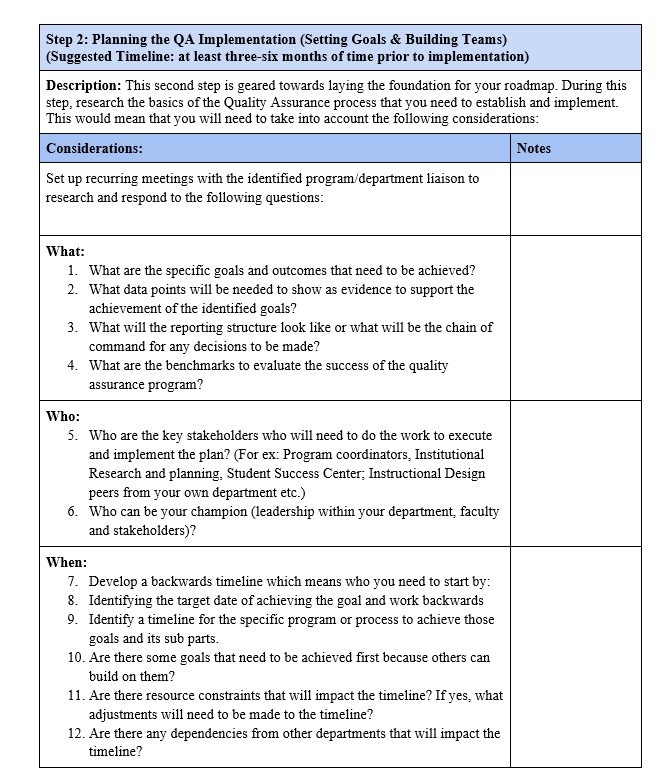

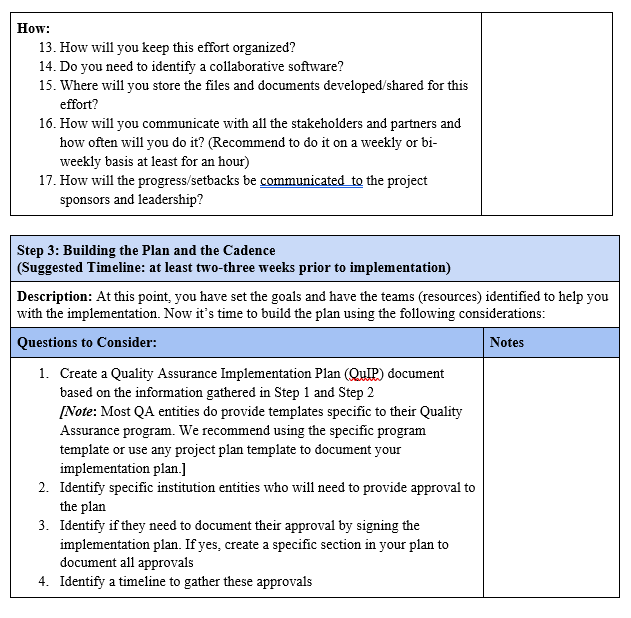

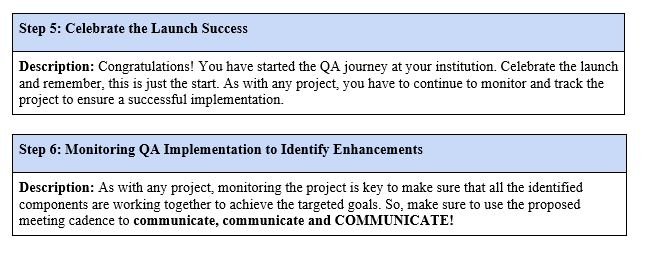

Appendix

Roadmap to Plan the Quality Assurance Journey at Your Institution

Understanding the contributions of people to the QA process lays the foundation for leveraging interpersonal skills in the relevant processes to create a QA roadmap at your institution. As a first step, to help you get started with creating your roadmap and planning your QA journey, we provide a template broken down into a series of six steps and key questions to consider. As an instructional designer, you will be able to see glimpses of the ADDIE process in the various steps identified below. We would also like to clarify that this template is an adaptation of the many templates that you might find on the web.