Learning is a product of interaction. (Elias, 2011, p. 1)

Each semester, a student’s interactions with peers, teachers, and content lead to learning (see Moore, 1989). As formal education increasingly takes place online, these interactions take on new forms. Students might have conversations with fellow students and their teachers asynchronously through discussion boards and synchronously through video conferencing software, or they might read textbooks, watch educational videos, complete projects, and take quizzes and tests. As students interact in online environments, they leave digital breadcrumbs of their learning experience that help reveal their learning paths, norms, and behaviors. However, understanding what these bits of data mean can be difficult and has necessitated the emergence of the new field of Learning Analytics, which focuses on “the measurement, collection, analysis, and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs” (SoLAR, 2012, p. 1).

When learning analytics data is visualized and reported, people can understand and implement changes in response to the data to improve learning. A common tool to report data about learners and their learning environment is a learning analytics dashboard (LAD). For instance, learning analytics dashboards are increasingly incorporated into Learning Management Systems (LMS; Park & Jo, 2015, p. 110), wherein a student logging into an LMS may have access to a student-facing dashboard that provides feedback from the teacher on assignments and recommends content areas to study further. Conversely, a teacher logged into an LMS for the same course may have access to a teacher-facing dashboard that identifies struggling students and suggests ways to intervene.

Student- and teacher-facing LADs fulfill a variety of purposes. Student-facing LADs report information about students’ online learning experiences, provide feedback, encourage self-reflection and self-awareness, and motivate learners to achieve performance outcomes (Roberts, Howell, & Seaman, 2017, p. 318). To accomplish these purposes, student-facing LADs include features such as links to additional readings, information about course difficulty, progress within a course, other students’ time management practices, and personalized feedback on performance in relation to peers and learning outcomes (Roberts et al., 2017, p. 318).

Teacher-facing LADs, on the other hand, are frequently used to identify struggling students. They may also be used to help teachers better understand their courses, reflect on teaching strategies, and identify ways to improve course design (Viberg, 2019, p. 2), although these purposes are less prevalent than that of identifying at-risk students. Teacher-facing LADs with early warning systems for at-risk students may use complex predictive modeling and can include data sources such as students’ previous academic histories, current grades, time spent in different sections of the LMS, and clickstream data about learning activities (Viberg, 2019, pp. 1-2).

Continuous Improvement Learning Analytics Dashboards

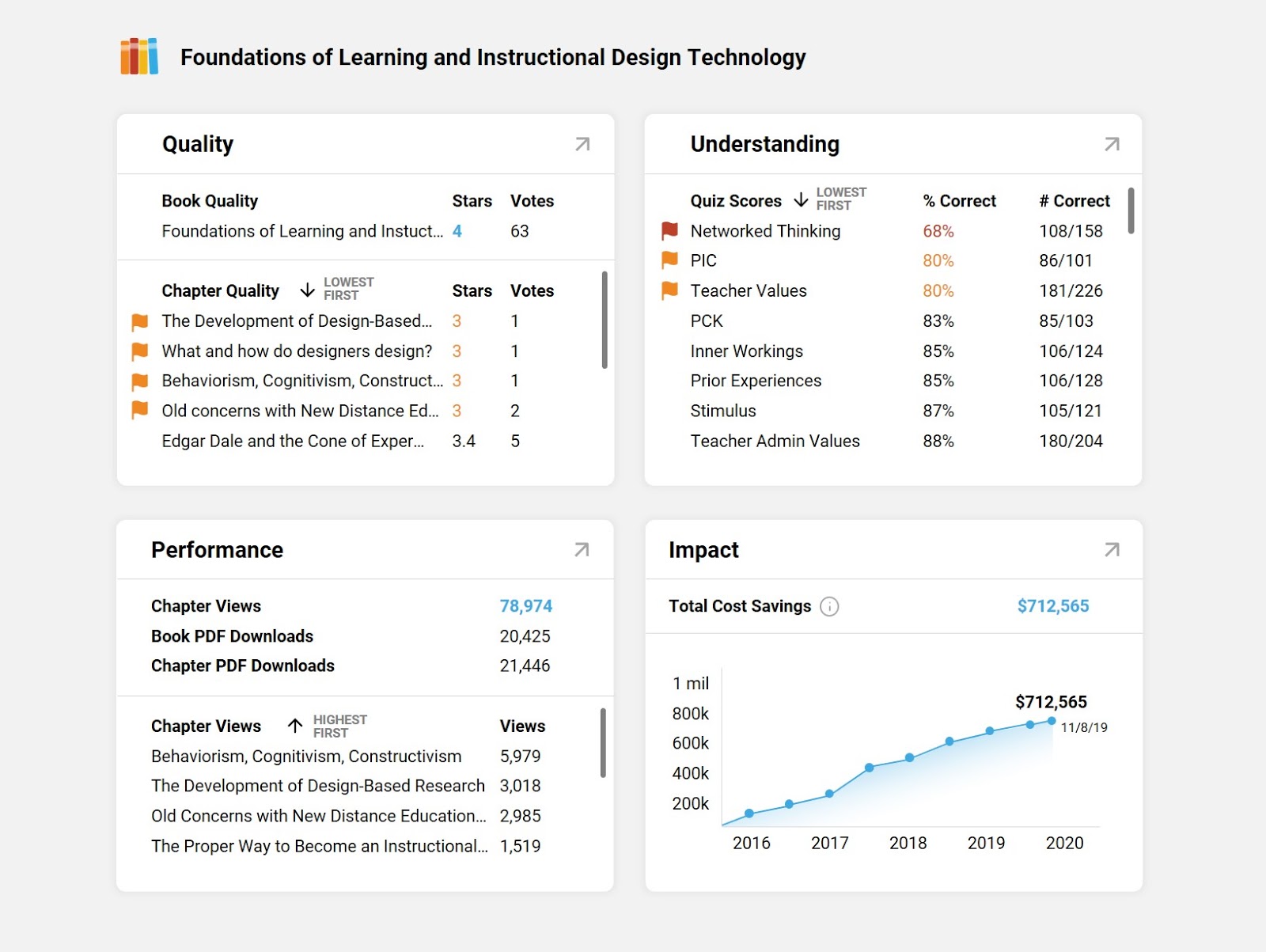

While student- and teacher-facing LADs remain the most common types of LADs, dashboards have also been created to facilitate the continuous improvement of online learning resources. This emerging type of LAD, known as a continuous improvement LAD, provides feedback to educational content creators about the quality and performance of educational content (see Figure 1). Continuous improvement LADs are relatively new, but they have been incorporated into online educational platforms such as textbook publishing platforms (e.g., EdTech Books), university library websites (Loftus, 2012), and government websites focused on educating the public (Desrosiers, 2018).

Figure 1

Example of a Continuous Improvement Learning Analytics Dashboard

When designing a learning analytics dashboard, designers must consider whom the dashboard is trying to influence and what assumptions it makes about the deficits contributing to poor performance. While a complete analysis of the underlying value systems of each type of LAD is beyond the scope of this chapter, Table 1 may be helpful in understanding intended audiences and designer beliefs about deficits that influence the design of each type of LAD. Stated simply, the intended audience and design of each type of dashboard imply that the problem is located in a particular place. This deficit might be ascribed to the student (e.g., poor study habits), the teacher (e.g., poor pedagogy), or the content (e.g., poor design).

Table 1

Targets of Underlying Deficit Mindsets that Influence Different Types of LADs

| Target of Deficit Mindset | How to Improve Student Performance | Types of LADs Influenced by Value System |
|---|---|---|
| Student | Encourage student effort; Modify student behavior | Student-facing LAD; Teacher-facing LAD |
| Teacher | Improve teaching strategies; Intervene with at-risk students | Teacher-facing LAD |
| Content | Improve content | Teacher-facing LAD; Continuous improvement LAD |

Note that each type of deficit mindset lends itself to specific actions that dashboard users can take to improve the learning experience. For example, students can study more and better manage their time; teachers can improve their teaching strategies, motivate and inspire their students, and adjust the resources they use; and content creators can improve the quality of their content. Rather than attributing poor performance to external factors, such as how students are using content or which teaching strategies are employed, a continuous improvement LAD attributes poor performance to poor content quality and uses metrics that help content creators improve their content. When designing a continuous improvement LAD, then, dashboard designers should ensure that the information displayed on the dashboard is relevant to and actionable by content creators and that it also provides ongoing information about how content changes are impacting student performance.

As a recent example of a continuous improvement LAD, in 2018, the Massachusetts Digital Services team developed a dashboard that helped “Mass.gov content authors make data-driven decisions to improve their content” (Desrosiers, 2018). The dashboard took data from a variety of sources, including Google Analytics, Siteimprove, and Superset, and integrated the data into the website’s content management system (CMS). As a result, content authors could simply select an Analytics tab when editing their content to view performance metrics and access recommendations to improve their content. The team also collected ongoing online survey data to obtain direct feedback from Mass.gov users about their satisfaction with the site, reasons for using the site, and suggestions to improve the site.

After eight months of analyzing potential performance indicators and validating indicators with five partner agencies using a sample set of the website’s 100 most-visited pages, the dashboard developers summarized performance indicators into four categories: (1) findability, (2) outcomes, (3) content quality, and (4) user satisfaction (Desrosiers, 2018). Each category received a score from 0 to 4, and the four category scores were then averaged to create an overall score. In addition, the dashboard included general recommendations for ways content creators could improve content in each of the four categories.
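The scoring arithmetic can be illustrated with a short sketch. It assumes an unweighted mean of the four category scores, which is only one reading of "averaged"; the source does not say whether the Mass.gov dashboard weighted its categories. The function name, dictionary keys, and example scores are hypothetical.

```python
# Category keys mirror the four categories named above; the key spellings
# and the example scores are hypothetical, not taken from the Mass.gov dashboard.
CATEGORIES = ("findability", "outcomes", "content_quality", "user_satisfaction")


def overall_score(scores: dict[str, float]) -> float:
    """Return the unweighted mean of the four category scores (each 0 to 4)."""
    missing = [name for name in CATEGORIES if name not in scores]
    if missing:
        raise ValueError(f"missing category scores: {missing}")
    for name in CATEGORIES:
        if not 0 <= scores[name] <= 4:
            raise ValueError(f"{name} must be between 0 and 4")
    return sum(scores[name] for name in CATEGORIES) / len(CATEGORIES)


page_scores = {
    "findability": 3,
    "outcomes": 4,
    "content_quality": 2,
    "user_satisfaction": 3,
}
print(overall_score(page_scores))  # 3.0
```

In this made-up example, the low content quality score pulls the overall score down, which is the kind of signal that would direct a content creator to the recommendations for that category.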

The dashboard was valuable to content creators because it showed how specific content pages were performing over time and provided specific suggestions on how to improve content. For example, if a content creator saw that a page about SNAP benefits had a Content Quality score of 2, the content creator could find and implement recommendations from the dashboard, such as “Use SiteImprove to check for broken links and fix them” and “Spell out acronyms the first time you use them” (Desrosiers, 2018).

As this example illustrates, designing an effective continuous improvement LAD can be a complex task that requires a deep understanding of both the dashboard users (in this case, the creators of the Mass.gov content pages) and the people accessing the content (in this case, the visitors to Mass.gov). Just as a continuous improvement LAD facilitates iterative improvements to an educational website or platform’s content, this example suggests that an iterative process can be used in designing and developing the dashboard itself, wherein user feedback can be used to improve the usability and efficacy of the dashboard.

Best Practices in Designing a Continuous Improvement LAD

The data analyzed and visualizations displayed on a continuous improvement LAD for a government website would likely be very different from those on a different site, such as an online textbook publishing platform, “because pedagogical, technical and organisational aspects of learning are complex, [and] they must be carefully interpreted within the used context” (Viberg, 2019, p. 1). Yet, despite differences from one continuous improvement LAD to another, effective continuous improvement LADs may share several characteristics.

In 2004, as analytics dashboards were emerging in business and other fields, data visualization consultant and author Stephen Few defined a dashboard as “a visual display of the most important information needed to achieve one or more objectives; consolidated and arranged on a single screen so the information can be monitored at a glance” (p. 3). Fifteen years later, dashboards have become a widely used data analytics tool, and several recommendations have been suggested for designing effective dashboards. Many of these recommendations fall within four categories: (1) design for the dashboard’s intended purpose; (2) choose relevant metrics; (3) ensure data are current and accurate; and (4) use effective visual displays. I will now describe each of these recommendations in detail.

Design for the Dashboard’s Intended Purpose

First, the purpose of a dashboard should direct the dashboard’s design. As explained previously, continuous improvement LADs facilitate the continuous improvement of online content and are informed by a content-deficit mindset. In addition, the design of continuous improvement LADs is influenced by the needs of the content creators using the dashboards and by the type of educational content assessed by the dashboards.

Designers of continuous improvement LADs may benefit from frequent communication and collaboration with content creators (De Laet, 2018). Through interviews and surveys, dashboard designers can better understand the information content creators need to know about the students using the learning content so they can change the content to better meet the students’ needs. For example, authors publishing textbooks on an online textbook publishing platform may desire to know which textbook chapters are most relevant to students and which topics students have a hard time understanding. These questions may help dashboard designers choose appropriate metrics for the dashboard that pertain to this information. Dashboard designers can show iterations of the dashboard design to content creators and use their feedback to inform subsequent iterations of the dashboard’s design.

Choose Relevant Metrics

Second, metrics are the building blocks of any dashboard and of the visualizations displayed on that dashboard. Metrics are “measures of quantitative assessment used for assessing, comparing, and tracking performance,” including comparing current performance with historical data or objectives (Young, 2019). Metrics should emanate consciously and directly from the intended purpose of the dashboard. In the case of continuous improvement LADs, the selected metrics should give content creators actionable data for evaluating student learning and improving content.

In business analytics, the term key performance indicators (KPIs) is used to identify meaningful metrics. The implication that metrics should measure only important, or key, indicators and that the measurement is one of performance applies neatly to continuous improvement LADs. Are students able to successfully demonstrate learning through knowledge assessments and other exercises? How often is a particular learning product (e.g., a textbook or educational video) accessed or completed? How do students rate the quality of the resource? Put simply, continuous improvement LADs should report key metrics to content creators to help them improve their content.
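As a minimal sketch of how the three questions above could become metrics, the following example computes a completion rate, a mean assessment score, and a mean quality rating for one learning resource. The record fields (`completed`, `quiz_score`, `rating`) and the sample data are assumptions made for illustration; a real platform would define its own data model.

```python
def content_kpis(sessions: list[dict]) -> dict:
    """Summarize hypothetical per-student session records for one resource."""
    if not sessions:
        return {"completion_rate": None, "mean_quiz_score": None, "mean_rating": None}

    completed = sum(1 for s in sessions if s["completed"])
    # Students who skipped the quiz or the rating are excluded from that mean.
    quiz_scores = [s["quiz_score"] for s in sessions if s["quiz_score"] is not None]
    ratings = [s["rating"] for s in sessions if s["rating"] is not None]

    return {
        "completion_rate": completed / len(sessions),
        "mean_quiz_score": sum(quiz_scores) / len(quiz_scores) if quiz_scores else None,
        "mean_rating": sum(ratings) / len(ratings) if ratings else None,
    }


# Made-up records: quiz scores are percentages, ratings use a 1-5 scale.
sessions = [
    {"completed": True, "quiz_score": 80, "rating": 4},
    {"completed": True, "quiz_score": 60, "rating": None},
    {"completed": False, "quiz_score": None, "rating": 2},
    {"completed": True, "quiz_score": 100, "rating": 3},
]
print(content_kpis(sessions))
# {'completion_rate': 0.75, 'mean_quiz_score': 80.0, 'mean_rating': 3.0}
```

Keeping the set of metrics this small reflects the emphasis on key indicators: each value maps to one question a content creator can act on.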

Ensure Data Are Current and Accurate

Third, regardless of which metrics are selected, a dashboard is only useful if it is based on current, accurate information. Dashboards should be connected to accurate data sources and should be updated regularly so that content creators can make informed decisions on how to improve their content. While some types of dashboards, such as strategic business dashboards, may only need data to be updated monthly, quarterly, or even annually, other types of dashboards, such as operational business dashboards, may require real-time data updates (Few, 2013). Designers of continuous improvement LADs should consider both the purpose of the dashboard and the needs of dashboard users when determining which data sources to use and how frequently the data should be updated.

Continuous improvement LADs often require data integration, which is defined as “the process of collecting data from disparate locations and systems, and presenting [the data] in a meaningful and useful way” (Boonie, 2016, p. 1). For example, as mentioned previously, the Mass.gov dashboard combined data from Google Analytics, Siteimprove, Superset, and other sources. Dashboard designers should ensure that data from disparate databases has been properly transformed and normalized before being integrated into a continuous improvement LAD (Boonie, 2016).
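The integration step might look like the following sketch, which rescales each metric to a common 0-1 range and then merges the values into one record per page. Min-max rescaling is only one possible reading of "normalized" and is an assumption here, as are the metric names, page identifiers, and values.

```python
def min_max_normalize(values: dict[str, float]) -> dict[str, float]:
    """Rescale values to the 0-1 range so metrics in different units are comparable."""
    lowest, highest = min(values.values()), max(values.values())
    if highest == lowest:
        # Every page has the same value; return 0.0 to avoid dividing by zero.
        return {key: 0.0 for key in values}
    return {key: (value - lowest) / (highest - lowest) for key, value in values.items()}


def integrate(sources: dict[str, dict[str, float]]) -> dict[str, dict[str, float]]:
    """Merge per-metric exports into one record per page, keyed by page ID."""
    merged: dict[str, dict[str, float]] = {}
    for metric_name, raw_values in sources.items():
        for page_id, value in min_max_normalize(raw_values).items():
            merged.setdefault(page_id, {})[metric_name] = value
    return merged


# Hypothetical exports from two separate systems, keyed by page ID.
sources = {
    "page_views": {"chapter-1": 1200, "chapter-2": 300, "chapter-3": 750},
    "quiz_score_pct": {"chapter-1": 90, "chapter-2": 60, "chapter-3": 75},
}
dashboard_rows = integrate(sources)
print(dashboard_rows["chapter-3"])  # {'page_views': 0.5, 'quiz_score_pct': 0.5}
```

Because every source is keyed by the same page ID, a page missing from one export simply lacks that metric in its merged record, which a dashboard could surface as a data gap rather than a zero.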

Use Effective Visual Displays

Fourth, after selecting appropriate metrics and integrating relevant data sources, dashboard designers must decide how to effectively display data. A variety of books and research articles have been written about dashboard design and data visualization (see Few, 2012, 2013; Knaflic, 2015); in this section, I will describe a few key principles.

To begin, continuous improvement LADs should display high-level summaries that can be viewed and interpreted at a glance. Because humans have limited working memory, they are not able to store large amounts of visual information at a time (Yoo, Lee, Jo, & Park, 2015). As a result, dashboards are less effective if users must scroll through large amounts of content or select various tabs to identify and piece together information. Instead of having excessive visualizations, dashboards should prioritize information and display on a single screen the information that is most important. In accordance with psychologist George A. Miller’s (1956) observation that the average person can hold seven, plus or minus two, objects in working memory, some dashboard designers recommend that no more than 5 to 9 visualizations be displayed on a dashboard’s primary view. Interactions such as buttons, tabs, tooltips, and scrolling can be used to display additional content without overwhelming the user; however, the dashboard should not rely on these interactions to report key information (Bakusevych, 2018).

As Stephen Few explained (2004), a defining characteristic of a dashboard is “concise, clear, and intuitive display mechanisms” (p. 3). Dashboards should not use ostentatious or distracting visuals; rather, dashboards should apply minimalistic design principles that draw attention to important data. For example, dashboards should appropriately use negative space (sometimes called white space) so that the information is not too crowded and relevant data stand out to users (Bakusevych, 2018).

Dashboard designers should select the appropriate visuals to display different types of information. For example, bar charts are useful in comparing values at a point in time (e.g., current quality rating of an educational video), whereas line charts are useful in comparing values over time (e.g., total student savings as a result of using an open educational resource textbook). While a large variety of graph types may be used to report data, Tables 2 and 3 summarize common graph types and may prove useful in deciding which visuals to display for different types of data (see Bakusevych, 2018; Knaflic, 2015, pp. 35-69).

Table 2

Purposes of Common Graphs Representing Data at a Point in Time

| Analyze relationships | Compare values | Analyze composition | Analyze distribution |
|---|---|---|---|
| Scatterplots; Bubble charts; Network diagrams | Bar/column charts; Circular area charts | Tree map; Heat map; Pie/donut chart | Scatterplots; Histograms; Bell curves |

Table 3

Purposes of Common Graphs Representing Data Over Time

| Analyze relationships | Compare values | Analyze composition | Analyze distribution |
|---|---|---|---|
| N/A | Line graphs; Slope graphs | Stacked column chart; Stacked area chart; Waterfall chart | N/A |

As Tables 2 and 3 indicate, graphs may be used to analyze relationships, composition, and distribution, as well as to compare values. Whether the data depict a specific point in time or illustrate changes over a period of time influences which type of graph should be used.
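The two tables can also be read as a lookup from an analytic purpose and a time frame to candidate graph types. The sketch below encodes that reading; the key names (`"comparison"`, `"over_time"`, and so on) and the function name are hypothetical labels chosen for illustration, and the combinations the tables mark as N/A return an empty list.

```python
# Chart options transcribed from Tables 2 and 3; the key names are illustrative.
CHART_OPTIONS = {
    ("relationships", "point_in_time"): ["scatterplot", "bubble chart", "network diagram"],
    ("comparison", "point_in_time"): ["bar/column chart", "circular area chart"],
    ("composition", "point_in_time"): ["tree map", "heat map", "pie/donut chart"],
    ("distribution", "point_in_time"): ["scatterplot", "histogram", "bell curve"],
    ("relationships", "over_time"): [],  # listed as N/A in Table 3
    ("comparison", "over_time"): ["line graph", "slope graph"],
    ("composition", "over_time"): [
        "stacked column chart",
        "stacked area chart",
        "waterfall chart",
    ],
    ("distribution", "over_time"): [],  # listed as N/A in Table 3
}


def suggest_charts(purpose: str, time_frame: str) -> list[str]:
    """Return candidate graph types for a purpose and time frame."""
    try:
        # Copy the list so callers cannot modify the shared table.
        return list(CHART_OPTIONS[(purpose, time_frame)])
    except KeyError:
        raise ValueError(f"unknown combination: {purpose!r}, {time_frame!r}") from None


print(suggest_charts("comparison", "over_time"))  # ['line graph', 'slope graph']
```

A lookup like this only narrows the options; the final choice still depends on the audience and the specific metric being displayed.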

Dashboards directed toward users whose native language reads from left to right should display the most important information in the upper left and then organize the rest of the information in a Z-pattern. In other words, dashboard designers should design with the assumption that users will view the first row of visualizations from left to right and then move down to the next row following the same pattern. Graphs with related information should be close to each other so users do not have to look back and forth between distant areas of the dashboard.

In addition to following these general recommendations, dashboard designers should use effective visual displays to address a challenge unique to continuous improvement LADs: the data used to inform actions are iterative. When content creators make adjustments to content based on feedback from the dashboard, the data about that content are no longer valid for making further judgments. For example, a continuous improvement LAD on an online textbook publishing platform may display the scores of knowledge check questions. If a content creator observes that a specific question has low scores, the content creator may clarify parts of the chapter or adjust the wording of the knowledge check question. In either case, after the adjustments are made, the data about the knowledge check scores are no longer valid.

As this example illustrates, each iteration, improvement, or adjustment made to content invalidates previous data about that content. How can effective visual design address this problem? To compensate for the problem of invalid data, continuous improvement LADs must clearly document iterative changes to content. In the case of the adjusted knowledge check question, the dashboard must visually show content creators how scores on the knowledge check question changed in relation to the adjustments made. When the dashboard clearly displays when content changes occurred, content creators can see to what extent their changes led to desired outcomes and can know when additional changes are needed.
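One way to prepare data for such a display is to group scores by the content version that was live when each attempt was made. The sketch below is a minimal illustration under assumed inputs: a list of dated scores and a list of dates on which the content was revised. The function name and sample data are hypothetical, and attempts made on a revision date are counted toward the revised version.

```python
from bisect import bisect_right
from collections import defaultdict
from datetime import date


def mean_score_by_version(attempts, change_dates):
    """Average scores separately for each content version.

    Version 0 covers attempts before the first change, version 1 covers
    attempts on or after the first change, and so on.
    """
    ordered_changes = sorted(change_dates)
    buckets = defaultdict(list)
    for day, score in attempts:
        # Count how many revisions happened on or before this attempt.
        version = bisect_right(ordered_changes, day)
        buckets[version].append(score)
    return {version: sum(scores) / len(scores) for version, scores in sorted(buckets.items())}


# Made-up percent-correct scores for one knowledge check question.
attempts = [
    (date(2019, 3, 1), 40),
    (date(2019, 3, 8), 50),
    (date(2019, 3, 20), 70),
    (date(2019, 3, 27), 90),
]
changes = [date(2019, 3, 15)]  # hypothetical date the question was reworded
print(mean_score_by_version(attempts, changes))  # {0: 45.0, 1: 80.0}
```

A dashboard could plot these per-version means, or mark the revision dates on a line chart of scores, so that earlier data remain visible as context without being mistaken for evidence about the current version.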

Conclusion

As students enroll in online classes and engage with digital content, they leave behind an abundance of data that, if properly collected, reported, and analyzed, can lead to enhanced learning. Learning analytics dashboards are an effective tool to visualize and report these data so students, teachers, and content creators can make informed decisions about their role in the learning process. An emerging type of LAD, known as a continuous improvement LAD, helps content creators make incremental improvements to their content using data about student performance with the content and user feedback. While the metrics and visualizations of continuous improvement LADs vary depending on the type of content the dashboard seeks to improve, several data visualization and dashboard best practices may help in designing an effective continuous improvement LAD. These practices include designing for the dashboard’s intended purpose, selecting relevant metrics, maintaining accurate and up-to-date data, and using effective visual displays. As more educational platforms begin to collect and report data about the quality and performance of their content, content creators using these platforms will be able to make informed, data-driven decisions about how to improve their content and increase student learning.

References

Bakusevych, T. (2018, July 17). 10 rules for better dashboard design. Retrieved from https://uxplanet.org/10-rules-for-better-dashboard-design-ef68189d734c

Boonie, M. (2016). Determining, integrating, and displaying your metrics and data [White paper]. Retrieved from https://www.pharmicaconsulting.com/wp-content/uploads/2016/10/Determining-Integrating-and-Displaying-your-Metrics-and-Data_2.pdf

Desrosiers, G. (2018, August 20). Custom dashboards: Surfacing data where Mass.gov authors need it. Retrieved from https://medium.com/massgovdigital/custom-dashboards-surfacing-data-where-mass-gov-authors-need-it-80616c97dff4

De Laet, T. (2018). Learning Dashboards for Actionable Feedback [PowerPoint presentation]. Retrieved from http://www.humane.eu/fileadmin/user_upload/Learning_dashboards_for_actionable_feedback.pdf

Elias, T. (2011). Learning analytics: Definitions, processes and potential. Retrieved from http://learninganalytics.net/LearningAnalyticsDefinitionsProcessesPotential.pdf

Few, S. (2004, March 20). Dashboard confusion. Intelligence Enterprise. Retrieved from https://www.perceptualedge.com/articles/ie/dashboard_confusion.pdf

Few, S. (2012). Show me the numbers: Designing tables and graphs to enlighten (2nd ed.). Burlingame, CA: Analytics Press.

Few, S. (2013). Information dashboard design: Displaying data for at-a-glance monitoring (2nd ed.). Burlingame, CA: Analytics Press.

Knaflic, C. N. (2015). Storytelling with data: A data visualization guide for business professionals. Hoboken, NJ: John Wiley & Sons, Inc.

Loftus, W. (2012). Demonstrating success: Web analytics and continuous improvement. Journal of Web Librarianship, 6(1), 45-55.

Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63(2), 81-97.

Moore, M. (1989). Three types of interaction. American Journal of Distance Education, 3, 1-7. doi:10.1080/08923648909526659

Park, Y., & Jo, I. H. (2015). Development of the learning analytics dashboard to support students’ learning performance. Journal of Universal Computer Science, 21(1), 110.

Roberts, L. D., Howell, J. A., & Seaman, K. (2017). Give me a customizable dashboard: Personalized learning analytics dashboards in higher education. Technology, Knowledge and Learning, 22(3), 317-333. doi:10.1007/s10758-017-9316-1

SoLAR. (2011). Open Learning Analytics: an integrated & modularized platform. Society for Learning Analytics Research. Retrieved from http://solaresearch.org/OpenLearningAnalytics.pdf

Viberg, O. (2019). How to Implement Data-Driven Analysis of Education: Opportunities and Challenges.

Yoo, Y., Lee, H., Jo, I. H., & Park, Y. (2015). Educational dashboards for smart learning: Review of case studies. In Chen, G., Kumar, V., Kinshuk, Huang, R. & Kong, S. C. (Eds.), Emerging issues in smart learning (pp. 145-155). Springer, Berlin, Heidelberg.

Young, J. (2019). Metrics. Retrieved from https://www.investopedia.com/terms/m/metrics.asp