From time to time new technologies provide us with a qualitatively different ability to engage in previously possible activities. For example, 20 years ago it was already possible to publish an essay online. You simply used the command line program Telnet to log in to a remote server, navigated into the directory from which your webserver made HTML files available to the public, launched the pico editor from the command line, wrote your essay, and manually added all the necessary HTML tags. Today, open source blogging software like WordPress makes publishing an essay online as easy as using a word processor. Yes, it was possible to publish essays online before, but the modern experience is qualitatively different.

“Evaluate” is the final step in the traditional ADDIE meta-model of instructional design, and it has always been possible—if, at times, expensive and difficult—to evaluate the effectiveness of instructional materials. Modern technology has made the process of measuring the effectiveness of instructional materials a qualitatively different experience. Gathering data in the online context is orders of magnitude less expensive than gathering data in classrooms, and open source analysis tools have greatly simplified the process of analyzing these data.

Historically, any needed improvements discovered during the evaluation process would take a significant amount of time to reach learners, as they could only be accessed once new editions of a book were printed or new DVDs were pressed. Again, modern technology makes the delivery of improvements a qualitatively different exercise. When instructional materials are delivered online, instructional designers can engage in continuous delivery practices, where improvements are made available to learners immediately, as often as multiple times per day.

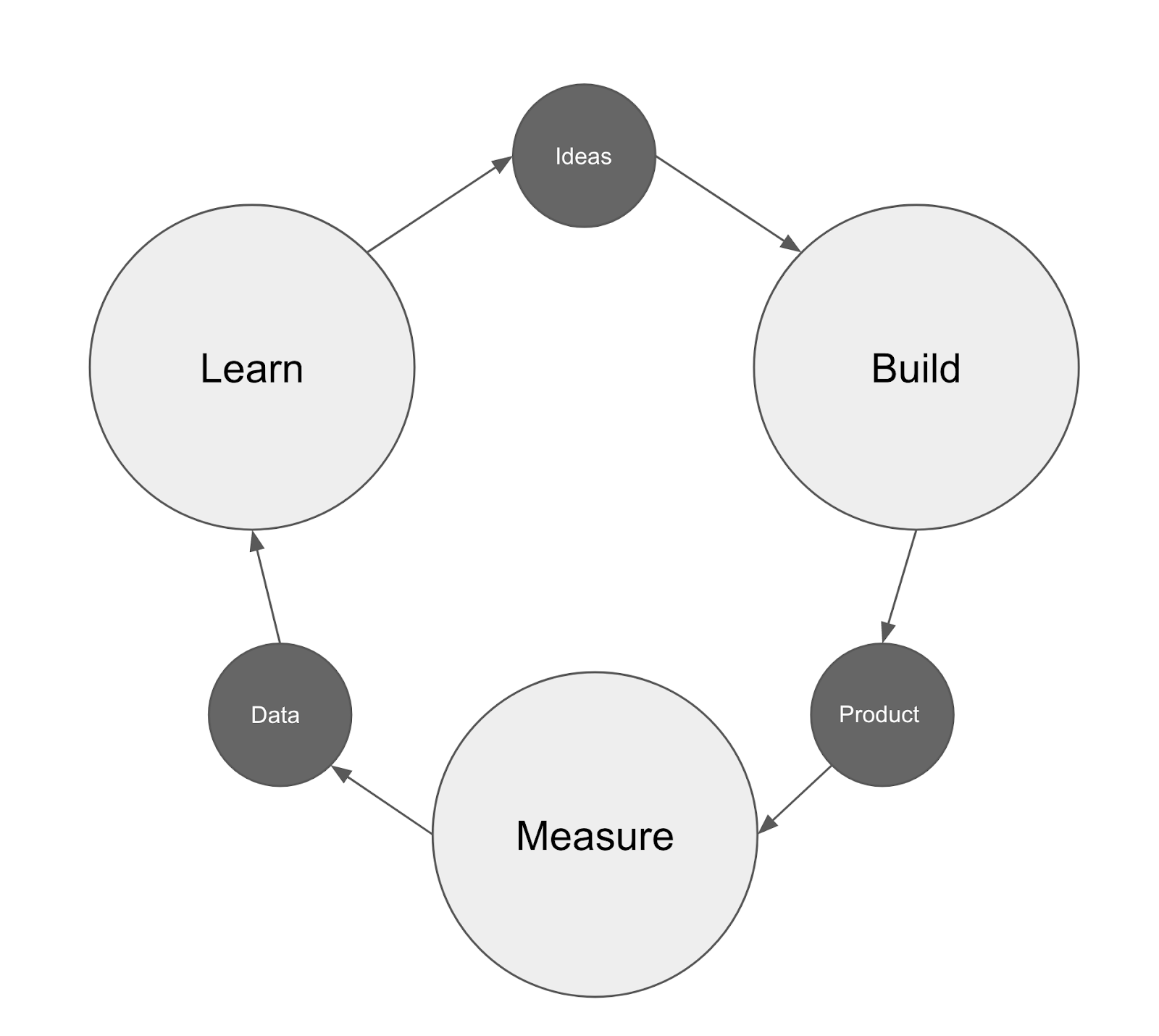

Ries (2011) describes a modern approach to continuous improvement, designed for use in the context of online services, called the “build - measure - learn cycle.” It is illustrated in Figure 1.

Figure 1

The Build - Measure - Learn Cycle

In this chapter we adapt the build - measure - learn cycle for use by instructional designers who want to engage in continuous improvement. Because our focus is on the improvement of instructional materials, we do not discuss the creation of the first version of the materials. (The first version of the materials could be open educational resources created by someone else or a first version that you created previously.)

The chapter will proceed as follows:

- Conceptual Framework: We argue that all instructional materials are hypotheses, or our best guesses, informed by research, about what instructional design approach will support student learning in a specific context. Thinking this way will naturally lead us to collect and analyze data to test the effectiveness of our instructional materials.

- Build: We describe the implications of designing for data collection, together with the instrumentation and tooling that must be built in order to collect the data necessary for continuous improvement.

- Measure: We describe the process of analyzing data in order to identify portions of the instructional materials that are not effectively supporting student learning.

- Learn: We discuss methods to use when reviewing less effective portions of the instructional materials and deciding what improvements to make before beginning the cycle again.

- Technical Note: We briefly pause to discuss the role of copyright, licensing, and file formats in continuous improvement.

- Worked Example: We demonstrate one trip through the cycle with a worked example.

- Conclusion: We end with some thoughts about the imperative implied for instructional designers by the existence and relative ease of use of continuous improvement approaches like the build - measure - learn cycle.

Conceptual Framework

Instructional Materials Are Hypotheses

People who design instructional materials (whom we will refer to as instructional designers throughout) make hundreds of decisions about how to best support student learning. Each decision is a hypothesis of the form “in the context of these learners and this topic, applying this instructional design approach in this manner will maximize students’ likelihood of learning.” The ways in which these individual decisions are interwoven create a network of hypotheses about how best to support student learning.

Hypotheses Need to Be Tested

It reveals a fatal lack of curiosity for an instructional designer to simply say “these materials were designed in accordance with current research on learning” without following through to measure their actual effectiveness with actual learners in the actual world. While designing instructional materials in accordance with research is a positive first step, to our minds the most important measure of the quality of instructional materials is the degree to which they actually support student learning. Questions of whether or not the materials are informed by research, are finished on schedule and on budget, are stunningly beautiful, render correctly on a mobile device, or were authored by a famous academic become meaningless if students who use the materials do not learn what the designers intended.

Initial Hypotheses Are Seldom Correct

Hypotheses need to be refined in an ongoing cycle of improvement. Data collected during student use of content and from assessments of learning can be used to identify specific portions of the instructional materials (i.e., specific instructional design hypotheses) that are not successfully supporting student learning. Once these underperforming designs (hypotheses) are identified, they can be redesigned, improved, and incorporated into a new version of the instructional materials. The updated collection of instructional design hypotheses can then be deployed for student use, and the cycle of continuous improvement can begin again.

Build: Designing for Data, Instrumentation, and Tools for Data Collection

In order to engage in continuous improvement, instructional materials must be designed for data collection. There must be a unifying design framework that will allow data from a wide range of sources to be aggregated meaningfully. The method we will describe throughout this chapter organizes instructional materials around a network of learning outcomes. In this method of designing for data collection, all instructional materials (e.g., readings, simulations, videos, practice opportunities) are aligned with one or more learning outcomes. All forms of assessment, both formative and summative, are also aligned with one or more learning outcomes. (This alignment must be done at the individual assessment item level.)

Once instructional materials have been designed for data collection, tools and instrumentation must be created so that the data can actually be collected and managed. The system that mediates student use of the instructional materials (e.g., a learning management system) must be capable of (a) expressing the relationships between learning outcomes, instructional materials, and assessments, (b) capturing data about student engagement with these instructional materials, and (c) capturing item-level data about student engagement with, and performance on, assessments. The data collected by the system should be able to answer questions such as, for any given learning outcome, what instructional materials in the system are aligned with that outcome? (If instructional activities are “aligned with” a learning outcome, student engagement with the instructional activities should support mastery of the outcome.) For any given learning outcome, what assessment items in the system are aligned with that outcome? (If assessments are “aligned with” a learning outcome, student success on these assessments should provide evidence that they have mastered the outcome).
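The alignment relationships described above can be sketched as a small data structure. This is only an illustration under stated assumptions: the class name `OutcomeMap`, its methods, and the identifiers such as `LO1` and `quiz1-q4` are hypothetical and do not come from any particular learning management system.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class OutcomeMap:
    """Hypothetical alignment store: outcome id -> aligned material ids and item ids."""

    materials: dict[str, set[str]] = field(default_factory=dict)
    items: dict[str, set[str]] = field(default_factory=dict)

    def align_material(self, outcome: str, material: str) -> None:
        # Record that an instructional material supports this outcome.
        self.materials.setdefault(outcome, set()).add(material)

    def align_item(self, outcome: str, item: str) -> None:
        # Alignment is recorded per assessment item, not per whole quiz.
        self.items.setdefault(outcome, set()).add(item)

    def materials_for(self, outcome: str) -> set[str]:
        # "What instructional materials are aligned with this outcome?"
        return self.materials.get(outcome, set())

    def items_for(self, outcome: str) -> set[str]:
        # "What assessment items are aligned with this outcome?"
        return self.items.get(outcome, set())


# Made-up identifiers, for illustration only.
course = OutcomeMap()
course.align_material("LO1", "reading-1.2")
course.align_material("LO1", "video-1.3")
course.align_item("LO1", "quiz1-q4")
course.align_item("LO2", "quiz1-q5")
```

With a structure like this in place, engagement events and item responses can each be tagged with an outcome identifier, which is what allows data from different sources to be aggregated per learning outcome.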

Measure: Using RISE Analysis to Identify Less Effective Learning Materials

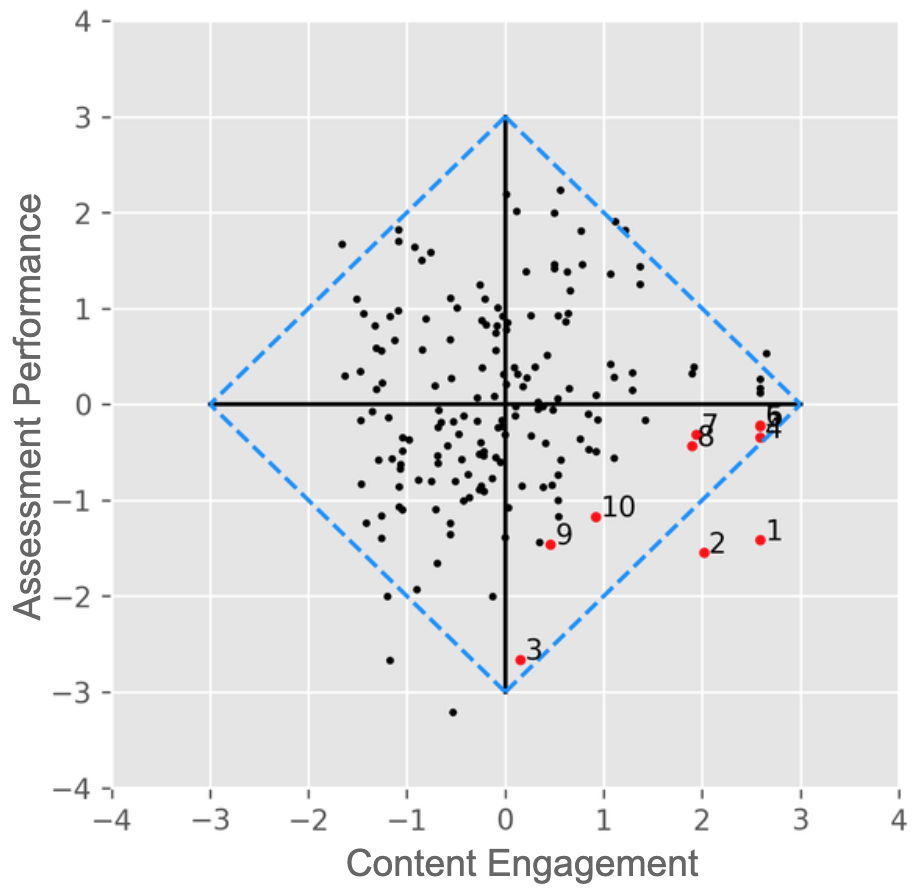

As described in Bodily, Nyland, and Wiley (2017), activity engagement data and assessment performance data can be analyzed together to identify learning outcomes whose aligned instructional materials are not sufficiently supporting student mastery (as demonstrated by performance on aligned assessments). The purpose of Resource Inspection, Selection, and Enhancement (RISE) analysis is to identify learning outcomes where students were highly engaged with aligned instructional materials, but simultaneously performed poorly on aligned assessments.

Each point in Figure 2 represents a learning outcome. The x-axis is engagement with instructional materials and the y-axis is assessment performance, both converted to z-scores. The bottom-right quadrant (high engagement, low performance) contains the outcomes that should be targeted for improvement. These outcomes are numbered to indicate the order in which they should be addressed.

Figure 2

A RISE Analysis Plot

An open source software implementation of RISE analysis is described in Wiley (2018). This greatly simplifies the process of running RISE analyses, as long as appropriate data on learning outcome names, content engagement, and assessment performance are available.
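To make the quadrant logic concrete, the following is a minimal sketch in Python. It is not the API of the package described in Wiley (2018). The function names and the sample numbers are hypothetical, and the ordering rule (largest gap between the engagement and performance z-scores first) is an assumption made for illustration; the published method may rank outcomes differently.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardize numbers to mean 0 and standard deviation 1.

    Assumes the values are not all identical (otherwise the deviation is 0).
    """
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def rise_candidates(outcomes):
    """Return outcome names in the high-engagement, low-performance quadrant.

    `outcomes` maps outcome name -> (engagement, performance) as raw scores.
    """
    names = list(outcomes)
    eng_z = z_scores([outcomes[n][0] for n in names])
    perf_z = z_scores([outcomes[n][1] for n in names])
    flagged = [
        (n, e, p) for n, e, p in zip(names, eng_z, perf_z) if e > 0 and p < 0
    ]
    # Assumed ordering: largest gap between engagement and performance first.
    flagged.sort(key=lambda row: row[1] - row[2], reverse=True)
    return [n for n, _, _ in flagged]


# Made-up numbers, for illustration only.
data = {
    "LO1": (0.90, 0.40),
    "LO2": (0.50, 0.80),
    "LO3": (0.80, 0.55),
    "LO4": (0.30, 0.85),
}
ranked = rise_candidates(data)
print(ranked)
```

In this toy data set, LO1 and LO3 have above-average engagement and below-average performance, so they are the two outcomes flagged for review.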

Learn: Understanding Why Learning Outcomes End up in the Bottom Right Quadrant

Once learning outcomes are identified as being in the bottom right quadrant of a RISE analysis plot, the cause of the problem can be isolated. For brevity, we will refer to learning outcomes in the bottom right quadrant of a RISE analysis plot as “underperforming learning outcomes” below. The root of the problem can generally be identified in two steps.

The first step in isolating the problem with an underperforming learning outcome is evaluating the assessments aligned with that learning outcome. Are the assessments accurately measuring student learning? Questions to ask at this stage include: Are there technical problems with the assessment? Are items miskeyed? Are other sources of spurious or construct-irrelevant difficulty present? Are measures of reliability, validity, or discrimination unacceptably low? If the answer to any of these questions is yes, improvements should be made to problematic assessments, after which the instructional designer can stop working on this learning outcome and move on to the next. There is likely no need to make improvements to instructional materials aligned with this learning outcome.
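One of the checks named above, item discrimination, can be sketched alongside a simple difficulty measure. This is an illustrative sketch using two common item statistics, proportion correct and the item-total correlation; the function names and the sample responses are hypothetical, and for simplicity the total score here includes the item itself.

```python
from statistics import mean, pstdev


def item_difficulty(item_scores):
    """Proportion of students who answered the item correctly (scores are 0 or 1)."""
    return mean(item_scores)


def item_discrimination(item_scores, total_scores):
    """Pearson correlation between item score and total score.

    Assumes both lists vary; a constant list would make the denominator 0.
    """
    mi, mt = mean(item_scores), mean(total_scores)
    cov = mean((i - mi) * (t - mt) for i, t in zip(item_scores, total_scores))
    return cov / (pstdev(item_scores) * pstdev(total_scores))


# Made-up responses from five students, for illustration only.
totals = [9, 8, 4, 5, 7]
good_item = [1, 1, 0, 0, 1]     # stronger students tend to get it right
suspect_item = [0, 0, 1, 1, 0]  # stronger students tend to get it wrong

print(item_difficulty(good_item))
print(item_discrimination(good_item, totals))
print(item_discrimination(suspect_item, totals))
```

A clearly negative discrimination value, as for `suspect_item`, means that students who do well overall tend to miss the item, which is one signal that the item may be miskeyed and deserves a manual check.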

If the aligned assessments are functioning as intended, the instructional designer can move on to the second step—reviewing the instructional materials to determine why they aren’t sufficiently supporting student learning. This process is highly subjective and brings the full expertise of the instructional designer to bear. The instructional designer reviews the instructional materials aligned with the learning outcome and asks questions about why students might be struggling here. For example:

- Is there a mismatch between the type of information being taught and the instructional design approach originally selected? For example, if students are learning a classification task, are examples and non-examples provided without a specific discussion of the critical attributes that separate instances from non-instances?

- Is there a mismatch in Bloom’s Taxonomy level between the learning outcome, the instructional materials, and the assessment? (For example, are the learning outcome and instructional materials primarily at the Remember level, while the assessments require students to Apply?)

- Have the instructional materials failed to provide learners with an opportunity to practice in a no/low-stakes setting and receive feedback on the current state of their understanding?

We cannot list every question an instructional designer might ask, but we hope these examples are illustrative. Talking with students can also be incredibly helpful at this stage. These conversations are an effective way for the instructional designer to zero in on root causes of students’ misunderstandings.

Once the instructional designer believes they have identified the problems (i.e., they have a new hypothesis about how to better support student learning), new or existing instructional materials and assessments can be created, adapted, or modified. Students can also be powerful partners and collaborators in creating improvements to the instructional materials (e.g., OER-enabled pedagogy as described by Wiley and Hilton (2018)).

When this (Build) process is completed, the new or improved materials can be released to students immediately. Once students are using the new version of the materials, this use will result in the creation of new data which the instructional designer can examine using RISE analysis (Measure). These analyses support the instructional designer in forming new hypotheses about why students aren’t succeeding (Learn). When this continuous improvement process is followed, instructional materials should become more effective at supporting student learning with each trip through the cycle.

Technical Note: The Role of Copyright and File Formats

Before adaptations or modifications can be made, instructional designers must have legal permission to make changes to the instructional materials. Because copyright reserves the right to create derivative works to the copyright holder, and improved versions of instructional materials are often derivative works, one of two conditions must hold. In the first condition, the instructional designer (or their employer) must hold the copyright to the instructional materials, making the creation and distribution of improved versions legal. In the second condition, the instructional materials must be licensed under an open license (like a Creative Commons license) that grants the instructional designer permission to create derivative works (that is, improved versions of the instructional materials).

Legal permission to create derivative works can be rendered ineffective if the instructional materials are not available in a technical format amenable to editing (e.g., HTML). ALMS analysis, as described in Hilton, Wiley, Stein, and Johnson (2010), includes four factors to consider regarding the “improvability” of instructional materials. The first factor is Access to editing tools: is the software needed to make changes commonly available (e.g., MS Word) or obscure (e.g., Blender)? The second factor is the Level of expertise required to make changes: is the content easy to change (e.g., PowerPoint) or difficult to change (e.g., an interactive simulation written in JavaScript)? The third factor is whether or not the instructional materials are Meaningfully editable: is the document a scanned image of handwritten notes (whose text is not easily editable) or an HTML file (easily editable)? The final factor is Source file access: is the file format preferred for using the resource also the format preferred for editing it (e.g., an HTML file), or do the preferred formats for using and editing differ (e.g., PSD versus JPG)?

If the instructional materials you are working with do not belong to you or your employer, are not openly licensed, or are available only in file formats that are not conducive to adaptation and modification, you may not be able to engage in continuous improvement.

A Worked Example

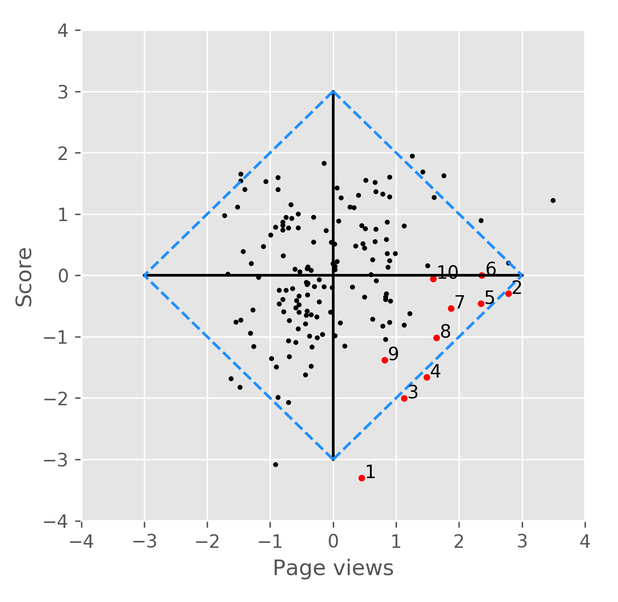

Lumen Learning, a company that offers instructional materials for college classes that can be adopted in place of traditional textbooks, offers a Biology for Non-majors course in its Waymaker platform. This platform allows instructional designers to enter learning outcomes and align all instructional materials and assessment items with the learning outcomes. A RISE analysis was conducted using the content engagement data and assessment performance data for all students who took the Biology for Non-majors course during a semester. Among the top 10 underperforming learning outcomes it identified, the RISE analysis revealed that students were performing poorly on assessments aligned with the learning outcome “compare inductive reasoning with deductive reasoning” despite the fact that students were engaging with the aligned instructional materials at an above average rate (see outcome 1 in Figure 3 below). This learning outcome was selected for continuous improvement work.

Figure 3

Biology for Non-Majors RISE Analysis Plot

A review of the aligned assessment items by an instructional designer revealed that the items appeared to be keyed correctly and free from other problems. Following this review of the aligned assessments, the instructional designer reviewed the aligned instructional materials guided by the question, “why are students who use these instructional materials not mastering the outcome?” The analysis revealed that the instructional materials for this outcome consisted of two paragraphs of text content, each of which defined one of the terms. No other instructional materials were provided in support of mastery of this learning outcome, and students appeared to be unable to remember which of these similar-sounding terms was which.

The instructional designer decided to make minor edits to the existing paragraphs to improve their clarity and also to create an online interactive practice activity (Koedinger et al., 2016) in support of this learning outcome. This activity provided students with mnemonic tools to help them remember which term is which, and combined these mnemonics with practice exercises in which students classify examples as either inductive or deductive and receive immediate, targeted feedback on their performance. The online interactive practice activity can be viewed in context at https://edtechbooks.org/-QwUE.

These new and updated instructional materials are now integrated into the existing materials and are being used by faculty and students across the United States. After another semester is over, the RISE analysis will be rerun. This new analysis will either confirm that the changes to the instructional materials have improved student learning, in which case other underperforming learning outcomes will be selected for continuous improvement, or it will show that there is still work to do to better support student learning of this outcome.

Conclusion

Modern technologies, including the internet and open source software, have radically decreased the cost and difficulty of collecting and analyzing learning data. Where evaluation alone was once prohibitively difficult and expensive, today the entire continuous improvement process is within reach of those who design instructional materials for use in online classes and other technology-mediated teaching and learning settings. While Ries (2011) described the build - measure - learn cycle as a way to rapidly increase a company’s revenue, we see a clear analog in which similar approaches can be used to rapidly increase student learning. We now live in a world where it is completely reasonable to expect instructional materials to be more effective at supporting student learning each and every term.

We invite the reader to help us make this possible state of affairs the actual state of affairs by engaging in continuous improvement activities in their own instructional design practice. And in the spirit of continuous improvement, we further invite the reader to join us in developing and refining the processes described in this chapter—in part by completing the survey at the end of this chapter and providing us feedback on how the chapter can be improved.

References

Bodily, R., Nyland, R., & Wiley, D. (2017). The RISE framework: Using learning analytics to automatically identify open educational resources for continuous improvement. The International Review of Research in Open and Distributed Learning, 18(2). https://edtechbooks.org/-ymD

Hilton, J., Wiley, D., Stein, J., & Johnson, A. (2010). The four R's of openness and ALMS analysis: Frameworks for open educational resources. Open Learning: The Journal of Open and Distance Learning, 25(1), 37-44. https://edtechbooks.org/-vqPr

Koedinger, K. R., McLaughlin, E. A., Zhuxin, J., & Bier, N. L. (2016). Is the doer effect a causal relationship?: How can we tell and why it's important. LAK '16: Proceedings of the Sixth International Conference on Learning Analytics & Knowledge. https://edtechbooks.org/-wNfS

Ries, E. (2011). The Lean Startup. Crown Business: New York.

Wiley, D. (2018). RISE: An R package for RISE analysis. Journal of Open Source Software, 3(28), 846. https://edtechbooks.org/-LKLb

Wiley, D., & Hilton, J. (2018). Defining OER-enabled pedagogy. The International Review of Research in Open and Distributed Learning, 19(4). https://edtechbooks.org/-tgNj