
“Data! Data! Data! I can’t make bricks without clay.” - Sherlock Holmes in "The Adventure of the Copper Beeches" by Arthur Conan Doyle

Core data practice knowledge and skills are essential to effective blended teaching. By the end of this chapter, you should be able to meet the following objectives:

Competency Checklist

- I can create formative assessments with mastery-thresholds (3.1).

- I can create a mastery-tracker with assessments aligned to learning outcomes (3.2).

- I can identify important patterns in student performance data (3.2).

- I can use data to recommend focused learning activities for students (3.3).

- I can use data to evaluate and improve assessments and instructional materials (3.3).

3.0 Introduction - Storytelling with Numbers

Data can be an intimidating topic of conversation for many reasons. One reason may be that data often appears in reports and analyses of measurements that are unfamiliar to us. Data are used to predict weather patterns, the stock market, the outcomes of important sporting events, and even how long it may take you to get to the mall on Thursday night the week after Thanksgiving. However, every use of data shares one central theme: working with data is storytelling with numbers. Based on those numbers, people tell the story of what was measured to have happened, why it seemed to happen, and what might happen next. Data certainly don’t give us clairvoyance, but they do help us see patterns and use those patterns to predict the next thunderstorm, stock market crash, winner of the Division I Championship, or start of rush hour traffic.

Within education, we use the term “data” for numerical information such as assessment results, attendance frequency, and engagement measurements, but we also use it for categorical information such as student demographics. With data, we can determine where students are in their understanding of learning objectives, why students are where they are, how we can help them get where they need to be, and when they have finally arrived. In other words, data helps us tell and direct the story of student achievement.

You can use this link to obtain some data for yourself! See how ready you are to use data in your own blended classroom.

3.1 Developing a Mastery-based Classroom

To introduce data practices, we will first discuss the concept of mastery-based progression. Many blended classrooms use this approach because blended teaching’s data practices provide a gateway to a successful mastery-based classroom.

3.1.1 What is mastery-based progression?

Mastery-based learning focuses on student performance rather than seat-time to determine how students progress through the curriculum. Students demonstrate mastery of a skill or topic before moving on to a more advanced one. Meanwhile, you maintain a fixed expectation of how students will perform and allow the time required to achieve that level to vary. In other words, learning becomes a constant and time becomes variable. In contrast, time-based progression holds fixed the time spent on a particular outcome and allows the student performance to vary; time is the constant and learning is the variable (see Figure 3.1).

Figure 3.1 Time-based vs. Mastery-Based Progression.23

Definition: Mastery vs. Competency-Based Learning

Mastery-based learning maintains that students should reach a certain level of performance before moving on to the next concept or skill.

Competency-based learning is often used synonymously with mastery-based learning, but also includes the idea that students who come with skills acquired outside of the classroom can demonstrate competence in those skills and move on.

Building Student Skills through Mastery-Based Curriculum

Video 3.1 (2:30) http://bit.ly/btb-v208

What to Look For: Identify the various reasons that students, teachers, parents, administrators, and even politicians like the idea of personalized learning.

Mastery-based progression is better for student learning than time-based progression. However, it requires a skill set that may be new to you: managing a classroom where students are progressing at a variety of different paces. It also requires you to use data more effectively to inform your teaching practices. Student data will help both you and each student know when he or she is ready to advance to the next concept or skill. Good data practices also provide insight into specific student deficiencies so that you can provide targeted interventions to help students overcome those deficiencies.

3.1.2 Creating assessments for mastery-based progression

Good assessments are key to effective learning. They come in many forms and are designed to measure a student’s growth toward the learning objectives. Assessment validity gives us a basis for trusting that an assessment accurately measures what it is intended to measure and is appropriate for the students who take it.

Assessments fall into two major groups: summative and formative. Summative assessments are usually given at the end of a unit, course, or school year and are often created by someone other than you. Formative assessments are typically shorter, more frequent, and diagnostic in purpose; they provide specific guidance to students and to you on what your students still need to learn.

You will develop a wide range of formative assessments for your classroom, some administered online and some in person. Both types are central elements of effective blended teaching. Online and in-person administration have different advantages that you should consider as you plan your instruction. These are detailed in Table 3.1.

Table 3.1 Strengths of different assessment types administered in-person & online.

Type of Assessment | In-Person Advantages | Online Advantages |

Quizzes and exams | Easier to prevent cheating | Individualizes question selection; automated scoring and feedback; multiple attempts possible |

Observations | | |

Live presentations and physical demonstrations | Sensory richness; fewer technology barriers | Flexibility in time and space; time to provide detailed feedback between presentations; can re-watch presentations; management of peer review |

Papers and projects | Peer review may benefit from human connection | Digital submission and collection; online rubrics and gradebook integration; management of peer review |

Portfolios | Allows for physical objects; sensory richness | Portability and share-ability; ability to easily update; can contain dynamic elements |

Discussion participation | Attendance is easy to track, but contribution is more difficult; energy of contribution can be easily felt | Everyone can contribute; quality of contribution can be assessed |

For an assessment to be useful for mastery-based progression, each element needs to be associated with a specific student learning outcome (SLO). Rather than focus on overall scores for a quiz or exam, we will focus on scores at the SLO level. Consider the example in Figure 3.2. This figure shows the exam scores for a class where every student has scored 85% or higher on the exam. Pretty impressive! Are all the students in the class ready to move on to the next unit?

Figure 3.2 Gradebook with unit Exam 1 scores all at 85% or above.24

To answer this question and use this exam for mastery-based progression, you will need to match each item or question in the exam with the SLO that it is measuring, as shown in Figure 3.3.

Figure 3.3 The relationship of items in the unit exam to four different SLOs.25

Figure 3.4 shows the unit exam results organized by SLO. Now, when you look at the exam scores, are all the students ready to move on to the next unit?

Figure 3.4 Gradebook from Figure 3.3 with Exam 1 scores by SLO.26

The boxed areas of Figure 3.4 show that even though all students scored 85% or above on Exam 1, all but one student demonstrated significant weakness in at least one SLO. Moving on to the next unit without helping students overcome these deficiencies could lead to poorer performance in later units.
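The regrouping behind Figures 3.3 and 3.4 is simple arithmetic: add up a student's points on the items mapped to each SLO and divide by the points possible for that SLO. The Python sketch below assumes one point per item and uses a made-up ten-item exam and item-to-SLO map (`ITEM_TO_SLO`); neither is the exam shown in the figures.

```python
from collections import defaultdict

# Hypothetical item-to-SLO map for a ten-item exam (one point per item).
ITEM_TO_SLO = {
    "Q1": "SLO 1", "Q2": "SLO 1", "Q3": "SLO 1",
    "Q4": "SLO 2", "Q5": "SLO 2", "Q6": "SLO 2",
    "Q7": "SLO 3", "Q8": "SLO 3",
    "Q9": "SLO 4", "Q10": "SLO 4",
}


def overall_percent(responses):
    """Overall exam score: percent of all items answered correctly."""
    return 100 * sum(responses.values()) / len(responses)


def percent_by_slo(responses, item_to_slo):
    """Regroup item scores (1 = correct, 0 = incorrect) into a percent per SLO."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for item, score in responses.items():
        slo = item_to_slo[item]
        correct[slo] += score
        total[slo] += 1
    return {slo: 100 * correct[slo] / total[slo] for slo in total}


# A hypothetical student who misses only Q7.
student = {item: 1 for item in ITEM_TO_SLO}
student["Q7"] = 0

print(overall_percent(student))              # 90.0
print(percent_by_slo(student, ITEM_TO_SLO))  # SLO 3 drops to 50.0
```

A 90% overall score hides a 50% score on SLO 3, which is the same kind of pattern the boxed areas of Figure 3.4 reveal.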

3.1.3 Identifying Mastery Thresholds

Mastery gradebooks or trackers are tools that allow you to quickly and easily see how well a student has mastered each SLO. To communicate this clearly, teachers typically use a traffic-light color-coding scheme in the gradebook (see Figure 3.5). In a mastery gradebook, green indicates that a student has achieved mastery on an assessment or SLO, yellow indicates near mastery, and red indicates that the student needs more significant remediation or intervention.

Figure 3.5 The visual representation of whether students have reached established mastery thresholds.27

Creating Mastery Assessments

As you know, assessments can take many forms: quizzes, tests, reports, essays, projects, performances. The possibilities are nearly endless. What makes an assessment a mastery assessment is that it is directly linked to a learning standard. This means the rubric for a project or essay focuses primarily on mastery of the objective and not on things like grammar, presentation length, or format. One way to do this is to create a rubric that divides mastery of the standard into four levels. The four levels of your mastery rubric may include:

- Exceeds Mastery

- Mastery

- Near Mastery

- Remediation

It is important to know what each of these benchmarks looks like for a given standard and what form of assessment can best measure them.

You or your school will need to determine appropriate upper and lower mastery thresholds. There is research to support the idea that the upper mastery level should be set somewhere in the range of 80-95%. Figure 3.6 shows the gradebook from Figure 3.4 as a mastery gradebook with the upper threshold set at 85% and the lower threshold set at 75%.
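Once thresholds are set, assigning a color to each SLO score comes down to two comparisons. The sketch below uses the 85% and 75% thresholds from Figure 3.6 and assumes that a score exactly at a threshold counts as reaching it; your school's rules may differ. The student names and scores are hypothetical.

```python
MASTERY = 85.0       # upper threshold (Figure 3.6)
NEAR_MASTERY = 75.0  # lower threshold (Figure 3.6)


def mastery_level(percent, mastery=MASTERY, near=NEAR_MASTERY):
    """Map an SLO percent to a traffic-light color.

    Assumption: a score equal to a threshold counts as reaching it.
    """
    if percent >= mastery:
        return "green"   # Mastery
    if percent >= near:
        return "yellow"  # Near Mastery
    return "red"         # Remediation


def mastery_tracker(slo_scores):
    """Turn {student: {slo: percent}} into {student: {slo: color}}."""
    return {
        student: {slo: mastery_level(p) for slo, p in scores.items()}
        for student, scores in slo_scores.items()
    }


# Hypothetical per-SLO scores for two students.
scores = {
    "Student A": {"SLO 1": 92.0, "SLO 2": 78.0, "SLO 3": 60.0},
    "Student B": {"SLO 1": 85.0, "SLO 2": 74.9, "SLO 3": 88.0},
}
print(mastery_tracker(scores))
```

Keeping the thresholds as parameters makes it easy to try a stricter or looser upper threshold within the 80-95% range before settling on one.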

Figure 3.6 Example of a mastery tracker for SLOs (Mastery=85%, Near Mastery=75%).28

Competency: I can create formative assessments with mastery-thresholds (3.1)

Challenge 1

Review the items in one of your unit assessments and map each item to the SLO that it is measuring, as in Figure 3.3. Determine appropriate mastery thresholds for each SLO.

Challenge 2

Create a small quiz with 4-5 questions that all focus on assessing one SLO in your curriculum. Determine appropriate mastery thresholds for your quiz. Build the quiz in your learning management system.

Challenge 3

Use section 3.1 of the Blended Teaching Roadmap to determine mastery levels for a standard you use in your classroom and brainstorm mastery-based assessments for that standard (http://bit.ly/BTRoadmap).

3.2 Monitoring Student Growth

Once you have (1) created or acquired assessments, (2) matched assessment items to your SLOs, and (3) set appropriate mastery thresholds, you are ready to begin using your assessments to monitor student engagement and learning. In this section, we will cover how data can be used to monitor student performance and activity or engagement online. Included are a number of examples of data from educational software that help teachers monitor student learning and engagement. Finally, you will learn several strategies for identifying meaningful patterns when analyzing mastery data.

3.2.1 Using Data Dashboards

A data dashboard is a tool that helps you visualize student data in real time. Unlike a printed growth chart, a data dashboard updates its charts as soon as new student data is added to the system. Like a car dashboard, a student data dashboard may include several displays that help you understand patterns in student learning. Figure 3.7 is a simple example of a data dashboard with three pieces of data at the class level. Data dashboards often let you view class-level data and then drill down to individual student-level data by clicking on the class-level charts. This allows you to look for patterns at both the class and student levels.
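The class-level view and the drill-down are two summaries of the same mastery tracker. In the hypothetical sketch below, `class_summary` counts colors per SLO (roughly what a class-level chart shows) and `drill_down` lists the students behind one of those counts (the student-level view). Real dashboards compute this for you; the code only illustrates what the two views contain.

```python
def class_summary(tracker):
    """Class-level view: count green/yellow/red students for each SLO."""
    summary = {}
    for levels in tracker.values():
        for slo, color in levels.items():
            counts = summary.setdefault(slo, {"green": 0, "yellow": 0, "red": 0})
            counts[color] += 1
    return summary


def drill_down(tracker, slo, color):
    """Student-level view: which students make up one count in the summary."""
    return sorted(name for name, levels in tracker.items() if levels.get(slo) == color)


# Hypothetical mastery tracker ({student: {slo: color}}).
tracker = {
    "Student A": {"SLO 1": "green", "SLO 2": "red"},
    "Student B": {"SLO 1": "yellow", "SLO 2": "red"},
    "Student C": {"SLO 1": "green", "SLO 2": "green"},
}

print(class_summary(tracker))
print(drill_down(tracker, "SLO 2", "red"))  # ['Student A', 'Student B']
```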

Figure 3.7 Example of a class-level data dashboard from ImagineLearning.29-30

There are two major categories of data that are typically used to monitor student growth and are therefore visualized in data dashboards (see Table 3.2 for examples):

- Performance data: Data that are direct measures of student learning such as how students have performed on assessments.

- Activity data: Data that are indirect measures that often help explain student learning patterns such as participation, effort, engagement, and activity levels.

Table 3.2 Examples of student performance and activity data.

Example Performance Data Sources | Example Activity Data Sources |

Gradebook | Attendance |

Mastery Gradebook | Participation |

Adaptive Learning | LMS login and activity |

Performance Dashboard | Engagement |

State/National Exams | |

Both types of data are important to understanding the story of student learning and growth.

Mastery gradebooks, with their red, yellow, and green colors, are good examples of simple performance data dashboards. Figure 3.8 shows several examples of software that provide a mastery gradebook view of student performance.

Figure 3.8 A few examples of mastery gradebooks.31-32

Figures 3.9a-c show other examples of the variety of performance data dashboards available in commonly used software programs like Khan Academy,33 Imagine Learning,30 and ALEKS.10

Figure 3.9a Example dashboard from ImagineLearning (1=activity data, 2-3=performance data, 4=performance and activity data).34

Figure 3.9b Example of a Khan Academy Dashboard (1=activity and performance data, 2=performance data, 3=activity and performance data).35

Figure 3.9c Example of an ALEKS Dashboard (1,4,5=performance data; 2,3=activity data).36

In addition to performance data, activity data gives you insight into how your students are spending (or not spending) their learning time. At the most basic level, this looks like attendance and participation data (see Figure 3.10). This kind of data may help explain performance levels for a student who is regularly missing school, who is frequently pulled out of class during a particular time of day, or who is not submitting assignments.

Figure 3.10 Attendance data at the class-level and student-level.37

In addition to basic attendance data, most LMSs and adaptive software programs also provide student activity data that can help you understand how students are using their time. For example, this data may allow you to see how often a student has logged into the software or how much time the student spent on a particular learning task. Figure 3.11 shows examples of dashboards with information about logins, time spent on a particular topic, time spent watching instructional videos, and the number of attempts to answer assessment questions.
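Activity data is most useful when read alongside performance data. The sketch below is one hypothetical way to do that: for each student below near mastery, it checks whether low activity might explain the score. The record fields and the cutoffs (`min_logins`, `min_minutes`) are assumptions for illustration, not values from any particular LMS; the 75% default is the near-mastery threshold from Figure 3.6.

```python
from dataclasses import dataclass


@dataclass
class StudentRecord:
    name: str
    slo_percent: float     # performance data (e.g., from a mastery gradebook)
    logins_this_week: int  # activity data (e.g., from an LMS access report)
    minutes_on_task: int   # activity data (e.g., from a time-on-task report)


def explain_low_performance(records, near_mastery=75.0, min_logins=3, min_minutes=60):
    """Suggest a first hypothesis for each student below near mastery."""
    notes = {}
    for r in records:
        if r.slo_percent >= near_mastery:
            continue  # not a concern for this SLO
        if r.logins_this_week < min_logins or r.minutes_on_task < min_minutes:
            notes[r.name] = "low activity: check attendance and engagement"
        else:
            notes[r.name] = "active but struggling: check prerequisites and materials"
    return notes


records = [
    StudentRecord("Student A", 60.0, 1, 20),
    StudentRecord("Student B", 65.0, 5, 180),
    StudentRecord("Student C", 90.0, 0, 0),
]
print(explain_low_performance(records))
```

The output is a starting question for a conversation with the student, not a diagnosis.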

Figure 3.11a Examples of activity data from PowerSchool SIS.38

Figure 3.11b Examples of activity data from Khan Academy illustrating the range of topics that students are engaging with.39

Figure 3.11c Example of activity data from Khan Academy illustrating the time spent watching videos and doing skill activities.40

Figure 3.11d Examples of activity data (whole class activity over time and individual student activity) from the Canvas LMS.41

3.2.2 Identifying Patterns in Student Data

While data dashboards are helpful for presenting large amounts of student data in visual form, you still must develop the skill of interpreting student data and using it to improve student learning. Interpreting patterns in student data is the process of reading and trying to understand the story that the numbers tell about a student’s learning growth without having all of the narrative details.

For example, can you tell by looking at the dashboards in Figure 3.8 which outcomes each student is struggling with the most? Which data dashboard would you look at to see whether attendance has been a barrier to a student’s progress? What story does the data in the Khan Academy dashboard (Figure 3.11a) tell about where the students are struggling? How many successful and unsuccessful attempts have been made on the problem set? What might you infer from this data about what interventions the student needs?

There are no absolute rules to use when identifying patterns in student data, but there are many proven practices that can help you. In this section, we will provide you with some places to start your data analysis as well as some rules of thumb that you can use as guidelines for interpreting information.

3.2.3 The AAA Process

Tables 3.3-3.5 show a generalized process for working effectively with data: the AAA Process. You always begin by identifying a question that you want to ASK of the data. This initial question is often focused on understanding patterns related to an individual, a group of students, or even the instruction itself. The second step of the process is to ANALYZE the data for patterns that can help you answer the question you have asked. Finally, you need to ACT on what you have found. This typically entails adjusting the student learning activities, the instruction, or the assessments used to gather data.

Table 3.3 The AAA Process -- Step 1 ASK

Step 1 - ASK |

Individual Student | Small Group or Class | Instructional Materials |

1. What progress has the student made towards their learning goals? 2. What SLO is the student struggling with? 3. What has the student done to master the SLO? | 1. Is there a group of students that need help with the same SLO? 2. Is there a group of students I can ask to work together? 3. Are there outcomes that the whole class needs help with? | 1. Is the assessment accurately measuring the SLO? 2. Is the learning activity missing elements needed to help students achieve the SLO? |

Table 3.4 The AAA Process - Step 2 ANALYZE

Step 2 - ANALYZE |

Individual Student | Small Group or Class | Instructional Materials |

Some things to look for… 1. Performance patterns: SLO mastery completion. 2. Activity patterns: off-task behavior or excessive time on one SLO might indicate why performance is low. 3. Possible causes: low effort, absences, missing prerequisite knowledge, etc. | Some things to look for… 1. Actionable groups: homogeneous/heterogeneous groups, groups in remediation. 2. Mastery movement: students coming out of remediation. 3. LMS activity. | Some things to look for… 1. Assessment functioning: items that most students are missing. 2. Activity use: resources being used or not used. 3. Instructional gaps: the activity didn’t cover the concept, had misleading information, or didn’t help students reach mastery. |

Table 3.5 The AAA Process - Step 3 ACT

Step 3 - ACT |

Individual Student | Small Group or Class | Instructional Materials |

1. Work with student to review and adjust goals. 2. Provide targeted remediation. 3. Recommend targeted materials/activities. | 1. Provide small group direct instruction. 2. Establish learning groups or peer tutoring. 3. Recommend targeted materials/activities. | 1. Improve assessments. 2. Improve learning materials. |
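Two of the small-group ASK questions in Table 3.3 ("Is there a group of students that need help with the same SLO?" and "Are there outcomes that the whole class needs help with?") can be answered directly from a mastery tracker. The hypothetical sketch below groups students who share an SLO and a below-mastery color, and lists SLOs where more than half the class is below mastery; the 50% cutoff is an assumption.

```python
def actionable_groups(tracker, colors=("red", "yellow")):
    """Group students who share the same SLO and the same below-mastery color."""
    groups = {}
    for name, levels in tracker.items():
        for slo, color in levels.items():
            if color in colors:
                groups.setdefault((slo, color), []).append(name)
    return {key: sorted(names) for key, names in sorted(groups.items())}


def whole_class_needs(tracker, share=0.5):
    """SLOs where more than `share` of the class is below mastery."""
    slos = sorted({slo for levels in tracker.values() for slo in levels})
    needs = []
    for slo in slos:
        below = sum(1 for levels in tracker.values() if levels.get(slo) in ("yellow", "red"))
        if below / len(tracker) > share:
            needs.append(slo)
    return needs


# Hypothetical mastery tracker ({student: {slo: color}}).
tracker = {
    "Student A": {"SLO 1": "green", "SLO 2": "red", "SLO 3": "yellow"},
    "Student B": {"SLO 1": "red", "SLO 2": "red", "SLO 3": "green"},
    "Student C": {"SLO 1": "green", "SLO 2": "yellow", "SLO 3": "green"},
}

for (slo, color), names in actionable_groups(tracker).items():
    print(slo, color, names)
print(whole_class_needs(tracker))  # ['SLO 2']
```

Each printed group is a candidate for one of the ACT options in Table 3.5, such as small group direct instruction.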

Ultimately, you will want to become familiar with the data dashboards that are available to you in your classroom. You will need to know where to find performance and activity data for your students. In the section below, we will present some data dashboards from several different sources and typical questions that could be ASKED of that data. Test yourself to see if you can use the data in the scenarios below to ANALYZE patterns that can answer the questions posed. If you cannot answer the questions with the existing data dashboard, identify what data you would need to answer the questions.

Scenario 1: MasteryConnect Tracker Data

Figure 3.12a shows the mastery tracker with five student learning outcomes (SLOs) for a unit. If you were the teacher, what questions would you ASK of the data? What patterns do you see as you ANALYZE the data? What are possibilities for how you would ACT on the data? Table 3.6 shows a possible response to these questions.

Figure 3.12a MasteryConnect data - How would you use this data to help students progress?42

Table 3.6 Example analysis of data in Figure 3.12a

ASK | ANALYZE | ACT |

Which students or groups of students need remediation around which SLOs? | A group of students needs help with 6.5; two students need help with 6.1; Victor is struggling with the whole unit. | Begin with a 1-1 tutoring session with Victor on 6.1-6.4, and pull in Sirius for tutoring on 6.1; create small group direct instruction for students needing remediation or at near mastery on 6.5. |

Which students are close to achieving mastery? Which students have achieved mastery and could help peers? | Many students are at mastery (green) or near mastery (yellow) for 6.1-6.4. | Option 1: provide online practice activities around SLOs for near mastery students; Option 2: pair mastery and near mastery students to practice together on selected SLOs. |
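Option 2 in Table 3.6 (pairing mastery and near-mastery students) can be drafted automatically before you adjust pairs by hand. The sketch below is a hypothetical round-robin pairing on one SLO: each near-mastery (yellow) student is matched with a mastery (green) peer, and helpers are reused when there are more learners than helpers. Students in remediation (red) are left out because Table 3.6 routes them to tutoring or small group instruction instead. Names and data are made up.

```python
def pair_for_practice(tracker, slo):
    """Pair each near-mastery student with a mastery peer on one SLO (round-robin)."""
    helpers = sorted(n for n, levels in tracker.items() if levels.get(slo) == "green")
    learners = sorted(n for n, levels in tracker.items() if levels.get(slo) == "yellow")
    if not helpers:
        # No peer at mastery: fall back to online practice (Option 1) or the teacher.
        return [(learner, None) for learner in learners]
    return [(learner, helpers[i % len(helpers)]) for i, learner in enumerate(learners)]


# Hypothetical tracker for one SLO labeled "6.2".
tracker = {
    "Student A": {"6.2": "green"},
    "Student B": {"6.2": "yellow"},
    "Student C": {"6.2": "yellow"},
    "Student D": {"6.2": "red"},
    "Student E": {"6.2": "green"},
}
print(pair_for_practice(tracker, "6.2"))
# [('Student B', 'Student A'), ('Student C', 'Student E')]
```

Round-robin keeps the load on any one helper even; you would still review the pairs for personality and schedule fit.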

Scenario 2: Khan Academy Data

Figure 3.12b shows a Khan Academy dashboard for Student 3, who you have noticed is struggling with the unit on geometry. Figure 3.12c shows two data screens where you have drilled down further to see where the student’s activity has been focused and how the student performed in the first troublesome skill set. What patterns do you notice in each of these screens, and what is the story that the data is telling you? Table 3.7 shows a possible response to these questions.

Figure 3.12b Khan Academy data - How would you use this data to help students progress?43

Figure 3.12c Khan Academy data - How would you use this data to help students progress?44

Table 3.7 Example analysis of data in Figures 3.12b-c

ASK | ANALYZE | ACT |

What skills does the student need help with? | (#1) The student has spent 100 minutes and is listed as struggling in 3 skills. (#2) The student has had minimal practice in 4 additional areas. | Set a goal with the student to work on 1-2 skills at a time until mastered and not to work on a dozen skills simultaneously. |

Why is the student struggling? | (#2 and #3) The student is jumping all around, spending 2-6 minutes in a dozen different skills. (#4) Looks like the student spent time trying to figure out Q#1-2 and even watched a little instructional video, then probably just guessed on Q#3-5. | Address persistence issue with instructional videos—observe student watching videos to see if language level is too high for comprehension. |
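The "jumping all around" pattern in Table 3.7 can be summarized from an activity log of skills and minutes. The sketch below is hypothetical: it assumes the log is a list of `(skill, minutes)` entries you have copied from a dashboard, and it flags a student as spreading effort thin when they touched more than `max_skills` skills with under `min_minutes` per skill on average. Both cutoffs are assumptions to adjust.

```python
def practice_pattern(log, max_skills=3, min_minutes=10):
    """Summarize one student's (skill, minutes) activity entries."""
    minutes = {}
    for skill, mins in log:
        minutes[skill] = minutes.get(skill, 0) + mins
    if not minutes:
        return {"skills": 0, "avg_minutes": 0.0, "spread_thin": False}
    avg = sum(minutes.values()) / len(minutes)
    spread_thin = len(minutes) > max_skills and avg < min_minutes
    return {"skills": len(minutes), "avg_minutes": round(avg, 1), "spread_thin": spread_thin}


# Hypothetical log: a dozen skills, 2-6 minutes each.
log = [(f"Skill {i}", 2 + i % 5) for i in range(12)]
print(practice_pattern(log))
```

A `spread_thin` result supports the ACT step in Table 3.7: setting a goal to work on one or two skills at a time.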

Scenario 3: MasteryConnect Tracker Data

You are working to help students achieve mastery on SLO RL.6.5. Early in the class, you observed how your students were performing and only one student was at mastery level (see Figure 3.12d). In preparation for the outcome quiz, you conducted another observation during some class activities in which you saw that most of the class was at mastery or near mastery (see Figure 3.12d). You were surprised that when students took the assessment, nobody demonstrated mastery. You click on the assessment report to see if it can help you to better understand (see Figure 3.12e). What questions do you ASK of the data? What patterns do you see as you ANALYZE the data available? Table 3.8 shows a possible response to these questions.

Figure 3.12d MasteryConnect assessment data - What patterns do you see?45

Figure 3.12e MasteryConnect item analysis data - How would you use this data?46

Table 3.8 Example analysis of data in Figures 3.12d-e

ASK | ANALYZE | ACT |

How are students progressing from Observation 1 to the final assessment? | Observation 1 shows that most students haven’t mastered the SLO; Observation 2 shows that most of the students have progressed to mastery or near mastery; the final assessment shows students regressing away from mastery. | Investigate questions #3 and #5 to see if the answers have been mis-keyed. |

Why are so many students not mastering the SLO final assessment? Is there possibly something wrong with the assessment or students’ preparation for the assessment? | The difference between scores for Observation 2 and the final assessment suggests the following possibilities: students have regressed; there are measurement differences between the observations and the assessment; or (#1) the item scoring for the final assessment shows two items most students are missing, which could be mis-keyed answers or dimensions of the SLO not observed in Observation 2. | If the answers are correct, evaluate the questions to see if they match the SLO and if the instruction (including materials) needs to be updated to address the deficiency. |
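The item check in Table 3.8 is a standard item analysis: compute the percent of students who answered each item correctly and look at which answer was most popular. When most students choose the same wrong option, a mis-keyed answer is a likely first suspect, though a low score alone cannot tell you whether the key, the item, or the instruction is at fault. The sketch below assumes multiple-choice items, an answer key written as a string with one letter per item, and a hypothetical 50% review cutoff; the responses are made up.

```python
from collections import Counter


def item_analysis(answer_key, responses, flag_below=50.0):
    """Per-item percent correct, most common answer, and review flags.

    answer_key: e.g. "ACBDA" (one letter per item).
    responses:  {student: "ACDDC", ...}, same item order as the key.
    """
    if not responses:
        return {}
    report = {}
    for i, key in enumerate(answer_key):
        chosen = [answers[i] for answers in responses.values()]
        percent = 100 * sum(c == key for c in chosen) / len(chosen)
        most_common = Counter(chosen).most_common(1)[0][0]
        report[f"Q{i + 1}"] = {
            "percent_correct": percent,
            "most_common": most_common,
            "review": percent < flag_below,
            # Most students agreeing on a different answer hints at a mis-key.
            "possible_miskey": percent < flag_below and most_common != key,
        }
    return report


responses = {
    "Student A": "ACDDC",
    "Student B": "ACDDC",
    "Student C": "ABDDC",
    "Student D": "ACBDA",
}
for item, row in item_analysis("ACBDA", responses).items():
    print(item, row)
```

Here Q3 and Q5 are flagged, mirroring the kind of two-item pattern described in Table 3.8; the next step is still to read those items and their key by hand.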

Competency: I can identify important patterns in student performance data (3.2)

Challenge 1

Identify the data dashboards available to you in the LMS or in the software in your classroom. What are some of the questions you can find answers to in the data you have access to?

Challenge 2

Create a mastery tracker for one curricular unit that includes formative and summative assessments that align with unit learning outcomes.

Challenge 3

Use section 3.2 of the Blended Teaching Roadmap to determine what data you have access to in your classroom. How do you plan to use both this data and a mastery gradebook to improve student learning and to inform classroom practices? (http://bit.ly/BTRoadmap).

3.3 Using Data to Improve Learning

Monitoring student performance and activity is pointless if we don’t plan to ACT on what we have learned or if we provide the same instruction to everyone regardless of the story the data tells. In this section, you will learn about how you can use student data to improve student learning by:

- Informing student learning goals

- Improving learning activities

- Improving assessments and learning materials

3.3.1 Informing Student Learning Goals

In the next chapter, we will discuss the importance of allowing students to co-create their learning experiences. Part of this co-creation is sharing ownership over student data. In the past, performance data has been either something that you see in the grade book or something that students create during activities that are not reported in the grade book. However, when trends in data are shared with students and student-created data is shared with you, you and your students can work together to co-create learning. For this to occur, students need not only to have access to their own data but also to understand how to read it and recognize its trends.

Helping Students Understand Data and Set Goals

Video 3.4 (4:50) http://bit.ly/btb-v275

What to Look For: Observe the different kinds of data this teacher and student discuss, and how each kind of data is used to set different kinds of goals (both academic and behavioral).

Setting individual learning goals with a student requires dedicating attention to that student’s interests and performance data. Once you understand the story a student’s data tells, you can conference with him or her to determine which learning activities, assignments, projects, and/or assessments are the best match. Consider the data in Figure 3.13.

Figure 3.13 MasteryConnect data demonstrating need for individualized student goals.47

Each student is going to have different individual goals. Hannah doesn’t need to work on the first standard, RL6.1, nor does Lavender. Sirius, however, does need help. He will need to meet with you to get that help. Will also struggles, but he could receive assistance from resources online or from students who have already mastered RL6.1.

Each of these students will need to set a different learning goal. Hannah probably won’t have goals for RL6.1 or RL6.4 because she has mastered them. Instead, you will need to look at her data for the standards that are near mastery to determine what she needs help with. Once you understand the help that she needs, you can conference with her to determine the activities she will need to complete to fill the gaps in her understanding. You can also find the projects or assessments she will use to illustrate that the gaps have been filled. The last goal you will set with her during this conference would be to establish a timeline for completing her goals. The next chapter will talk more about creating personalized goals for students that need more than data to guide their goals.

Individual Goal Setting Conference

Here are the essential data talking points in an individual goal setting conference.

- Where is the student currently?

- Where does the student need to go?

- What can help the student get there?

- How can the student illustrate that he or she is there?

- How quickly can the student complete the plan?

- When does the student need to illustrate increased performance?

In addition to helping students determine individual goals, you can work with students to set group goals. At times, students benefit from working together in groups. Using the data for Hannah, Sirius, Lavender, and Will, we can imagine ways in which groups of students may want to work together (see Figure 3.13).

Based on this data, you might choose to have all four students work together on RL6.2 and RL6.3. This would allow Sirius to help Hannah, Lavender, and Will with RL6.2, and allow Lavender and Will to help Sirius and Hannah with RL6.3. Once the students have agreed to work together on these standards, you could conference with the entire group to establish the same kind of plan you would for an individual student.

Group Goal Setting Conference

Here are the essential data talking points in a group goal setting conference.

- Where is each student currently?

- Where does each student need to go?

- How can the group members help each other get there?

- What instructional activities or resources can help the students get there?

- What project can let students demonstrate that they are all where they need to be?

- How quickly can the group complete the project?

- When does the group need to illustrate increased performance?

- When will the group work on various aspects of their project(s)?

- How will group contributions be reported and observed?

When meeting with groups, you need to make sure that all students understand their responsibilities within the group. In a group where everyone has the same level of mastery, this is relatively easy. Each member is responsible for learning and then contributing their newly acquired knowledge to the group’s work. When students have different levels of understanding among them, negotiating group roles can be more complicated. Let’s look at the data for Hannah, Sirius, Lavender, and Will again (see Figure 3.13).

Student Groupings

There are essentially three strategies that can be used when grouping students based on performance data:

Homogeneous groups consist of students who are all at the same level. This can include students who are all at mastery and will be working on enrichment activities, students who are near mastery and need to work on the same activities together to get to mastery, and students who are in remediation and need to meet with you.

Heterogeneous groups are made up of students who are at different levels. This usually includes a mixture of students who are at mastery and near mastery working together to improve their understanding of the materials. The mastery students learn the material better by teaching the near-mastery students, and the near-mastery students benefit from the small-group tutoring.

Mixed groups combine homogeneous and heterogeneous groups to personalize instruction. This is common in station rotations where some groups may work together, while other groups complete online learning activities or meet with you.

If these students were working on RL6.2 and RL6.3 together, Sirius would guide group work for the standard that he has already mastered (RL6.2). He should not, however, complete the project for this standard himself. He should focus primarily on the standard that he and Hannah have nearly mastered (RL6.3). His project with Hannah would be guided by Lavender and Will. In the group conference, you and the students would need to decide what Hannah would be doing, since she would have to focus on completing both projects. The group would also have to determine how they would demonstrate understanding of the standards once the projects are finished. Would there be individual assessments? Would the group present the project as a whole? Would each group member present the project individually? Some members of the group might have to complete multiple assessment strategies if one is not enough to fully demonstrate understanding.
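For teachers who export mastery-tracker data to a spreadsheet, the homogeneous and heterogeneous grouping strategies above can be sketched in a few lines of Python. This is a minimal, hypothetical sketch: the 80% and 60% cut-offs, the `mastery_level`, `homogeneous_groups`, and `heterogeneous_pairs` helpers, and the student scores are all invented for illustration; they are not taken from Figure 3.13 or from any MasteryConnect feature. The code sorts students into the three color-coded levels for one standard, then pairs each near-mastery (yellow) student with a classmate at mastery (green), leaving students in remediation (red) for time with you.

```python
from collections import defaultdict

# Hypothetical cut-offs (percent correct). The chapter does not prescribe
# these values; substitute the thresholds your mastery tracker uses.
MASTERY = 80
NEAR_MASTERY = 60


def mastery_level(score: float) -> str:
    """Map a percent score to one of the three color-coded levels."""
    if score >= MASTERY:
        return "mastery"  # green
    if score >= NEAR_MASTERY:
        return "near mastery"  # yellow
    return "remediation"  # red


def homogeneous_groups(scores: dict[str, dict[str, float]], standard: str) -> dict[str, list[str]]:
    """Group students who are at the same level on one standard."""
    groups = defaultdict(list)
    for student, by_standard in scores.items():
        if standard in by_standard:
            groups[mastery_level(by_standard[standard])].append(student)
    return dict(groups)


def heterogeneous_pairs(scores: dict[str, dict[str, float]], standard: str) -> list[tuple[str, str]]:
    """Pair each near-mastery student with a peer tutor who is at mastery.

    Students in remediation are deliberately left out: they should meet with the teacher.
    """
    groups = homogeneous_groups(scores, standard)
    tutors = groups.get("mastery", [])
    learners = groups.get("near mastery", [])
    if not tutors:
        return []
    # Cycle through the tutors so no single student carries every learner.
    return [(learner, tutors[i % len(tutors)]) for i, learner in enumerate(learners)]


# Invented example scores for four hypothetical students.
scores = {
    "Student A": {"RL6.1": 92, "RL6.2": 55},
    "Student B": {"RL6.1": 48, "RL6.2": 85},
    "Student C": {"RL6.1": 70, "RL6.2": 65},
    "Student D": {"RL6.1": 64, "RL6.2": 90},
}

print(homogeneous_groups(scores, "RL6.1"))
print(heterogeneous_pairs(scores, "RL6.2"))
```

The script only proposes groupings. You would still check each suggested pair against what you know about the students, such as whether the tutor is a good behavioral match for the learner.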

For Secondary Teachers

Conferencing & Goal Setting

Video 3.5 (2:24) http://bit.ly/btb-v203

As a secondary teacher you are focused not only on helping students achieve learning standards, but also on helping students feel college and career ready. Your school has the added responsibility of helping students to set meta-goals like getting into a private or state university, going into the military, or obtaining a job or a union membership.

College and career goals should not stop there, however. Faculty and staff need to help students see how specific classes will help them meet their larger goals. If you mentor students in this regard, you will need to help them set achievement, pacing, and time goals for these classes, so that their meta-goals can inform their day-to-day goals. This can also be accomplished through your school's student mentoring program, in which selected teachers mentor specific groups of students; through one-on-one meetings with counselors; or through meetings with appointed goal-setting support staff. These mentors, counselors, and staff help answer the question, “Where is the student currently?”

Check out these videos to see how one school is making this work. (Your school might not be ready for this kind of systemic transformation yet, but it is something you could start working towards by following the advice in this book.)

What to Look For: These teachers list several strategies for helping students know where they are and where they need to go. Look for strategies that you could use in, or modify for, your classroom.

3.3.2 Improving and Informing Learning Activities

It is probably most common for you to use patterns in student data to inform the nature of future student learning activities. In your analysis of data patterns, you will identify individual students or groups of students who have trouble with specific SLOs. Often the recommended activities will fall into one of three categories: student-teacher activities, student-student activities, and student-content activities.

Student-Teacher Activities involve the most valuable and limited resource within the classroom: you, the teacher. In a blended classroom you want to use your time and skills to their maximum potential. This means doing what you do best, which often involves (1) diagnosing and remediating specific learning challenges and (2) encouraging and motivating your students. Among other things, you encourage and motivate students when setting and following up on student learning goals (see 3.3.1). When you identify an individual student or small group of students who need remediation (red) in a mastery gradebook, it is often effective to conduct a one-on-one or small-group tutoring session, because you can diagnose more nuanced challenges than software can.

Multiple Levels of Student Support

Video 3.8 (1:30) http://bit.ly/btb-v381

What to Look For: Listen to how teachers have a plan for helping students progress from getting help from content, then from peers, then finally from the teacher.

You can also use data like that shown in Figure 3.14a to identify specific problems that your student or group of students is struggling with. The specific problems that were missed might be selected and used in a one-to-one or small group tutoring session.

What to Look For: Listen to how teachers develop independent learning rotations with “teacher time” for students below 50% mastery.

Figure 3.14a. Khan Academy data that allows the teacher to see which questions were missed.48

What to Look For: Notice the relationship between students in this peer tutoring example.

What to Look For: Listen to how the teachers decide how to group students based on data.

Student-Student Activities involve options such as peer tutoring, small group peer teaching, and collaborative projects. Peer tutoring and small group teaching activities work best when students are near mastery (yellow) but probably do not work as well when they are in need of remediation (red). These types of student-student activities not only help the student who is near mastery but also help the peer tutor develop 21st Century Skills (see the 4 Cs in section 1.0), such as communication and collaboration. Peer tutoring or small group work can even keep students on-task if they are paired with the right peer. You can then use student data to select an appropriate peer tutor who has already mastered the SLO and who is well behaved.

What to Look For: Observe how the teachers use student performance data to decide how to manage daily small group instruction.

Student-Content Activities are activities students complete from a teacher-curated playlist or activities recommended by an adaptive learning software program (see 4.4.1 for more on creating playlists). Some software makes it easy for you to recommend learning activities based on student needs. For example, Figure 3.14b shows how Khan Academy allows you to manually assign practice problems to students in each skill area based on the student’s needs.

Figure 3.14b Khan Academy allows instructors to assign practice items to individual students or groups of students.49

Recommending curated student-content activities is a particularly good option when you already know that the student's weakness matches existing activities. It can also be a good option for students who are near mastery (yellow) and just need a little more practice to achieve mastery.

3.3.3 Improving and Informing Assessments and Learning Materials

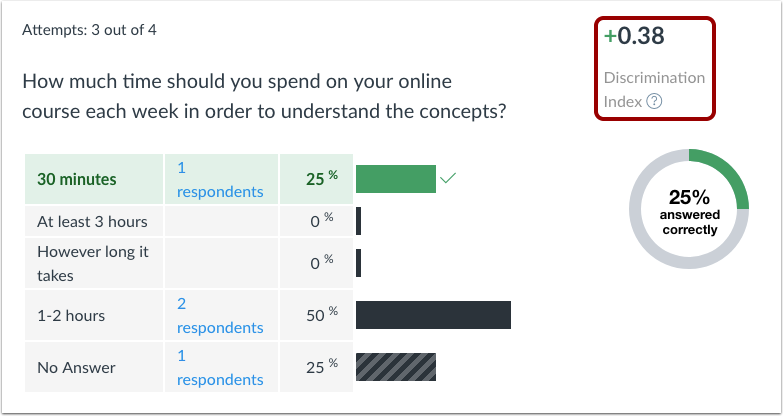

Identifying patterns in student data can lead you to recommend different kinds of learning activities, as outlined in section 3.3.2. As shown in Scenario 3 (Figures 3.12d-e), data might also lead you to discover weaknesses in your assessments or learning materials. Assessment data that shows what students are missing and what they are getting correct can also give you clues about concepts that might be missing or unclear in the instructional materials. Figure 3.15 shows an example of data from a simple quiz question in Canvas. This data reveals that most students did not answer the question correctly. Because of this, you may need to update either the question itself or the instructional materials.

Figure 3.15 Canvas student data by quiz item.50

Some ways that you might act to improve assessments or learning materials based on data include the following:

- Altering items;

- Adding/eliminating questions from question banks;

- Redesigning rubrics;

- Finding better learning resources to use in activities or playlists;

- Clarifying written instructions.
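The item-level view in Figure 3.15 comes down to two numbers per question: how many students answered correctly, and which wrong answer was most popular. The sketch below computes both from exported responses so you can decide which items to alter or which materials to clarify. It is an illustration under assumptions: the answer key, the responses, the 50% review cut-off, and the `item_report` and `items_to_review` helpers are hypothetical, and the code does not use any Canvas API.

```python
from collections import Counter

# Hypothetical answer key and responses (item ID -> chosen option), invented
# for illustration; these are not the data shown in Figure 3.15.
ANSWER_KEY = {"Q1": "B", "Q2": "D", "Q3": "A"}
RESPONSES = [
    {"Q1": "B", "Q2": "A", "Q3": "A"},
    {"Q1": "B", "Q2": "A", "Q3": "C"},
    {"Q1": "C", "Q2": "D", "Q3": "A"},
    {"Q1": "B", "Q2": "A", "Q3": "A"},
]

# Assumed cut-off: flag an item when fewer than half the students answer correctly.
REVIEW_THRESHOLD = 0.5


def item_report(answer_key, responses):
    """Return (proportion correct, most common wrong answer) for each item."""
    report = {}
    for item, correct in answer_key.items():
        answers = [r[item] for r in responses if item in r]
        if not answers:
            continue  # nobody answered this item, so there is nothing to report
        p_correct = sum(a == correct for a in answers) / len(answers)
        wrong = Counter(a for a in answers if a != correct)
        most_common_wrong = wrong.most_common(1)[0][0] if wrong else None
        report[item] = (p_correct, most_common_wrong)
    return report


def items_to_review(report, threshold=REVIEW_THRESHOLD):
    """List the items whose proportion correct falls below the threshold."""
    return [item for item, (p, _) in report.items() if p < threshold]


report = item_report(ANSWER_KEY, RESPONSES)
for item, (p, common_wrong) in report.items():
    print(f"{item}: {p:.0%} correct, most common wrong answer: {common_wrong}")
print("Review:", items_to_review(report))
```

With these invented responses, Q2 is flagged and most students chose option A. A low percent correct does not tell you by itself whether the item or the instruction is at fault, but a single popular wrong answer is a useful clue to check against both the question wording and the learning materials.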

Competency: I can use data to recommend focused learning activities for students (3.3) and to evaluate and improve assessments and instructional materials (3.3).

Challenge 1

Review a previous assessment that you have given and gather some data about student performance. Which items tested well, and which did not test well?

Challenge 2

Use assessment data for one student from either a class assessment or a state standardized assessment to brainstorm some goals that you might encourage that student to set.

Challenge 3

Use section 3.3 of the Blended Teaching Roadmap to plan the grouping strategy that you will use with your students as well as the activities that students can complete at each level of mastery (remediation, near mastery, mastery, and exceeding mastery). (http://bit.ly/BTRoadmap)

Check your Understanding

Go for the Badge

Complete Section 3 of the Blended Teaching Roadmap to plan and outline your strategies for gathering and using student data to improve student mastery in your classroom. (http://bit.ly/MyBTRoadMap-Ch3) (See two examples of a completed Roadmap in Appendix C.)