Suppose you are a veteran science teacher. After years of development, you've finally done it. You have created the best high school science education curriculum ever devised!

The curriculum takes three years to complete. You are now using it with all of the students in your school and have found that they have been performing much better on standardized tests than their peers did in previous years, and many more of them are being accepted to elite colleges.

Wanting to get funding to implement the program in other schools across the state, you approach a program officer for a major research organization. She suggests that your results are very promising but that in order to be competitive for their large grants, you will need to prove more reliably that the curriculum is having the effect that you are claiming and that it is not due to chance or some other factor.

"Can you try it out with some other schools?" she asks.

"No," you reply, "not without more funding."

"Well," she responds, "it seems then that you'll need to do some form of a controlled trial at your current school, where you randomly assign half of the students to use the experimental curriculum and the other half to use the old curriculum. Then, you should be able to prove with greater certainty that it's the curriculum having the effect, and we can talk more optimistically about funding opportunities."

"But," you begin to carefully explain, "the curriculum is an entire package. It takes three years to complete. That means if we don't do it with half the students, they probably won't perform as well on standardized tests and won't get into good colleges at the same rate as their peers. I don't think I can do that to them."

She furrows her brow and nods understandingly. "Yes, that would be a difficult choice. But if you want to really help more kids and make this thing big, then you're going to need to be a bit more scientific in how you do this. Some kids might fall through the cracks those first few years, but just think of all the kids you'll be able to help for years to come once you have the evidence you need!"

Key Terms

- Consequentialism: An approach to ethics that holds that the morality of an action should be determined by its effects.

- Contractarianism: A non-normative approach to ethics that holds that what is held to be right and good is determined merely by social contracts shared between people.

- Deontology: An approach to ethics that holds that the morality of an action should be determined by its duty-bound adherence to particular laws or norms of behavior.

- Ethics: The branch of knowledge that deals with rightness, goodness, and morality.

- Moral Relativism: A non-normative approach to ethics that holds that what is right and good is determined only by reference to individual or cultural norms or contexts.

- Normative Ethics: Approaches to ethics that assume some level of universalizability of moral action (across cultures or contexts).

- Utilitarianism: The consequentialist stance that moral behavior consists of doing what will produce the greatest benefit, typically understood as the greatest good for the greatest number of people.

- Virtue Ethics: An approach to ethics that holds that the morality of an action should be determined by its relationship to the moral agent's development or expression of fundamental virtues.

Now, pause. Ask yourself honestly: If you had to make this choice and there were no other options, what would you do? Would you jeopardize the futures of a few dozen students in order to potentially improve the lives of thousands? Or would you continue the curriculum with the few hundred knowing that doing so will likely prevent you from ever being able to have any impact beyond the walls of your school?

Though fictional, this story represents one of the many very real ethical dilemmas that education researchers must face. These dilemmas are shaped by competing values, competing beliefs about what is good, and competing needs of the self, other individuals, and society.

In this scenario, would it be good to continue providing the curriculum to all of your students? Of course. Would it also be good to do the research necessary to get funding to provide it to more students? Also, yes. But, if you cannot do both, then which good is greater, preeminent, or better? (I could further complicate the issue and point out that it also probably wouldn't be bad for you to be able to monetarily benefit from selling your curriculum at scale either, but we'll ignore that for now.)

Ethics, or the study of rightness, goodness, and morality, exists to help us determine which actions are right and wrong, under what circumstances, and why. Like everyone, researchers must grapple with the ethics of their decisions as individual people, but they must also grapple with the ethics of their decisions as researchers in a field where there are many opportunities to do good or harm, to act rightly or to act wrongly. In this chapter, I'll provide an overview of the three dominant approaches to normative ethics, which will serve as a starting point for analyzing the morality of particular research behaviors and the enactment of research agendas through differing paradigms.

Relativistic vs. Normative Ethics

In the example above, we might argue that the science teacher's withholding the curriculum from a smaller group of students would be immoral, because intentionally withholding valuable educational experiences from any student is, as a rule, never acceptable. Or we could argue in the opposite direction that the potential benefit of helping more students by performing the experiment justifies any negative outcomes that the smaller group might experience. Or one person might claim that withholding the curriculum would betray the teacher's relationship with the students and their role as a teacher, while another might argue that refusing to run the trial would betray the teacher's role as a researcher.

This is an example of a moral dilemma, or a situation in which the purported morality of an action is called into question because the action involves a conflict between opposing values or requirements (McConnell, 2018). In this case, the opposing requirements faced by the teacher would be obligations to a smaller group of current students versus a larger group of potential students.

One might attempt to avoid the dilemma altogether by arguing for a stance of moral relativism or contractarianism, explaining that the moral choice for this teacher would be determined by their cultural context, individual beliefs, time, and place. Or in other words, there is no moral dilemma here, because whatever the teacher chose to do would simply be a matter of preference, cultural norms, habituation, etc. Such relativistic approaches to morality are differentiated from normative approaches to morality, which seek to universalize moral requirements in some manner.

Relativistic approaches to ethics are common in popular culture today and are often seen as "harbingers of tolerance, open-mindedness, and anti-authoritarianism" (Baghramian, 2015). At some level, non-normative approaches to morality are appealing, because they allow us to iconoclastically discount taboos and mundane behaviors that might be considered immoral in some cultures but not in others (such as wearing a hat indoors or extending one's left hand to a stranger for a handshake). Yet, relativistic approaches to ethics are ultimately untenable for researchers, because the same relativistic argument that could be used to claim that there is nothing morally wrong with refusing to refer to an elder by their appropriate title could also be used to justify direct harm to individuals or to claim that Nazi war crimes committed in the name of research were merely reasonable expressions of the time and context.

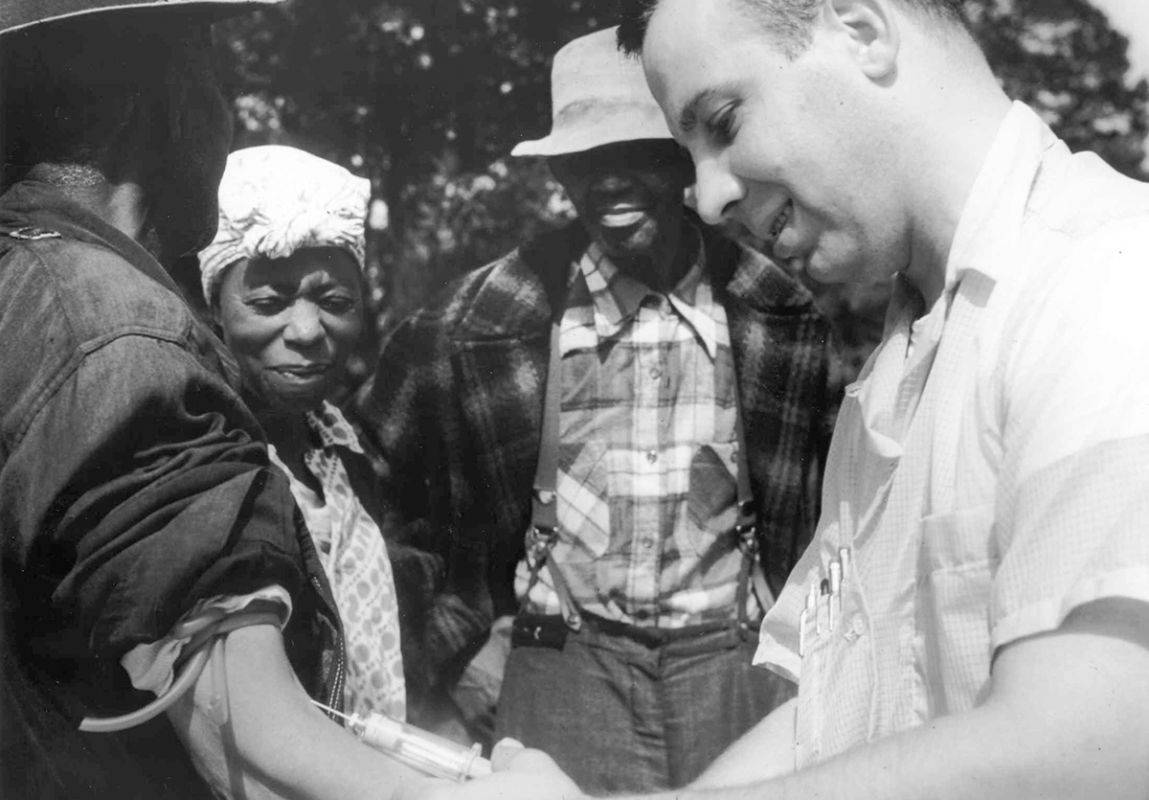

A patient and a physician in the Tuskegee Syphilis Study

History is replete with examples of researchers engaging in unethical and harmful activities:

- In the 1940s, Nazi scientists conducted thousands of experiments on prisoners in concentration camps, in which researchers intentionally harmed, tortured, and killed victims in various ways, including poisoning, blunt head trauma with hammers, mustard gas, freezing, jaundice, sterilization, burning, electroshock, body part transplantation, and many other methods.

- From 1932 to 1972, hundreds of economically disadvantaged African American men with syphilis in the Tuskegee Syphilis Study were provided with meals and other incentives so that researchers could study what syphilis did to their bodies. They were never offered penicillin treatment, even though it was accepted as the standard treatment by 1947, and they were not accurately told why the researchers were studying them.

- In the 1940s, inmates at Stateville Penitentiary were recruited for participation in a study on malaria and were paid $100. They were then infected with malaria via mosquito bites, assigned to treatment and control groups, and administered a variety of potential treatments, many of which were highly toxic. Some participants were intentionally not treated until their temperatures exceeded 108 degrees Fahrenheit. They experienced jaundice and anemia as well as "severe headaches and eye pain, nausea, weakness, vertigo, vomiting, diarrhea, and Herpes simplex, which was very common and often severe" (Miller, 2013). At least one patient died.

- In 1939, researchers in the Monster Study experimented on orphans to better understand the development and treatment of stuttering. As part of the study, they intentionally influenced many children who spoke fluently to develop stutters, which impaired their ongoing ability to communicate and to succeed in their schoolwork.

- From 1963 to 1973, 67 male inmates in Washington and Oregon were offered $25 per session (along with suggestions of parole) to allow researchers to irradiate and biopsy their testicles. This allowed researchers to better understand how radiation might affect astronauts.

Such researcher actions are not merely relativistically immoral but are normatively reprehensible. We should not look at these examples and merely excuse them by saying that they "simply represent a different time" or culture. Rather, we need ethical measures and ways of reasoning that allow us to differentiate between moral and immoral behaviors across contexts in ways that relativistic morality cannot allow. Toward this end, ethicists approach moral dilemmas in a variety of normative ways, and as researchers, we must also be willing to approach ethics normatively to ensure that the morality of our work transcends our current time and culture.

Three Approaches to Normative Ethics

When faced with ethical dilemmas, we often find ourselves weighing multiple values, rights, requirements, or goods against one another, and determining which should be prioritized may not be clear. Ethicists propose that there are at least three aspects of the dilemma that we can focus on to guide our thinking: the action itself, the consequences of the action, or the nature and intentions of the person performing the action. By giving one of these aspects greater credence than the other two, we can create normative guidelines for what constitutes ethical behavior. Such guidelines will have far-reaching effects as they influence our moral reasoning across multiple situations, such as guiding us to always prioritize consequences over intentions.

By focusing more attention on one of these three aspects of a situation, we will subscribe to one of three common approaches to normative ethics: deontology, consequentialism, or virtue ethics. Ethicists continually disagree on which of these is the best approach, because each has its own benefits and disturbing consequences, but researchers will occasionally use each to make ethical claims about their work, and it is, therefore, our responsibility to better understand them and decide when they should be utilized.

Deontology

Deontology focuses on actions themselves and proposes that morality consists of adhering to universal laws of good action (Alexander, 2016). An example of this might be a parent teaching a child not to lie. "Even if it means you might get into trouble," a parent might say, "it is always better to tell the truth." By teaching this to a child, it is anticipated that the child will develop an intrinsic, duty-based motivation to tell the truth. If the child acts out of duty, then threats of punishment or promises of reward will not matter, as the child will tell the truth simply because it is "always the right thing to do."

A professional example of this would be the Hippocratic Oath (or variations that are still administered in some form to many graduating medical students in the U.S.) and the related injunction to "first, do no harm." Such oaths expect medical professionals to hold certain practices related to patient wellbeing as sacred and inviolate, and the morality of an action stipulated in the oath is judged solely by rigid conformity to it, in a generally black-and-white, legalistic manner.

Laws, policies, constitutions, and declarations of rights operate similarly. For instance, the United Nations' Universal Declaration of Human Rights holds that "Everyone has the right to life, liberty and security of person." Based upon this moral dictum, governments, regimes, and individuals are judged as either moral or immoral by whether or not they follow it. Following the dictum is considered moral, while deviating from the dictum (no matter the reason, purpose, or context) is considered immoral.

Following the research abuses perpetrated by Nazis in World War II and the subsequent Nuremberg Trials, the Nuremberg Code was created and adopted as an international code of ethics governing research experiments. Some of the points of the code were clearly deontological in nature, such as requiring voluntary consent from research subjects and allowing subjects to withdraw from studies at any point. The duty-based principle underlying such points is that researchers have a duty to honor the self-determination and agency of participants. In other words, honoring participant self-determination and agency is simply the right thing for researchers to do.

In education, this translates into basic moral expectations of the researcher, in which we only study participants who have provided their informed consent, do not take advantage of vulnerable populations (such as young children and prisoners), inform participants of the nature of our work, do not attempt to coerce participation from our participants, do not share participant data without their consent, and allow participants to withdraw at will. Generally speaking, these are universal codes of conduct that we subscribe to simply because rigid conformity is the moral thing to do.

However, though many deontological approaches to ethics are commonplace, several obvious problems can arise from this approach. First, by relying upon universal maxims for right action, deontological ethics can seem too rigid to apply across all situations and contexts. For instance, though honoring participant self-determination is paramount, are researchers still bound by it if participants are attempting to harm themselves? Or might there be another moral requirement placed upon the researcher to intervene out of concern for the subject's wellbeing? In the case of the child learning to tell the truth, might there be situations when telling the truth is not the morally correct thing to do, such as when truth-telling will result in imminent harm to another person?

Second, since deontology defines morality as rigid adherence to maxims in a universal fashion, much harm can arise if those maxims are themselves harmful. Abraham Lincoln famously offered a deontological argument for following bad laws as follows:

When I so pressingly urge a strict observance of all the laws, let me not be understood as saying there are no bad laws.… But I do mean to say, that, although bad laws, if they exist, should be repealed as soon as possible, still while they continue in force, for the sake of example, they should be religiously observed.

In this regard, Lincoln argued for deontological rule-following but also recognized that any law might be wrong and in need of revision or abolition. In this view, then, rule-keeping can be seen in some ways to be more important than the rules themselves, which means that under a deontological view, a moral rule-keeper might be causing much harm but still be considered moral.

In a similar way, the faithful rule-keeper may sometimes actually be acting against the intent of the law by obeying the "letter of the law" while violating the "spirit of the law." For instance, as an instructor, I might establish and enforce assignment deadlines to ensure that my students are progressing through my course at a reasonable pace so that they will be able to successfully complete it, but if I enforce this rule too rigidly and do not account for students with special needs or unexpected trauma, then I would be allowing adherence to the rule to stand in the way of the purpose for the rule (i.e., student success).

Today, researchers do operate under several key duty-based ethical standards. As suggested earlier, guidelines on how to treat subjects, especially in terms of consent, privacy, self-determination, and safety are generally treated as vital, but not all are consistently treated as inviolate in every circumstance.

Learning Check

Which of the following statements are examples of deontological approaches to ethics?

- "Thou shalt not kill." — The Holy Bible

- "The end may justify the means as long as there is something that justifies the end." — Leon Trotsky

- "Act only on that maxim through which you can at the same time will that it should become a universal law." — Immanuel Kant

- "A knight must not complain of his wounds, though his bowels be dropping out." — Don Quixote (Man of La Mancha)

- "Do what is right, let the consequence follow." — Latter-day Saint Hymn

Consequentialism

Consequentialism focuses on the consequences of actions and proposes that the morality of an action is determined by the desirability or harm of its results (Sinnott-Armstrong, 2019). To connect back to the truth-telling example above, a parent employing consequentialist ethics to teach a child about honesty might teach them that "you should normally tell the truth, because telling the truth makes people happy." In this scenario, truth-telling is not considered to be good on its own but only as a vehicle for making people happy. If a situation ever arises in which telling the truth would lead to heartache or sadness, then telling the truth would no longer be the morally right thing to do. This places all determinations of morality upon the effects of the actions, intended or not, and not upon the actions themselves.

As perhaps consequentialism's most well-known and most-discussed formulation, utilitarianism holds that when judging between two potential actions, the moral choice will be the one that maximizes benefit, either in terms of the number of people benefited or the qualitative nature of the benefit (Driver, 2014). In other words, the goal of utilitarianism is utility or human happiness, and all actions are morally evaluated based on their influences upon happiness. Because of its popularity and development, I'll focus on utilitarianism as the prime example of consequentialism going forward.

Professional, social, and personal instances of utilitarianism are widespread. People will regularly structure their activities in ways that maximize their own happiness or that of their social group and will often even ignore moral maxims that they might generally hold to be true if they are perceived to interfere with this pursuit.

As a simple example, the original formulation of the Hippocratic Oath required physicians to not ever "use the knife," ostensibly because cutting a patient in any form inflicts harm on the patient's body. This is undoubtedly true, and anyone who has ever undergone surgery that involves incisions might still have the scars to prove it, but is there ever a time when surgery by incision is justified? Certainly. Take the case of a child who is born with a congenital heart defect. Surgeons have developed procedures to correct such defects, but doing so often requires breaking the infant's sternum, stopping their heart, transfusing blood from a donor, and months of recuperation. Why would anyone allow such a gruesome and dangerous procedure to be performed on an infant? The simple answer is that the net benefit to the child, in terms of years-lived and improved quality of life, justifies actions that might otherwise be unconscionable. That is, in this case, surgeons and parents believe that the consequence (improved life and happiness of the infant) justifies what would otherwise be an immoral act (intentionally harming the infant).

In fact, many of us would argue that if a parent did not allow reasonably successful surgeries to be performed on a terminally ill child, then that parent would be guilty of negligence toward the child, meaning that failure to harm the child through surgery would be immoral.

Such reasoning is not only applied to doing harm to an individual to promote their overall wellbeing but is also commonly used to justify doing harm to an individual or small group in order to benefit society more broadly. We ask individuals to temporarily harm themselves by donating blood in order to save the lives of others. The very notion of drug testing in pharmaceuticals is based on this premise, where relatively small groups of people agree to take drugs that may have negative side effects in order to inform treatment for the larger population.

Many other points of the Nuremberg Code operate from this ethical stance, wherein researchers must "yield fruitful results for the good of society" that would be "unprocurable by other methods" and the "degree of risk to be taken should never exceed that determined by the humanitarian importance of the problem to be solved." This means that risks to individuals and violations of general rules of conduct might be appropriate in situations where doing so is absolutely essential and of broad benefit to society.

Similar utilitarian reasoning is employed by education researchers to justify their studies and to occasionally violate maxims that otherwise would be followed. As the introductory dilemma to this chapter suggested, beneficial educational interventions might sometimes be denied to some students in order to prove their legitimacy for larger populations. Even in a single classroom, normal activities might be temporarily disrupted to try a new curricular approach, because it promises to have a net benefit to students in the long run. And in situations where we want to study students' behaviors, we might temporarily not let them know that we are studying them because knowing would change their behaviors.

In all of these cases, note that a form of the term "temporary" was used, meaning that though education researchers might sometimes justify harming participants through their work, there remains an expectation that doing so will both (a) provide net benefits to society in terms of research outcomes and also, whenever possible, (b) correct any harm done to the individual, providing net benefits to them as well. So, if a student is placed in a control group and is temporarily denied access to a beneficial experimental education intervention, then it is expected that upon completion of the study the student will be allowed to benefit from the intervention as well. Though such reparative approaches to harming students are not always possible, the general principle is that education researchers will do everything possible to minimize risk of harm to participants and also do everything possible to correct any harm that occurs because of the study.

Though utilitarianism is common, there are at least two major problems that adherents of this approach must address. First, consequences are not always clearly discernible before acting. The child telling the truth might not know the effect it will have on the listener, and the surgeon might be asked to perform a surgery with a low likelihood of success. In these cases, if the consequence is increased harm (sadness for the listener or death for the patient), then the action was immoral. Yet, the entire purpose of ethics is to inform people on how they should act before they act, meaning that if the morality of an action cannot be determined until after the consequences are felt, then how are we to act? All morality, then, potentially becomes a guessing game of whether our actions will have the effects we intended, and if they don't, then we are immoral as a matter of chance.

The second, and even more serious, difficulty with utilitarianism is that it can be used to rationalize violating fundamental rights and causing extreme harm to individuals as long as such atrocities are done in the name of promoting the greater good. Almost every despot, dictator, and perpetrator of genocide in history has claimed to act for the greater good. The entire Nazi propaganda machine was based on such premises: that in order for Germany and the majority of the population to thrive, a small minority of the population would need to be deprived of rights. Nazis justified horrible experiments on political prisoners upon the rationale that such suffering would benefit the German majority via better science, better medicine, and better soldiers. Utilitarian arguments can always be made to marginalize, harm, or destroy individuals and minorities as long as doing so provides a net benefit to society (i.e., the majority). Even genocide can be justified as a utilitarian moral good.

Today, researchers continue to use utilitarian reasoning to guide the morality of their actions, but, as these two problems highlight, utilitarianism must be tempered with certain inalienable maxims of right and wrong in order to guide actionable practice and to prevent the rationalization of atrocities.

Learning Check

Which of the following statements are examples of consequentialist approaches to ethics?

- "It is logical. The needs of the many outweigh the needs of the few." — Spock (Star Trek)

- "Ask not what your country can do for you but what you can do for your country." — John F. Kennedy

- "The means we employ must be as pure as the ends we seek." — Martin Luther King, Jr.

- "Society's needs come before the individual's needs." — Adolf Hitler

Virtue Ethics

Virtue ethics focuses on the development and expression of virtues themselves as the fundamental indicator of moral action. Whereas a consequentialist might say that virtues are good insofar as their development leads to social benefits and deontologists might say that fulfilling one's duty to universal laws is the only guiding virtue, virtue ethicists "will resist the attempt to define virtues in terms of some other concept that is taken to be more fundamental," such as happiness or duty (Hursthouse, 2017).

Virtues are excellent traits or dispositions that are considered to be worthy of cultivation in the moral actor. In the case of the child being taught not to lie, a parent might explain, "you should be an honest person, and honest people do not lie." Becoming an honest person, then, is the reason that the child should not lie, without reference to universal maxims or consequences of dishonesty. Developing the virtue (in this case, the disposition of honesty) is the measure of morality. An honest person, in this way, will still generally tell the truth but will do so with consideration for the complexities of others' feelings, the ramifications of the truth, an abhorrence of dishonesty, and other important virtues (e.g., love and concern for the other). Whether or not a person is truly honest (or has developed the virtue of honesty) will be manifested in their overall behavior, not a single act, and will take into account their intentions and reasoning.

Though developing virtue is considered in this approach to be the fundamental goal of ethics, it is tempered with an understanding that practical wisdom is necessary in all things. Just as we might say that "so-and-so is honest to a fault," we can simultaneously value honesty but also recognize that its enactment requires tact, thoughtfulness, and balance. An honest child might behave differently than an honest adult not because of a different natural propensity to honesty but simply because the adult has learned to wisely enact honesty in any situation.

Though originally postulated by Aristotle over 2,000 years ago, virtue ethics remains popular and is heavily debated. In recent years, new approaches to ethical reasoning have been proposed that have marked similarities to virtue ethics, such as care ethics (Sander-Staudt, 2020) and feminist ethics (Norlock, 2019). In these approaches, love, caring, and relationship-building are considered essential virtues, to be understood and appreciated in non-reductive ways. In the education literature as well, being caring (Laine, Bauer, Johnson, Kroeger, Troup, & Meyer, 2010), student-centered, and humanizing (Salazar, Lowenstein, & Brill, 2010) are typically treated as essential virtues for teachers to cultivate. Importantly, such caring dispositions are not seen as "fixed personality traits" but, rather, "are commitments and habits of thought and action that grow as the teacher learns, acts, and reflects" (Diez & Murrell, 2010, p. 14), meaning that in education we expect professionals to develop specific virtues in relation to their practice.

It seems reasonable that this should be applied to education researchers as well and that caring for our students and our research participants is an essential virtue that should be cultivated by every teacher and researcher. However, the obvious difficulty with applying virtue ethics to professional decision-making is that it requires us to determine, define, and agree upon what those core virtues are. Thus, we might agree that the virtue of caring is essential to education research, or we might not. And even if we do agree that caring is essential, we might not agree on what this actually means or how it is wisely enacted in every case.

Early in my career, I was discussing a curricular decision with a senior colleague, wherein I was pushing him to consider a small group of students in his class whose needs were not being met. He interpreted the gist of my comments as an unspoken question: "Don't you care about your students?" He then responded curtly: "I don't care about my students individually. I only care about them collectively."

Though this view is not prevalent, I have heard variations on this same theme several times since then from diverse education professionals who interpret the virtue of caring as an abstract concept that is applied to groups in a disinterested, generalist manner rather than to individuals in a focused, concrete manner. I see this as a gross misunderstanding of the virtue of caring, because caring for a group implies caring for the individuals within it. So, if you do not care for the individuals, then you can never care for the group. And just as I would not want my child to have a teacher who failed to cultivate the virtue of caring toward them, I would not want to participate in a study with a researcher who did not cultivate the virtue of caring toward me. Thus, even if we agree on virtues, what any virtue actually means in practice may be contested.

Part of the reason that virtue-based decision-making requires wisdom is that virtues can lead to contradictory conclusions. A simple case arises from the virtues of justice and mercy. Most would agree that both justice and mercy are virtues, but when they are applied to specific cases, they are almost always at odds with one another (Kidder, 2009). If a student plagiarized a paper but was later penitent, then a just instructor might fail them while a merciful instructor might give them another chance. Since these actions are contradictory, which virtue should the instructor enact, and does the answer depend on the context (e.g., the student's understanding of what plagiarism is and whether they were properly taught)? Again, in my professional practice I have grappled with colleagues over these sorts of cases, and even when we draw on our shared wisdom, it can be very difficult to see eye-to-eye.

Today, virtue ethics is applied in a variety of professional practices where key dispositions are identified as being paramount, even though specific enactment of those dispositions may be left uncodified to allow for wise application to each context or situation. According to the Nuremberg Code, researchers should exercise "careful judgment," "good faith," risk-avoidance, and humanitarianism, all of which seem to be dispositional or virtue-based in nature. In addition, some common dispositions expected of education researchers might include being caring, empathetic, just, equitable, committed, competent, respectful, and contemplative, just to name a few.

Learning Check

Which of the following statements are examples of virtue ethics?

- "By doing just acts the just man is produced, by doing temperate acts, the temperate man; without acting well no one can become good." — Aristotle

- "Be ye therefore perfect, even as your Father which is in heaven is perfect." — The Holy Bible

- "Success is not final, failure is not fatal: it is the courage to continue that counts." — Winston Churchill

- "Human happiness and moral duty are inseparably connected." — George Washington

Confronting Ethical Dilemmas

From this brief introduction to ethics, it is not surprising that there is so much disagreement about what constitutes moral action and that people often struggle to determine what they should do in a given circumstance. I will now close this chapter with some practical guidance on how to move forward as a researcher and circle back to the original dilemma presented at the beginning, providing a solution for whether the science teacher should perform the study or not.

The reason that some of the choices we face are dilemmas is that it is often difficult to parse out where we can compromise and where we cannot. For instance, if we believe in two conflicting maxims (such as "it's wrong to lie" and "it's wrong to hurt someone's feelings"), then how do we know which to follow?

Furthermore, the role of human agency and intentionality in ethics is even more difficult to understand with certainty. Sometimes we believe that an action is good even if it has bad results, as long as the intent was honorable (e.g., Good Samaritan clauses). At other times we believe that intentionality doesn't matter at all for determining the morality of an action (e.g., a man who sexually harasses or abuses a woman is not excused because he feels there was nothing inappropriate about his behavior).

To help us through this, here's a procedure that employs all three approaches to ethics in a way that attempts to capitalize on their strengths and to defuse their weaknesses. It is specifically designed to help you determine whether or not (and how) to conduct a research study, and for your convenience, Table 1 can also be used as an organizing tool for documenting your reasoning as you work through the dilemma.

To solve the dilemma, you should first reflectively identify the core virtues that you strive for in your personal and professional life by filling in the blank of "I am (virtue)." This allows you to clarify the central tenets and guiding principles that shape your vision for who you are and who you want to be. We do this first to keep these virtues foremost in our thinking and to ensure that any course of action we take will not violate our central moral purpose. As you do this, you do not need to list every virtue that you strive for or think is good, only those that relate directly to the problem at hand. The science teacher, for instance, might identify her core pertinent virtues as being caring (she cares about her students' wellbeing), equitable (she doesn't want to advantage some students over others), helpful (she wants to benefit as many students as possible), career-focused (she wants to advance her career), and competent (she wants to do good research to produce the results that the grantor is asking for). Place these core virtues in Column 1 of the table.

Second, you should identify any requirements placed upon you, whether by general maxims you have adopted (which may come directly from your core virtues), by laws that you must abide by, or by expectations placed upon you by an outsider. Do this by completing the statement "I must (requirement)." Again, you should focus only on the requirements that pertain directly to the matter at hand and do not need to include truisms that might matter to you in a different circumstance. In this case, the science teacher recognizes that the grantor requires her to conduct studies using control groups, and she also has a requirement emanating from her core virtues dictating that she will not disadvantage students in the long term. Place these requirements in Column 2.

These requirements, along with the core virtues in Column 1, represent our uncompromisables, meaning that violating any of them would constitute either a moral violation or a technical impossibility in the given case. In this situation, there is nothing inherently moral about conducting studies using control groups, but it is, nonetheless, a requirement placed upon the teacher by the grantor and must, therefore, be deontologically observed. Because these items are uncompromisable, any conflicts among them constitute the heart of the dilemma, and we must eventually resolve each conflict by moving one of the conflicting items out of these columns.

Once you have drafted your uncompromisables, then you are ready to engage in the utilitarian process of asking what the potential benefits of the proposed action would be. Do this by completing the statement "Wouldn't it be great if (potential benefit)," and place your answers in Column 3. These are your goals or the best-case results of the proposed action.

The last two columns mirror Columns 1 and 2 but represent Secondary Virtues (instead of Core) and Guidelines (instead of Requirements). These take the same grammatical form as the Core Virtues and Requirements but are of lower priority and are therefore compromisable in comparison to the uncompromisables. Add any virtues or expectations that you considered for Columns 1 and 2 that you ended up not including because they weren't absolutely core or necessary. In the science teacher's case, she recognized that being helpful to the research community and benefiting more students through her work would both be good things to do but that they shouldn't be prioritized at the same level as her other entries.

Table 1

Example table for solving the science teacher's dilemma

| 1. Core Virtues | 2. Requirements | 3. Potential Benefits | 4. Secondary Virtues | 5. Guidelines |
|---|---|---|---|---|
| Uncompromisable | Uncompromisable | Potentiality | Compromisable | Compromisable |
| "I am..." | "I must..." | "It would be great if..." | "It's generally good to be..." | "Whenever possible, I should..." |
| Caring | Not disadvantage students in the long term¹ | We had more solid evidence that the curriculum was working | Helpful | Benefit more students through my work |
| Equitable | Gain informed consent/assent from participants | More students could be impacted by the curriculum | Committed | Not disadvantage students in the short term |
| | Allow participants to withdraw² | | Career-focused | Advance my career |
| | | | Competent | Only conduct studies using long-term control groups¹ ² |

¹ ² Items sharing a superscript are in conflict and cannot remain together in the Requirements column.

As you engage in this process, feel free to move items back and forth between Columns 1 and 4 and between Columns 2 and 5. As you do this, you are engaging in a process of discerning what is essential to the morality of the issue in question and what is not. With the requirements laid out like this, you can quickly see whether any conflicts exist, and if they do, then you must resolve each conflict by moving one of the items to the Compromisables category.

In the case of the science teacher, she determines that some of her initial Uncompromisables are actually Compromisables. Being career-focused and seeking to advance her career, for instance, are secondary to the other items in Columns 1 and 2 and are therefore moved to Columns 4 and 5.

The central dilemma that eventually becomes clear to the teacher is a conflict between being competent (and only conducting studies using long-term control groups) and being caring and equitable (and not disadvantaging students in the long term). After weighing these against each other, she concludes that being equitable and caring is more important than being competent, so she moves competent to Column 4 and the long-term control group requirement to Column 5.

This effectively resolves the dilemma and makes clear that though it would be good for the teacher to do the study and follow the guidelines in Column 5, it would be wrong for her to do it in a way that violated any items in Column 2. She, therefore, concludes that she will not do the study unless the grantor changes the "long-term control group" requirement to a guideline.

The conclusion that the science teacher arrives at, then, is that choosing to conduct the study in its present form would have been immoral and that though it is unfortunate that she will not be able to conduct the study, she has nonetheless behaved morally by choosing to abstain.

The purpose of this chapter has been to lay out normative approaches to ethics and to introduce the problems of moral relativism when applied to research. In future chapters, I will explain specific moral requirements that are placed upon researchers, how ethical research considerations are arbitrated by institutional review boards, and how specific ethical problems should be approached across multiple research paradigms.

References

Alexander, L. (2016). Deontological ethics. In Edward N. Zalta (ed.), Stanford Encyclopedia of Philosophy. Retrieved from https://plato.stanford.edu/entries/ethics-deontological/

Baghramian, M. (2015). Relativism. In Edward N. Zalta (ed.), Stanford Encyclopedia of Philosophy. Retrieved from https://plato.stanford.edu/entries/relativism/

Diez, M. E., & Murrell, Jr., P. C. (2010). Dispositions in teacher education — Starting points for consideration. In P. C. Murrell, Jr., M. E. Diez, S. Feiman-Nemser, and D. L. Schussler (eds.), Teaching as a moral practice. Cambridge, MA: Harvard Education Press.

Driver, J. (2014). The history of utilitarianism. In Edward N. Zalta (ed.), Stanford Encyclopedia of Philosophy. Retrieved from https://plato.stanford.edu/entries/utilitarianism-history/

Hursthouse, R. (2017). Virtue ethics. In Edward N. Zalta (ed.), Stanford Encyclopedia of Philosophy. Retrieved from https://plato.stanford.edu/entries/ethics-virtue

Kidder, R. M. (2009). How good people make tough choices: Resolving the dilemma of ethical living. HarperCollins.

Laine, C., Bauer, A. M., Johnson, H., Kroeger, S. D., Troup, K. S., & Meyer, H. (2010). Moving from reaction to reflection. In P. C. Murrell, Jr., M. E. Diez, S. Feiman-Nemser, and D. L. Schussler (eds.), Teaching as a moral practice. Cambridge, MA: Harvard Education Press.

McConnell, T. (2018). Moral dilemmas. In Edward N. Zalta (ed.), Stanford Encyclopedia of Philosophy. Retrieved from https://plato.stanford.edu/entries/moral-dilemmas/

Miller, F. G. (2013). The Stateville Penitentiary Malaria Experiments: A case study in retrospective ethical assessment. Perspectives in Biology and Medicine, 56(4).

Norlock, K. (2019). Feminist ethics. In Edward N. Zalta (ed.), Stanford Encyclopedia of Philosophy. Retrieved from https://plato.stanford.edu/entries/feminism-ethics/

Salazar, M. C., Lowenstein, K. L., & Brill, A. (2010). A journey toward humanization in education. In P. C. Murrell, Jr., M. E. Diez, S. Feiman-Nemser, and D. L. Schussler (eds.), Teaching as a moral practice. Cambridge, MA: Harvard Education Press.

Sander-Staudt, M. (2020). Care ethics. The Internet Encyclopedia of Philosophy. Retrieved from https://www.iep.utm.edu/care-eth/

Sinnott-Armstrong, W. (2019). Consequentialism. In Edward N. Zalta (ed.), Stanford Encyclopedia of Philosophy. Retrieved from https://plato.stanford.edu/entries/consequentialism/