“Everything we evaluate is designed. Every evaluation we conduct is designed. Every report, graph, or figure we present is designed. In our profession, design and evaluation are woven together to support the same purpose—making the world a better place.”

President John Gargani

American Evaluation Association

Announcing the Annual Conference theme for 2016

Evaluation is a transdisciplinary field. While teachers and nurses receive specific training and certification to work in schools and hospitals, trained evaluators work in a wide variety of workplaces and businesses. In this sense, evaluation is like statistics: people in many different occupations benefit from using statistics, and many use them effectively in their jobs even when they have limited training and only a rudimentary understanding of the methods involved. As a transdisciplinary art, evaluation is practiced in many different contexts, and it could be argued that nowhere is it more prevalent than in instructional design. Although designers often conduct evaluations without extensive evaluation training, training and practice will improve their ability to evaluate well, and better evaluation will, in turn, improve their design and development efforts.

What is an Instructional Product?

As evidenced by the instructional design models developed in the late twentieth century (e.g., ADDIE, Dick & Carey), the focus of instructional design was just that: the development of instruction or training. The instructional product was instruction, and its modality was typically limited to in-person classroom instruction (both academic and corporate). The process included developing instructional objectives, tests to measure the learning outcomes, and resources (primarily textbooks, learning activities, and videos) that the designer believed would achieve the specific instructional goals of the course. The designer would then structure the course using a pedagogy they felt would facilitate the intended learning.

This method of creating instruction is still used; however, as technology advanced and the internet became more widely available, the notion of what constitutes an educational product expanded. In addition to instruction, instructional products now include educational technologies, learning apps, and educational services such as collaborative learning tools, resource repositories, how-to guides, self-improvement and skill development apps, educational games, discussion boards, communication tools, and crowdsourcing apps. The primary modality for delivering instruction has also changed. In addition to classroom instruction, instructors provide training through e-learning and online instruction, both synchronous and asynchronous, in blended and informal learning environments. Some instructional products also serve the broader purpose of facilitating and supporting learning in general; these products are not tied to a specific course, and learners use them for many purposes and in a variety of ways.

It might be helpful to differentiate instructional products (those used directly for training and classroom instruction) from the more generic term educational products (any product used in an educational setting), but both share the same end goal: to facilitate and support learning.

Note also that many users of instructional products would not classify themselves as students. The intended users of a product may include teachers, administrators, and students attending school in a traditional classroom setting. More recently, however, designers have been creating instructional products for non-traditional learners seeking educational opportunities outside the classroom and any formal educational context. Many e-learning tools are created as supplementary learning resources and knowledge creation services for corporate training or personal enrichment. Several more contemporary instructional design approaches (e.g., rapid prototyping and design-based research) have adapted earlier instructional design models to accommodate this expanded view of what an instructional product might be. They all still use similar product development stages, because every instructional product needs to be designed, developed, tested, and maintained, and each of these stages inevitably requires evaluation.

An instructional product might include any educational resource that facilitates or supports learning regardless of the setting or context.

Instructional Design Models

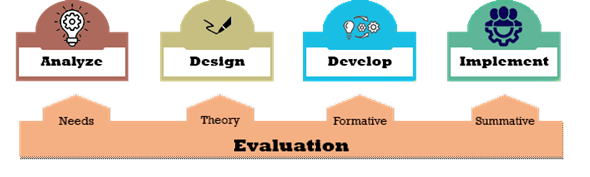

Many instructional design models have been proposed, but most are adaptations of the ADDIE model. ADDIE stands for Analyze, Design, Develop, Implement, and Evaluate.

Figure 1: Original phases of the ADDIE Instructional Design Model.

From the acronym for this model, you may erroneously assume that evaluation only occurs after the designer has implemented the product. This was never the intent, and in practice, evaluations of various types are conducted throughout the project, as depicted in Figure 2.

Figure 2: Evaluation Integration within the original ADDIE Instructional Design Model.

Scholars have created many innovative adaptations of the ADDIE model, including the Armed Forces' own modifications to their original training development framework. The PADDIEM version of ADDIE (Planning, Analysis, Design, Development, Implementation, Evaluation, and Maintenance) adds a planning phase to augment the analysis phase and a maintenance phase that extends the original purpose of the implementation phase. While this and other design models each make subtle improvements to ADDIE, they all incorporate an analysis phase (concept planning), a design phase (theoretical planning), a development phase (creation), and an implementation phase (distribution and testing). A few are presented here as examples of where evaluation occurs in the design process.

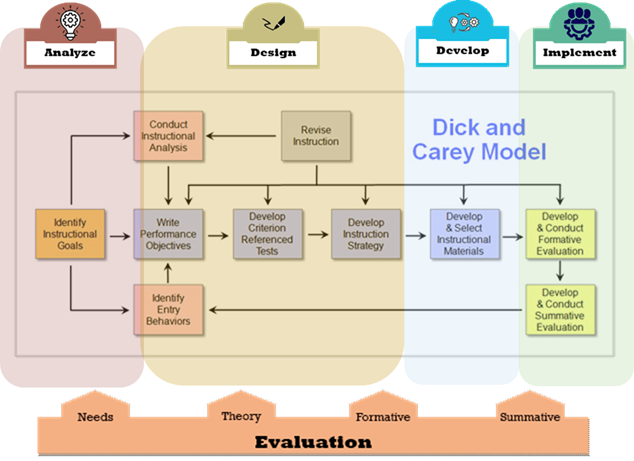

The Dick and Carey ISD Model and ADDIE

Dick and Carey's instructional systems design (ISD) model was one of the early efforts to formalize the instructional design process. It focused on lesson planning for classrooms and formal training situations and was intended to help a designer figure out what to teach and how to teach it. The model relies heavily on what has more recently become known as "backward design": it starts by writing learning objectives and developing assessment instruments (tests) to measure whether students achieved the expected learning outcomes, before the instruction itself is developed. The model also includes formative and summative evaluation steps. Findings from the formative evaluation step informed revisions to the instruction. The summative evaluation took the form of an objectives-oriented evaluation, which focused primarily on whether students' test scores were deemed adequate. Achieving adequate test scores was seen as an indicator that the instruction was good and was often the only criterion used to judge the quality of the instructional product.

Figure 3: Evaluation Integration within Dick and Carey's Instructional Design Model.

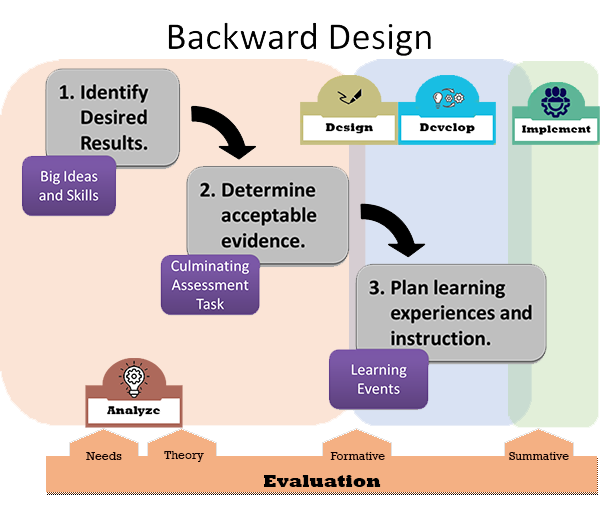

Figure 3a: Evaluation Integration within the Backward Design Approach.

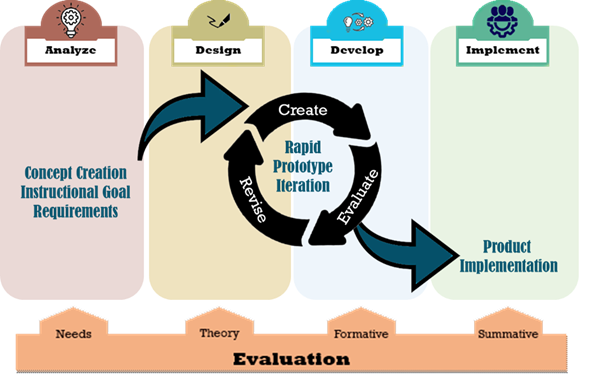

Rapid Prototyping and ADDIE

Rapid prototyping was first used in the manufacturing industry. Instructional designers and others adopted it as a quick and cost-effective way to build and test a working version of their product. The innovation rapid prototyping brings to the design process is a quick, iterative design and development cycle. The underlying principle is similar to that of action research: a trial-and-error method. The evaluation component of this model is a quick formative review that identifies needed improvements and is repeated until the product meets specifications. Rapid prototyping activities include:

- Define instructional goals and requirements

- Formulate a feasible solution

- Start building the product

- Test it on users and others (evaluate)

- Refine your design

- Repeat the process until the product works as required

While this approach is practical, it still follows the same phases as the ADDIE model, just more quickly. In this model, the needs assessment is often limited, and a summative evaluation may not occur. The model focuses heavily on the design and development phases. What it tends to lack is a systematic evaluation of the theory and principles that support the design, a gap that is common in other models as well.

Figure 4: Evaluation Integration within a Rapid Prototyping Instructional Design Model.

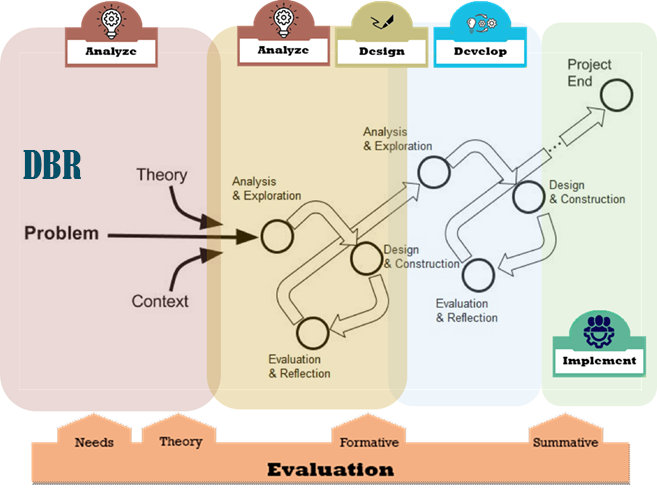

Design-Based Research (DBR) and ADDIE

McKenney and Reeves (2012) outlined three core processes of DBR: (a) analysis and exploration, (b) design and construction, and (c) evaluation and reflection. A hallmark of the DBR approach is its iterative nature, but you will note that DBR is also an adaptation of the ADDIE model. This approach is similar to rapid prototyping but somewhat more systematic. Each design iteration is formative: the designer refines and reworks the product based on understandings gained in the evaluation and reflection phase of that iteration. How a designer conducts each cycle depends on the evaluation findings from the previous iteration, and a designer may perform different analyses and use different evaluation methods to complete a cycle. Although the core processes identified by McKenney and Reeves do not state this explicitly, we can assume that, in addition to the analysis and exploration that occurs during development, a needs analysis would occur before designers begin the development process. We can also reasonably assume that a summative evaluation of the final product would occur.

Figure 5: Evaluation Integration within a Design-Based Research Model.

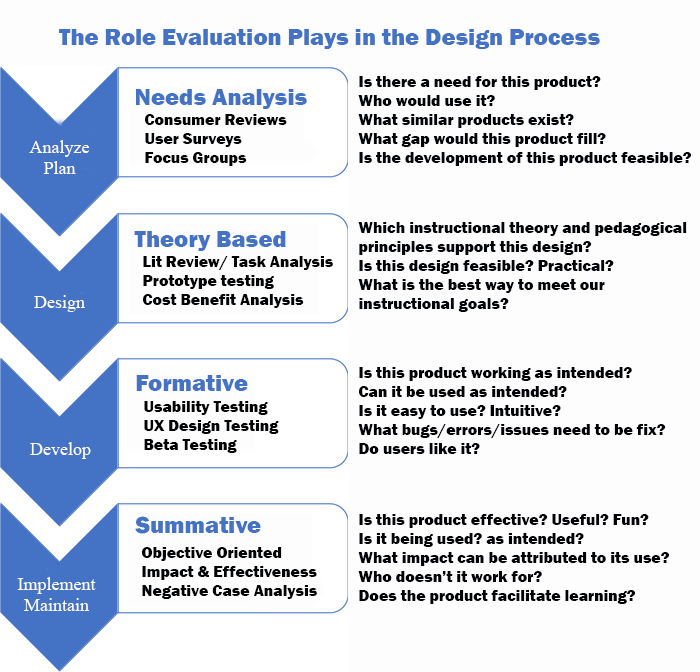

The Role of Evaluation in the Design Process

Evaluation is an integral part of the design and development process. It makes our designs better and helps improve the products we produce. Evaluation is carried out before, during, and after a product is designed and developed. The following graphic illustrates the various roles evaluation can play within specific stages of the instructional design process.

Figure 6: Role of Evaluation in the Design Process.

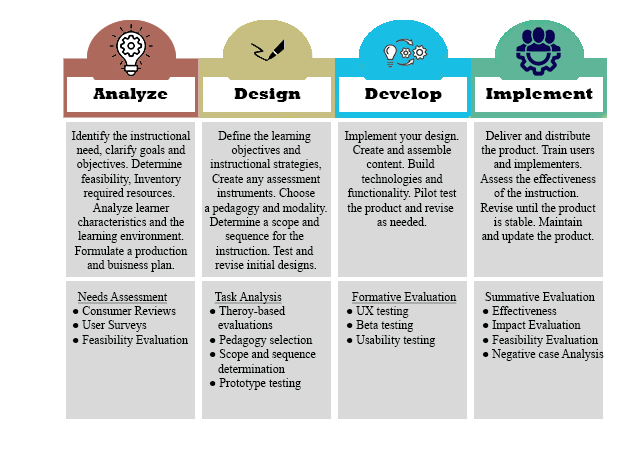

Evaluation by Design Phase

Designers use different types of evaluation at each phase of the design process because they need to answer different design questions as the process unfolds. By "an evaluation type," we mean an evaluation with a specific focus or purpose. All evaluations follow a fairly standard structure; what tends to change is the purpose, methods, and scale of the evaluation.

Although the previous chart might seem to imply that each type of evaluation is assigned to a specific phase of the process, this is not the case in practice. A particular type of evaluation may be especially appropriate for one stage, but smaller, more focused versions of it may need to be conducted in other phases as well. For example, a designer may need to conduct a theory-based evaluation in both the design and development phases to help make decisions. Likewise, designers may conduct consumer reviews as part of a needs analysis in both the analysis and implementation stages, albeit in a modified form and for slightly different purposes.

The following discussion of evaluation roles does not represent a mandate for where evaluation must occur; it simply explores possibilities. We will discuss details of various evaluation approaches and types of evaluation in the next chapters.

Evaluation in the Analysis Phase

The analysis phase of the design process is mainly conceptual. In this phase, the designer analyzes the learners (the target audience and their needs) and tries to understand any learning requirements and contextual restrictions (goals and constraints). The main evaluation activity in this phase is needs analysis. A vital component of needs analysis is identifying any gap that might exist between what is and what we want (or need) things to be. For example, the designer may identify a gap between what students know and what they need to know (or be able to do). They might then identify a gap between the quality, effectiveness, or functionality of existing instructional resources and what is needed to facilitate the learning students are expected to accomplish. The designer might also conduct a consumer review as part of the needs analysis. A consumer review involves surveying users and reviewing and comparing existing products; it may also involve a theory-based evaluation of those products. Results from the gap analysis and consumer reviews inform the designer's decision about whether to create a new product or use an existing solution. After identifying a need, the designer might conduct another needs analysis to determine the resources required to produce a new product and the viability of such a project.

You will recall that a planning phase was added to the ADDIE training development framework to meet a need the original model did not address. Planning focuses primarily on determining the project goals (objectives), requirements, constraints, budget, and schedule (i.e., project management tasks). This type of planning is needed once the decision-maker decides that a product should be created or revised, and project management calls for a different set of evaluation activities.

Designers often work for a corporation or an academic institution as part of a design team (e.g., as teachers or corporate trainers). In these situations, the products they create are usually not expected to be sold for profit; they are created to serve a purpose (meeting an instructional need within the organization). In other cases, however, a designer creates an instructional product to be sold. Here, the planning phase may also require the developer to create a business plan that evaluates the viability and cost of product development and whether there is a market for the product.

Unfortunately, too often very little time is allowed for the planning and analysis phases. At times, clients and designers make quick decisions without carefully considering whether a product is needed. Cognitive biases that can affect decisions at this stage include action bias, the availability heuristic, the planning fallacy, survivorship bias, and the bandwagon effect. We may perceive a need simply because our personality compels us to act, or because we see others developing products and feel compelled to do likewise. We may identify a genuine need but underestimate the cost and risks (viability) of developing the product. Conversely, a genuine need may exist, but designers may be reluctant to revise instruction or develop new products out of fear or denial. In the analysis phase, designers should carefully evaluate needs and make informed decisions.

Evaluation in the Design Phase

The design stage focuses on the design of the learning experience and the resources needed to support it. When designing an educational product, the designer must consider the product's functionality and how its design will accomplish its purpose and goals. The design phase was initially conceived as a task analysis of the training a designer was hired to develop. A task analysis requires the designer to identify the essential components of the learning and the problems users experience when learning. Designers then make several decisions about the product's design (see Gibbons's design layers). A designer must choose which content (information, exercises, activities, features) to include. Designers must also judge the best ways to present the content (i.e., the message) and how a student will interact with the product (modality). Evaluation activities in this phase often involve theory-based evaluation of the pedagogical ideas and principles that might best facilitate learning, and of ways an instructional product can mitigate the challenges students experience. Theory-based evaluations involve a review of research and, for existing products, an evaluation that judges how well a product applies pedagogical theory and principles. Prototype testing is also conducted in this phase to evaluate the viability of a design.

Evaluation in the design phase is essential because when a product fails, the cause is most likely a flaw in its design. Designs often fail because the designer neglects existing research and theory related to the product. Even when theory is considered, the teaching and learning process is complicated. People have diverse needs, abilities, and challenges, and they also have agency. Rarely will a single instructional design work for all learners, so there is no certainty that every student participating in a learning experience will accomplish the expected learning objectives. Experts also often disagree on the best ways to teach, so designers need to judge for themselves which designs are best.

Evaluation in the Development Phase

The purpose of the development stage is straightforward. In this phase, developers implement the designer’s vision for the instructional product – they create and build the learning assets outlined in the design phase. This might include the creation of assessments (tests and quizzes), assignments, practice exercises, lesson plans, instructor guides, textbooks, and learning aids. Developers may need to create graphics, videos, animations, simulations, computer programs, apps, and other technologies. They will also need to test and refine each of these assets based on formative feedback from experts, implementers, and the intended end-users.

As noted, the evaluations conducted in this phase are formative. The purpose of a formative evaluation is to identify problems. Formative evaluation can involve usability and user experience (UX) testing, both of which identify issues learners and providers might experience when using a product. It also uses beta testing to see whether products can be used as intended, that is, as the designer envisioned (testing for this is commonly called usability testing). The evaluator might use durability, usability, efficacy, safety, or satisfaction as criteria for their judgments. Methods used in these evaluations might include observations, interviews, surveys, and personal experience (trying the product out for yourself).

Evaluation in the Implementation and Maintenance Phases

The implementation phase begins once the product is stable and ready to be used by consumers (e.g., instructors and students). Being stable does not mean the product is perfect; it just means it is functional. The product will likely need to be revised and improved during this phase and the maintenance phase, based on additional testing.

Evaluation activities in this phase can be extensive if the developers choose to conduct them. Effectiveness evaluation judges the degree to which learners can use the product to accomplish the intended learning outcomes. Impact evaluation considers what long-term, sustained changes have occurred in learners' behaviors and abilities: does the learning last, and does it make a difference? Implementation fidelity evaluations judge whether consumers can use, and are using, the product as intended. Beta testing is often conducted under ideal conditions; implementation fidelity testing considers suboptimal conditions and unexpected circumstances (use in the wild). Testing in the development phase may suggest that users like everything about the product and would use it. During implementation testing, however, you may find that consumers use only some of the product's features (they find some features beneficial but not others). Continued UX testing can also occur during this phase, as can negative case analysis. Rarely will a product work well for all learners; a negative case analysis tells us who uses the product and who does not, and which learners benefit from using it and which do not.

The Navy added the maintenance phase to its ADDIE design model in recognition of the fact that products age. The maintenance phase is a commitment to continuous improvement of the product throughout its life cycle and requires ongoing product evaluations similar to those conducted in the development and implementation phases.

Figure 7: Role of Evaluation and Potential Guiding Questions

Chapter Summary

- An instructional product might include any educational resource that facilitates or supports learning regardless of the setting or context.

- Evaluation occurs throughout the design process.

- Most design models are adaptations of the ADDIE model.

- Four design phases occur in all design models: Analyze, Design, Develop, and Implement.

- Specific types of evaluation are especially suited to each phase of the design process.

- Evaluation is essential to improving the design decisions we make.

Discussion Questions

- Consider an educational product that you use. What do you like about it? How does it compare to other similar products? Describe something the product lacks that would be nice to have. Describe something missing in the product that users might consider essential. Give reasons why the designer may have decided not to include the missing feature in the product's design.

- Think about an instructional product people use. Describe the type of person (a persona) who tends to use this product. Think of a label you might use to describe the type of person who uses the product. Suggest reasons why some groups of consumers might use the product and others might not.

- Think about a learning activity instructors use when teaching their class. Why would a teacher believe it’s a good learning activity? What pedagogical theory supports its use? Is the activity always effective? If not, why not?

References

McKenney, S., & Reeves, T. C. (2012). Conducting educational design research. New York, NY: Routledge.