This paper presents the evaluation and reflective implementation of iteratively designed and developed online open educational resources (OER) for geoscience undergraduate classes in the context of a long-term educational design research project. The results indicated that the use of free and open software and social media kept video content hosting costs low and broadened cross-disciplinary review and evaluation. These methods provide insights for scientists, teachers, instructional designers, educational researchers, and librarians in the use of social media and Search Engine Optimization (SEO) for OER implementation and reuse. Pros and cons of using these evaluative and reflective methods, especially during the COVID-19 pandemic, and the sustainability of maintaining OER are discussed.

Introduction

The design, development, and use of open educational resources (OER) are increasingly broadening learning access (Cooney, 2017; Luo et al., 2020). OER enables the possibilities of integrating the collective wisdom of subject experts through intentional curation of existing and ever-growing digital resources. OER also creates engaging learning experiences using interactive digital technologies and reusable learning objects grounded in learning science (Wiley, 2000). In addition, OER has real potential to lower the costs of textbooks and related educational materials. In the disciplines of science, technology, engineering, and mathematics (STEM), these digital and/or online OER can enable low-cost access to active learning experiences in both virtual and face-to-face learning spaces. For example, OER can be used to simulate live experiences in laboratory settings with interactive content engagement when access to real world scientific equipment is limited (Ackovska & Ristov, 2014; Gaba & Prakash, 2017; Liu & Johnson, 2020). The design and development of STEM OER are ideally based upon learning science, media production principles, and technology affordances (Liu & Johnson, 2020). To successfully adopt sound design and development of OER and evaluate its effectiveness and impact, it is necessary to test and document both local viability and wider adoption practices (Larson & Lockee, 2019; McKenney & Reeves, 2019). Rigorous review and evaluation methods in an open education environment are needed to ensure learning strategies integration, continued improvement of OER quality, and sustainability of OER adoption and growth (Atenas & Havemann, 2013; Clements & Pawlowski, 2012; Kimmons, 2015; Mishra & Singh, 2017).

In this multi-year and multi-phase OER educational design research project, the researchers conducted iterative cycles of OER creation, evaluation, and class-based adoptions for geoscience education with funding from both federal and state grants. As the design, development, and preliminary implementation were refined through formative evaluation, OER products were simultaneously promoted to broader audiences and used in target contexts to investigate their impact (Liu, Johnson, & Haroldson, 2021; Rolfe, 2016). Geoscience OER content was iteratively and gradually set in place to meet instructional and funding goals. Unfortunately, revision of content format and structure, evaluation, reflective implementation, promotion, and continued refinement for broad adoption appear to have been of lower priority in the development and documentation of most geoscience OER projects. References to existing theoretical and practical frameworks are scarce, creating a lack of strategies and techniques to broaden the reach of OER (Rolfe, 2016).

The need for problem-solving in OER design research kept emerging, which required “the iterative development of solutions to practical and complex problems” and provided “context for empirical investigation” (McKenney & Reeves, 2019). To optimally reach a broad audience with minimal cost (especially with limited funding cycles), the investigators of this research hosted OER videos on YouTube and performed project management with Open Science Framework (OSF), a cost-efficient content management repository. Review feedback was invited with the free version of hypothes.is, class surveys, and the comments feature in PressbooksEDU. Google and YouTube analytics were also used to adjust Search Engine Optimization (SEO) (Rolfe, 2016). The challenge in evaluating OER development was found to be minimizing the “tension between collecting meaningful impact data and ensuring the materials remain open and accessible through the process” (Pitt et al., 2013, p. 2).

Within the context of this educational design research project, this paper focuses on the evaluation and reflective implementation stages of the design research and provides an exploratory answer to the question, how can a series of laboratory-based STEM (geoscience) OER be reviewed and evaluated to optimize their reach of use and reuse? The literature review explores the various aspects of this research, followed by research methods and data collection for the OER developed in this study. The results are analyzed along with reference to the relevant literature as well as to the researchers’ reflections.

Literature Review

Because of the rising attention to the costs of textbooks and instructional resources as well as the availability of increasingly ubiquitous digital technologies, OER have emerged in STEM education (Allen et al., 2015; Alves & Granjeiro, 2018; Harris & Schneegurt, 2016). The essential goal for the design, development, and implementation of OER is to enable open and inclusive learning access for all students.

The STEM OER projects in this paper were funded with the purposes of lowering textbook costs for students, maximizing availability and accessibility of laboratory equipment to students in classroom-based and online settings, and flexibly integrating active learning strategies. To replace dated and costly textbooks for the geoscience undergraduate microscopy classes, OER were created from actual teaching practices in laboratory settings. Educational design research and iterative evaluation were adopted for the OER development and implementation (Brown, 1992; Collins, 1992; McKenney & Reeves, 2019; Plomp & Nieveen, 2007; van den Akker et al., 2006). Educational design research (EDR) probes, develops, adopts, enacts, tests, and “sustainably maintains” solutions to problems in learning settings within the real context for empirical investigation (McKenney & Reeves, 2019). EDR is usually collaborative, responsively grounded, and iterative. The interventions that emerge from EDR are oriented to practically solving real-world problems in authentic settings, rather than intended to test hypotheses (McKenney & Reeves, 2019).

Literature in related disciplines and categories has provided a sound foundation for the formative evaluation, identification of methods, and reflective implementation of these newly developed OER. These include learning strategies in OER, review and evaluation of OER and related follow-up issues, and optimizing the implementation and spread of OER (McGill et al., 2013; Rolfe, 2016).

Engaging Student Learning with OER

Shaped by learners’ needs, technologies, and systemic changes, learning environments have been extended beyond physical classrooms and can provide resources and expertise online to support the search of evidence and connection of ideas, as well as enable broader problem-based learning (Jonassen & Rohrer-Murphy, 1999; Liaw, Huang, & Chen, 2007). Students’ abilities and skills to connect learned concepts and theories with the search for evidence and pursuit of connection between various concepts, skills, and subject areas need proper scaffolding (Lawson, 1989). Based on social constructivism and learning cycles in science education, the scaffolding can only take place effectively with intentionally designed learning activities that introduce the to-be-learned concepts and enable student exploration and application through cooperative and collaborative learning with consistent self-assessment (Blank, 2000).

Engaging student learning in STEM education has unique challenges because of the need to blend learning of scientific theories and principles with practical applications of laboratory and field procedures as well as data evaluation and synthesis and creation and testing of new hypotheses. Traditional textbooks have played critical roles in information dissemination, acting as reference materials, and providing directions for learning assessment. With the fast-evolving innovative technologies and the progression of active learning strategies in STEM education, student perception and interaction with the content in an OER environment can be captured and measured (Dennen & Bagdy, 2019; Liu, Johnson, & Haroldson, 2021; Luo et al., 2020).

POGIL (process-oriented guided inquiry learning) is an active learning strategy that provides instructional content presentation with guiding objectives, core questions, and procedural assessment so that students can focus on deep learning (Brown, 2018; Purkayastha et al., 2019). POGIL was initially developed to engage student learning in chemistry courses (Farrell, Moog, & Spencer, 1999) and later adopted widely in the teaching and learning of other sciences. It has its roots in a Piagetian model of learning and has integrated the principles and concepts of inquiry-based learning and experiential learning cycles in science and laboratory education (Abdulwahed & Nagy, 2009; Kolb, 2014; Piaget, 1964). There is strong evidence of the effectiveness of POGIL in science learning (Freeman et al., 2014).

Along with POGIL, deep learning is reflected in learning behaviors such as seeking meaning, relating ideas, using evidence, and showing interest in ideas (Entwistle, Tait, & McCune, 2000). These have been found to be related to student perception of teaching quality and learning experiences (Entwistle & Tait, 1990; Liu, St. John, & Courtier, 2017; Richardson, 2005). Educators have been probing what can potentially lead to student deep learning in ever-evolving learning environments (Entwistle et al., 1979; Jonassen & Land, 2012; Meyer & Parsons, 1989). Studies have been conducted in various contexts and disciplines to investigate contextual factors associated with student deep approaches to learning. Student perception of learning with geoscience OER, especially their self-perceived confidence in geology instrumentation and research, remains an underexplored area.

Review and Evaluation of OER for Verification of Design Ideas

OER design and development require problem-solving in an evolving and real learning environment with multiple and multi-level stakeholders. This can be optimally strategized and operationalized with educational design research which is defined as “the iterative development of solutions to practical and complex educational problems” (McKenney & Reeves, 2019, p. 6). These include iterative review and evaluation with local viability implementation to verify their fulfillment of instructional design and development concepts and procedures (Larson & Lockee, 2019; McKenney & Reeves, 2019). In this way, the designers and subject matter experts (SME) become researchers, with the mindset of being good teachers, and are thus afforded a rich and “humbling opportunity” for discovering the perception of usability and the effect on student learning (McKenney & Reeves, 2019).

In their OER integration study, Dennen and Bagdy (2019) investigated the perception of learning with OER by undergraduate students in an educational technology class. Students were given surveys with closed and open-ended items regarding their perception of using customized OER at the local institution. Students felt favorably towards the benefits of free and customized text and readings for their classes. Formative evaluation was also conducted with mixed methods of surveys and observations, based on literature from instructional design and OER discipline-specific learning studies (Liu, Johnson, & Haroldson, 2021; Liu, Johnson, & Mao, 2021). OER usability questionnaires were based on the pre-existing criteria of assessing multimedia learning objects (Benson et al., 2002; Kay & Knaack, 2008). These evaluative studies focused on learner experiences of content presentation, interactivity, navigation, and accessibility within a specific context and as case studies.

Evaluation of newly designed OER, seen as a new educational design research product within an evolving learning environment, can consist of various stages of testing, collecting perception and reflection data of soundness and feasibility from teachers, students, and designers (Liu, Johnson, & Haroldson, 2021; Liu, Johnson, & Mao, 2021). For the study presented in this paper, after an initial pilot, student feedback and instructor reflection have been primarily used as formative evaluation to guide the design and development of courses using the customized OER.

Evaluation and Reflective Implementation for OER Spread and Sustainability

Evaluative procedures can ensure the quality of OER and their sustained impact on learning, enhancing the realization of iterative and sustainable changes (de Carvalho et al., 2016; Kimmons, 2015; Tlili et al., 2020). The breadth of implementation is viewed as the foundation of promoting OER to wider adoption and retesting with evidence of learning effectiveness (Bodily et al., 2017). The reuse, replication, and modified reproduction can also be a method to generalize the EDR output (McKenney & Reeves, 2019). Therefore, both lines of validating OER quality through reviews and evaluation and identifying mechanisms of elevated discovery are needed.

A decade of OER development is found to be “characterized largely by a proliferation of content only” (DeVries, 2013, p.57). To move forward to an OER culture for diverse curriculum-level adoption, existing OER content needs to look for further development of learning activities, assessment items, continually enhanced navigational options and discoverability, and course integration strategies (Luo et al., 2020; Wiley et al., 2017). Multiple methods of data collection and sources on OER use and evaluation, beyond host institutions and disciplines, are recommended for OER research (Pitt et al., 2013). The evaluation of OER impact, however, has its unique opportunities and challenges. After a systematic review of 37 papers in peer-reviewed academic journals on OER studies, Luo, Hostetler, Freeman, and Stefaniak (2020) discovered a split perception of OER quality between faculty and students. Their review indicated that students perceived OER with favor mainly because of their openness and cost-saving, and that faculty had split views because of awareness or concerns of quality and lack of support for contextualized instructional strategy integration. They recommend incorporation of instructional strategies in close alignment with learning outcomes and newer models of supporting OER adoption, with enabling technologies for OER implementation.

Questions were also raised regarding the formalized review of OER versus traditional publishing procedure, breadth of reach, approaches of access, and regions and disciplines of reach for broader and sustainable OER adoption. Algers and Ljung (2014) used the fourth generation of Activity Theory and conducted a study on peer review of an animal welfare education OER. Data were collected from students, teachers, and scientists. The results indicated that “OER is a new kind of learning approach which can be based on participatory enquiry; and since openness is both the objectives and the instruments, a peer review assessment of the artifact cannot be comprehensive.” In contrast to the original intent of OER, “striving to share the same object between multiple activity systems complicates the issue of power and passion” (Algers & Ljung, 2014, p. 371).

Review and evaluation to ensure quality are only half of the journey to sustain OER. Building discoverable OER with search engine optimization (SEO) was recommended in the UKOER project report and by contributing scholars (McGill et al., 2013; Rolfe, 2016; Rolfe & Griffin, 2012). SEO is a suite of keyword design and related implementation mechanisms to enhance searchability of web-based content, which can be used for web-based marketing and research, content reach, and customer services (Chen-Yuan et al., 2011; Malaga, 2010). In the UKOER project, maximizing the reach of OER to broader audiences relied on the WordPress.org platform because of its easy integration of SEO. This highlighted the value of using and enhancing SEO to enable the discoverability of OER through refined, metadata-like keywords so that OER could be easily discovered by end users. It also raised the question of how OER integrate with content management systems or learning management systems for learner access, drawing increased attention to institutional support for an OER adoption culture of reuse, revision, remixing, and redistribution (Rolfe, 2016; Wiley et al., 2017). Ranking of OER, support and incentives, and possible adoption models were also studied or proposed in the global setting for OER sustainability (Koohang & Harman, 2007; Mishra & Singh, 2017; Tlili et al., 2020; Zaid & Alabi, 2020).

Truly open OER are suggested to be developed with existing technology and low-cost (and sustainable) media platforms in mind (McGill et al., 2013; Rolfe, 2016; Rolfe & Griffin, 2012). The development and implementation of OER can build in social media and free software components in its iterative refinement process, ideally with consistency for its sustainability. OER researchers also need to intentionally incorporate these strategies and tools in projects that have limited funding cycles. Therefore, a suite of tools with carefully designed research protocols for the OER evaluative implementation is presented in this paper. Understanding the feedback between learner experiences and valid and transparent reviews, and how it impacts revisions, is the core concern of this current study.

Research Context

Identification of minerals and rock textures using a commonly available piece of equipment called a petrographic microscope is a standard skill set for most geoscience curricula. Increasingly common is the use of more sophisticated equipment to measure physical and chemical properties of geologic materials, and the theory and practice of using these analytical methods are usually taught in middle- to upper-level required and elective courses in the geosciences. Learning in these classes must rely on lab equipment such as petrographic microscopes which cost thousands of dollars apiece, as well as specialized equipment which can cost hundreds of thousands of dollars or more. The classes also need to use one or more expensive and very specialized textbooks, and usually require small-group or individualized laboratory instruction. All these factors motivate the need for more open, accessible, interactive, and customized instructional content and learning activities. Therefore, OER are much sought after to meet student learning needs in laboratories, field trips, and undergraduate research, because of their low cost, potential for engaging learning activities with intentional design, and digitally more portable access.

The purpose of this project was to design, develop, and pilot OER on the parts, theory, and applications of the petrographic microscope to identify and analyze minerals, which could be used at the host university as well as in a variety of Earth Materials courses at other institutions. The OER is entitled Introduction to Petrology (Using the Petrographic Microscope). Proposed as a multi-stage project, this phase was funded by a spring 2019 Virtual Library of Virginia (VIVA) redesign grant and based on the design, development, and pilot implementation of a National Science Foundation (NSF) funded Analytical Methods in Geoscience OER for a blended learning course between 2016 and 2020. For the Introduction to Petrology OER, the project investigators completed 2 chapters, 13 units, and a preface, along with videos, 79 interactive H5P objects, and related self-assessment interactive exercises written by the geoscientist researcher in this project for parallel use in the OER and a learning management system. The OER is published at https://viva.pressbooks.pub/petrology/.

The local use of the OER has been in a required 4-credit mid-level course for the Bachelor of Science (B.S.) in Geology, specifically the Introduction to Petrology class. The course includes lecture, lab, and field components. It is offered at least once a year, and in recent years has been offered in the spring semester only. There is an approximate 20-person cap in each section of the course because of the limited number of microscopes and seats available to use in the classroom, and to accommodate the need for individualized attention from the instructor and laboratory assistant. The course is intended to be taken by sophomores and juniors, although some senior-level students (usually transfer students) also enroll for credits. The skills and competencies learned in this class are necessary for upper-level electives and the required capstone 6-week summer field course. Each course contains more than 10 lab assignments utilizing petrographic microscopes and other lab equipment such as a scanning electron microscope (SEM).

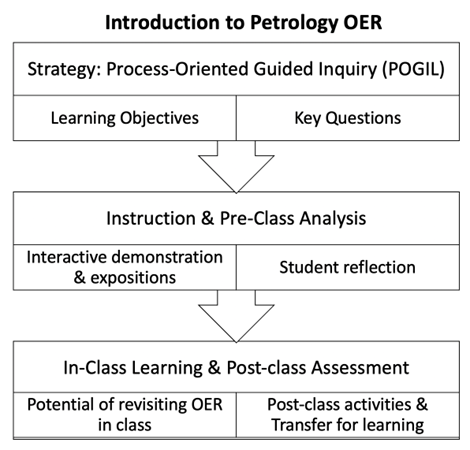

In this OER research, each module unit was created using a customized Process-Oriented Guided Inquiry Learning (POGIL) process, presenting learning objectives followed by comprehension questions and higher-order reflective prompts with visual and interactive expositions or demonstrations (see Figure 1) (Liu, Johnson, & Haroldson, 2021; Liu & Johnson, 2020; Moog et al., 2006). With the focus on online and digital content access and learning interactivity, the original grouping strategies in POGIL were not adopted in this customization. H5P learning objects, which are interactive learning objects based on HTML5, were developed for learner self-paced interactivity and self-assessment. Metadata were intentionally developed with these H5P objects to enhance their searchability.

For the purposes of easy access, open adoption, and sustainability after the funding cycles, the researchers published the video content on the Analytical Methods in Geoscience (AMiGEO) YouTube Channel, with YouTube auto-captioning edited to enhance the accuracy and accessibility of scientific terminology. Because of limited funding cycles and multiple collaborative partnerships, the core researchers were only able to edit the auto-captions of some of these videos for scientific accuracy. Project management, including tracking versions and archiving raw images and video footage, used a combination of physical portable hard drives and an institutionally subscribed Open Science Framework (OSF). Using OSF enabled the flexibility of switching the content between private and public modes. Each publicly shared object from OSF could carry a unique Digital Object Identifier (DOI) (Foster & Deardorff, 2017). These features were included in the OER design project management for future reuse.

Figure 1

Unit Design of Introduction to Petrology OER

The unit design of the Introduction to Petrology OER uses the Process-Oriented Guided Inquiry Learning (POGIL) strategy, which directs the formation of learning objectives and key questions, followed by instruction and pre-class analysis with interactive demonstration and exposition and student reflection. In-class learning and post-class assessment follow, with potential revisiting of the OER during in-class and post-class activities and transfer of learning.

Research Methods and Data Collection

Because of the novelty of OER design in geosciences with adapted POGIL strategies and the emerging evaluative angle in an authentic setting, the researchers have adopted an educational design research approach (McKenney & Reeves, 2019). This paper focuses on the stages of evaluation and reflection, and implementation and spread of this research study. Reflective and empirical data have contributed to the review and evaluation which “concerns early assessment of design ideas,” verified local viability for OER integration in the geoscience classes and produced feedback and reflection about wider implementation and spread (Larson & Lockee, 2019; McKenney & Reeves, 2019).

The researchers conducted iterative educational research cycles, which encompassed pursuing solutions through needs analysis, experimenting with aligning content development with active learning strategy integration, disseminating preliminary OER at geoscience and education research conferences and workshops, inviting review feedback, and attempting to build a community of geoscientists, librarians, and instructional designers and evaluators to sustain the reuse, refinement, and informed continuity of these OER. A mixed-methods design was adopted to conduct this educational design research through its analysis and exploration, design and construction, evaluation and reflection, and implementation and spread stages (McKenney & Reeves, 2019). After the Institutional Review Board approval of the research protocol, the researchers collected quantitative and qualitative data from undergraduate students, faculty members, participants at geoscience conferences, and reviewers, with their informed consent. The preliminary implementation of OER was accompanied by formative evaluation (Liu, Johnson, & Haroldson, 2021; Liu, Johnson, & Mao, 2021). Several approaches for data collection in this current study are presented below.

Student Perception and Performance

Students enrolled in two geoscience undergraduate classes in a public university on the east coast of the United States were invited to complete pre- and post-class surveys on their perception of using OER and knowledge gain in spring 2020 and spring 2021. The OER were piloted through a customized integration with the Canvas Learning Management System (LMS) in the host institution’s Introduction to Petrology class, according to the proposed project timeline approved by VIVA. Because of the immediate need for open access to instructional resources during the pandemic transition in spring 2020, the chapters on creating thin sections (polished slices of rock on glass slides which are used in the petrographic microscope) were also integrated into an upper-level elective course called Laboratory Techniques in Geology, which taught the theory and methods of a variety of laboratory equipment used in geoscience research. The pre-assessment data collection was interrupted by a system transition of the university survey platform at the beginning of spring 2020, after informed consent was collected from the classes. Later in the semester, the COVID-19 pandemic pivot to emergency remote learning affected the post-assessment survey deployment, along with the trying disruption to teaching and learning norms (Hodges et al., 2020). There was simply no room for the instructor, the researcher, and students to spare time and attention for the research side of this project. In addition, because of the COVID-19 lockdown, the equipment needed for the lab-based course was not available to the instructor or the students. Therefore, the originally proposed and IRB-approved class observations were not possible.

However, even in the pivot to emergency remote learning, the instructor persisted in teaching and used the OER in the Introduction to Petrology course. Student learning with the piloted content was assessed in a variety of ways, including pre- and post-class informal knowledge checks, informal Zoom poll “quizzes,” homework or lab assignments based upon questions in the OER, and summative assessments in the form of quiz or test questions. These were all in alignment with the class curriculum plan and objectives, as part of the class learning activities. In the Laboratory Techniques in Geology class, student learning was assessed with written report assignments based in part upon questions in the OER and evaluated with a standard rubric developed for an earlier project (Liu, Johnson, & Haroldson, 2021; Liu, Johnson, & Mao, 2021). In spring 2021, students in Introduction to Petrology were asked about their confidence in understanding materials in the Zoom polls and were also asked to provide feedback on each chapter in the OER as part of their homework assignments. All 19 students enrolled in this class gave consent to participate in the pre-assessment questionnaire, and 16 gave consent to the post-assessment questionnaire; both were administered with a web-based survey platform, QuestionPro. All data were collected in aggregate and anonymous form and with informed consent.

Reviewer Feedback

The OER were shared and reviewed at a workshop at the 2018 AGU (American Geophysical Union) Fall Meeting for user experience review (with the content developed through the NSF funding cycle) and as part of the Earth Educators’ Rendezvous (EER) conference in summer 2019. Written notes from the 2018 AGU meeting group review discussions were collected, and the audio conversation of the EER workshop, held in a large room with nearly 30 participants, was recorded and transcribed after the conference. Between fall 2019 and spring 2020, a science librarian and a geoscientist from a similar public university in a different state were invited to review the published OER. The reviewers were invited to mark up and comment on the content rigor, navigation, and accessibility of the OER, using the free web-based annotation software hypothes.is. One reviewer chose to use hypothes.is. The other wrote a review report and sent it to the project investigators via email. The comment function within PressbooksEDU was also enabled to gather feedback from those who would have access through class integration or URL search.

Reflective Implementation

The researchers also attempted a pilot call for implementation through social media (Twitter and Facebook). Data were collected on SEO through Google Analytics enabled by VIVA. YouTube Analytics data were collected from the Analytical Methods in Geoscience (AMiGEO) channel.

According to the originally planned research, data collection was proposed to include observations, usability tests, design documentation and logs, pre- and post-tests after pilot use of the developed OER content, conference workshop feedback from faculty and geoscience graduate students, content review feedback from librarians and geoscience faculty, and feedback from students using OER for class assignments. The STEM classroom observation checklist (Stearns et al., 2012), Koretsky, Petcovic, and Rowbotham’s (2012) Geoscience Authentic Learning Experience Questionnaire, Benson et al.’s (2002) Usability Test for e-Learning Evaluation, and the Motivated Strategies for Learning Questionnaire (MSLQ) (Pintrich & de Groot, 1990) were planned for evaluation and reflection in this study.

Unanticipated issues, challenges, and opportunities arose in the 2020-21 parts of this pilot study due to the COVID-19 pandemic and a change in the institutional survey platform. The second half of the spring 2020 semester was conducted solely online from home, with limited conferencing and internet resources for students and instructors and pandemic-mandated asynchronous teaching. This severely limited the Introduction to Petrology and Laboratory Techniques courses taught that semester and prevented meaningful evaluation of student experiences with OER. The Introduction to Petrology course was taught in spring 2021 but was completely rearranged due to a required two-week online-only period at the start of the semester, and a prerequisite course being taught solely online with no hands-on work. One benefit of this rearranged schedule was that the entire online OER microscopy module was used to teach the students this topic, first online and then as a support for laboratory exercises later in the semester. Improved technologies allowed student feedback through Zoom polls as well. The persistence of online content presence, learning access, and SEO with Google and YouTube analytics did provide valuable data for the results.

Results

This section presents the results of this study from 1) analysis of student performance and perception with OER adoption, 2) reviewers’ comments and the corresponding modification process, and 3) SEO analytics from Google and YouTube.

Student Learning Performance

These results provided the key viability data: evidence of the OER design intervention and of the effectiveness of its adoption in classes. Performance was measured through a combination of pre-class warm-up just-in-time reflections based on the key questions built into the interactive textbook, laboratory note-taking, post-class short quizzes, and assignments graded with a rubric generated in the NSF-funded project (DUE #1611798 and DUE #1611917).

The students in the spring 2020 Introduction to Petrology class did very well on the homework questions about the OER reading and, according to the class instructor’s notes, improved their average score from the quiz (at the beginning of the semester) to the two exam questions based on knowledge of mineral identification. For the spring 2021 Introduction to Petrology class, informal Zoom poll “quizzes” were launched at the beginning of online class lectures in January and February 2021 to engage students in completing online assignments and attending online lectures during the first half of the semester, when the course transitioned from an online-only to a hybrid format. These “quizzes” were not graded; they were given at the beginning of class to remind students of content covered previously. Several multiple-choice or multiple-answer questions were posted using Zoom polling, and students had 2-4 minutes to respond. Students were able to use notes or online materials if they wished, but the polls were meant as a quick review, so there was no time to search for responses at length.

Polls were conducted during five classes at the beginning of the semester. The poll on the first day of class asked students to recall information about minerals covered in the prerequisite course taken in the previous semester. On a scale from 1=very unsure to 5=very confident, the average student confidence in their responses on this first pre-module quiz was 2.4/5. Students were more confident about their responses to similar Zoom polls after covering use of the microscope in the OER, even with the class still meeting completely virtually. Students rated their confidence in understanding the parts of the microscope and how to use it as 4.1/5 after completing the OER, and rated their understanding of intermediate and advanced analytical procedures using the petrographic microscope as 3.2/5, even though they had not yet touched a microscope for any class.

There was one graded quiz and three exam questions in spring 2020 associated with student use of the OER content. The quiz contained 9 points of questions about mineral formulas and 21 points of questions about minerals in thin section. The average quiz grade remained the same (within error) as in the previous two semesters, even with the pandemic interruption in spring 2020: the average quiz score was 77.8, compared with 81.4 in spring 2019 and 76 in spring 2018. The exam covered the first half of the semester, which included many other topics and labs that apply the knowledge from the microscopy unit in the Intro to Petrology OER. Only three exam questions directly related to identifying minerals from formulas or in thin sections (with no additional skills required). Students did better on these three questions than on the exam as a whole: the average percentage correct was 90%, with 14 of 21 students answering all three correctly, whereas the average for the individual part of the exam (53 questions) was 64%.

In the pre- and post-assessment questionnaires administered with QuestionPro (a total of 31 Likert-scale items: 1=strongly disagree to 5=strongly agree), 5 questions focused on student learning expectations, experiences, and confidence in laboratory-based classes. The pre-assessment questionnaire collected demographic information and previous geoscience learning experience for the spring 2020 petrology class. Among the 19 pre-assessment participants who gave research participation consent, 47% identified as female and 53% as male; 82% as White, 12% as Hispanic and Latinx, and 6% as Asian and Asian American; 35% were seniors, 35% juniors, 18% sophomores, and 12% other. Previously completed relevant courses varied widely, from physical geology, mineralogy, historical geology, and introduction to environmental studies to calculus or statistics (Appendix A).

There were student perceived gains regarding the following laboratory-based learning experiences, with an average gain of .21 (Appendix B):

- To reflect on what I was learning (+.49)

- To discuss elements of my investigation with classmates or instructor (+.35)

- To be confident when using equipment (+.29)

- To share the problems that I encountered during my investigation and seek input (+.28)
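The gains listed above are differences between post- and pre-assessment means on the 1-5 Likert scale. A minimal sketch of that calculation follows; the function name `item_gain` and the response values are hypothetical illustrations, not the study's instrument or data.

```python
from statistics import mean

def item_gain(pre, post):
    """Return mean(post) - mean(pre) for one Likert item (1-5 scale)."""
    return mean(post) - mean(pre)

# Hypothetical responses for a single questionnaire item.
# These values are illustrative only and are NOT data from this study.
pre_responses = [3, 4, 3, 2, 4]
post_responses = [4, 4, 3, 3, 5]

gain = item_gain(pre_responses, post_responses)
print(f"Perceived gain: {gain:+.2f}")
```

Because the gain is a difference of group means, this sketch does not require the same students to appear in both surveys, which assumes unpaired pre- and post-assessment samples.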

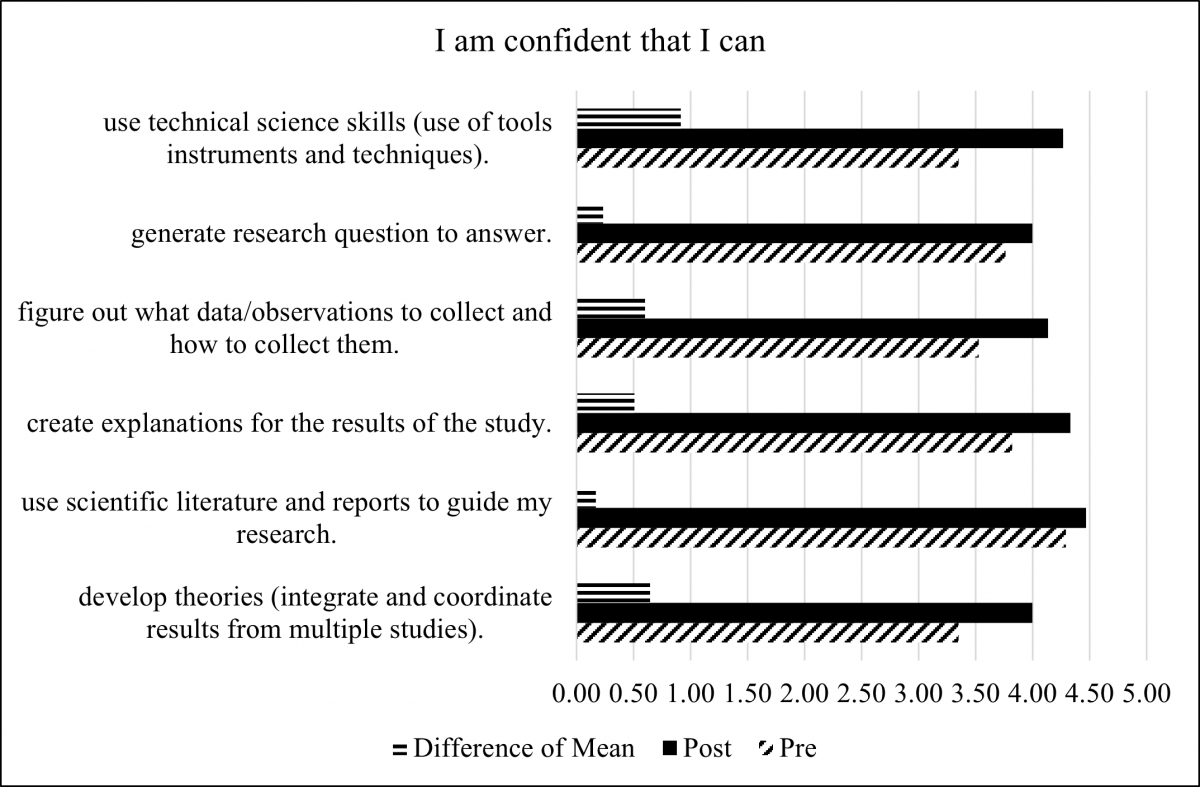

More importantly, students gained confidence through the use of OER, with an average gain of .52 based on the comparison of pre- and post-assessment survey results (Figure 2). The instructor reflected, “this is not significantly different from previous semesters. So, we aren't hurting student progress by using the online [OER] materials! I sort-of had the materials together in 2018 and 2019, but not in the nice interactive current form.” The results coincided with those from previous studies: “While remaining silent on the issue of whether OER use is associated with better learning outcomes, Hilton concludes that OER use is not associated with decreases in student learning” (Wiley et al., 2017, p. 64).

Student Perception of Learning with OER

Students also provided qualitative feedback through an open-ended question in the post-assessment, including the following:

- This course was very helpful in learning and understanding thin sections which was something I struggled with before this class.

- I was able to get hands-on lab time which I think is necessary for the identification of rocks. My confidence was not where I hoped it was at the begging of the semester, but now as we have completed the course I feel far more confident in my abilities.

- This course did a great job of showing how to use petrographic microscopes and collect data using observations and overall theories.

Figure 2

Pre- and Post- Assessment of Student Confidence

This is a bar chart showing student confidence gains in the following: I can generate research question to answer.

For most of the graded homework assignments on the microscopy modules in spring 2021, students were asked to respond to this question at the end of the assignment:

How could this page be improved? Did you find any errors, or can you think of a better way to present the materials on the textbook site?

Although some students simply responded that it was “good” or that they couldn’t think of much for improvement, many did provide comments. These comments included technical and content suggestions or were a mixture of both. For example, one student wished that an image could be enlarged, and another would like an interactive diagram to work in a different way. Content comments tended to focus on applications, such as how to use a diagram to complete a task or question.

In terms of perception of using OER, students were motivated to provide comments when the activity became part of an assignment for credit. Each time students were assigned an OER module, which involved completing the reading and answering the online questions, there was a corresponding graded assignment in the course in Canvas. The last question in each graded homework assignment invited students to provide open feedback on how to improve the OER: what content to include, or how to present the material better. Students usually provided varied responses. As long as they wrote a response, the instructor gave them credit for that question.

In addition to positive feedback on the OER, students also suggested improvement of design layout, interactivity, and content enrichment with excerpts like the following:

- “It would be nice if on the question about the microscope parts they put a model of like a side view of a microscope so that I can reference to where each part is.”

- “I like the quizzes at the end of each section. For this section, I think the mini-quiz helped me understand what causes the issues and how they can be resolved.”

- “I did not find any errors, and it really cleared up some misconceptions I had on pleochroism. It would be nice to see more pictures.”

Students were also asked to complete pre- and post-surveys (Koretsky et al., 2012), before using this interactive OER textbook and after using it for a semester, to measure perceived changes in knowledge and attitude. The questionnaires covered student perceptions of learning problem-solving skills, being confident when using equipment, being intrigued by the instruments, using observations to understand the physical or chemical properties of the sample, interpreting the data beyond only doing calculations, finding the procedures simple to do, developing confidence in the laboratory, thinking about what is happening at the atomic or molecular level, and being excited to do laboratory analyses. Because the survey platform transition resulted in a failure to capture the pre-assessment at the beginning of the semester, and because of the pandemic hiatus toward the end of the spring 2020 semester, the data from these surveys were not valid for analysis.

The course instructor’s reflection indicated that students asked proactive (and well-formulated) questions after viewing the OER online. The reflection also revealed that students tended to ask more questions relevant to the operation of the instruments. Students’ questions drew on information from the online OER, which they were required to review before their lab sessions.

Review Feedback

The researchers adopted several approaches to collecting review feedback in this educational design research project. The research team conducted workshops at the AGU and Earth Educators’ Rendezvous conferences, focusing on preliminary user experience and navigation of the content before the OER was formally published. The geoscience expert on the research team presented the techniques of OER content presentation with the PressbooksEDU platform and the development of interactive H5P learning objects. Participants provided suggestions on the ease of navigation of the OER and on learning management system integration. These were incorporated into the later development and pilot implementation of the OER, for example, by adding more subheadings and hyperlinks to primary sources.

The hypothes.is annotation review from the science librarian focused on the navigation of the published content, consistency of references and acknowledgements, and accessibility of images within interactive H5P learning objects. The content was modified accordingly, as time allowed and before the traumatic pandemic transitions; the modifications included adjusting font size in Adobe Illustrator images, reference formatting, and alt text in Pressbooks media. Content suggestions from a geoscientist at another university enriched the resources linked to the OER later in spring 2020.

Another result was related to the commenting features in PressbooksEDU. Comments could come from anyone who had access to the OER through search engines or third-party links. There was some very positive feedback, like “I really like how you have structured everything about your questions and answers I’ll like to need more to answer”. There were also “junkish” comments, given the openness. Incorporating review feedback was very time consuming, especially during the COVID-19 pandemic and while anticipating the close-out of the funding cycle. However, this formal and informal review feedback provided direction for future project development, such as attention to accessibility when selecting technologies and managing workflow, and balancing automated feedback in interactive informal learning assessment objects with a human touch.

Wider Reach and Reflective Implementation

The researchers planned a launch campaign in spring 2020, in line with the funding cycle laid out in the grant proposal. Starting in February 2020, Twitter and Facebook (now Meta) were used to disseminate the published OER. Because of the researchers’ established professional networks, the retweets mostly occurred among professionals and educators in STEM, learning science, and instructional design. Nearly 720 retweets yielded valuable input, including a suggestion to add more meaningful feedback for users upon completion of interactive H5P questions.

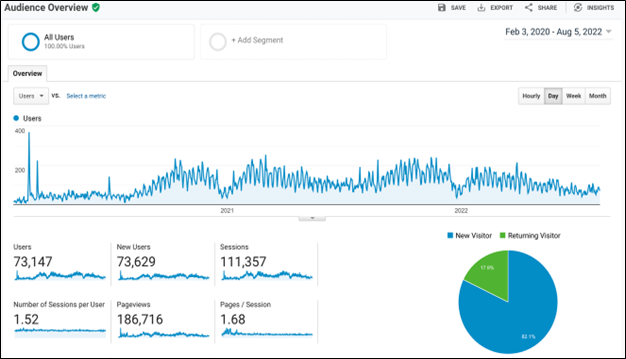

Figure 3

Total Usage and Page Views between February 2020 and August 2022 of Introduction to Petrology on Google Analytics

Google Analytics - Total usage and page views between Feb 2020 and Aug 2022. The graph of users through time shows a weekly cycle of higher and lower use, and an overall increase of use in the fall of 2020 continuing through 2022. There were 73,000 unique users during this time, and 73,147 active users at the time of the screen capture.

The VIVA system has enabled Google Analytics for the Introduction to Petrology OER, which serves the reflective implementation of the OER with SEO strategies. “The four principal sources of traffic to a site include direct views where the visitor has knowledge of the URL, organic traffic from search engine retrieval, referrals where the URL has been placed as a ‘back-link’ in another location, and via social media. Typical SEO activity includes the researching of appropriate keywords that are then strategically placed ‘on-site’ within the written content, alongside ‘off-site’ marketing activity” (Rolfe, 2016, p. 298). Figures 3, 4, 5, and 6 are the Google Analytics of Introduction to Petrology, with the researchers’ Twitter promotion and retweets between February 2020 and August 2022. The Google Analytics of the OER Introduction to Petrology documented that there were 186,716 page views on different continents globally, 73,147 users, 17.9% returning users, and a highest point of 4,101 active users on a 28-day interval in October 2021 (Figures 3 and 4).
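The headline Google Analytics figures reported above can be combined into two simple derived indicators. The short sketch below is illustrative arithmetic only, not an additional analysis from the study; the variable names are hypothetical, and it assumes the 17.9% returning-user share applies to the 73,147 total users.

```python
# Figures reported from Google Analytics (February 2020 to August 2022).
page_views = 186_716
users = 73_147
returning_share = 0.179  # assumption: share of the 73,147 total users

# Average number of page views per user over the whole period.
views_per_user = page_views / users

# Approximate number of returning users implied by the reported share.
returning_users = round(users * returning_share)

print(f"{views_per_user:.2f} page views per user")
print(f"about {returning_users:,} returning users")
```

Under these assumptions, the reported totals correspond to roughly 2.55 page views per user and about 13,093 returning users.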

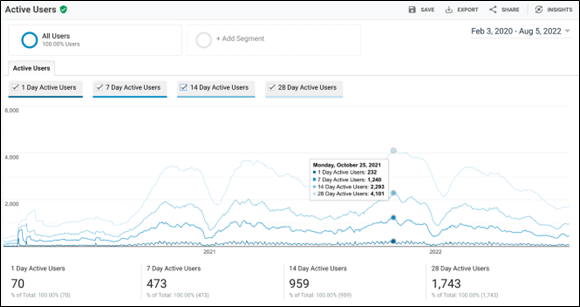

Figure 4

Active Users Between February 2020 and August 2022 of Introduction to Petrology on Google Analytics

Google Analytics screenshot showing active users for the Petrology OER between February 2020 and August 2022. This graph shows the highest monthly activity was in October 2021 with 4,101 users.

Rolfe noted that “an additional digital marketing technique is the creation of content in multiple formats for easy dispersal across the web, and this was embedded within our projects and also served to promote interoperability and OER accessibility” (2016, p. 298). This was also preliminarily verified in the Sait Kyzy and Ismailova (2022) study of SEO used to promote Moodle-based digital instructional materials. In the OER project of this paper, Google Analytics showed that 53.29% of access came from standard computers and 45.29% from mobile devices, meaning that mobile access deserves equal weight in the development and promotion strategies for these OER (Figure 5).

Figure 5

Distribution of Access Technologies

This graph shows the distribution of technologies used to access the VIVA petrology OER. 53% of students used a desktop, 45% used a mobile device and 1.4% used a tablet.

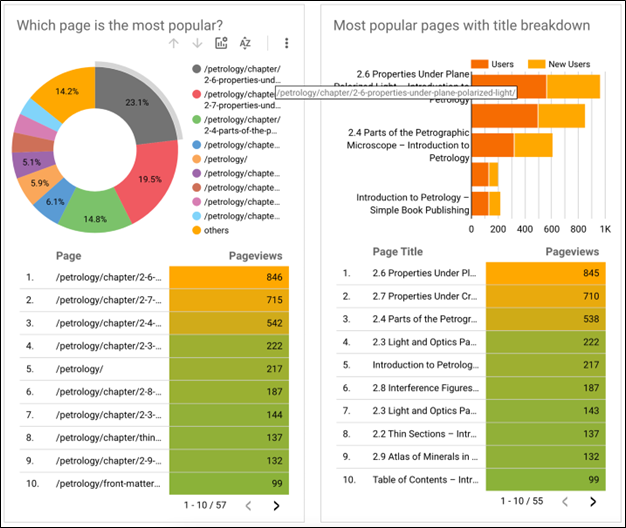

The usage information provided by Google Analytics identified which content was most sought after by the audience. The most popular content pages for Introduction to Petrology were 2.6 and 2.7, the simple and intermediate applications of the petrographic microscope to identify minerals, followed by 2.4, which describes the parts of the petrographic microscope (Figure 6). Given limited resources and time, these analytics informed the further refinement of the most frequently visited chapters.

Figure 6

The Most Popularly Visited Pages between February 2020 and August 2022

The most popularly visited content for the Petrology OER between February 2020 and August 2022. A pie chart shows that the most popular pages were sections 2.6 and 2.7, covering basic to intermediate concepts of how to use the microscope, followed by section 2.4 on parts of the microscope.

These OER texts are usually connected with video expositions or demonstrations on the AMiGEO YouTube Channel [https://www.youtube.com/@amigeo]. Therefore, the YouTube Studio analytics have been part of the SEO data collection.

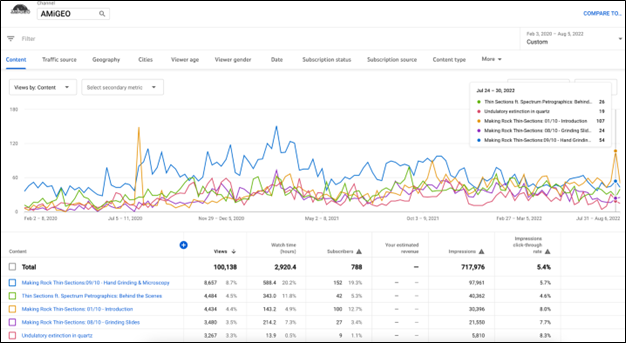

These YouTube analytics revealed that the most viewed videos were demonstrations of how petrographic thin sections are created, namely Hand Grinding & Microscopy and the thin section creation process at Spectrum Petrographics (Figure 7). The AMiGEO Channel analytics also confirmed previous SEO research finding that metadata-driven backlink strategies optimize visits to OER (Rolfe, 2016). In a more recent SEO study, page content and site organization structure, together with marketing strategies, were found to be related to the visibility of online instructional content at Central Asian universities (Sait Kyzy & Ismailova, 2022).

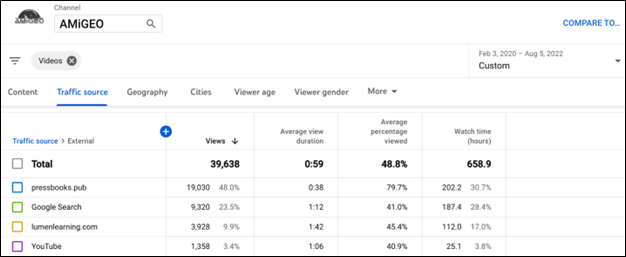

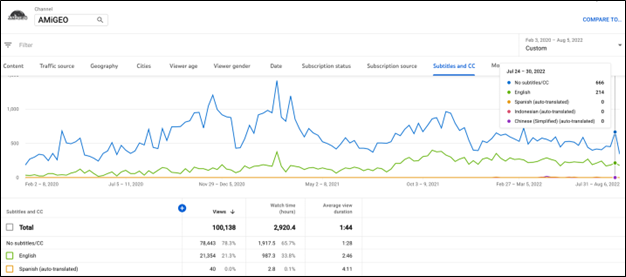

In this Introduction to Petrology OER, the VIVA-sponsored and maintained Pressbooks.pub site accounted for nearly half (48%) of the traffic arriving from sources external to direct YouTube visits (Figure 8). The YouTube Analytics also provided information about user accessibility needs. Of the 100,138 views between February 2020 and August 2022, 21% used English subtitles/CC, which accounted for 33.8% of a total of 2,920 hours of watch time (Figure 9). The closed caption usage analytics confirmed the value of the researchers’ edits to the auto-generated English YouTube captions before publication, which ensured scientific accuracy while supporting accessibility.
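The caption figures above can be translated into approximate absolute quantities. The sketch below is illustrative arithmetic under the assumption that the 21% figure is a share of the 100,138 views and the 33.8% figure is a share of the 2,920 watch hours; the variable names are hypothetical and the outputs are not additional study results.

```python
# Figures reported from YouTube Analytics (February 2020 to August 2022).
total_views = 100_138
total_watch_hours = 2_920
cc_view_share = 0.21    # assumption: share of views with English subtitles/CC on
cc_watch_share = 0.338  # share of watch time with subtitles/CC on

# Approximate number of views and watch hours with captions enabled.
cc_views = round(total_views * cc_view_share)
cc_watch_hours = total_watch_hours * cc_watch_share

# Ratio above 1 means captioned views ran longer than average.
relative_watch_time = cc_watch_share / cc_view_share

print(f"about {cc_views:,} captioned views")
print(f"about {cc_watch_hours:.0f} captioned watch hours")
print(f"relative watch time per captioned view: {relative_watch_time:.2f}")
```

Under these assumptions, captioned views account for roughly 987 watch hours, and each captioned view lasted about 1.6 times as long as the average view, which is consistent with the value the authors place on accurate captions.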

Figure 7

Engagement with Video Content on AMiGEO YouTube Channel

Most visited AMiGEO YouTube video content from February 2020 to August 2022. The most commonly visited videos included content about how to prepare samples for microscope analysis in the form of thin sections of rock adhered to glass slides.

Figure 8

How the Audience Reaches the AMiGEO YouTube Channel

This table shows how the audience reaches the AMiGEO YouTube videos. Most find the videos through the OER (Virtual Library of Virginia [VIVA] Pressbooks), followed by Google searches.

Figure 9

Engagement with Video Subtitles/CC on the AMiGEO YouTube Channel

This graph shows viewer engagement with subtitles / closed captions on AMiGEO YouTube videos. 21% of users used the provided English subtitles.

Conclusion and Discussion

This paper reports on part of a multi-phase project of OER design, development, and potential broad implementation, using an evaluative lens to answer the question: How can a series of geoscience OER be reviewed and evaluated to optimize their reach, use, and reuse? The OER is published through an open publishing platform sponsored by an academic library consortium and gives students access to interactive content at no cost. The interactive content, aligned with the modified POGIL questions and self-assessment, provides opportunities for students to engage in close observation of instrumentation and gain analytical skills through self-reflection. Each unit, with its accompanying customized POGIL components, provides a basis for instructional strategy recommendations for reuse and adoption.

This strategy was very welcome during the COVID-19 pandemic in spring 2020 and spring 2021, when the actual instrumentation became inaccessible or was accessible only some of the time, which indicated the need for more laboratory-based OER like the one in this study. The results of this educational design research, along with many OER studies, confirmed that OER have the potential to increase student savings by integrating free materials into any course that teaches students about rocks and minerals with instrumentation for physical and chemical analysis. With proper instructional design, assessment methods, and integration of active learning strategies, these OER were perceived favorably by students and elevated student confidence in geoscience scientific instrumentation and laboratory-based learning (Liu, Johnson, & Haroldson, 2021; Liu, Johnson, & Mao, 2021).

This current study provides valuable insight and unique research procedures and results for reviewing and evaluating OER adoption and implementation in STEM education, with cost-efficient and free software. In addition to adapting online POGIL strategies to scaffold students’ inquiry in geosciences, using social media and open-source software has substantially reduced the cost of publication, supported management of OER files and projects, ensured accessibility with closed captioning, and enabled transparent review. These also provide purposeful and interactive guidance for edits, adoption, and broadening the impact.

Along with engaging students’ learning through interactive content and activities, OER textbooks will help develop sustained self-regulated learning for students with diverse abilities, at no textbook expense. Since educational design research is associated with analysis strategies in policy analysis, field portrait, and perception polls (McKenney & Reeves, 2019), this study can also provide practical techniques and strategies to promote OER visits: coordinating the use of multiple methods, embedding learning-centered high-traffic metadata in the online content, and using low- or no-cost SEO to reach audiences across disciplines, in different geographical locations, and beyond an individual institution.

When reviewing and analyzing these results in reference to OER literature, the researchers of this project also fully recognize limitations and challenges of sustaining the continued development and effective adoption of these OER. Faculty awareness of OER and their reuse remains limited (Baas, Admiraal, & Berg, 2019). There are still tensions in applying robust research methodology in open access of OER by diverse populations (Pitt et al., 2013). For instance, the results of learning engagement with OER in this study are constrained by the small sample size in real-world teaching practice (vs. an experimental environment) and interruption of data collection in the trying COVID-19 pandemic pivot to emergency remote learning. In the meantime, the costly design and development of quality OER count on financial support from funding agencies, institutional resource and technology infrastructure support, and dedication of individuals. The results of this study reveal that there are still barriers between being truly open and the ability and resources to evaluate and edit the OER content to sustain their quality and reuse.

“Studies repeatedly alluded to systemic burdens embedded within higher education institutions that make it difficult not only to start an OER initiative, but to continue it after initial funding runs out. . . . Such a format of implementation narrows the work burden to individuals rather than sharing the load and does not address cultural and structural barriers across an institution” (Luo et al., 2020, p. 146). Given funding cycles, phased interest, and the need for OER developers, including subject matter experts (SMEs; in this project, the geoscientist), instructional designers, and media developers, to balance career and research, further philanthropic and systematic investment in OER may need to consider alternative models to increase quality and retain openness (Downes, 2007; Wiley et al., 2017).

Further, after OER creation and repository compilation, the discoverability of discipline-specific OER requires the combined expertise of library science and instructional design as well as sustained support from institutions and professional communities (Luo et al., 2020; Tlili et al., 2020). Sustainable adoption and reuse of OER, which keeps OER in use over the long term, relies on cohesive coordination of OER adoption, institutional steering, and academic and scholarly culture. For example, rpk Group and State University of New York (SUNY) (2019) have proposed a framework of OER adoption that points out the supportive roles of academic libraries in OER source curation and discipline-specific support with professional development. Studies have also shown that libraries can play connective roles at subject and institution levels, reducing barriers to OER adoption through institutional strategic resource and infrastructure support (Baas, Admiraal, & Berg, 2019; Thompson & Muir, 2020).

As DeVries (2013) suggests, acknowledging challenges encourages the pursuit of solutions for effectively reusing OER and catalyzing an institution-based or community-based culture that fully repurposes these open resources. Building communities of users is recommended by OER researchers and projects like UKOER, after a multi-year creation and adoption of OER in multiple UK higher education institutions (McGill et al., 2013; Rolfe & Griffin, 2012). The OER development community can also involve students in creating and reusing “renewable assignments” (Wiley et al., 2017). Wiley and other researchers (2017) have summarized several well-known renewable assignment OER projects with students as creators and documented the success of this integration in student learning. There have also been attempts to experiment with a variety of business-, community-, or academic-based OER building and adoption models (de Carvalho et al., 2016; Downes, 2007; Koohang & Harman, 2007). Baas, Admiraal, and Berg (2019) have also verified the need for an OER user community in their study at a large university in the Netherlands.

These will continue to evolve as OER development, discovery, and adoption are facilitated and optimized through SEO tools and strategies along with the maturity in conceptualizing and operationalizing OER use and reuse. The procedures and techniques of research projects like the educational design research project reported in this paper are valuable and significant for instructors, instructional designers, media producers, OER platform providers, librarians, and stakeholders in open education and OER decision- and policy making.

Acknowledgements

The authors acknowledge the support of the National Science Foundation (NSF DUE #1611798 and DUE #1611917), the Virtual Library of Virginia (VIVA), and James Madison University in making possible the design, development, implementation, dissemination, and evaluative research of these OER. Acknowledgement also goes to the many collaborators on these OER projects and to the reviewers of the manuscript throughout its evolution.

References

Abdulwahed, M., & Nagy, Z. K. (2009). Applying Kolb's experiential learning cycle for laboratory education. Journal of Engineering Education, 98 (3), 283-294. https://doi.org/10.1002/j.2168-9830.2009.tb01025.x

Ackovska, N., & Ristov, S. (2014). OER approach for specific student groups in hardware-based courses. IEEE Transactions on Education, 57 (4), 242-247. https://doi.org/10.1109/TE.2014.2327007

Algers, A., & Ljung, M. (2014). Peer review of OER is not comprehensive- Power and passion call for other solutions. European Distance and E-Learning Network (EDEN) Conference Proceedings, 2, 365–374. https://www.ceeol.com/search/article-detail?id=847505

Allen, G., Guzman-Alvarez, A., Molinaro, M., & Larsen, D. (2015). Assessing the impact and efficacy of the open-access ChemWiki textbook project. Educause Learning Initiative Brief, 1-8. https://library.educause.edu/resources/2015/1/assessing-the-impact-and-efficacy-of-the-openaccess-chemwiki-textbook-project

Alves, L. S., & Granjeiro, J. M. (2018, June). Development of digital Open Educational Resource for metrology education. In Journal of Physics: Conference Series (Vol. 1044, No. 1, p. 012022). IOP Publishing. https://iopscience.iop.org/article/10.1088/1742-6596/1044/1/012022/meta

Atenas, J., & Havemann, L. (2013). Quality assurance in the open: an evaluation of OER repositories. INNOQUAL-International Journal for Innovation and Quality in Learning, 1 (2), 22-34. https://eprints.bbk.ac.uk/id/eprint/8609/1/30-288-1-PB.pdf

Baas, M., Admiraal, W. F., & Berg, E. (2019). Teachers' adoption of open educational resources in higher education. Journal of Interactive Media in Education, 2019 (1), 1-11.

Bailey, C. P., Minderhout, V., & Loertscher, J. (2012). Learning transferable skills in large lecture halls: Implementing a POGIL approach in biochemistry. Biochemistry and Molecular Biology Education, 40 (1), 1-7. https://doi.org/10.1002/bmb.20556

Benson, L., Elliott, D., Grant, M., Holschuh, D., Kim, B., Kim, H., ... & Reeves, T.C. (2002). Usability and instructional design heuristics for e-learning evaluation (pp. 1615-1621). Association for the Advancement of Computing in Education (AACE). https://www.learntechlib.org/p/10234/

Blank, L. M. (2000). A metacognitive learning cycle: A better warranty for student understanding? Science Education, 84 (4), 486-506. https://doi.org/10.1002/1098-237X(200007)84:4%3C486::AID-SCE4%3E3.0.CO;2-U

Bodily, R., Nyland, R., & Wiley, D. (2017). The RISE Framework: Using learning analytics to automatically identify Open Educational Resources for continuous improvement. International Review of Research in Open and Distributed Learning, 18 (2), 103–122. https://doi.org/10.19173/irrodl.v18i2.2952

Brown, A. L. (1992). Design experiments: Theoretical and methodological challenges in creating complex interventions in classroom settings. The Journal of the Learning Sciences, 2 (2), 141–178. https://doi.org/10.1207/s15327809jls0202_2

Brown, J. A. (2018). Producing scientific posters, using online scientific resources, improves applied scientific skills in undergraduates. Journal of Biological Education, 1-11. https://doi.org/10.1080/00219266.2018.1546758

Chen-Yuan, C., Bih-Yaw, S., Zih-Siang, C., & Tsung-Hao, C. (2011). The exploration of internet marketing strategy by search engine optimization: A critical review and comparison. African Journal of Business Management, 5 (12), 4644-4649. https://doi.org/10.5897/AJBM10.1417

Clements, K. I., & Pawlowski, J. M. (2012). User‐oriented quality for OER: Understanding teachers' views on re‐use, quality, and trust. Journal of Computer Assisted Learning, 28 (1), 4-14. https://doi.org/10.1111/j.1365-2729.2011.00450.x

Collins, A. M. (1992). Towards a design science of education. In E. Scanlon & T. O’Shea (Eds.), New directions in educational technology (pp. 15–22). Springer. https://doi.org/10.1007/s10551-006-9281-4

Cooney, C. (2017). What impacts do OER have on students? Students share their experiences with a health psychology OER at New York City College of Technology. International Review of Research in Open and Distributed Learning, 18 (4), 155–178. https://doi.org/10.19173/irrodl.v18i4.3111

Creswell, J. W., & Creswell, J. D. (2018). Research design: Qualitative, quantitative, and mixed methods approaches. Sage Publications.

de Carvalho, C. V., Escudeiro, P., Rodriguez, M. C., & Nistal, M. L. (2016). Sustainability strategies for open educational resources and repositories. 2016 XI Latin American Conference on Learning Objects and Technology (LACLO), 1–6. https://ieeexplore.ieee.org/document/7751806

Dennen, V. P., & Bagdy, L. M. (2019). From proprietary textbook to custom OER solution: Using learner feedback to guide design and development. Online Learning, 23 (3), Article 3. https://doi.org/10.24059/olj.v23i3.2068

DeVries, I. (2013). Evaluating Open Educational Resources: Lessons learned. Procedia - Social and Behavioral Sciences, 83, 56–60. https://doi.org/10.1016/j.sbspro.2013.06.012

Downes, S. (2007). Models for sustainable Open Educational Resources. Interdisciplinary Journal of E-Learning and Learning Objects, 3 (1), 29–44. https://www.learntechlib.org/p/44796/

Entwistle, N., & Tait, H. (1990). Approaches to learning, evaluations of teaching, and preferences for contrasting academic environments. Higher Education, 19 (2), 169-194. https://doi.org/10.1007/BF00137106

Entwistle, N., Tait, H., & McCune, V. (2000). Patterns of response to an approach to studying inventory across contrasting groups and contexts. European Journal of Psychology of Education, 15 (1), 33-48. https://doi.org/10.1007/BF03173165

Farrell, J. J., Moog, R. S., & Spencer, J. N. (1999). A guided-inquiry general chemistry course. Journal of Chemical Education, 76 (4), 570-574. https://doi.org/10.1021/ed076p570

Foster, E. D., & Deardorff, A. (2017). Open Science Framework (OSF). Journal of the Medical Library Association: JMLA, 105 (2), 203–206. https://doi.org/10.5195/jmla.2017.88

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., & Wenderoth, M. P. (2014). Active learning increases student performance in science, engineering, and mathematics. Proceedings of the National Academy of Sciences, 111 (23), 8410-8415. https://doi.org/10.1073/pnas.1319030111

Gaba, A. K., & Prakash, P. (2017). Open Education Resources (OER) for skill development. Journal of Educational Planning and Administration, 31 (4), 277-287.

Harris, D., & Schneegurt, M. A. (2016). The other open-access debate: Alternate educational resources need to be further developed to counteract an increasingly costly textbook burden on university students. American Scientist, 104 (6), 334-337.

Hodges, C. B., Moore, S., Lockee, B. B., Trust, T., & Bond, M. A. (2020). The difference between emergency remote teaching and online learning. EDUCAUSE Review. https://er.educause.edu/articles/2020/3/the-difference-between-emergency-remote-teaching-and-online-learning

Jonassen, D. H., & Rohrer-Murphy, L. (1999). Activity theory as a framework for designing constructivist learning environments. Educational Technology Research and Development, 47 (1), 61-79. https://doi.org/10.1007/BF02299477

Kay, R. H., & Knaack, L. (2008). A multi-component model for assessing learning objects: The learning object evaluation metric (LOEM). Australasian Journal of Educational Technology, 24 (5), Article 5. https://doi.org/10.14742/ajet.1192

Kimmons, R. (2015). OER quality and adaptation in K-12: Comparing teacher evaluations of copyright-restricted, open, and open/adapted textbooks. International Review of Research in Open and Distributed Learning, 16 (5), 39-57. https://doi.org/10.19173/irrodl.v16i5.2341

Kolb, D. A. (2014). Experiential learning: Experience as the source of learning and development. FT Press.

Koohang, A., & Harman, K. (2007). Advancing sustainability of open educational resources. Issues in Informing Science & Information Technology, 4. https://proceedings.informingscience.org/InSITE2007/IISITv4p535-544Kooh275.pdf

Koretsky, C. M., Petcovic, H. L., & Rowbotham, K. L. (2012). Teaching environmental geochemistry: An authentic inquiry approach. Journal of Geoscience Education, 60 (4), 311-324. https://doi.org/10.5408/11-273.1

Land, S., & Jonassen, D. (Eds.). (2012). Theoretical foundations of learning environments (2nd ed.). Routledge. https://doi.org/10.4324/9780203813799

Larson, M. B., & Lockee, B. B. (2019). Streamlined ID: A practical guide to instructional design. Routledge. https://doi.org/10.4324/9781351258722

Lawson, A. E. (1989). A theory of instruction: Using the Learning Cycle to teach science concepts and thinking skills. NARST Monograph, Number One, 1989. https://files.eric.ed.gov/fulltext/ED324204.pdf

Liaw, S. S., Huang, H. M., & Chen, G. D. (2007). An activity-theoretical approach to investigate learners’ factors toward e-learning systems. Computers in Human Behavior, 23 (4), 1906-1920. https://doi.org/10.1016/j.chb.2006.02.002

Liu, J. C., & Johnson, E. (2020). Instructional development of media-based science OER. TechTrends, 64 (3), 439-450. https://doi.org/10.1007/s11528-020-00481-9

Liu, J. C., Johnson, E. A., & Mao, J. (2021). Interdisciplinary development of geoscience OER: Formative evaluation and project management for instructional design. In B. Hokanson, M. Exter, A. Grincewicz, M. Schmidt, & A. A. Tawfik (Eds.), Intersections Across Disciplines (pp. 209-223). Springer. https://doi.org/10.1007/978-3-030-53875-0_17

Liu, J. C., Johnson, E., & Haroldson, E. (2021). Blending geoscience laboratory learning and undergraduate research with interactive Open Educational Resources. In A. G. Picciano, C. D. Dziuban, C. R. Graham, & P. D. Moskal (Eds.), Blended Learning: Research Perspectives, Volume 3 (pp. 315-332). Routledge. https://doi.org/10.4324/9781003037736

Luo, T., Hostetler, K., Freeman, C., & Stefaniak, J. (2020). The power of open: Benefits, barriers, and strategies for integration of open educational resources. Open Learning: The Journal of Open, Distance and e-Learning, 35 (2), 140–158. https://doi.org/10.1080/02680513.2019.1677222

Malaga, R. A. (2010). Search engine optimization—black and white hat approaches. In Advances in Computers (Vol. 78, pp. 1-39). Elsevier. https://doi.org/10.1016/S0065-2458(10)78001-3

McGill, L., Falconer, I., Dempster, J. A., Littlejohn, A., & Beetham, H. (2013). UKOER/SCORE Review report. https://ukoer.co.uk/synthesis-evaluation/ukoer-review-report/

McKenney, S., & Reeves, T. (2019). Conducting educational design research (2nd ed.). Routledge. https://doi.org/10.4324/9781315105642

Meyer, J. H., & Parsons, P. (1989). Approaches to studying and course perceptions using the Lancaster Inventory—a comparative study. Studies in Higher Education, 14 (2), 137-153. https://doi.org/10.1080/03075078912331377456

Mishra, S., & Singh, A. (2017). Higher education faculty attitude, motivation and perception of quality and barriers towards OER in India. In C. Hodgkinson-Williams & P. B. Arinto (Eds.), Adoption and impact of OER in the Global South (pp. 425-456). African Minds.

Moog, R. S., Spencer, J. N., & Straumanis, A. R. (2006). Process-Oriented Guided Inquiry Learning: POGIL and the POGIL project. Metropolitan Universities, 17 (4), 41–52. https://journals.iupui.edu/index.php/muj/article/view/20287

Piaget, J. (1964). Part I: Cognitive development in children: Piaget development and learning. Journal of Research in Science Teaching, 2 (3), 176-186. https://eric.ed.gov/?id=EJ773455

Pintrich, P. R., & de Groot, E. V. (1990). Motivational and self-regulated learning components of classroom academic performance. Journal of Educational Psychology, 82 (1), 33-40. https://doi.org/10.1037/0022-0663.82.1.33

Pitt, R., Ebrahimi, N., McAndrew, P., & Coughlan, T. (2013). Assessing OER impact across organisations and learners: Experiences from the Bridge to Success project. Journal of Interactive Media in Education, 2013 (3), 17. https://doi.org/10.5334/2013-17

Plomp, T., & Nieveen, N. (2007). An introduction to educational design research. Netherlands Institute for Curriculum Development. https://ris.utwente.nl/ws/portalfiles/portal/14472302/Introduction_20to_20education_20design_20research.pdf

Purkayastha, S., Surapaneni, A. K., Maity, P., Rajapuri, A. S., & Gichoya, J. W. (2019). Critical components of formative assessment in Process-Oriented Guided Inquiry Learning for online labs. Electronic Journal of e-Learning, 17 (2), 79-92. https://eric.ed.gov/?id=EJ1220140

Richardson, J. T. (2005). Students' perceptions of academic quality and approaches to studying distance education. British Educational Research Journal, 31 (1), 7-27. https://doi.org/10.1080/0141192052000310001

Rolfe, V. (2016). Web strategies for the curation and discovery of Open Educational Resources. Open Praxis, 8 (4), 297–312. https://www.learntechlib.org/p/174270/

Rolfe, V., & Griffin. (2012). Using open technologies to support a healthy OER life cycle. Proceedings of Cambridge 2012: Innovation and Impact-Openly Collaborating to Enhance Education, 411–415. https://oro.open.ac.uk/33640/5/Conference_Proceedings_%20Cambridge_2012.pdf

rpk Group & SUNY. (2019). OER field guide for sustainability planning: Framework, information and resources. https://oer.suny.edu/wp-content/uploads/2021/09/rpkgroup_SUNY_OER-Field-Guide.pdf

Sait Kyzy, A., & Ismailova, R. (2022). Visibility of Moodle applications in Central Asia: analysis of SEO. Universal Access in the Information Society, 1-11. https://doi.org/10.1007/s10209-022-00923-6

Stearns, L. M., Morgan, J., Capraro, M., & Capraro, R. M. (2012). A teacher observation instrument for PBL classroom instruction. Journal of STEM Education: Innovations & Research, 13 (3), 7-16.

Thompson, S. D., & Muir, A. (2020). A case study investigation of academic library support for open educational resources in Scottish universities. Journal of Librarianship and Information Science, 52 (3), 685-693. https://doi.org/10.1177/0961000619871604

Tlili, A., Nascimbeni, F., Burgos, D., Zhang, X., Huang, R., & Chang, T.-W. (2020). The evolution of sustainability models for Open Educational Resources: Insights from the literature and experts. Interactive Learning Environments, 1–16. https://doi.org/10.1080/10494820.2020.1839507

van den Akker, J., Gravemeijer, K., McKenney, S., & Nieveen, N. (2006). Educational design research. Routledge. https://doi.org/10.4324/9780203088364

Wiley, D., Webb, A., Weston, S., & Tonks, D. (2017). A preliminary exploration of the relationships between student-created OER, sustainability, and students’ success. The International Review of Research in Open and Distributed Learning, 18 (4). https://doi.org/10.19173/irrodl.v18i4.3022

Wiley, D. A. (2000). Connecting learning objects to instructional design theory: A definition, a metaphor, and a taxonomy. The instructional use of learning objects, 2830 (435), 1-35.

Zaid, Y. A., & Alabi, A. O. (2020). Sustaining Open Educational Resources (OER) initiatives in Nigerian universities. Open Learning: The Journal of Open, Distance and e-Learning, 1–18. https://doi.org/10.1080/02680513.2020.1713738

Appendix A

Pre-assessment: Courses Completed prior to Taking OER-integrated Introduction to Petrology

Check the college courses that you have completed (either at JMU or elsewhere). (Please select All That Apply.)

| Courses taken prior to Intro to Petrology | Percentage (%) | Count (N) |
|---|---|---|
| Physical Geology | 13% | 17 |
| Stratigraphy | 6% | 8 |
| Earth Studies | 4% | 5 |
| Structural Geology | 6% | 7 |
| Mineralogy | 13% | 17 |
| Minerals and Rocks | 2% | 3 |
| Historical Geology | 9% | 11 |
| Petrology | 1% | 1 |
| Geochemistry | 2% | 2 |
| Hydrology | 3% | 4 |
| Ocean Systems | 2% | 2 |
| Environmental Systems/Cycles | 1% | 1 |
| Introduction to Environmental Studies | 2% | 2 |
| Earth System Studies | 2% | 2 |
| Ecology | 2% | 2 |
| General Chemistry with a lab | 13% | 16 |
| General Biology with a lab | 3% | 4 |
| General Physics with a lab | 6% | 8 |
| Calculus or Statistics | 12% | 15 |
| Total | 100% | 127 |
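
The percentages in this table appear to be each course's share of all 127 selections (not of respondents, since students could select several courses). As a minimal sketch under that assumption, the following Python snippet recomputes the percentage column from the counts; the variable names are illustrative and not part of the original study.

```python
# Counts transcribed from the Appendix A table (select-all-that-apply item).
counts = {
    "Physical Geology": 17,
    "Stratigraphy": 8,
    "Earth Studies": 5,
    "Structural Geology": 7,
    "Mineralogy": 17,
    "Minerals and Rocks": 3,
    "Historical Geology": 11,
    "Petrology": 1,
    "Geochemistry": 2,
    "Hydrology": 4,
    "Ocean Systems": 2,
    "Environmental Systems/Cycles": 1,
    "Introduction to Environmental Studies": 2,
    "Earth System Studies": 2,
    "Ecology": 2,
    "General Chemistry with a lab": 16,
    "General Biology with a lab": 4,
    "General Physics with a lab": 8,
    "Calculus or Statistics": 15,
}

# Assumption: the denominator is the total number of selections.
total = sum(counts.values())

# Share of all selections, rounded to a whole percent as in the table.
percentages = {course: round(100 * n / total) for course, n in counts.items()}

for course, pct in percentages.items():
    print(f"{course}: {pct}% ({counts[course]})")
print(f"Total selections: {total}")
```

Because each row is rounded independently, the rounded percentages need not add up to exactly 100, even though the Total row reports 100% of the 127 selections.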

Appendix B

Difference of Mean of Student Perceived Laboratory-based Learning Experiences in Pre- and Post-assessment Survey Results

| Survey item | Pre | Post | Gained difference (Post - Pre) |
|---|---|---|---|
| to learn laboratory skills that will be useful in my life. | 4.35 | 4.53 | 0.18 |
| to develop confidence in the laboratory. | 4.59 | 4.73 | 0.14 |
| to be certain about the purpose and the procedures. | 4.35 | 4.43 | 0.08 |
| to interpret my data beyond only doing calculations. | 4.25 | 4.33 | 0.08 |
| to make mistakes and try again. | 4.50 | 4.73 | 0.23 |
| to focus on procedures, not concepts. | 3.44 | 3.73 | 0.29 |
| to use my observations to understand the physical or chemical properties of my sample. | 4.44 | 4.67 | 0.23 |
| to use my observations to understand the broader geological or chemical questions addressed by the data. | 4.56 | 4.67 | 0.11 |
| to be confident when using equipment. | 4.38 | 4.67 | 0.29 |
| to learn problem solving skills. | 4.56 | 4.60 | 0.04 |
| discuss elements of my investigation with classmates or instructors. | 3.25 | 3.60 | 0.35 |
| reflect on what I was learning. | 3.38 | 3.87 | 0.49 |
| help other students collect or analyze data. | 2.75 | 2.87 | 0.12 |
| share the problems I encountered during my investigation and seek input on how to address them. | 3.25 | 3.53 | 0.28 |
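
The gained difference in this table is the post-assessment mean minus the pre-assessment mean for each item. The sketch below, in Python, recomputes that column from the reported means and flags any row where the recomputed value disagrees with the reported one. The item labels are abbreviated for this illustration and the variable names are hypothetical, not taken from the study.

```python
# (abbreviated item label, pre mean, post mean, reported gain) from Appendix B.
rows = [
    ("learn useful laboratory skills", 4.35, 4.53, 0.18),
    ("develop confidence in the laboratory", 4.59, 4.73, 0.14),
    ("be certain about purpose and procedures", 4.35, 4.43, 0.08),
    ("interpret data beyond calculations", 4.25, 4.33, 0.08),
    ("make mistakes and try again", 4.50, 4.73, 0.23),
    ("focus on procedures, not concepts", 3.44, 3.73, 0.29),
    ("understand sample properties", 4.44, 4.67, 0.23),
    ("understand broader questions", 4.56, 4.67, 0.11),
    ("be confident when using equipment", 4.38, 4.67, 0.29),
    ("learn problem solving skills", 4.56, 4.60, 0.04),
    ("discuss investigation with others", 3.25, 3.60, 0.35),
    ("reflect on learning", 3.38, 3.87, 0.49),
    ("help other students with data", 2.75, 2.87, 0.12),
    ("share problems and seek input", 3.25, 3.53, 0.28),
]

# Recompute each gain as post minus pre, rounded to two decimals.
gains = {label: round(post - pre, 2) for label, pre, post, _ in rows}

# Rows whose recomputed gain differs from the reported value.
mismatches = [
    label
    for label, pre, post, reported in rows
    if abs(round(post - pre, 2) - reported) > 1e-9
]

# Item with the largest pre-to-post gain.
largest = max(gains, key=gains.get)

print("Mismatched rows:", mismatches)
print("Largest gain:", largest, gains[largest])
```

These are differences of means only; the sketch does not test whether any gain is statistically significant.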