During the 2021/2022 school year, the Course Production Team (CPT) at the University of North Carolina at Charlotte adopted the interactive-constructive-active-passive (ICAP) framework to guide its online course development processes. Using this framework, the CPT analyzed courses for places where passive learning, such as watching videos and reading, could be raised to a higher level of engagement. One way we did this was by creating interactive presentations in Articulate Rise 360. Our goal with these interactive presentations is to add a layer of kinesthetic movement for students, along with small applications of the content through knowledge checks and checkpoints. In this article, we describe the steps taken to adopt the ICAP framework and how we transformed faculty-led lectures into more engaging (active) interactive lectures. We also present three case studies where this approach has been implemented.

At the University of North Carolina at Charlotte (UNC Charlotte), online course development takes place in a centralized department within the School of Professional Studies (SPS). Faculty work directly with the Course Production Team (CPT), which consists of a Director, five instructional designers (IDs), an online media designer (OMD), and three online multimedia specialists. Three development cycles, or cohorts, run each academic year. They are aligned with the fall, spring, and summer semesters, and each lasts 12-16 weeks. In each cohort, the CPT collaboratively produces 20-25 online courses with faculty members, for a total of around 60 online courses a year. Each ID works directly on four to five courses, while the OMD and online multimedia specialists support all courses as needed.

During the semester-long process, IDs collaborate with faculty to design and develop an online course. There are five phases in the course development process: onboarding, planning, development, polishing, and deployment. This article will focus specifically on the collaborative media integration that occurs during the planning and development phases. See Figure 1 for a sample cohort schedule.

Figure 1

Sample Course Development Overview

Image showing a sample course production timeline. The timeline includes onboarding (2 weeks), planning phase (6 weeks), development phase (4 weeks), polishing phase (3 weeks), and an end of cohort celebration.

Once the planning (or thinking) phase begins, faculty complete a course planning map that helps them identify course-level objectives, module-level objectives, module topics, and the assessments that will determine whether students are truly meeting the course objectives. During this time, the IDs review the course planning map to ensure alignment and clarity and to apply best practices for online teaching and learning. Behind the scenes, the ID works with the OMD to review past content and identify opportunities for media that will enhance the learning experience and increase student engagement.

Lecture videos were frequently the only type of media delivered in our online courses. These included faculty recordings of themselves, links to YouTube, course introductions, and demonstrations. In past development cohorts, faculty would work with an ID to identify areas where further explanation was needed or where lecture typically took place in the face-to-face classroom. Once these areas were identified, faculty would write scripts based on previously created PowerPoint presentations and record them in a studio using a teleprompter. Because the same content and PowerPoint presentations were carried over from face-to-face to online, recorded videos were often over 20 minutes long.

After hiring our first OMD in Spring 2021, we revisited how we defined media in online courses. We hoped to increase engagement and to provide multiple means of engagement and representation (Center for Applied Special Technology [CAST], 2018). With this goal, we began researching best practices for media in online courses.

Literature Review

Guo et al. (2014) studied student engagement with videos embedded in four edX Massive Open Online Courses (MOOCs). Each video in these courses was accompanied by a practice problem set that students could choose to complete after watching. Data collected included the length of time each student spent on a specific video and whether the practice problem sets were attempted. The findings suggested that shorter videos, about six minutes long, were more engaging. The authors hypothesized that this was due to the planning and scaffolding required to explain a concept succinctly in six minutes (Guo et al., 2014, p. 45). The findings also suggested that tutorial-style videos were more engaging than PowerPoint lectures and screencasts. Students often re-watched the parts of a tutorial that covered concepts they may have struggled to understand. Guo et al. (2014) hypothesized that this was because tutorials presented content at the student’s level, rather than students being “talked at” in a lecture.

Mcgowan and Hanna (2015) studied the relationship between video lecture capture and student engagement in a computer programming course. Building on Guo et al.’s (2014) finding that shorter videos are more engaging, Mcgowan and Hanna (2015) presented the lecture capture videos as short “key moments” rather than as the entire lecture. The study’s conclusions echoed Guo et al.’s (2014) findings: shorter videos of around five minutes had more student engagement than longer lecture videos. The study also found that students were more likely to engage with lecture videos at the beginning of the semester and when videos were directly related to a specific assessment or assignment. Furthermore, students appreciated being able to rewatch parts of the lecture videos to “reinforce traditional learning experiences” (Mcgowan & Hanna, 2015, p. 7).

To extend this research to our own context, we conducted an internal study of how much of each video students actually watched relative to its length. Data were collected from an asynchronous, process-based introductory calculus course with videos ranging from about 12 to just over 20 minutes long. The videos analyzed had been produced during a design and development cohort, as described in the introduction. Only unique viewings of a video were counted, and each viewer’s total completion of a video was used to determine their completion percentage. Our results indicated that, on average, students watched slightly more than half of each video. This finding is consistent with Guo et al.’s (2014) and Mcgowan and Hanna’s (2015) findings that videos longer than five to six minutes receive less student engagement. As shown in Table 1, every video in our analysis was longer than 12 minutes, and average completion ranged from about 48% to 63%.
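The completion measure described above can be sketched in code. This is a hypothetical reconstruction, not the analytics tool used in the study. It assumes a log of watched segments stored as (viewer_id, start_second, end_second) records. It merges each viewer's segments so that re-watched portions count only once, and then it averages the per-viewer completion percentages. The record layout and the merging rule are both assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# HYPOTHETICAL log record: (viewer_id, start_second, end_second) for one watched segment.
Segment = Tuple[str, float, float]


def covered_seconds(intervals: List[Tuple[float, float]]) -> float:
    """Seconds covered by the union of the intervals, so re-watched parts count once."""
    total = 0.0
    merged_end = float("-inf")
    for start, end in sorted(intervals):
        if start > merged_end:
            # Gap before this segment: count all of it.
            total += end - start
            merged_end = end
        elif end > merged_end:
            # Overlaps what is already counted: add only the new tail.
            total += end - merged_end
            merged_end = end
    return total


def average_completion(segments: Iterable[Segment], video_length: float) -> Tuple[int, float]:
    """Return (unique viewers, mean percent of the video watched per viewer)."""
    by_viewer: Dict[str, List[Tuple[float, float]]] = defaultdict(list)
    for viewer, start, end in segments:
        # Clamp to the video's bounds and ignore empty segments.
        start, end = max(0.0, start), min(video_length, end)
        if end > start:
            by_viewer[viewer].append((start, end))
    if not by_viewer:
        return 0, 0.0
    percents = [100 * covered_seconds(iv) / video_length for iv in by_viewer.values()]
    return len(percents), sum(percents) / len(percents)


if __name__ == "__main__":
    # Invented example for a 12:42 (762-second) video.
    logs = [
        ("viewer-a", 0, 300), ("viewer-a", 200, 400),  # re-watch of 200-300 counts once
        ("viewer-b", 0, 381),                           # stops halfway
    ]
    print(average_completion(logs, 762))
```

Merging intervals, rather than summing raw watch time, keeps a student who replays one section from appearing to have watched more of the video than they did.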

Table 1

Video Completion Percentages Relative to Length of Video

| Video Length (hh:mm:ss) | Unique Viewers (n) | Average Total Watched (%) |
|---|---|---|
| 00:12:42 | 1186 | 58.07 |
| 00:17:13 | 1252 | 63.43 |
| 00:19:47 | 1352 | 48.40 |
| 00:20:37 | 1162 | 55.89 |
| 00:20:13 | 1220 | 58.03 |
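The short calculation below checks the “slightly more than half” summary by averaging the rows of Table 1 in two ways. The first is a simple mean of the five videos. The second is a mean weighted by each video's unique viewers. The weighting assumes that each row's percentage is an average over that video's unique viewers, as described above.

```python
# Rows of Table 1: (video length, unique viewers, average % of the video watched).
TABLE_1 = [
    ("00:12:42", 1186, 58.07),
    ("00:17:13", 1252, 63.43),
    ("00:19:47", 1352, 48.40),
    ("00:20:37", 1162, 55.89),
    ("00:20:13", 1220, 58.03),
]


def summarize(rows):
    """Return (total unique viewings, simple mean %, viewer-weighted mean %)."""
    total = sum(n for _, n, _ in rows)
    simple_mean = sum(pct for _, _, pct in rows) / len(rows)
    # Weighting by n assumes each row's percentage averages over that video's unique viewers.
    weighted_mean = sum(n * pct for _, n, pct in rows) / total
    return total, simple_mean, weighted_mean


if __name__ == "__main__":
    total, simple_mean, weighted_mean = summarize(TABLE_1)
    print(f"{total} viewings, simple mean {simple_mean:.2f}%, weighted mean {weighted_mean:.2f}%")
```

Both means come to roughly 57% across the 6,172 unique viewings of the five videos, which is in line with the summary in the text.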

We took this research and data as evidence of limited student engagement with long videos, and we began experimenting with alternative media for conveying information. In doing so, we decided to expand our use of Articulate Storyline and Rise, which we had already adopted. These tools allowed us to create engaging, interactive learning experiences for students beyond watching a lecture video. In this article, we present the literature driving these changes, our process for creating more engaging media along with specific examples, and our lessons learned and next steps.

Driving Theory

One strategy for increasing student engagement is active learning. Active learning has long been a major component of teaching and learning at UNC Charlotte. On campus, many programs support and encourage active learning in face-to-face classes, including the Active Learning Academy and active learning classrooms. As Bonwell and Eison (1991) discuss, learning must include active tasks such as taking notes, problem-solving, and/or discussion for knowledge retention to occur. Incorporating active learning requires students to think about what they are doing as they learn (Bonwell & Eison, 1991). To support our goal of increasing student engagement, the Course Production Team adopted the Interactive-Constructive-Active-Passive (ICAP) framework.

The ICAP framework uses students’ own levels of engagement to define and guide the engagement opportunities students have while learning course content (Chi, 2009; Chi et al., 2018; Chi & Wylie, 2014). ICAP is divided into four modes of engagement. These modes build on each other to increase learning and to promote a deeper understanding of the content through higher-level thinking (Chi, 2009; Chi et al., 2018; Chi & Wylie, 2014). From lowest to highest, the modes are passive, active, constructive, and interactive.

Passive engagement is the lowest level of engagement with instructional materials. Examples include reading content, listening to podcasts, watching a lecture, or reading a website (Chi & Wylie, 2014). In this mode, prior knowledge is often not activated, which leads to poor knowledge retention and a greater reliance on memorization (Chi et al., 2018).

Active engagement encourages students to interact with the instructional materials through guided note-taking, reflection, and making observations (Chi, 2009; Chi et al., 2018; Chi & Wylie, 2014). Prior knowledge is often activated in this mode. This allows for better storage and retrieval of knowledge, because the new information is associated with a student’s previous experience or knowledge (Chi et al., 2018).

The next level is constructive. Here, students take the prior knowledge activated at the active level and apply it to the new content (Chi et al., 2018). At the constructive level, students often create a product that shows how the knowledge has been integrated into their own experiences (Chi et al., 2018). Examples include jigsaw activities in which students explain new concepts to others, creating diagrams and concept maps to show connections, and reflecting on their learning in light of their prior experiences (Chi, 2009; Chi et al., 2018; Chi & Wylie, 2014).

Finally, the highest mode of engagement is interactive. At this level, students interact with peers in ways that leave each of them with new perspectives and a deeper understanding of the content (Chi & Wylie, 2014; Delahunty et al., 2014). Students have activated their prior knowledge and assimilated the new knowledge. They can now make inferences based on their peers’ understanding of the material (Chi et al., 2018).

For the purposes of this exploration, we focused on moving passive video engagement to the active level. We did this by creating interactive presentations that students could engage with inside their courses.

Applying Theory to Practice

In applying the ICAP framework to practice, our goal was to move engagement in our online courses from the passive level to the active level. Mcgowan and Hanna (2015) found that students valued video as a way to reinforce traditional learning experiences. In a similar spirit, we aimed to supplement traditional learning materials, such as readings and videos, with interactive media that students engage with at an active level. We expected that doing so would increase student engagement and make students more likely to complete instructional media in full.

Media Identification Process

As described in the introduction, online course development at UNC Charlotte begins with the instructional designer and the faculty member collaboratively planning the entire course. One of the ID’s roles during this time is to analyze the current instructional materials and identify items that can be transformed into a different type of student engagement. For example, if readings are presented as instructional materials, the ID will suggest adding guided note-taking, self-reflection, and/or sharing new findings with peers. For lecture videos that run longer than 20 minutes or cover many topics, the ID will suggest working with the online multimedia specialists to break them into smaller, topical chunks that students can revisit at their leisure. If an interactive lecture suits the way the content is presented, the OMD will create an interactive presentation. In it, students interact with the content through built-in knowledge checks, checklists, and deliberate use of interactive blocks. These small adjustments to instructional materials move a passive activity to a more active one, as outlined by the ICAP framework.
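The triage rule above can be sketched as a small helper. Under that rule, a video is flagged if it is longer than 20 minutes or covers many topics, and flagged videos are split at topic boundaries. This is an illustrative sketch, not a tool the team describes using. Several of its inputs are assumptions:

- The topic start times would come from the faculty script or outline.
- `MAX_TOPICS` is a hypothetical cutoff for “many topics.”
- The six-minute chunk target is borrowed from Guo et al. (2014).

```python
from typing import List, Tuple

LONG_VIDEO_SECONDS = 20 * 60   # from the text: lectures over 20 minutes are candidates
MAX_TOPICS = 3                 # HYPOTHETICAL cutoff for "many topics"
TARGET_CHUNK_SECONDS = 6 * 60  # ASSUMED target, echoing Guo et al. (2014)


def needs_chunking(length_s: int, topic_count: int) -> bool:
    """Flag a lecture video for chunking under the rule described in the text."""
    return length_s > LONG_VIDEO_SECONDS or topic_count > MAX_TOPICS


def plan_chunks(length_s: int, topics: List[Tuple[int, str]]) -> List[Tuple[str, int, int, bool]]:
    """Split a video at topic start times (assumes the first topic starts at 0).

    Returns (topic, start, end, over_target) for each chunk; over_target marks
    chunks that may still need further splitting.
    """
    starts = sorted(topics)
    chunks = []
    for i, (start, title) in enumerate(starts):
        # Each chunk runs until the next topic starts, or to the end of the video.
        end = starts[i + 1][0] if i + 1 < len(starts) else length_s
        chunks.append((title, start, end, end - start > TARGET_CHUNK_SECONDS))
    return chunks


if __name__ == "__main__":
    # Invented 25-minute lecture with three topic markers (seconds, title).
    markers = [(0, "Intro"), (240, "Rubric"), (900, "Scoring")]
    if needs_chunking(1500, len(markers)):
        for chunk in plan_chunks(1500, markers):
            print(chunk)
```

Chunks flagged as over the target are the ones an ID might discuss with faculty for further splitting.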

Process of Creating Interactive Presentations from Faculty-Led Lectures

After the instructional designer and the faculty member identify specific points in the course where faculty-led lectures can be transformed into interactive presentations, the media is sent to the OMD for development. This process begins with an assessment of the existing materials, including lecture videos, presentation files, PDF files, and other documents. During this assessment, the materials are “chunked” into digestible pieces of information, and a prototype is drafted. The prototype is then presented to the faculty member for approval. Once it is approved, the OMD builds the interactive presentation in Articulate Rise 360.

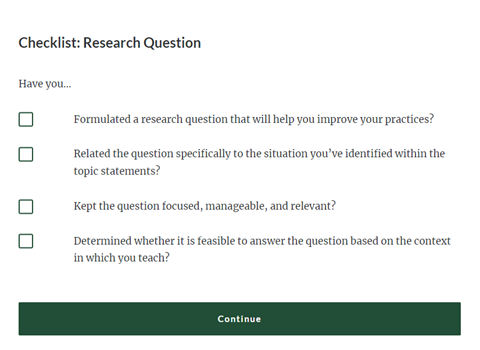

There are two important considerations when converting a video into an interactive presentation: (1) allowing students to interact with the content at their own pace and (2) engaging students at an active level. To support self-pacing, each section includes an estimate of how long it will take to complete. This lets students anticipate their time commitment and work through each section when it suits them. Additionally, a “continue” button is placed after each section. The button deliberately appears after a theme or concept has been completed, and it can be seen as an achievement or milestone in the instructional materials. Students can self-pace through the entire module. Even so, these built-in pacing guides serve as breaks in the content, so students can plan their learning and return to the content as needed (Mcgowan & Hanna, 2015). See Figure 2.

Figure 2

Continue Button Example Built into Articulate Rise

Image of a checklist in Articulate Rise. Students select the responses they have completed and then select the green continue button to move on.

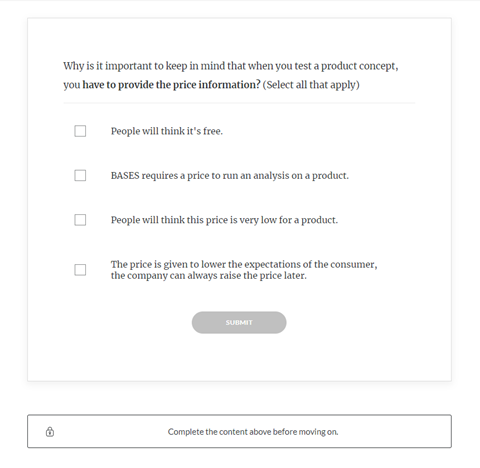
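The article does not say how the section time estimates mentioned above are produced. One hypothetical way to draft them is sketched below. The estimate adds three parts: reading time from a word count, the running time of any embedded media, and a flat allowance per interaction. The reading rate of 200 words per minute and the 30-second allowance are assumptions to be tuned, not values from our courses.

```python
import math
from typing import Iterable

WORDS_PER_MINUTE = 200  # ASSUMED reading rate; adjust for audience and content difficulty


def estimate_minutes(text: str, media_seconds: Iterable[float] = (), interactions: int = 0,
                     seconds_per_interaction: float = 30.0) -> int:
    """Rough section time: reading + embedded media + a flat allowance per interaction.

    All rates are illustrative assumptions, not values used by the course team.
    """
    reading = len(text.split()) / WORDS_PER_MINUTE
    media = sum(media_seconds) / 60
    activity = interactions * seconds_per_interaction / 60
    # Round up so the displayed estimate is never shorter than the raw sum, minimum 1 minute.
    return max(1, math.ceil(reading + media + activity))


if __name__ == "__main__":
    # Invented section: 600 words, two short audio clips, three knowledge-check items.
    print(estimate_minutes("word " * 600, media_seconds=[95, 140], interactions=3))
```

Rounding up keeps the estimate conservative, which suits its purpose of helping students plan.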

The interactive presentations also include simple knowledge checks that align with other assignments and help prepare students for larger assessments. A knowledge check lets students pause and reflect on the information they have just consumed, or consult the relevant material nearby to complete it. Knowledge checks can also serve as a study aid that students review before a major assessment or assignment (Mcgowan & Hanna, 2015). See Figure 3.

Figure 3

Knowledge Check Example Built into Articulate Rise

Image of a knowledge check example in Articulate Rise. Students select the correct answers and select Submit. The Continue button remains locked until students submit the correct answer.

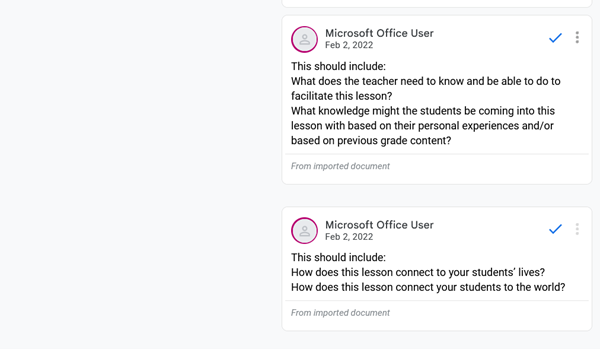

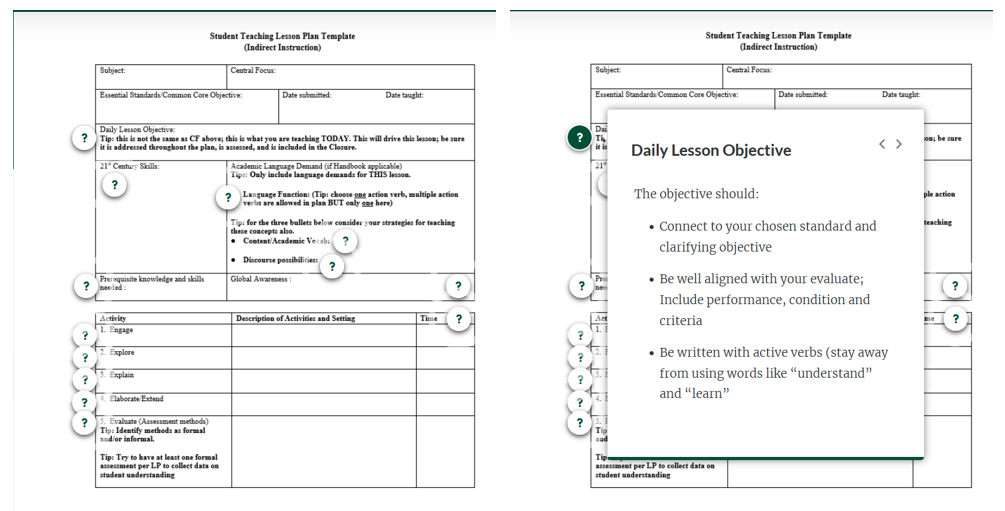

We also make deliberate use of student-to-content interactions to increase engagement while learning. Traditionally, definitions of words or phrases are presented in a Word document or on a single slide in a presentation, which is a passive level of engagement. To raise engagement with these types of instructional materials, we can use hotspots, drop-down boxes, accordions, interactive images, and flip cards. Figures 4 and 5 show a before (passive) and after (active) transformation. In this example, a lesson plan template began as a static document and was transformed into interactive content using Articulate Rise 360’s hotspots. Students could engage with the material in a new way and refer back to it as needed later in the course.

Figure 4

Lesson Plan Template Example at the Passive Level

Image of the original lesson plan at the passive level. The lesson plan was in a Google document with highlights and comments indicating what students were to complete. The comments appeared below the document and did not line up with the text they referred to.

Figure 5

Lesson Plan Template Example at the Active Level

Image of the lesson plan with hotspots. Students select a hotspot to view the instructions for that section. They can then close it and view another.

Case Studies

Course #1

Course #1 was a graduate-level course in an elementary teaching program on the assessment, design, and implementation of classroom reading instruction. The course required students to administer four specific assessments to elementary students in grades 1-6. Before working with our team, the instructors had created a video for each assessment with step-by-step instructions. Each video guided students through the rationale for the assessment and how to administer it. The videos ranged in length from 00:09:35 to 00:25:16. Because these were how-to videos, students often had to follow exact directions. Students were encouraged to listen to the directions, pause the video, complete the task, and then resume the video for the next step. If a step was missed, the student could rewind the video and revisit the information. These details were extremely important in transforming the how-to lecture videos into interactive presentations.

In the original setup, the graduate students in the online course would watch the videos and download a copy of the actual assessment to use with their own students. To align the instructions with the specific parts of each assessment, the interactive presentation incorporated images of the assessments. These images were made interactive: students could select parts of a form or equation to see a breakdown of what was expected in that area. This guided students from one section to the next at their own pace, without rewinding a video. References to the exact materials being used were provided beneath each image for further reference. In a video, such references would have to be placed in a comment or might be impossible to link at all.

With the interactive presentation, students could move through each step at their own pace without needing to pause, and they could easily review and revisit steps. The integrated knowledge checks also helped keep students engaged and confirmed that they understood the process explained. Because each video was structured step by step, chunking was also appropriate. The videos were shortened into pieces that each covered the specific step being taught. In this case, the original videos were still integrated into the interactive presentation. Each video was broken into segments, and each segment was placed at the end of its step for optional review.

Course #2

Course #2 was a graduate-level economics course on financial management for students pursuing a Master of Business Administration (MBA). The course was originally designed to be taught face-to-face and was transitioned to emergency remote learning during the 2020-2021 school year. During this transition, nine lecture-style videos with presentation visuals were created. The videos ranged in length from 00:29:30 to 01:41:17, and most were over an hour long. The videos were designed for passive engagement. They offered little to no learner interaction and few opportunities for students to pause and reflect on the content being presented.

Given the process-based course content, we created interactive presentations that showed students the specific steps for completing a problem. Each presentation then offered practice problems in which students could apply the content to real-world scenarios. Because of the videos’ length, and because they had originally been developed for emergency remote teaching, we opted to remove them completely. Instead, we used quotes and audio clips from the instructor as reinforcement at certain points, to ensure that students still had a connection to their instructor.

Course #3

Course #3 was a graduate-level economics of business decisions course for students pursuing an MBA. The overall course was built around critical thinking and decision-making in unique situations that applied some process-based concepts. It was originally designed to be taught in a hybrid modality, meaning half of the course took place in the classroom and the other half online. The original course included eight screen-recorded videos. In each, answers were typed into a Word document so students could check their work, and the rationale behind the answers was explained. The videos ranged in length from 00:14:51 to 00:27:33.

Since video length was less of a concern here than in Course #2, we focused on changing the presentation format, which was a screen recording of typing in a document with voice-over. Images and diagrams were redrawn as clean graphs. Scaffolded instructions encouraged students to determine the answers themselves rather than reading them from a Word document. To break up the images and graphs, instructor audio clips were deliberately integrated. They reinforced concepts or conveyed information that was difficult or too lengthy to present as text.

Lessons Learned and Next Steps

As we work toward transforming passive instructional media into active media, we continue to grow our knowledge base and improve our processes. Our familiarity with the ICAP framework has increased, so we can readily assess previously created instructional media for opportunities to move to active engagement. We also have many prototypes to showcase, which lets us explain our ideas to faculty with specific visuals and examples. On the interactive media build side, we have improved the ways in which we present instructional media. For example, the “continue” button was initially used only to lead from one module to another. It served no purpose other than skipping ahead, as one might in a video. However, we learned that placing these buttons strategically, after long stretches of text or dense information, helps chunk content. These built-in breaks allow students to plan their learning and give them specific points to return to for review before an assessment. Furthermore, we can use the continue button as a lock (see Figure 3). The lock requires students to complete a knowledge check confirming their understanding of the previous material before moving on.
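The locking behavior described above can be modeled as a small state machine. The sketch below is a hypothetical model written for illustration, not how Articulate Rise implements the feature. In this model, a section with a knowledge check keeps its Continue button locked until the student submits exactly the set of correct options. Sections without a check never lock. The class names and section titles are invented for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class KnowledgeCheck:
    prompt: str
    correct: Set[str]  # every one of these options must be selected, and nothing else

    def is_correct(self, selected: Set[str]) -> bool:
        return selected == self.correct


@dataclass
class Section:
    title: str
    check: Optional[KnowledgeCheck] = None  # sections without a check never lock


@dataclass
class Lesson:
    sections: List[Section]
    current: int = 0
    passed: Set[int] = field(default_factory=set)

    def submit(self, selected: Set[str]) -> bool:
        """Grade the current section's check; a correct answer unlocks Continue."""
        check = self.sections[self.current].check
        if check is None:
            return True
        ok = check.is_correct(selected)
        if ok:
            self.passed.add(self.current)
        return ok

    def continue_unlocked(self) -> bool:
        return self.sections[self.current].check is None or self.current in self.passed

    def press_continue(self) -> bool:
        """Advance one section if Continue is unlocked and a next section exists."""
        if self.continue_unlocked() and self.current < len(self.sections) - 1:
            self.current += 1
            return True
        return False


if __name__ == "__main__":
    lesson = Lesson([
        Section("Reading the assessment form"),
        Section("Scoring", KnowledgeCheck("Which fields are required?", {"A", "C"})),
        Section("Next steps"),
    ])
    lesson.press_continue()    # first section has no check, so this advances
    lesson.press_continue()    # locked until the check is answered correctly
    lesson.submit({"A"})       # incorrect: still locked
    lesson.submit({"A", "C"})  # correct: unlocked
    lesson.press_continue()    # advances to "Next steps"
    print(lesson.sections[lesson.current].title)
```

Keeping the pass/fail state per section means a student who returns to review earlier material is not forced to repeat checks they have already passed.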

Our overall goal in moving instructional materials from passive to active was to increase student engagement with instructional media, which we hope will have a positive impact on student success and retention. This is a new initiative, and rollouts of these interactive presentations began in Summer 2022, so we do not yet have data to determine whether our goal has been met. However, anecdotal feedback from faculty has been positive. One faculty member stated that the “exercise worked pretty well. It motivated students to explain their choices and engage in discussion with each other.” To collect direct student feedback, we have added a Likert-scale survey at the end of each interactive presentation to measure students’ level of engagement. Initial findings have also been positive. Moving forward, we plan to continue creating interactive presentations, collect student survey data, and report our findings.

References

Bonwell, C. C., & Eison, J. A. (1991). Active learning: Creating excitement in the classroom. School of Education and Human Development, George Washington University.

Center for Applied Special Technology. (2018). Universal Design for Learning guidelines version 2.2. Retrieved from https://udlguidelines.cast.org

Chi, M. T. H. (2009). Active-constructive-interactive: A conceptual framework for differentiating learning activities. Topics in Cognitive Science, 1(1), 73–105. https://doi.org/10.1111/j.1756-8765.2008.01005.x

Chi, M. T. H., Adams, J., Bogusch, E. B., Bruchok, C., Kang, S., Lancaster, M., Levy, R., Li, N., McEldoon, K. L., Stump, G. S., Wylie, R., Xu, D., & Yaghmourian, D. L. (2018). Translating the ICAP theory of cognitive engagement into practice. Cognitive Science, 42(6), 1777–1832. https://doi.org/10.1111/cogs.12626

Chi, M. T. H., & Wylie, R. (2014). The ICAP framework: Linking cognitive engagement to active learning outcomes. Educational Psychologist, 49(4), 219–243. https://doi.org/10.1080/00461520.2014.965823

Delahunty, J., Jones, P., & Verenikina, I. (2014). Movers and shapers: Teaching in online environments. Linguistics and Education, 28, 54–78. https://doi.org/10.1016/j.linged.2014.08.004

Guo, P. J., Kim, J., & Rubin, R. (2014). How video production affects student engagement: An empirical study of MOOC videos. In Proceedings of the First ACM Conference on Learning @ Scale (L@S '14), 41–50. https://doi.org/10.1145/2556325.2566239

Mcgowan, A., & Hanna, P. (2015). How video lecture capture affects student engagement in a higher education computer programming course: A study of attendance, video viewing behaviours and student attitude. eChallenges, 1–8. https://doi.org/10.1109/eCHALLENGES.2015.7440966