In this book chapter, we provide guidelines and best practices to instructional designers working in higher education settings on how to use learning analytics to support and inform design decisions. We start by defining learning analytics and frame such a definition from a practitioner point of view. Then, we share best practices on how to use learning analytics to support and inform design decisions in designing courses in higher education. We share examples of learning analytics—through screenshots—gathered from our instructional design experience in higher education and comment on what implications such examples have on design decisions. We conclude by sharing a list of commonly available tools that support gathering learning analytics that instructional designers can put at their disposal throughout the design process, and in conducting needs analyses and formative/summative evaluations.

What is Learning Analytics?

Learning analytics has become a popular buzzword among educators. Unfortunately, like most buzzwords, it has slowly lost meaning as it has become more widespread. The Society for Learning Analytics Research (SoLAR) defines learning analytics as a field of research interested in understanding student learning based on their interactions with digital environments. While this definition is generally satisfactory for research purposes, it fails to capture the breadth of practices instructional designers, instructors, administrators, and other stakeholders in higher education engage in under the umbrella of learning analytics. The definitions used by researchers are also often overly broad. The definition given above by SoLAR implies that any time a student touches a computer and data is collected, learning analytics is happening. However, if we look at the body of practices actually taking place under the banner of learning analytics, we see that learning analytics has a much more focused goal. Learning analytics is the use of digital technology to capture learning as it happens (Siemens, 2013). Learning analytics captures students reading, clicking, and in other ways interacting with computers and uses this data to attempt to explain the act of learning. This differs from other related fields, such as educational data mining, which takes a more outcome-driven approach to analytics but is not (always) as interested in the exact way in which learning is happening.

The field of learning analytics is often perceived as an esoteric quantitative field, out of reach of anyone without an advanced degree in mathematics or computer science. However, one area of increasing interest to learning analytics researchers is the implementation of descriptive tools that allow teachers, students, and designers to easily reflect on the learning process and improve instruction (Bodily & Verbert, 2017; Phillips & Ozogul, 2020; Sergis & Sampson, 2017; Wise & Jung, 2019). While the amount of research being conducted in this area is extensive, there are three main categories of learning analytics tools that are of particular interest and utility to instructional designers:

1. Student-facing: Student-facing learning analytics simplify the complex data collected from learning management systems and other digital sources to create dashboards and other visualizations that allow students to reflect on the current state of their knowledge and set goals to improve their learning.

2. Teacher-facing: Teacher-facing tools tend to give a more complex and nuanced view of learning than student-facing tools. They often include class or course-level summaries of students’ time interacting with different digital resources, as well as the ability to explore the activities of individual learners and the patterns across learners.

3. Designer-facing: Designer-facing tools often are similar to or even synonymous with teacher-facing tools in their design. However, because of designers’ unique roles, these same tools are often leveraged in different ways. While a teacher may be interested in adjusting their day-to-day instructional strategy, a designer may be more interested in adjusting the overall structure of the course.

While some learning analytics tools may have different dashboarding features for students, teachers, and designers, in many cases these features are merged into a single tool, and it is the job of the instructional designer to customize the dashboards to meet the needs of the specific audience. As a whole, we refer to these three categories of learning analytics tools as instructional-focused learning analytics.

Best Practices for Instructional-focused Learning Analytics in Higher Ed

In this section, we discuss seven best practices (BPs) for using instructional-focused learning analytics:

- Be data-informed, not data-driven.

- Default to the simplest analysis that answers your question.

- Interpret results in context.

- Understand how your software is generating metrics.

- Focus on interpretation over prediction.

- Seek opportunities for professional development.

- Where possible, seek collaboration with researchers.

As a general guiding principle and a best practice, we first recommend that instructional designers use instructional-focused learning analytics as a design tool in a designerly way (Lachheb & Boling, 2018) to inform/support their design decisions and judgments (Boling et al., 2017; Gray et al., 2015; Lachheb & Boling, 2020; Nelson & Stolterman, 2014). This entails adopting a data-informed design approach and not a data-driven design approach. Data-driven design is an approach that usually implies fast cycles of user-testing and design iterations based on feedback/data received and, essentially, data and algorithms deciding what designers do. In designing for learning, a fully data-driven approach is not only impractical and raises some ethical questions, but also goes against the nature of instructional design practice—designing for learning and human performance demands a careful, slow, deliberate, and well-thought-out process, to design for learners, not customers. Designers must be the guarantors of design, not the data they have (Nelson & Stolterman, 2014). Data reflect a subjective worldview that must be accounted for when design judgments and decisions are made (Datassist Inc We All Count, 2021).

Second, when using data that belong to any category of instructional-focused learning analytics, we recommend that instructional designers always default to the simplest analytics necessary to solve the problem they are facing. Some of the most informative learning analytics are simply counts of the frequency of certain occurrences. For example, the number of times a page was accessed or viewed shows which content is getting the most attention from students. Designers who are more comfortable with complex analyses can engage with more complex learning analytics, but often these analyses do not reveal any additional information.
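To illustrate how simple such a frequency count can be, the following is a minimal sketch in Python. It assumes a page-view log has been exported as a list of (student, page) pairs; the log format, the student identifiers, and the page titles are hypothetical and are not taken from any particular LMS.

```python
from collections import Counter

# Hypothetical page-view log: one (student_id, page_title) pair per view.
# A real LMS export will have its own format and column names.
page_views = [
    ("s01", "Week 1 Overview"),
    ("s02", "Week 1 Overview"),
    ("s01", "Reading: Design Judgment"),
    ("s03", "Week 1 Overview"),
    ("s02", "Discussion: Case Study"),
    ("s03", "Discussion: Case Study"),
]


def count_views_per_page(views):
    """Return a Counter mapping each page title to its number of views."""
    return Counter(page for _student, page in views)


view_counts = count_views_per_page(page_views)

# List pages from most viewed to least viewed.
for page, count in view_counts.most_common():
    print(f"{page}: {count}")
```

A count like this answers the question "which content gets the most attention?" without any statistical modeling; explaining why still requires the contextual interpretation discussed next.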

Third, we recommend that instructional designers interpret numbers within the context of the course. For example, an LMS dashboard can show a student who consistently submits assignments late and keeps receiving a “B” grade on all of them. On the surface, these two numbers can suggest some kind of relationship, for example, that late assignments are of poor quality and therefore earn low scores. However, this relationship might not exist at all, because the course may have a late submission policy that deducts a letter grade from each late assignment, regardless of its quality. In this case, if the late submission policy were not in place, all of the student’s assignments would have scored an “A”.
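The late-policy example can be made concrete with a small sketch. It assumes a five-letter grade scale and a policy that deducts exactly one letter grade per late assignment; both the scale and the function name are illustrative assumptions, not features of any LMS.

```python
# Assumed letter-grade scale, ordered from lowest to highest.
GRADE_SCALE = ["F", "D", "C", "B", "A"]


def grade_before_late_penalty(recorded_grade, was_late, letters_deducted=1):
    """Estimate the grade an assignment earned on quality alone.

    The late penalty is assumed to be a fixed number of letter grades.
    The result is capped at the top of the scale.
    """
    if not was_late:
        return recorded_grade
    index = GRADE_SCALE.index(recorded_grade) + letters_deducted
    return GRADE_SCALE[min(index, len(GRADE_SCALE) - 1)]


# A late assignment recorded as "B" reflects "A"-quality work under this policy.
print(grade_before_late_penalty("B", was_late=True))   # A
print(grade_before_late_penalty("B", was_late=False))  # B
```

Seen this way, the recorded "B" says more about the course policy than about the quality of the student's work.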

Fourth, we recommend that instructional designers understand how the tools that generate instructional-focused learning analytics “crunch the numbers”. For example, many video platforms display the average “drop-off” percentage for each video, which indicates how much of a video a student watched before closing the page or pausing the video and never restarting it. A video platform can calculate this number in 25% increments (i.e., the average drop-off percentage is measured by viewers reaching playback quartiles). A 10-minute video lecture, for example, can include in its last quartile (i.e., its last 2.5 minutes) a recap/summary and/or the instructor talking about optional resources they have already shared with the students. Most likely, students who are used to this format of learning will not watch the last part of the video lecture because it is optional and they already got what they needed from the lecture, deeming the last 2.5 minutes not worth their time. Consequently, the highest average drop-off percentage that could be hoped for with this video lecture is 75%.
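The quartile-based calculation described above can be sketched as follows. This is one plausible way a platform might compute the metric, assuming each viewer is credited with the highest 25% mark they reached; actual platforms may differ, and the function names and watch times are hypothetical.

```python
def quartile_reached(seconds_watched, video_length_seconds):
    """Return the highest playback quartile (0, 25, 50, 75, or 100) reached."""
    quartiles_passed = (seconds_watched * 4) // video_length_seconds
    return min(quartiles_passed, 4) * 25


def average_drop_off(watch_times, video_length_seconds):
    """Average the quartile reached across all viewers."""
    reached = [quartile_reached(t, video_length_seconds) for t in watch_times]
    return sum(reached) / len(reached)


# A 10-minute (600-second) lecture. Every hypothetical viewer stops somewhere
# in the last quartile, after the main content but before the very end.
video_length = 600
watch_times = [450, 480, 520, 590]

print(average_drop_off(watch_times, video_length))  # 75.0
```

Under this assumed calculation, a viewer who stops at 9 minutes 50 seconds is recorded the same way as one who stops at 7 minutes 30 seconds, which is why knowing the increment size matters when reading the number.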

Fifth, we recommend that instructional designers focus on interpretation over prediction. Researchers are still searching for the “holy grail” of predictive analytics, with moderate success (e.g., Piech et al., 2015). Still, unless the designer has a strong understanding of statistical methods, this is probably not the most effective use of their time. For example, when finding out that the 10-minute video lecture mentioned in the previous example had an average drop-off rate of 75%, the focus should be on interpretation over prediction. An appropriate interpretation should be as follows:

For this video lecture, and given how the video platform calculates the average drop-off percentage, 75% is a good indicator that the students watched the first 7.5 minutes of the video lecture in full, but skipped the rest of it since it was optional and included repetitive content.

A prediction analysis that will not lead to a sound design decision, in this case, would be:

Students in this course do not watch lectures that are longer than 7.5 minutes; thus, future lectures should be no longer than 7.5 minutes, otherwise students will not watch them. Or, students who skipped the optional resources will fail their exam.

Sixth, we recommend that instructional designers seek out opportunities for professional development to increase their proficiency with analytics tools and methods. The barriers to learning analytical tools that existed in the past have begun to break down. Websites such as Udemy.com, Coursera.com, and Skillshare.com offer affordable courses on statistical analysis, machine learning, learning management systems, and many more topics. Courses are geared towards a variety of audiences, from those with no experience with analysis to those with years of experience looking to learn a highly specific skill. Resources specific to learning analytics are less common. Still, the Society for Learning Analytics Research’s yearly summer institute offers various workshops on both technical and pedagogical concerns in learning analytics.

Last, we recommend that instructional designers collaborate with researchers interested in or with expertise in learning analytics. Instructional designers working in higher education can take advantage of the available scholars and research centers across campuses to collaborate on learning analytics initiatives. This collaboration could be quid pro quo for the researcher and the instructional designer. Learning analytics researchers are constantly complaining they cannot get buy-in from practitioners (Herodotou et al., 2019) and designers can rely on the researchers’ expertise to empower their suite of design tools with instructional-focused learning analytics.

Examples in Practice

In this section, we share examples of learning analytics (through screenshots and explanations) gathered from our instructional design experience in higher education, and we provide commentary on what implications such examples have for design decisions. We indicate how these examples may inform teacher-, student-, and designer-facing dashboards, and throughout we reference the best practices outlined previously (e.g., “BP #2”).

Example 1: Canvas LMS Student Analytics

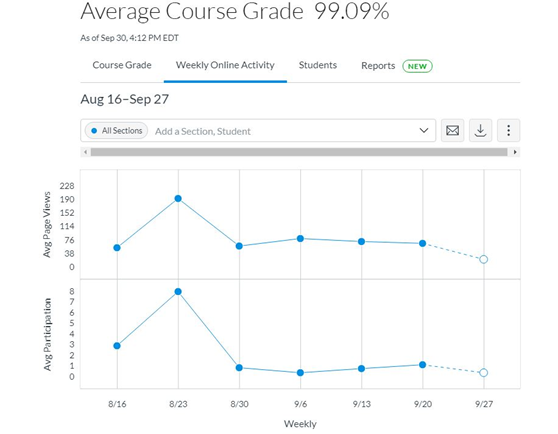

The learning analytics dashboard in Figure 1 shows students’ weekly online activity in the course, the average page views, average participation, and other analytics. This type of data provides an idea of how frequently students interacted with learning materials. It is also possible to identify certain patterns in terms of what type of content students frequently viewed and at what time, as well as what type of content was most viewed. This information can help instructional designers identify the most viewed type of content (e.g., a specific discussion topic), which could help instructors identify what topics lead to more engagement among students. A simple surface view of Figures 1 and 2 does not provide insight as to why students interacted more with a particular learning material or activity. Designers should consider BP #3 (interpret in context) when viewing this information. Week 1 may not be comparable to other weeks because it represents syllabus work. Use BP #5 (focus on interpretation) to consider the content of each week when interpreting data. If week 5 contains the first mid-term, we may expect an increase in activity.

Figure 1

A dashboard of Weekly Online Activity from a Canvas Dashboard


Figure 2

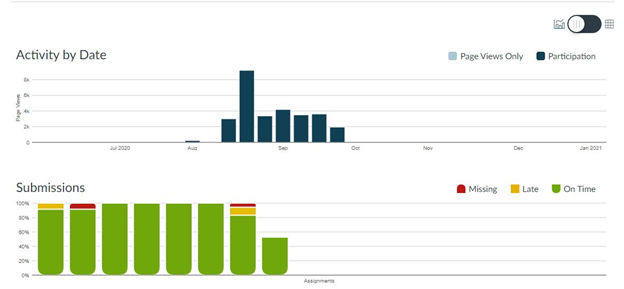

Aggregate Student Activity by Date


Knowing which week students were most active helps both instructional designers and instructors gain insight into the type of content that is more engaging and seek student feedback on why that content was engaging. Further, looking at this type of data provides instructional designers an opportunity to reflect on the variety of activities and content given to students. Instructional designers can review learning material, such as readings, learning activities, quizzes, or discussions, and identify potential factors contributing to student interest or lack of interest in a particular topic. For instance, readings could include various perspectives and experiences of diverse populations, meaning students were able to identify personally with the material. Additionally, learning activities that are based on real-life cases and clearly written allow students to easily understand what they are asked to do and to draw on lived or vicarious experiences when answering questions. It is noteworthy that a more complex analysis would, in this case, most likely not lead to greater interpretability. What is needed to augment page view data is a qualitative review of learning content (BP #2).

Tracking student activity, such as frequency of page views, duration of viewing certain pages, and inconsistency of student online activity, encourages instructional designers to think about potential difficulties students might experience accessing learning materials. These difficulties could be due to various reasons, such as insufficient internet bandwidth, slow internet speed, or outdated software. Implications for future design can include alternative ways of presenting learning material. For example, high-resolution images can cause webpages to take a long time to load. Removing such images and replacing them with lower-resolution images or links to external pages may improve accessibility (see Figure 3).

Figure 3

Examples of Different Approaches to Styling LMS Pages


Note. The page on the left would take more time to load because of the images used.

Example 2: Students’ Analytics in an Online Course (Canvas LMS)

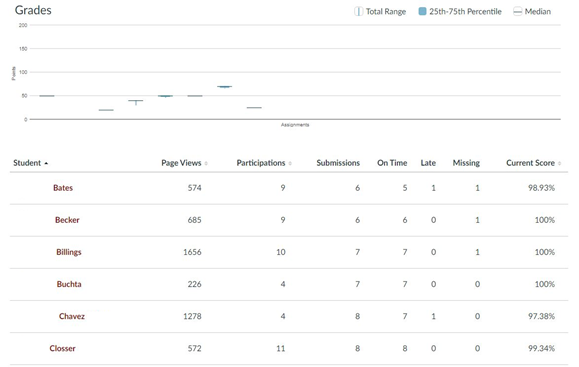

The dashboard in Figure 4 shows a detailed snapshot of students’ weekly online activity in the course, including page views, submission status (i.e., on time, late, missing), and current grade. It may be tempting to attempt to use this information to predict students’ future grades; indeed, there is a large body of research that attempts just that (see Piech et al., 2015). However, in line with both BP #6 (seek professional development opportunities) and BP #7 (collaborate with researchers), we suggest that designers refrain from such analysis unless they are comfortable with complex analysis or have the opportunity to collaborate with learning analytics researchers who are interested in predictive modeling. Instead, in line with BP #5 (focus on interpretation over prediction), we suggest that instructional designers focus on interpretation in context. This type of engagement data can be used to create a user experience map, that is, a map of a student’s end-to-end experience. Because Canvas displays metrics at many levels of granularity (see Figures 4 and 5), instructional designers can map a student’s journey at many levels: module-by-module, week-by-week, or even day-by-day. This type of experience mapping can lead to the identification of drop-out or high-difficulty points in the course. After these points are identified, context is important in determining why a certain activity presents a challenge for students: is the material inherently difficult, or is something poorly explained or designed?

Figure 4

Examples of Student Level Engagement


Figure 5

Assessment Summary (Canvas)


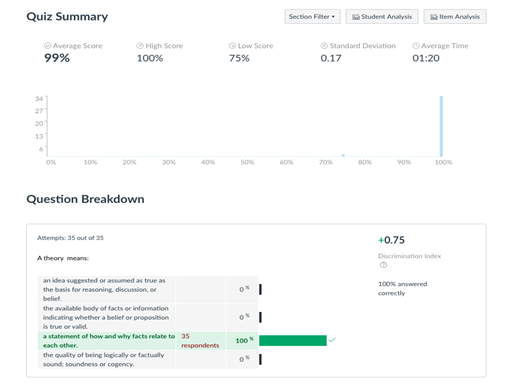

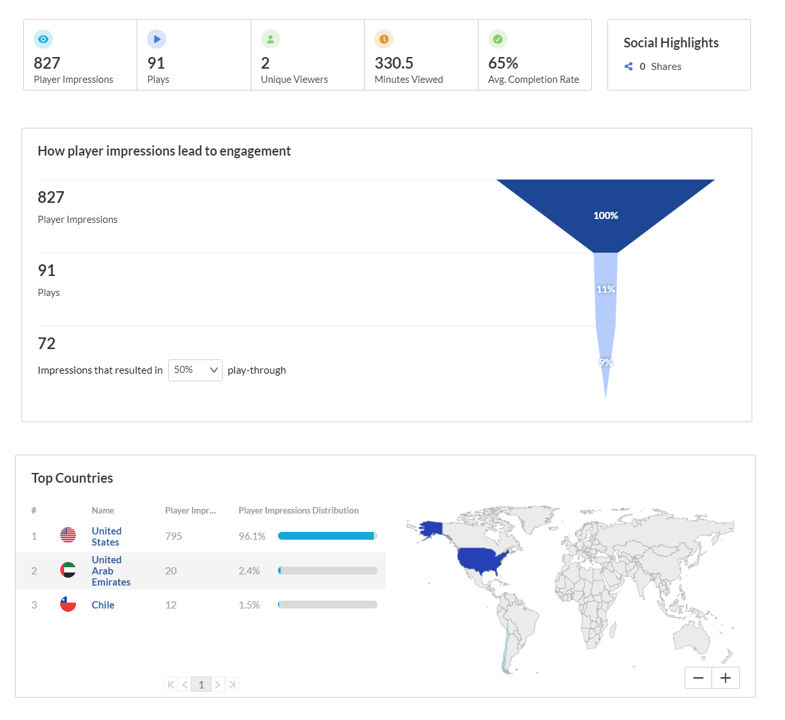

Example 3: Video Engagement in an Online Course (Kaltura Video Platform)

The dashboard in Figure 6 shows a detailed snapshot of students’ views, total minutes, completion rate, video engagement based on impressions, countries in which the video was viewed, and the device overview. This type of data helps both instructional designers and instructors identify what topics were most interesting for students based on the number of views. For instance, if only a few students viewed a given lecture or additional video material, instructional designers and instructors can further analyze the material to see what could be improved to increase engagement and interactivity (e.g., length of a video, narration style, use of more visual aids or examples). Video dashboards are an important example of how BP #4 (understand how software generates metrics) can be utilized. In Figure 6, we see that the “average completion rate” is 65%. However, different video software tabulates completions differently. Some software considers a video completed if it has been “mostly” finished, while other software requires it to run through until the last second. Depending on the content of a specific video and the way the software records completions, this may radically change the interpretation of this metric. If a video is a recording of a class, and the last minutes contain no content, then completion may be a meaningless metric. In this case, Kaltura reports both the number of plays and total minutes viewed, which allows us to manually calculate average minutes watched per view, which may be a more useful metric.
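The manual calculation mentioned above is a single division, sketched here in Python. The input values are hypothetical and are not read from Figure 6; the function and parameter names are likewise illustrative.

```python
def average_minutes_per_play(total_minutes_viewed, plays):
    """Return the average number of minutes watched per play."""
    if plays <= 0:
        raise ValueError("plays must be a positive number")
    return total_minutes_viewed / plays


# Hypothetical dashboard values for a 10-minute lecture video.
total_minutes_viewed = 1200.0
plays = 160
video_length_minutes = 10.0

avg_minutes = average_minutes_per_play(total_minutes_viewed, plays)
share_of_video_watched = avg_minutes / video_length_minutes

print(f"Average minutes per play: {avg_minutes:.1f}")          # 7.5
print(f"Share of video watched: {share_of_video_watched:.0%}")  # 75%
```

Because this figure is derived from raw plays and minutes, it does not depend on how the platform defines a "completion", which is what makes it easier to interpret in context.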

Figure 6

A dashboard of Video Engagement


Overall, it is important to consider that data only gives instructional designers a static snapshot of students’ activity and performance. It does not tell the full story and it is up to each instructional designer to interpret this data to determine how to make effective use of it to improve design practices and future learning experiences. Interpretation is an integral part of the design process that helps designers grasp the nuances of an existing design situation. Such interpretation is a subjective process during which instructional designers draw on their own unique previous experiences and call on their core judgments which include one’s values, perceptions, and perspectives (Nelson & Stolterman, 2014) as to what a good learning experience should be. While interpreting data for future designs, it is important to reflect on one’s beliefs and biases which would allow consideration of multiple points of view, perspectives, and experiences. Having an open and flexible mind can help instructional designers consider the unique characteristics of a learning context or learners to create inclusive learning environments.

Example 4: Student-Facing Dashboards for Mastery Learning

Student-facing dashboards serve a variety of purposes. More advanced dashboards can recommend learning resources, prompt discussion with other students, or even recommend other courses. However, most of these applications require extensive analytical and design customization while scalable versions of these tools still seem to be several years away. Many dashboards simply ask students to reflect on their learning experience (e.g., time on task, progress towards completion) and revise goals and plans for completing instruction. These types of dashboards are often coupled with simple instructional tools, such as worksheets, that prompt students to further reflect on their learning.

Unfortunately, there are still no widely scaled student-facing dashboards, even for reflection. Scholars are widely in consensus about what student-facing dashboards for reflection on learning should do and look like. Therefore, we believe that these types of dashboards will shortly be widely available. If instructional designers are adept developers or have access to developers, it may be feasible to design such dashboards. If not, we still feel it is important for instructional designers to be aware of this type of reflective learning analytics, which is positioned to be integrated into learning management systems in the near future.

Implications for the Instructional Designer(s)

Learning analytics is best used as a design tool that can support design decision-making and the subjective judgments made by instructional designers. The use of learning analytics as a design tool implies the following:

● Learning analytics are powerful in their descriptive nature but alone are not sufficient to predict or modify learning.

● Learning analytics provide designers the ability to support their design decisions and/or to defend their design judgments when challenged by other design stakeholders.

● Learning analytics do not show the whole picture and must be contextualized within the broader instructional context.

● Learning analytics will be gathered automatically by some tools, but often manual data collection is still necessary for some contexts.

● Accessing, analyzing, and/or gathering learning analytics requires thoughtful collaboration with other stakeholders at the university, mainly system administrators and data analysts within IT departments.

● Learning analytics by themselves say little; the interpretation of the designer is what makes findings interesting and actionable.

● Learning analytics should supplement, not dictate, design decisions—data alone is frequently biased based on the context in which it was collected.

Available Tools

Most widely used learning management systems (LMSs), such as Blackboard and Canvas, provide learning analytics as a default feature. Typically, learning analytics supported within learning management systems provide data on student activity, submissions, and grades. In addition, data provide summaries of weekly instructor-student, student-student, and student-content interactions and student performance over the semester within LMSs. Further, instructional designers and instructors can see counts for assignments and type of submissions (e.g., quizzes, assignments) as well as storage utilization and recent student-login information. In sum, such learning analytics provide a snapshot of how frequently students interact with course materials (e.g., course website page visits), student overall performance, and individual student performance.

Additionally, platforms that host media, such as Kaltura, YouTube, and library databases, provide learning analytics by default. Learning analytics available within those platforms provide data on the number of views, minutes viewed, and location.

● Tools that provide learning analytics by default: learning management systems (LMSs), platforms that host media (e.g., Kaltura, YouTube, library databases), grade books in each LMS, and analytics tools supported within LMSs.

● Tools that help to collect learning analytics: survey tools (e.g., Qualtrics, Google Forms) and web analytics tools (e.g., Google Analytics).

● Researchers. The Society for Learning Analytics Research has a large body of researchers and practitioners who are often open to collaboration. They hold international and local events, with a summer symposium focused on practice, and can be contacted at https://edtechbooks.org/-kxM.

References

Boling, E., Alangari, H., Hajdu, I. M., Guo, M., Gyabak, K., Khlaif, Z., ... & Bae, H. (2017). Core judgments of instructional designers in practice. Performance Improvement Quarterly, 30(3), 199-219. https://edtechbooks.org/-KAqE

Bodily, R., & Verbert, K. (2017, March). Trends and issues in student-facing learning analytics reporting systems research. In Proceedings of the seventh international learning analytics & knowledge conference (pp. 309-318). https://edtechbooks.org/-JzEa

Datassist Inc We All Count (2021, January 14). The data equity framework. We All Count. https://edtechbooks.org/-LpzD

Gray, C. M., Dagli, C., Demiral‐Uzan, M., Ergulec, F., Tan, V., Altuwaijri, A. A., ... & Boling, E. (2015). Judgment and instructional design: How ID practitioners work in practice. Performance Improvement Quarterly, 28(3), 25-49. https://edtechbooks.org/-jCqf

Herodotou, C., Rienties, B., Boroowa, A., Zdrahal, Z., & Hlosta, M. (2019). A large-scale implementation of predictive learning analytics in higher education: The teachers’ role and perspective. Educational Technology Research and Development, 67(5), 1273-1306. https://edtechbooks.org/-QZkj

Lachheb, A. & Boling, E. (2020). The Role of Design Judgment and Reflection in Instructional Design. In J. K. McDonald & R. E. West (Eds.), Design for Learning: Principles, Processes, and Praxis. EdTech Books. https://edtechbooks.org/-zPFg

Lachheb, A., & Boling, E. (2018). Design tools in practice: instructional designers report which tools they use and why. Journal of Computing in Higher Education, 30(1), 34-54. https://edtechbooks.org/-HDB

Nelson, H. G., & Stolterman, E. (2014). The design way: Intentional change in an unpredictable world. MIT press.

Piech, C., Spencer, J., Huang, J., Ganguli, S., Sahami, M., Guibas, L., & Sohl-Dickstein, J. (2015). Deep knowledge tracing. arXiv preprint arXiv:1506.05908.

Phillips, T., & Ozogul, G. (2020). Learning analytics research in relation to educational technology: Capturing learning analytics contributions with bibliometric analysis. TechTrends, 64(6), 878-886. https://edtechbooks.org/-GDwC

Siemens, G. (2013). Learning analytics: The emergence of a discipline. American Behavioral Scientist, 57(10), 1380-1400. https://doi.org/10.1177/0002764213498851

Sergis, S., & Sampson, D. G. (2017). Teaching and learning analytics to support teacher inquiry: A systematic literature review. In Pena-Ayala (Ed.). Learning analytics: Fundaments, applications, and trends (pp. 25-63). New York City, USA, Springer.

Wise, A. F., & Jung, Y. (2019). Teaching with analytics: Towards a situated model of instructional decision-making. Journal of Learning Analytics, 6(2), 53-69. https://edtechbooks.org/-NIiv