Imagine you are at a dinner party and begin chatting with someone you have not seen for many years. Trying to be a good conversationalist, you ask him, "So, what have you been up to lately?" To which he responds, "Oh, lots of stuff, but the coolest thing I’ve been doing is a ton of research into dark matter."

Pause the scene. What do you think he meant when he said "research"? Did he mean that he was reading articles on Wikipedia? Did he mean that he was spending his nights at the library, poring over physics textbooks? Or did he mean that he regularly found himself deep underground working on a particle accelerator? In our vernacular, "research" could reasonably be interpreted in any of these ways. Literally, the word only means to look diligently for something (search) and then to look again (re-search). Yet, each of these interpretations is quite different and implies different processes, different types of effort, and different levels of expertise.

Key Terms

- Advocacy: Adopting or encouraging a particular stance, solution, or policy.

- Critical Thinking: Thoughtful analysis that emphasizes dispassionate, unbiased objectivity.

- Research: Systematic, auditable, empirical inquiry. (This definition may be field-specific and applies here only to education research.)

Imagine now that you are an elementary school teacher and your principal swings by your office for a word. She is very excited because she just heard about a new reading program and thinks it will drastically help your students improve their comprehension and fluency. "And do you know what the best part is?" she asks energetically. "It’s research-based. So, it should be easy to get the district to approve us to purchase it for every classroom."

Pause the scene again. What do you think she meant by "research-based"? Did she mean that the program was designed upon literacy principles found in research literature? Did she mean that the company that made the program also wrote a white paper about how a group of teachers they gifted the program to found it to be useful? Did she mean that a market analyst had contacted previous clients and asked them how much they liked it? Or did she mean that an unbiased, outside organization had conducted randomized classroom implementations to see how the program influenced standardized reading test scores? And if that was the case, then how valid was the test, how drastic were the student improvements that were attributable to the program, and did all students benefit equally?

Again, even in this professional education setting, the term "research" might mean any number of things, allowing interested parties to pass just about anything off as "research-based" in some form or fashion — potentially equating the most thoughtful, rigorous experimental project with the most superficial word-of-mouth check into a product’s value. You can probably guess why this is a problem.

What is research?

If the term "research" is applied to education in too broad a fashion, then suddenly anything can count as research. Reading a blog post is research, asking a friend is research, noticing a headline is research, and all of these might be implied in the same breath as a deep, multi-year, ethnographic study exploring the experiences of an underserved population. Such imprecise, willy-nilly use of the term weakens the persuasive sway that rigorous research should carry and confuses us into thinking that we are talking about the same thing when we may not be.

Conversely, if we apply the term "research" too narrowly, we also might miss out on truly meaningful and insightful examples of research that are necessary for moving forward. If, for instance, we assume that randomized controlled trials performed in a sterile lab are the only activities that should really count as research, then where will we develop the theories that are used to justify those trials, and how can we be sure that the results of those trials will work in vibrant, messy (non-sterile) classrooms? So, though there does seem to be a clear need to define research more narrowly than it is used in the vernacular, we need to be careful not to describe it so narrowly that we lose its key benefits.

Whenever education researchers use the term "research," we might sometimes have different meanings in mind (favoring certain methods or focusing on particular problems; cf. Phillips, 2006), but it seems fair to suggest that we would generally agree on at least four things:

First, research operates as a form of inquiry (Mertens, 2010). The process of doing research is one of asking questions and seeking answers (Creswell, 2008). We might do this by audibly asking a question of a learner, relying on their own self-reports to find the answer, or by framing our question into an experimental test, using our own observations to find it. We might ask a broad question, like "what's going on in Zavala Elementary," or we might ask a narrow, specific question, like "does using Technology X significantly improve performance among 6th graders on the Super Valid Measure of Visible Learning (SVMVL) test?" We might ask a single question in a research study or we might ask many. Researchers operate differently in this regard, but we all ask questions for the purpose of finding answers.

Second, research is systematic (Mertens, 2010). While trying to answer our questions, there are specific, intentional steps we take. We don't just shout against the wall "Are learning styles real?!" and expect the universe to answer. Rather, we embark on a step-by-step process to ever-more-certainly approach the answer, such as proposing "if learning styles were real, then designing instruction for a student's reported style should help them perform better" and then performing necessary steps for checking to see if this is the case.

Third, research is auditable. As we systematically move toward answering questions, we document the steps that we are taking and how they either align with or deviate from the systematic approaches others have taken in the past. This makes the process we followed, from start to finish or from question to answer, visible to anyone else who might also be interested in our question, allowing them to interrogate any step we took and to determine for themselves whether the answer we found should be trusted.

Fourth, research is empirical. Unlike other forms of inquiry that operate exclusively in the internal mind of the inquirer, such as artistic expressions of one’s subconscious or mathematical proof generation, education research relies upon evidence observable in the external physical and social world. This means that research involves the observation or analysis of people or things, not just ideas, and though theory generation, literature synthesis, and critical reflection might all be valuable activities in their own right, they can only be called education research as they are combined with empirical analysis, such as of people or social institutions.

Merging these pieces together, we are able to construct a fairly simple and straightforward definition of education research as "systematic, auditable, empirical inquiry" that is done within the realm of education, such as to address problems impacting learners, educators, or their educational institutions.

Learning Check

Which of the following are empirical forms of inquiry?

- Observing classroom teachers

- Reviewing existing literature

- Testing student performance

- Interviewing parents

For whom do we conduct research?

By emphasizing systematicity, auditability, and empirical verification, this definition implies that as we undertake research we are doing it with a goal of influencing others' thinking. That is, if we find an answer, we want others to trust our answer and for them to find it compelling or persuasive based on the process we followed. This may further complicate matters in education, because the imagined audience that we conduct research for might represent a variety of people who have a stake in education, including teachers, students, parents, policymakers, administrators, or the general public.

Problems in education are complicated partially because they influence such different stakeholders, but also because they are found in complex and unique social institutions (e.g., schools, universities, communities) and involve various disciplinary knowledge domains (e.g., behavioral psychology, sociology, teacher practice, curriculum design). So, attempting to solve even a single problem in education might involve drawing upon several knowledge domains, implementing interventions in many unique social institutions, and attempting to influence diverse stakeholders. For researchers, this means that upon completing a study, we might write up our results in a journal article for other researchers to learn from, might summarize our findings for policymakers in a brief, might post a short practitioner guide on a parenting blog or in a teaching magazine, or might be interviewed by reporters about the implications of our work for the public. Such complexity makes education research quite different from research in many other fields because the expected audiences for our research are quite varied, and this influences what questions we will ask, how we will go about answering them, and where we will report our answers (Floden, 2006).

It turns out that each of these audiences has very different values, expectations, and practices when it comes to research, and as researchers, we will often find ourselves doing research for mixed audiences to achieve our goals. For instance, other researchers in our audience will value work that exhibits "careful design, solid data, and conclusions based on cautious and responsible inference" (Plank & Harris, 2006). Researchers also relish nuance and recognize the importance of context for their results to be valid, meaning that they may be reserved in providing sweeping policy suggestions or strategies for reform that are intended to work in every situation. Researchers will also generally target scholarly journals as publishing venues both to make their work available to other researchers and to meet scholarly rigor requirements placed upon them for tenure and promotion by their educational institutions.

Practitioners, on the other hand, such as teachers, instructional designers, curriculum developers, and other education professionals, will value ready applicability of research findings to their current needs and contexts. Teachers will not care whether STEM achievement is lagging nationwide if their own students are performing acceptably, and they will not adopt purported solutions to achievement gaps in their own classrooms if they do not think the solutions will be effective or can be implemented in their specific contexts. Because this context piece is so vital to teachers, they are more likely to trust their colleagues (who are closer to their contexts) than professional researchers (who might be more informed but also more contextually distant). This does not mean that practitioners are antagonistic toward emerging research or are resistant to change but rather that applicability is their top, guiding priority, and research that ignores context or reports in abstraction will generally be ignored.

As a third group, policymakers tend to be intensely interested in particular research questions that reflect issues of current concern to the general public, but such interest is typically fleeting, and "as public attention shifts, they quickly move on to other issues" (Plank & Harris, 2006). Policymakers will value research that is compatible with their prior beliefs and commitments, that is timely, that is simple, that has clear action-oriented implications, and that is generalizable beyond specific contexts (Plank & Harris, 2006). This leads to very blunt or simplistic interpretations of research findings that may lack nuance or engineered precision and also reflects limited trust in scientific findings wherein "Aunt Minnie's opinion may carry equal or greater weight [to a published research study] because of her 30 years as a teacher or parent" (Plank & Harris, 2006).

And finally, the general public is a fourth potential audience for research, one that shapes both what policymakers care about and what practitioners are required to do (e.g., as parents make demands of school boards). Like some of the other groups, the general public has limited understanding of research methodology and limited interest in and access to scholarly journals. This means that they will typically rely upon popular news outlets, such as television news hours, news sites, and popular blogs, to highlight only those research findings that will be of broad interest and to summarize research methods and findings in ways that are readily understandable and that connect to social issues and political topics of the day. Such outlets also will be heavily biased toward research studies with findings that seem to be novel, troubling, or contentious, because these will be more likely to garner readers, and will favor the dissemination of interesting pseudoscience and debunked beliefs over real science (cf. Willingham, 2012). A study that considers student STEM preparation, then, might only be reported by popular news outlets if it contradicts current wisdom, connects to a popular political agenda, or suggests a crisis (e.g., "the U.S. economy is becoming less competitive due to poor STEM preparation for the workplace"). This means that research will have a highly mediated relationship to the general public, where most people will only read research results that have been intentionally sifted, collated, and rebranded through multiple layers of editorial bias. This filtering contributes to general misunderstanding or misrepresentation of research results, which will, in turn, influence policymaking and practice through public pressure.

Such divides between potential research audiences are often lamented but may be necessary and deserving of respect in democratic societies. Elected officials, practitioners, and the general public cannot reasonably be expected to study and evaluate the research literature at the same depth that scholars do, and so the responsibility for bridging any such gaps will fall mainly on scholars to "make their work more accessible and useful to [other] audiences" (Plank & Harris, 2006). Thus, researchers cannot publish a journal article and consider the research process finished; interpreting and disseminating results to diverse and broad audiences should also be an integral part of that process. How this is done via a variety of publication venues will be explored more deeply in later chapters on research reporting.

Learning Check

When considering the results of a research study, what will teachers likely consider to be most important?

- Rigor

- Consensus

- Applicability

- Broad Impact

When considering the results of a research study, what will researchers likely consider to be most important?

- Rigor

- Consensus

- Applicability

- Broad Impact

When considering the results of a research study, what will policymakers likely consider to be most important?

- Rigor

- Consensus

- Applicability

- Broad Impact

What makes a good education researcher?

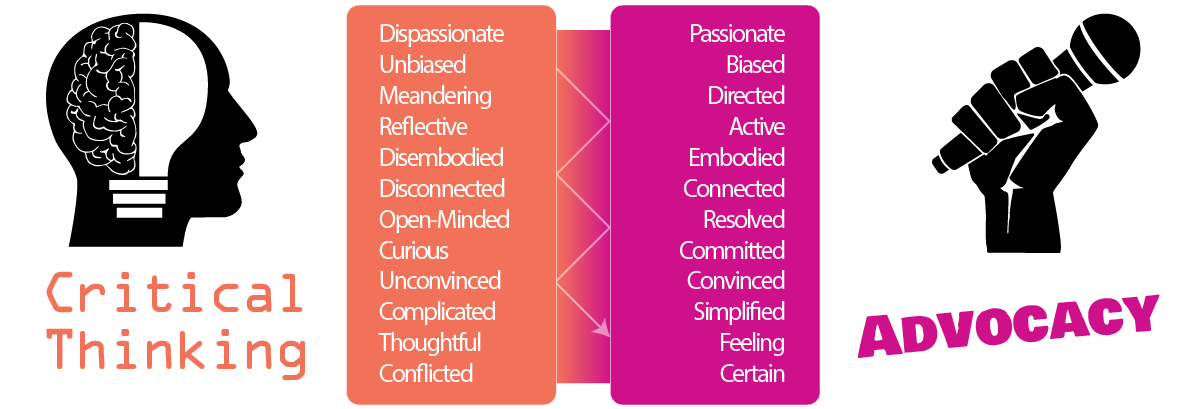

Fig. 1. Characteristics of critical thinking vs. advocacy

Research serves several important functions in our society, such as adding to general knowledge, improving practice, and informing policy debates (Creswell, 2008). Unlike other areas of inquiry where research might be undertaken for the sheer purpose of learning about the world (e.g., naturalists like Thoreau experiencing nature), education research tends to start with some specific assumptions and goals, and at the heart of these lies a central, golden premise that we go about doing research for the direct purpose of improving education.

What "improving education" means may vary between people, projects, or contexts, and it may include a host of goals including improving curricular quality, improving equity, reducing cost, improving people’s lives, or improving the economy. However, no matter what these underlying goals may be, education research is unique as a discipline because it attempts to use systematic data gathering and analysis to achieve improved educational outcomes, either by informing what needs to be done or by adding legitimacy to what may already be happening. This typically takes the form of researchers tackling social problems and issues that can be very complex and highly politicized (such as school choice, racial segregation, economic disparity, individual learner differences, standardized testing, and so forth) and requires researchers both to grapple rigorously with problems in thoughtful, insightful ways via critical thinking and to socially influence the world toward becoming a better place via advocacy.

This two-fold mission of education researchers may at first seem odd to some who are new to the discipline, because Western narratives of research have historically positioned the researcher as an objectivist outsider who observes the world at a distance, such as an ancient white man in an ivory tower peering down on the masses through a telescope. Such monastic views of the researcher have emphasized his (yes, his; we will discuss the role of gender in education research in a later chapter) role as a dispassionate observer who must be physically separated and free from the biases and problems of the day to provide dispassionate analysis of the state of the world from a privileged position. The reasoning for such a view is that in order to provide rational, valid guidance to the world, the researcher must prevent passions, connectedness, and proximity from skewing his rational judgment and unbiased analysis of the facts as they are. Through this separation, such narratives attempt to frame the researcher as the quintessential critical thinker, a purely analytic machine, an inhuman demigod, or a brain in a vat, so that we can confidently trust his judgments as thoughtful, unbiased, and fair.

Yet, though critical thinking is indeed a necessary requirement of the researcher, treating critical thinking as an unequivocal virtue and monastic approaches as the only or even best way of knowing seems dubious. In subsequent chapters, I will explore ways of knowing in more detail but will close this chapter with a brief explanation of why critical thinking and advocacy must work in tandem as the most useful and meaningful way forward for education researchers.

All humans face problems that require critical thinking on a daily basis. Critical thinking is characterized by thoughtfully weighing alternatives and evidence, resisting premature conclusions, and attempting to understand how our own biases and assumptions about the world may be influencing what we are experiencing (cf. Fig. 1). Such processes require concerted intellectual effort and can place us in a state of paralytic limbo where we willingly withhold action or judgment until we can fully weigh our options: which grocery item to purchase, which class to take, which book to read, which movie to watch, which person to talk to, or which words to use. Such activities are essential for our wellbeing because, without them, we would regularly purchase expired produce, speak to people in languages they cannot understand, and generally waste our time on efforts that have little or no benefit. Yet, we engage in critical thinking for the purpose of coming to a reasonable conclusion, and if we fail to come to a conclusion, then have we done it right?

Suppose I have a few hours to spare. I switch on a video streaming service and begin browsing the list of movies. If I spend the entirety of my time browsing the options but never settle on one to watch, then have I successfully employed critical thinking? I might have weighed my options, read the synopses, looked at ratings, and checked out the cover photos, but if this process doesn’t produce a decision that I then act upon, then was the effort worth it? If you and I start a conversation, and I stare at you blankly for 10 minutes, because I am trying to decide on the best words to use, then would you praise me as being a great critical thinker?

Critical thinking exists to serve a purpose: to inform action. As Reber (2016) explains, "thinkers can deliberate too much," leading to worse outcomes and suggesting that we need "a criterion regarding when to stop" (p. 29). Properly employed, then, critical thinking consists of temporary inaction that is undertaken to provide better-informed action. If an action does not follow, as in the cases of extreme intellectual skepticism, cynicism, and the paralytic social behaviors mentioned above, then such paralysis should be regarded not as expert critical thinking but as failed critical thinking. That is, critical thinking only serves its purpose if it leads us toward becoming advocates for something, by choosing which movie to watch, which words to say, or which educational initiative to champion.

By shifting from critical thinking to advocacy, we take an (again) temporary stance of confident action toward the problems we are trying to solve and act upon the guidance that our critical thinking has provided. This might mean adopting an intervention, advocating for a policy change, or writing a provocative op-ed. We boldly act on what we have learned and attempt to put it into action. Once we do this, however, the essential element that keeps us true to form in our roles as education researchers is that we always step back to critical thinking in the next movement, being willing to critically analyze new evidence, evaluating how our forward movements worked to influence our goals, and so forth.

The imagery that emerges from this constant stepping forward in advocacy and back in critical thinking is that of a pair of dancers aesthetically moving about a stage. At the macro-level, movement is occurring, change is happening, and the dance progresses to an eventual beautiful finale, even though the micro-footsteps of the dancers skirt back-and-forth in every direction. This is quite different from the regimented forward-marching of advocacy alone or the backpedaling and directionless navel-gazing of critical thinking alone, in that these two essential components of research work together in tandem to lead us to the desired result.

We engage in this dance not to try to announce to the world from our tower that we understand the masses better than they understand themselves, nor to shout back up toward the tower "No thanks, we’ve got everything figured out!" Rather, the best education researchers find their homes in this constant dance of stepping back to think critically about evidence, stepping forward to advocate for improvement, stepping back to think critically about new evidence and assumptions, and so forth ad aeternum.

References

Creswell, J. W. (2008). Educational research: Planning, conducting, and evaluating quantitative and qualitative research (third edition). Upper Saddle River, NJ: Pearson.

Floden, R. E. (2006). What knowledge users want. In C. F. Conrad & R. C. Serlin (eds.), The SAGE Handbook for Research in Education. Sage. doi:10.4135/9781412976039.n2

Mertens, D. M. (2010). Research and evaluation in education and psychology: Integrating diversity with quantitative, qualitative, and mixed methods (third edition). Los Angeles, CA: Sage.

Phillips, D. C. (2006). Muddying the waters: The many purposes of educational inquiry. In C. F. Conrad & R. C. Serlin (eds.), The SAGE Handbook for Research in Education. Sage. doi:10.4135/9781412976039.n2

Plank, D. N., & Harris, D. (2006). Minding the gap between research and policymaking. In C. F. Conrad & R. C. Serlin (eds.), The SAGE Handbook for Research in Education. Sage. doi:10.4135/9781412976039.n2

Reber, R. (2016). Critical feeling: How to use feelings strategically. Cambridge: Cambridge University Press.

Willingham, D. T. (2012). When can you trust the experts? How to tell good science from bad in education. San Francisco: Jossey-Bass.