Learner experience in technology-enhanced learning environments is often evaluated or analyzed with traditional usability heuristics (e.g., Nielsen, 1994a, 1994b) to determine whether a given tool is usable or user-friendly. However, as Nokelainen (2006) has established, pedagogical usability, an approach that takes into account pedagogical design issues such as instructions and learning tasks, is often neglected. In addition, the social dimension of evaluating online or hybrid learning environments is largely absent from existing usability heuristics. We analyzed relevant literature to develop a conceptual framework that includes three dimensions: social (S), technological (T), and pedagogical (P) usability. In this chapter, we present this framework as sociotechnical-pedagogical usability. We assert that this framework can serve as the basis for future researchers to advance a new set of STP heuristics for learning design. We also provide design recommendations that address social, technological, and pedagogical usability for evaluating online and other technology-mediated formats of learning.

1. Introduction

Typically, instructors, instructional designers, and learning designers do not develop new learning technologies from scratch. Rather, when developing courses or learning materials, they make design decisions based on existing tools or on readily available features of broader learning management systems (e.g., Canvas, Moodle), including how to arrange content and tools in novel ways so as to positively influence learner experience in online or hybrid learning arrangements. However, learners’ engagement with learning technologies in real-world contexts often differs from what designers anticipated when designing and planning the technology (Schmidt & Tawfik, 2018; Straub, 2017). This disconnect can prevent the potential of a learning design from being fully realized for a variety of reasons, including (a) learners abandoning the technology due to technical problems, (b) learning designs lacking sufficient plasticity to meet the diverse and sometimes unanticipated needs of teachers and learners, or (c) socio-cultural contexts influencing technology adoption counter to designer intent (El-Masri & Tarhini, 2017; Gan & Balakrishnan, 2016; Orlikowski, 1991, 1996).

Increasingly, learning design researchers are applying methods from usability and user experience (UX) research to evaluate and improve the learner experience (LX) with learning technologies (Earnshaw et al., 2018). UX and LX share much in common, particularly in their methodological approaches, and this overlap makes the two difficult to differentiate. The difficulty is exacerbated by the lack, to date, of an accepted definition of LX. Some of the factors that distinguish LX from UX relate to the goals, focus, and context of each. The three primary defining characteristics of UX relate to the user’s (a) involvement, (b) interaction, and (c) observable/measurable experience with a technology or product (Tullis & Albert, 2013). Importantly, these three characteristics must be considered broadly, across all contexts in which the user encounters, and thereby experiences, the technology or product. One goal of user experience design (UXD) is to improve the arrangement of tools or features, from the user’s perspective, so as to enhance the usability and UX of a digital product. The focus of UX is therefore quite broad, applying to any technology in any context for any user. Learner experience design (LXD), however, focuses more narrowly on improving the usability and LX of one type of technology (learning technology) from the perspective of one type of user (the learner). While UX is applied in a broad variety of contexts, LX is applied only in learning design contexts. For a more comprehensive consideration of how LX is defined, readers are referred to the introductory chapter of this volume.

Usability evaluation is perhaps the most widely practiced research methodology in UX. A variety of methods can be used to evaluate usability, including Nielsen’s well-known heuristics (1994a, 1994b) and task-based usability methods (e.g., Hackos & Redish, 1998). These methods aim to evaluate the perceived ease of use of digital technologies, and their findings in turn present opportunities to improve those technologies. For an overview of usability methods, readers are referred to Schmidt et al.’s (2020a) chapter in this volume and to Earnshaw and colleagues’ (2018) work on UXD. However, a tension exists: evaluation methods that focus narrowly on technological usability prove insufficient when applied in learning contexts (see Lim & Lee, 2007; Reeves, 1994; Silius et al., 2003). This has led to calls for usability approaches that are sensitive to pedagogical needs, that is, “pedagogical usability” (Moore et al., 2014; Nokelainen, 2006). Furthermore, researchers in the learning sciences agree that learning is a social endeavor and that meaningful learning with technologies should be embedded within social group activities (Dabbagh et al., 2019). Within this frame, learning depends on the quality of social relations and interactions with teachers and peers (Jahnke, 2015). Studies by Jahnke et al. (2005) suggest that social interactions and social roles are likewise important in fostering human-centered learning processes. Nevertheless, the social dimension is often overlooked when evaluating learning technologies (Gamage et al., 2020; Kreijns et al., 2003).

As the field of learning design increasingly recognizes the value of human-computer interaction (HCI) and its daughter disciplines such as UX (Gray et al., 2020; Moore et al., 2014; Schmidt et al., 2020b), researchers and practitioners in this field are encouraged to heed emerging trends in these areas. There is therefore a timely and urgent need to foreground the inherent technocentric bias of usability evaluation, whose central focus on technological usability ignores factors that are critical to learning, specifically the pedagogical and social dimensions of learner experience. Meaningful technology-mediated learning represents a complex activity system that must account for these pedagogical and social dimensions (Kaptelinin & Nardi, 2018). However, given the lack of accepted defining characteristics of LX and of clear guidance on how LX differs from UX, there is a danger that usability methods applied in an LX context could fail to account for these critical considerations. It follows that usability evaluation of technology-enhanced learning should embrace a broader conceptualization of usability, one that considers (a) the social dimension, (b) the technological dimension, and (c) the pedagogical dimension. We refer to this broader conceptualization as sociotechnical-pedagogical usability. In the following sections, we propose and discuss a conceptual framework and associated heuristics for sociotechnical-pedagogical usability that could enhance the theoretical and practical utility of usability evaluation as applied to learning technologies.

2. Conceptual Framework

Central to this chapter is a view of learning technology usage as a new social practice of instructors, teachers, and learners. New technologies naturally prompt changes to existing work and/or learning processes, structures, and cultures. Correspondingly, introducing new technologies into learning contexts necessarily influences learning processes; the result is an interwoven, co-evolutionary manifestation of complex social, technical, and pedagogical interactions (Marell-Olsson & Jahnke, 2019). This has been considered a ‘wicked problem’, one that has challenged researchers since sociotechnical approaches emerged in the early 1950s to support human work through technological and organizational change (cf. Trist & Bamforth, 1951). While HCI research investigates person-technology relationships, learning design must consider not only the technological dimension of this relationship but also how technology influences the pedagogical and social dimensions of learning. As noted above, we refer to this as sociotechnical-pedagogical usability, which we expand upon in the following sections.

2.1. Toward Learner Experience Evaluation: Extending Technological Usability to Consider Social and Pedagogical Dimensions of Technology-Enhanced Learning

Generally speaking, traditional (technological) usability evaluation focuses on the user interface (UI) and how user interaction with the UI enables the user to achieve certain goals related to the tool (Nielsen, 1994a, 1994b). Nielsen and Loranger (2006) define usability as:

How quickly people can learn to use something, how efficient they are while using it, how memorable it is, how error-prone it is, and how much users like using it. If people can’t or won’t use a feature, it might as well not exist (p. xvi).

Drawing from this definition, traditional usability evaluation or testing considers the following factors: (a) ease of use, (b) efficiency, (c) error frequency and severity, and (d) user satisfaction (Nielsen, 1994a, 1994b; Nielsen & Loranger, 2006). Usability research is concerned with optimizing user interactions with the UI so as to enable users to perform typical tasks, as well as with aesthetic features that support a positive user experience with the system. This traditional approach to usability evaluation is known as technological usability. Technological usability is also evident in technology-enhanced learning environments. For example, instructors and learners interact with the UI features of learning management systems (e.g., navigating to resources, viewing grades, creating posts on the discussion board, submitting assignments). The technological usability of a given e-learning, hybrid, or online course delivery system therefore affects the learner experience: systems with higher technological usability promote better learner experiences than those with lower technological usability (Althobaiti & Mayhew, 2016; Parlangeli et al., 1999). This suggests that failing to address technological usability during the design of learning technologies introduces barriers to learner inquiry and navigation within the learning environment, thereby hindering knowledge construction.

Technological usability is therefore clearly an important aspect of overall LX. However, UI interactions alone are insufficient to fully explain the overall quality of LX because, as Rappin and colleagues (1997) maintain, “The requirements of interfaces designed to support learning are different than for interfaces designed to support performance” (p. 486). High technological usability does not guarantee that using a learning system will lead to a positive learning experience or promote learning outcomes. The pedagogical and social dimensions of the learning process must also be considered. Technological usability evaluation does not address, for example, communication among students and teachers, content arrangement, learning level support, or learning objectives (Jahnke, 2015; Lim & Lee, 2007). When engaging with a learning system, learners do not only interact with a user interface; they also interact with intentionally designed learning materials (the pedagogical) in the context of a learning community or affinity group (the social). Explicitly acknowledging the pedagogical and social dimensions of learner experience alongside technological considerations reveals a complex and interconnected view of technology-mediated learning.

The sociotechnical perspective, which combines the social and technical dimensions of technology-mediated learning, draws from sociotechnical theory (cf. Cherns, 1976, 1987; Mumford, 2000) and applies it to a learning context. Within a sociotechnical frame, learners’ actions and interactions with others (e.g., discussions, file sharing, chat) are mediated by learning technologies (e.g., learning management systems, serious games). These technology-mediated social experiences can be characterized as human-to-human-via-computer interaction, or HHCI (Squires & Preece, 1999). From a learning perspective, the design of HHCI can have a critical impact on learning processes and learner interactions. For example, Jahnke et al. (2005) highlighted how the presence or absence of access to certain tools or files directly influenced learning and interactions. Their work foregrounds the significance and dynamic nature of learner roles in HHCI learning environments. Schmidt (2014) reported that social interactions in a multi-user virtual reality learning environment had to be carefully engineered using specific technology affordances, and in some ways restricted, to promote intended learning outcomes. His work illustrates the sometimes unpredictable nature of learners’ social interactions in novel technology contexts. Key to the sociotechnical dimension, therefore, is the notion of dual optimization, that is, optimizing both the technological and the social dimensions of the learner experience.

The technological-pedagogical perspective, which combines the pedagogical and technological dimensions of technology-mediated learning, refers to the extent to which the tools, content, interface, and tasks in technology-mediated learning environments support learners’ achievement of learning goals and objectives (Silius et al., 2003). Related to this is the concept of pedagogical usability, an approach to usability that is less frequently studied than technological usability (Nokelainen, 2006). Pedagogical usability considers the extent to which “the tools, content, interface, and tasks of the learning environments support myriad learners in various learning contexts according to selected pedagogical objectives” (Moore et al., 2014, p. 150). This approach to usability evaluation is uniquely needed in technology-mediated learning contexts because it focuses primarily on the design of learning tasks within a user interface rather than on the interface alone. A variety of pedagogical usability frameworks and heuristic checklists for evaluating web-based learning have been established in the literature (cf. Albion, 1999; Horila et al., 2002; Lim & Lee, 2007; Moore et al., 2014; Nokelainen, 2006; Quinn, 1996; Reeves, 1994; Silius et al., 2003; Squires & Preece, 1996, 1999). Frameworks and checklists like these enable learning designers to interrogate the features and affordances of a given learning technology that positively influence learning. By extension, this can lead to improvements in a given learning design and in more general heuristics and principles for designing quality technology-mediated learning. Importantly, this has ramifications for the instantiation and extension of learning theory (McKenney & Reeves, 2018). Central to pedagogical usability is the interplay of technology and pedagogy, which suggests that an ecological perspective is needed in the design of technology-mediated learning.

3. Method

Having established three dimensions of learner experience in the sections above (the technological, the social, and the pedagogical), the question arises as to how these dimensions might be evaluated in practice. Nokelainen’s (2006) work on pedagogical usability is relevant here because he systematically identified and analyzed the evaluation criteria/heuristics from eight sources to support his evaluation framework. However, his work centered on pedagogical usability alone, whereas we are interested in the three dimensions of the technological, the social, and the pedagogical. Further, his work on pedagogical usability is nearly 15 years old, and a variety of researchers have extended it since. We therefore sought to analyze and update Nokelainen’s work to uncover and extend associated evaluation criteria. To this end, we performed a targeted literature review to identify relevant articles published since 2006. This review identified five articles in addition to the eight from Nokelainen. This corpus of 13 articles was analyzed to identify evaluation criteria, which were then summarized and synthesized. Table 1 presents the evaluation criteria identified in our literature review related to usability heuristics for technology-mediated learning (as adapted and updated from Nokelainen, 2006).

Table 1

Social, Technological, and Pedagogical Evaluation Criteria Drawn From the Literature

(as adapted and updated from Nokelainen, 2006)

| Author(s), Year | Dimension(s) of learner experience | Title | Criteria |
|---|---|---|---|
| Nielsen, 1994a, 1994b* | Technological | 10 Usability Heuristics for User Interface Design | Visibility of system status; Match between system and the real world; User control and freedom; Consistency and standards; Error prevention; Recognition rather than recall; Flexibility and efficiency of use; Aesthetic and minimalist design; Help users recognize, diagnose, and recover from errors; Help and documentation |
| Squires & Preece, 1999* | Technological-Pedagogical | Predicting quality in educational software | Appropriate levels of learner control; Navigational fidelity; Match between designer and learner models; Prevention of peripheral cognitive errors; Understandable and meaningful symbolic representation; Support personally significant approaches to learning; Strategies for cognitive error recognition, diagnosis, and recovery; Match with curriculum |
| Albion, 1999* | Technological-Pedagogical | Heuristic evaluation of educational multimedia: from theory to practice | Establishment of context; Relevance to professional practice; Representation of professional responses to issues; Relevance of reference materials; Presentation of video resources; Assistance is supportive rather than prescriptive; Materials are engaging; Presentation of resources; Overall effectiveness of materials |
| Reeves, 1994* | Mainly pedagogical | Evaluating what really matters in computer-based education | Learner control; Pedagogical philosophy; Underlying psychology; Goal orientation; Experiential value (authenticity); Teacher role; Program flexibility; Value of errors; Cooperative learning; Motivation; Epistemology; User activity; Accommodation of individual differences (scaffolding); Cultural sensitivity |
| Quinn, 1996* | Mainly pedagogical | Pragmatic evaluation: lessons from usability | Clear goals and objectives; Context meaningful to domain and learner; Content clearly and multiply represented and multiply navigable; Activities scaffolded; Elicit learner understandings; Formative evaluation; Performance should be ‘criteria-referenced’; Support for transference and acquiring ‘self-learning’ skills; Support for collaborative learning |
| Squires & Preece, 1996* | Technological, Pedagogical | Usability and learning: evaluating educational software | Specific learning tasks; General learning tasks; Application operation tasks; General system operation tasks |
| Horila et al., 2002* | Technological, Pedagogical | Criteria for the pedagogical usability, version 1.0 | Learnability; Graphics and layout; Technical requirements; Intuitive efficiency; Suitability for different learners and different situations; Ease of use: technical and pedagogical approach; Interactivity; Objectiveness; Sociality; Motivation; Added value for teaching |
| Silius et al., 2003* | Pedagogical | A multidisciplinary tool for the evaluation of usability, pedagogical usability, accessibility and informational quality of web-based courses | Support for organization (organization of teaching, organization of studying, support for education training portal for different user groups); Support for learning and tutoring process (learning process, tutoring process, achievement of learning objectives influenced by motivation, cooperation, collaboration, reflection, knowledge construction, intention, activation, authenticity, contextualization, transfer); Support for development of learning skills (self-direction, interaction with other actors, and learners’ autonomy) |
| Nokelainen, 2006 | Mainly pedagogical | An empirical assessment of pedagogical usability criteria for digital learning material with elementary school students | Learner control; Learning activity; Cooperative/collaborative learning; Goal orientation; Applicability; Added value; Motivation; Valuation of previous knowledge; Flexibility; Feedback |
| Lim & Lee, 2007 | Pedagogical | Pedagogical usability checklist for ESL/EFL e-learning websites | Instruction; Contents; Tasks; Learner variables; Interactions; Evaluations |
| Moore et al., 2014 | Pedagogical | Designing CMS courses from a pedagogical usability perspective | Content organization; Information load and cognitive load; Assessment |
| Jahnke, 2015 | Pedagogical, Social | Digital Didactical Designs | Teaching/learning objectives; Learning activities; Forms of assessment; Support of interaction; Communication and roles among students and teacher |
| Marell-Olsson et al., 2019 | Pedagogical, Social | Wearable Technology in a Dentistry Study Program | Communication support; Information support; Social relations; Student-centered; Reflective feedback for learning, clear purpose, added value |

Note. Sources marked with an asterisk (*) were identified by Nokelainen (2006).

4. Results

The criteria presented in Table 1 highlight the intersections between the technological, pedagogical, and social dimensions of evaluating technology-mediated learning systems. We analyzed all criteria presented in Table 1 to identify salient points of convergence and divergence, using categorical aggregation to code similar items into categories (Creswell & Poth, 2016). Drawing from this analysis, we synthesized our findings along key dimensions of a usability evaluation that considers not only the technological but also the social and pedagogical aspects of learner experience. We term this multidimensional usability framework sociotechnical-pedagogical usability. An overview of the dimensions and coded criteria is provided below in Table 2.

Table 2

Coded Items Revealing the Three Dimensions of Social, Technological and Pedagogical Usability

| Dimension | # of coded items | Categories of coded items (#) |
|---|---|---|
| Social | 13 | Collaboration (4); Communication (4); Roles, relationships (3); Sociality, social interactivity (2) |
| Technological | 20 | Nielsen’s 10 heuristics, navigation, learnability, prevention of cognitive errors |
| Pedagogical | 61 | Learning activities (19); Learner context, motivation (9); Content arrangement, material organization (9); Assessment (7); Goals, objectives (6); Learner control (6); Assignments, tasks (5) |
| Meta-category (misc.) | 6 | e.g., Epistemology, added value for teaching, objectiveness, match between designer and learner models |

Note. Of the 97 items in Table 1, three were coded into more than one category, yielding a total of 100 coded items.
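Because the totals in Table 2 rest on simple arithmetic, a reader may wish to verify them. The Python sketch below is an illustration added for that purpose, not part of the original analysis: it transcribes the per-source criteria counts from Table 1 and the per-dimension and per-category counts from Table 2, then sums them. The dictionary and function names are hypothetical, and reading the difference between the two totals as one extra code per multi-coded item is an assumption inferred from the totals, not a detail reported by the analysis.

```python
"""Illustrative cross-check of the item counts reported in Tables 1 and 2."""

# Number of criteria listed for each of the 13 sources in Table 1.
TABLE1_CRITERIA = {
    "Nielsen, 1994a, 1994b": 10,
    "Squires & Preece, 1999": 8,
    "Albion, 1999": 9,
    "Reeves, 1994": 14,
    "Quinn, 1996": 9,
    "Squires & Preece, 1996": 4,
    "Horila et al., 2002": 11,
    "Silius et al., 2003": 3,
    "Nokelainen, 2006": 10,
    "Lim & Lee, 2007": 6,
    "Moore et al., 2014": 3,
    "Jahnke, 2015": 5,
    "Marell-Olsson et al., 2019": 5,
}

# Number of coded items per dimension, as reported in Table 2.
TABLE2_DIMENSIONS = {
    "Social": 13,
    "Technological": 20,
    "Pedagogical": 61,
    "Meta-category (misc.)": 6,
}

# Category counts that Table 2 reports for the social and pedagogical dimensions.
SOCIAL_CATEGORIES = {
    "Collaboration": 4,
    "Communication": 4,
    "Roles, relationships": 3,
    "Sociality, social interactivity": 2,
}
PEDAGOGICAL_CATEGORIES = {
    "Learning activities": 19,
    "Learner context, motivation": 9,
    "Content arrangement, material organization": 9,
    "Assessment": 7,
    "Goals, objectives": 6,
    "Learner control": 6,
    "Assignments, tasks": 5,
}


def summarize_counts() -> dict:
    """Aggregate the transcribed counts so they can be compared with the Table 2 note."""
    source_items = sum(TABLE1_CRITERIA.values())
    coded_items = sum(TABLE2_DIMENSIONS.values())
    return {
        "sources": len(TABLE1_CRITERIA),
        "source_items": source_items,
        "coded_items": coded_items,
        # Assumption: each item coded into more than one category adds exactly one extra code.
        "extra_codes": coded_items - source_items,
        "social_categories_match": sum(SOCIAL_CATEGORIES.values()) == TABLE2_DIMENSIONS["Social"],
        "pedagogical_categories_match": sum(PEDAGOGICAL_CATEGORIES.values())
        == TABLE2_DIMENSIONS["Pedagogical"],
    }


if __name__ == "__main__":
    for name, value in summarize_counts().items():
        print(f"{name}: {value}")
```

Run as a script, it reports 13 sources, 97 source items, 100 coded items, and 3 extra codes, and confirms that the social (4 + 4 + 3 + 2 = 13) and pedagogical (19 + 9 + 9 + 7 + 6 + 6 + 5 = 61) category counts match their dimension totals.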

In this section, we have presented our conceptual framework and explicated the dimensions of evaluation that are central to sociotechnical-pedagogical usability. We now turn to the implications of these findings for a preliminary conceptual model.

5. Discussion

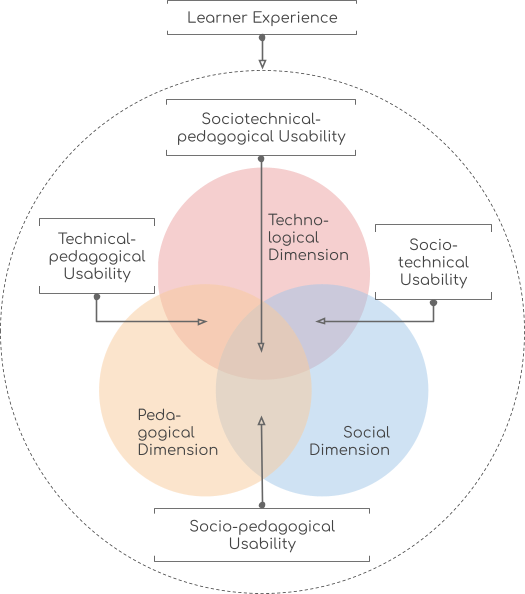

By considering the three dimensions of usability identified in our literature review, namely the technical, the social, and the pedagogical, we see the emergence of an interconnected and interdependent framework that extends traditionally narrow views of technical usability toward a more holistic view acknowledging the centrality of the pedagogical and social aspects of learning. In this chapter, we identified evaluation dimensions drawn from the social, technical, and pedagogical aspects of learner experience to better understand the overall learner experience in technology-mediated learning environments. These three dimensions are not entirely new; however, we advocate combining them and applying them together. Doing so can help evaluators identify potential design flaws in technology-mediated learning designs and then remediate those flaws. We assert that the congruence of these three dimensions in learning designs will promote positive learning experiences. This congruence is represented in Figure 1.

Figure 1


Multi-Dimensional Usability Framework in the Context of Learner Experience

Figure 1 illustrates the intersections of the social, pedagogical, and technological dimensions. First is sociotechnical usability, which involves the technological and the social dimensions. It explains how the technological dimension (e.g., Nielsen, 1994b) and the social dimension are interdependent. The social dimension consists of learner and teacher communication and collaboration, including the social presence and social relationships that must be built for learning, which cannot be achieved without a usable technology tool. Technological usability is therefore necessary but, on its own, insufficient for a technology-enhanced learning environment. Rather, usable, user-friendly support for online social presence provides the critical foundation for developing a community of learners, promoting active learning, and engaging students in learning.

Second, socio-pedagogical usability is situated at the intersection of the social and pedagogical dimensions and focuses attention on how to balance social and pedagogical factors in learning design. The pedagogical dimension involves instruction and learner tasks or assignments, including learning/instructional strategies, clear scaffolding and supports, instructions, and meaningful learning activities. While the pedagogical dimension is necessary for active, student-centered learning, the social dimension is necessary to foster a positive learner experience. The social dimension includes sociality and social presence in online or hybrid learning.

Third, technical-pedagogical usability is positioned at the intersection of the technological and pedagogical dimensions and emphasizes the integration of usable technology with usable pedagogy. While technological usability is necessary but not sufficient for the whole learner experience, the pedagogical dimension considers (a) the instructor’s or teacher’s (or instructional designer’s) perspective on how to describe and distribute teaching goals and objectives, (b) learner activities and assignments, and (c) formative and summative assessment (e.g., rubrics) for each activity. In summary, the intersections of the three dimensions, which together we call sociotechnical-pedagogical usability, show how the dimensions affect each other. Even more importantly, explicitly naming these dimensions and describing their interrelated properties gives learning/instructional designers and instructors a generative framework for what to design when engaging in the design of active, student-centered learning with technologies.

Drawing from our synthesis of the social, technological, and pedagogical evaluation dimensions outlined in Table 1 and explicated in Figure 1, we now turn to design recommendations (Table 3). Collectively, these design recommendations contribute to the field’s understanding of learner experience design in that they (a) acknowledge the utility of usability evaluation for exploring and assessing learner experience; (b) provide a nuanced approach to conceptions of usability in the context of learning design; (c) stratify learner experience in technology-mediated learning contexts across the social, technological, and pedagogical dimensions; and (d) provide a foundation upon which future researchers can build and extend.

Table 3

Sociotechnical-Pedagogical Usability Design Recommendations for Hybrid or Online Courses

| Usability dimension | Design recommendations for supporting learner experience in active, student-centered learning environments |
|---|---|
| Social | Building social relationships and fostering students’ active learning roles through communication and online social presence: prominent social presence of the instructor through a variety of actions (e.g., discussion boards, announcements, emails); introduction of the instructor through a video, or an image combined with text; an introductory discussion post at the beginning of the course in which students share a video, or an image with text, to introduce themselves to the class; one instructor-learner meeting during the course to share feedback and thoughts; instructor feedback for each student at least once a week; inclusion of group learning activities to enhance a sense of belonging; avoidance of negative or demotivating messages to students |
| Technological | Technological usability and user-friendliness of tools: smooth integration of, and easy access to, multimedia features; instruction on how to use multimedia technologies; contact details for technical support (support for recovery from errors); readily available instructions on how to resolve common technical issues; transcripts of audio and video content for easy access to information; navigation guidelines that are easy to locate and understand; in general, application of Nielsen’s usability heuristics |
| Pedagogical: learning goals and objectives | Well-worded goals for the entire course and weekly objectives; weekly objectives aligned with weekly content, learning activities, and assignments; goals and objectives that are easy to locate |
| Pedagogical: content organization | Logical categorization of weekly content in an intuitive sequence; shortcuts from the main navigation menu to different areas of the course, such as grades, syllabus, and assignments; inclusion of interactive multimedia and visual information through voice, music, animation, real-environment clips, or images; an instructional tutorial on how to navigate the course structure and complete activities; avoidance of multiple topics in a single week |
| Pedagogical: learning activities, tasks, instructions | Consistency in content load; clear and detailed instructions on how to complete different activities, such as replying to discussion posts and using quizzes; design for active, student-centered learning (understanding, applying, creating); learning activities that bridge classroom learning and the real world; opportunities for distance learners to apply new knowledge where they live (the importance of place); time flexibility to accommodate students in completing their activities; connection of learning content to students’ real-world contexts |
| Pedagogical: process-based assessment using rubrics | Student-centered assessment in which learners create artifacts and receive formative assessment on how to improve their work; fostering of motivation through personal, one-to-one feedback on assessments; use of meaningful questions, related to goals and content, in quizzes or other activities; assessment activities designed for higher-order learning skills (e.g., analysis, application) in addition to lower-order thinking (e.g., recall and recognition) to challenge and engage learners at different skill levels; visible interaction with students on discussion boards by acknowledging good replies and providing feedback on answers that can be improved |

6. Conclusion

Traditionally, learner experience studies have focused principally on technological usability. More recently, heuristics have begun to consider pedagogical perspectives. However, considerations of the social dimension are largely absent from learner experience research. In this chapter, we have foregrounded the importance of all three dimensions (the social, the technological, and the pedagogical) and integrated them into an operable framework for conducting learner experience research: sociotechnical-pedagogical usability. We encourage future researchers to critique, apply, and extend the provisional framework and heuristics provided herein, with the hope that we, as a field, can move toward the development of a cohesive set of sociotechnical-pedagogical usability heuristics.

References

Albion, P. (1999). Heuristic evaluation of educational multimedia: From theory to practice. In J. Winn (Ed.), Proceedings ASCILITE 1999: 16th annual conference of the Australasian Society for Computers in Learning in Tertiary Education: Responding to diversity (pp. 9–15). Australasian Society for Computers in Learning in Tertiary Education.

Althobaiti, M. M., & Mayhew, P. (2016). Assessing the usability of learning management system: User experience study. In G. Vincenti, A. Bucciero, & C. Vaz de Carvalho (Eds.), E-learning, e-education, and online training (pp. 9–18). Springer International Publishing. https://doi.org/10/ggwf34

Cherns, A. B. (1976). The principles of sociotechnical design. Human Relations, 29(8), 783–792.

Cherns, A. B. (1987). Principles of sociotechnical design revisited. Human Relations, 40(3), 153–162.

Creswell, J. W., & Poth, C. N. (2016). Qualitative inquiry and research design: Choosing among five approaches (4th ed.). Sage Publications, Inc.

Dabbagh, N., Marra, R., & Howland, J. (2018). Meaningful online learning: Integrating strategies, activities, and learning technologies for effective designs (1st ed.). Routledge.

Earnshaw, Y., Tawfik, A., & Schmidt, M. (2018). User experience design. In R. E. West (Ed.), Foundations of learning and instructional design and technology (1st ed.). https://edtechbooks.org/-ENoi

El-Masri, M., & Tarhini, A. (2017). Factors affecting the adoption of e-learning systems in Qatar and USA: Extending the Unified Theory of Acceptance and Use of Technology 2 (UTAUT2). Educational Technology Research and Development, 65(3), 743–763. https://doi.org/10/gbgzrg

Gamage, D., Perera, I., & Fernando, S. (2020). MOOCs lack interactivity and collaborativeness: Evaluating MOOC platforms. International Journal of Engineering Pedagogy (IJEP), 10(2), 94–111. https://doi.org/10/ggwf3w

Gan, C. L., & Balakrishnan, V. (2016). An empirical study of factors affecting mobile wireless technology adoption for promoting interactive lectures in higher education. International Review of Research in Open and Distributed Learning, 17(1), 214–239. https://doi.org/10/gg2jgq

Gray, C. M., Parsons, P., & Toombs, A. L. (2020). Building a holistic design identity through integrated studio education. In B. Hokanson, G. Clinton, A. A. Tawfik, A. Grincewicz, & M. Schmidt (Eds.), Educational technology beyond content: A new focus for learning (1st ed., pp. 43–55). Springer.

Hackos, J., & Redish, J. (1998). User and task analysis for interface design (1st ed.). John Wiley & Sons, Inc.

Horila, M., Nokelainen, P., Syvänen, A., & Överlund, J. (2002). Criteria for the pedagogical usability, version 1.0. Häme Polytechnic and University of Tampere.

Jahnke, I. (2015). Digital didactical designs: Teaching and learning in CrossActionSpaces. Routledge.

Jahnke, I., Ritterskamp, C., & Herrmann, T. (2005, November). Sociotechnical roles for sociotechnical systems: A perspective from social and computer sciences. In Roles, an interdisciplinary perspective: Papers from the AAAI Fall symposium (pp. 68–75). AAAI Press.

Kaptelinin, V., & Nardi, B. (2018). Activity theory as a framework for human-technology interaction research. Mind, Culture, and Activity, 25(1), 3–5. https://doi.org/10/ggndhm

Kreijns, K., Kirschner, P. A., & Jochems, W. (2003). Identifying the pitfalls for social interaction in computer-supported collaborative learning environments: A review of the research. Computers in Human Behavior, 19(3), 335–353. https://doi.org/10/frnqwk

Lim, C. J., & Lee, S. (2007). Pedagogical usability checklist for ESL/EFL e-learning websites. Journal of Convergence Information Technology, 2(3), 67–76.

Marell-Olsson, E., & Jahnke, I. (2019). Wearable technology in a dentistry study program: Potential and challenges of smart glasses for learning at the workplace. In I. Buchem, R. Klamma, & F. Wild (Eds.), Perspectives on wearable enhanced learning (WELL) (pp. 433–451). Springer.

McKenney, S., & Reeves, T. (2018). Conducting educational design research (2nd ed.). Routledge.

Moore, J. L., Dickson-Deane, C., & Liu, M. Z. (2014). Designing CMS courses from a pedagogical usability perspective. Perspectives in Instructional Technology and Distance Education: Research on Course Management Systems in Higher Education, 143–169.

Mumford, E. (2000). A sociotechnical approach to systems design. Requirements Engineering, 5, 125–133.

Nielsen, J. (1994a). Enhancing the explanatory power of usability heuristics. CHI ’94: Proceedings of the SIGCHI conference on human factors in computing systems (pp. 152–158). ACM.

Nielsen, J. (1994b). Heuristic evaluation. In J. Nielsen & R. Mack (Eds.), Usability inspection methods (pp. 25–62). John Wiley & Sons.

Nielsen, J., & Loranger, H. (2006). Prioritizing web usability. Pearson Education.

Nokelainen, P. (2006). An empirical assessment of pedagogical usability criteria for digital learning material with elementary school students. Journal of Educational Technology & Society, 9(2), 178–197.

Orlikowski, W. (1991). The duality of technology: Rethinking the concept of technology in organizations. Organization Science, 3, 398–427.

Orlikowski, W. (1996). Improvising organizational transformation over time: A situated change perspective. Information Systems Research, 7(1), 63–92.

Parlangeli, O., Marchigiani, E., & Bagnara, S. (1999). Multimedia systems in distance education: Effects of usability on learning. Interacting with Computers, 12(1), 37–49. https://doi.org/10/bmc89f

Quinn, C. N. (1996). Pragmatic evaluation: Lessons from usability. 13th Annual Conference of the Australasian Society for Computers in Learning in Tertiary Education (pp. 437–444). ASCILITE.

Rappin, N., Guzdial, M., Realff, M., & Ludovice, P. (1997). Balancing usability and learning in an interface. CHI '97: Proceedings of the SIGCHI conference on human factors in computing systems (pp. 479–486). ACM. https://doi.org/10/cb3nk4

Reeves, T. C. (1994). Evaluating what really matters in computer-based education. Computer Education: New Perspectives, 219–246.

Schmidt, M. (2014). Designing for learning in a three-dimensional virtual learning environment: A design-based research approach. Journal of Special Education Technology, 29(4), 59–71.

Schmidt, M., Earnshaw, Y., Tawfik, A. A., & Jahnke, I. (2020a). Methods of user centered design and evaluation for learning designers. In M. Schmidt, A. A. Tawfik, I. Jahnke, & Y. Earnshaw (Eds.), Learner and user experience research: An introduction for the field of learning design & technology (1st ed.). https://edtechbooks.org/ux

Schmidt, M., Fisher, A. P., Sensenbaugh, J., Ling, B., Rietta, C., Babcock, L., Kurowski, B. G., & Wade, S. L. (2020b). User experience (re)design and evaluation of a self-guided, mobile health app for adolescents with mild traumatic brain injury. Journal of Formative Design in Learning, 1–14.

Schmidt, M., & Tawfik, A. A. (2018). Using analytics to transform a problem-based case library: An educational design research approach. Interdisciplinary Journal of Problem-Based Learning, 12(1). https://edtechbooks.org/-ZmaC

Silius, K., Tervakari, A. M., & Pohjolainen, S. (2003). A multidisciplinary tool for the evaluation of usability, pedagogical usability, accessibility and informational quality of web-based courses. The Eleventh International PEG Conference: Powerful ICT for Teaching and Learning (Vol. 28) (pp. 1–10). https://edtechbooks.org/-bkg

Squires, D., & Preece, J. (1996). Usability and learning: Evaluating the potential of educational software. Computers & Education, 27(1), 15–22.

Squires, D., & Preece, J. (1999). Predicting quality in educational software. Interacting with Computers, 11(5), 467–483.

Straub, E. T. (2017). Understanding technology adoption: Theory and future directions for informal learning. Review of Educational Research, 79(2), 625–649.

Trist, E. L., & Bamforth, K. W. (1951). Some social and psychological consequences of the Longwall method of coal-getting. Human Relations, 4, 3–38.

Tullis, T., & Albert, W. (2013). Measuring the user experience: Collecting, analyzing, and presenting usability metrics. Elsevier.

Acknowledgements

We are grateful to the instructors for sharing the course with us. We also thank the MU Extension department at the University of Missouri-Columbia, especially Dr. Jessica Gordon, for supporting this project. We would like to thank the participants and instructors for participating and sharing their experiences.