This chapter discusses the affordances of applying user experience (UX) methods to designing technology for understanding learners’ classroom experiences. The chapter highlights a student survey designed to support teachers in building equitable classrooms. The surveys, called “Student Electronic Exit Tickets,” are based on three constructs related to equity: coherence, relevance, and contribution. Survey responses are provided to teachers through a visual analytics dashboard intended to help them readily interpret their learners’ experiences. We explain how UX methods, such as think alouds and cognitive interviews, guided design choices in the dashboard that help teachers attend to equity concerns by disaggregating student data by gender and race. UX methods were also used to validate our argument that visual analytics can be a supportive agent for teachers, prompting them to notice and attend to inequity in their classes. We propose an agenda for future designers of educational technology that incorporates UX methods in the creation of tools that aim to make learning in classrooms more equitable.

1. User Experience Methods as a Vehicle for Producing Equitable Visual Analytics

Baker and Siemens (2014) argued that learning sciences as a field has been late in adopting analytics compared to other disciplines such as biology, which first published an analytics scientific journal in 1970. A variety of tools and methods have been employed in learning analytics (LA) for making predictions (e.g., classifiers, regressors, and latent knowledge estimators) and for structure discovery (e.g., clustering, factor analysis, social network analysis, and domain structure discovery). A reference model for learning analytics has been proposed that is based on four dimensions: data and environments (what), stakeholders (who), objectives (why), and methods (how). The LA literature can be mapped onto this reference model (Chatti et al., 2013).

Researchers from the LA community emphasized the importance of human-centered design (HCD) methods being incorporated in the field of LA and laid out an agenda for human-centered learning analytics (Buckingham Shum et al., 2019). Holstein and colleagues (2017) applied UX methods to the gathering and understanding of teachers' needs in ways that enable intelligent tutor dashboards to support them. First, the researchers conducted four types of design interviews (generative card sorting exercises, semi-structured interviews, directed storytelling, and speed dating) with ten middle school teachers. These interviews provided guidance on prototyping an augmented reality glasses application for monitoring student performance in the intelligent tutoring system in real time. They also provided insights into teachers’ perceptions of what they consider to be useful analytics (e.g., analytics currently generated by the intelligent tutoring system were not considered to be actionable). Similarly, Ahn and colleagues (2019) provided insights on the value of using HCD methods (contextual design, design tensions) with teachers for designing analytics dashboards providing data on student learning and supporting the improvement of teaching practice. To understand teachers’ perceptions related to visual analytics, they used methods including case study, participant observation, interviews, affinity mapping, and think-aloud. Dollinger and colleagues (2019) stressed the importance of involving stakeholders in the creation and design process of learning analytics tools. They conducted a case study on using and developing LA in several Australian universities spanning six years. Some of the advantages resulting from this co-creation process were (a) ongoing usage of the platform, (b) expanding the user base, and (c) incorporating modifications and additions from user feedback and suggestions.

Typically, teachers are provided with access to learning analytics through instructor dashboards, i.e., user interfaces that present automated analyses of student data, including visualizations and opportunities to “drill down” to more closely examine the underlying data. For instance, in a programming course, instructor dashboards providing real-time data analytics have been implemented to make predictions about the state of a learner based on their grade assignment by the teacher (Diana et al., 2017). Supervised machine learning algorithms along with human-graded scores were able to successfully predict the learners’ task state, enabling teachers to identify struggling learners. In another example from mathematics education, automated analysis of classroom video data has been used to provide feedback to teachers about the nature of their classroom discourse through an analytics tool called TalkMoves (Suresh et al., 2018). The TalkMoves tool uses deep learning models to detect well-defined discussion strategies called “talk moves” (Michaels et al., 2010) and generates visualizations that allow teachers to gain new insights into their instructional practices.

The various emotional states of learners during a video-based instructional session on foreign language development can be identified by analyzing audio, video, self-reporting, and interaction traces (Ez-Zaouia & Lavoué, 2017). Based on this research, a teacher-facing dashboard called EMODA was created that informs instructors about learners’ emotions during lessons so that they can quickly adapt their instructional strategies. The dashboard offers different visualizations that give teachers access to the learners’ emotions and prompt an alteration of instruction as appropriate. Hubbard et al. (2017) generated a novel intervention that measures learning with the use of virtual reality and EEG headsets. The intervention works by creating a closed-loop system between the virtual and EEG biological data to generate information about the user’s state of mind. Researchers have also studied other physiological measures (such as heart rate and facial expressions) and their relationship to learning (Di Mitri et al., 2017; Spaan et al., 2017; Xu & Woodruff, 2017). In summary, the field has started exploring and adopting human-centered or user experience design methods to better adapt learning analytics systems to support data-driven instruction. There is also a push to understand the relationship between a number of physiological and contextual measures centered around learning and teaching. Given the abundance of learner experience data that can potentially be made available, research involving UX or HCD methods should involve the different stakeholders that use data to make teaching and learning more equitable in classroom settings.

2. Understanding the Use of Data-Driven Decision Making in Schools

Using data to bring about change in instructional practice or to make more informed curricular decisions is not a new endeavor in most schools. The enactment of the No Child Left Behind Act (2001) and the American Recovery and Reinvestment Act (2009) increased pressure on schools to be accountable for their actions based on concrete data (Kennedy, 2011). Schools and classrooms can offer rich bodies of information on learning experiences, but developing and applying suitable technology for generating usable knowledge has been a challenge (Wayman et al., 2004). Effective software must be designed to scaffold the analytic process of interpreting learners’ data. Readily interpretable learner experience data can promote increased use of this type of assessment practice by teachers and motivate efforts to promote epistemic justice in the classroom (Penuel & Yarnall, 2005).

2.1. How Data-Driven Decision Making Supports Teachers and Schools

Researchers recommend involving teachers in data-driven decision making so that they become more knowledgeable about and attentive to the individual needs of their students (Schifter et al., 2014; Wayman, 2005). Wayman and Stringfield (2006) highlighted three avenues to support teachers’ use of data systems: professional development, leadership for a supportive data climate, and opportunities for data collaboration. Mandinach and colleagues (2006) proposed an approach to decrease the mismanagement of data as it moves from the district to individual schools. They defined a conceptual framework for managing data and generating informed data-driven decisions based on six cognitive skills: collect, organize, analyze, summarize, synthesize, and prioritize. Data stakeholders such as teachers, principals, and district leaders systematically work their way through these skills in order to mine, make sense of, and generate recommendations from the data. Ysseldyke and McLeod (2007) emphasized the importance of using technology to monitor the responses of students as part of a continuous feedback loop. They note that a variety of data tools (such as Plato Learning, McGraw-Hill Digital Learning, and Pearson Prosper) provide in-depth information to teachers, principals, and school psychologists that might lead to improved instruction.

Wayman and Stringfield (2006) stressed the importance of including the entire school faculty in the use of student data to improve instruction. The researchers conducted a study in three schools where they involved all faculty members in the process of utilizing student data and observed changes such as increased teacher efficiency, better response to students’ needs, support in analyzing current teaching practices, and an increase in collaboration. Van Geel and colleagues (2016) carried out an intervention based on data-driven decision making in 53 primary schools to explore the impact on students’ mathematics achievement. Based on two years of longitudinal data, they reported an increase in student achievement, particularly within lower socioeconomic status schools. Staman and colleagues (2014) created a professional development course designed to help teachers learn to carry out data-driven decision making activities. The course included a data-driven decision making cycle that consisted of four phases: evaluation of results through performance feedback, diagnosing the cause of underperformance, process monitoring, and designing and executing a plan for action. Results from their study indicated that the professional development course had a significant and positive impact on the school staff’s skills and knowledge.

Schildkamp and colleagues (2012) investigated a discrepancy between data from local school-based assessments and national assessments and found that the largest discrepancies were related to students’ gender and ethnicity. This study raises questions about the data analysis capabilities of the instructors who were not able to figure out why these discrepancies arose or take any subsequent action. Schildkamp and Kuiper (2010) argued that to continuously improve schools’ capacity to provide better instruction to students, teachers need to become capable of engaging in data analysis. To that end, training should be provided to teachers and school leaders, and collaborative data analysis teams should be established that include all stakeholders.

To promote more inclusive instruction, Roy and colleagues (2013) developed a differentiated instruction scale that focuses on serving the needs of all types of learners in the classroom. By considering data generated from this scale, teachers can test the effectiveness of different instructional styles and monitor the academic progress of their students. In another study, Roegman and colleagues (2018) documented school principals’ thinking about accountability and the use of data to inform instruction. The researchers found that principals mainly considered data exploration and analysis as a tool to help schools and students do well on standardized assessments, and their primary interest was in various types of student assessment data. The researchers argued that preparation programs can play an effective role in helping principals to consider and utilize data in a more varied and enriched manner.

2.1.1. Emerging Trends Related to Teachers’ Involvement in Data Practices

This literature review highlights several emerging trends on how to engage teachers in data practices so they can improve their instruction and focus on understanding and meeting the needs of all students:

- Teachers need professional development on how to conduct data analyses and to ensure the collected data is meaningful for motivating improvement in the classroom.

- Teachers in the same school should be supported to collaborate and share data, provide support and guidance to each other as they try out new pedagogical practices in their classrooms, and understand and accommodate individual differences in student learning.

- Involving the entire faculty can help with analyzing significant amounts of data and designing new strategies to improve the process.

- Well-designed technology can play a positive role in helping teachers to understand instructional trends and patterns over time.

- Principals need support to become more knowledgeable about the different types of student data, and the broader range of uses beyond accountability.

2.2. Supporting Student Involvement in Data Sharing Practices

There is a growing emphasis in the research literature on the benefits of sharing student data with students themselves in order to create a shared understanding of the classroom experience and to collaboratively set learning goals based on those experiences (Hamilton et al., 2009; Kennedy & Datnow, 2011). Research has examined the impact and effectiveness of such data sharing, particularly with respect to its impact on student motivation. Marsh and colleagues (2016) conducted a study with six low performing high schools and found that engaging students with data can lead to a “mastery orientation” which increases student motivation, in contrast with a “performance orientation” that can be demotivating for most students. This study also highlights how a school, district structure, and leadership activities involved in promoting (or deemphasizing) data practices can affect students’ motivation and interest.

Jimerson and colleagues (2016) focused on the effects of including students in making use of data and setting learning goals for themselves, which they termed “students involved data use” (SIDU). In this qualitative study with 11 teachers in 5 school districts, the results were mixed from the teachers’ perspective. Some teachers believed that their students benefited from the SIDU approach, but there were some challenges, including managing time effectively, establishing a uniform vocabulary, and motivating the students. The authors suggested that additional research is needed to explore how teachers can introduce, implement and sustain this practice in an effective way. In another study, Jimerson and colleagues (2019) found that teachers perceived incorporating students in the analysis process as valuable, particularly as a way to improve their classroom practice by adjusting their instruction based on individual student needs. The teachers also felt that this practice was empowering their students and helped them to “own” their data. The authors concluded that involving students in data use can positively contribute to career-long improvement cycles for teachers, but it can also have unintended negative consequences that may affect students’ attitudes and motivation and may infringe on students’ privacy.

Apart from taking part in data practices, some high school students have been involved in shaping social justice policies, demonstrating that students can play an important role in school improvement (Welton et al., 2017). Researchers have emphasized the benefits of incorporating student voices in school decision making and policy implementation (Levin, 2000). By including students as agents of change, meaningful guidelines for their engagement can be elaborated, such as allowing students to participate in formal management processes, offering students training and support, and encouraging students to take part in discussions about proposed and resulting changes.

3. Student Electronic Exit Tickets (SEET): A Visual Analytics Tool Supporting Equitable Instruction at the Classroom Level

Understanding learner experience through a variety of quantifying methods can help in promoting equitable instruction for diverse classrooms (Penuel & Watkins, 2019; Rousseau & Tate, 2003; Shah & Lewis, 2019; Valiandes, 2015). Taking a student-centered approach and gathering reliable information about learners’ experiences in the classroom can shed light on the learning community and processes from the students’ perspective (Horan et al., 2010; Paris & Alim, 2017; Penuel & Watkins, 2019; Steinberg et al., 1996; Tinto, 1997). In a self-report survey, students identified the knowledge gained from their peers while sharing out ideas during lessons as the most important aspect of their learning process, noting that such peer-to-peer interactions make the content more personal to them (Holley & Steiner, 2005).

Designing assessments that address issues related to equity and epistemic justice in the classroom is critical to understanding learners' experiences (Penuel & Watkins, 2019). Collecting and engaging with these data can guide teachers to better align their instruction and curricular materials with learners’ interests and experiences (González et al., 2001), particularly when it is evident that experiences differ depending on the student, task, and teacher (Penuel et al., 2016). Data designed to uncover students’ perceptions direct attention towards noticing and understanding situations in which learners’ experiences differ based on their race and gender and, in turn, how these differences impact overall classroom culture (Langer-Osuna & Nasir, 2016).

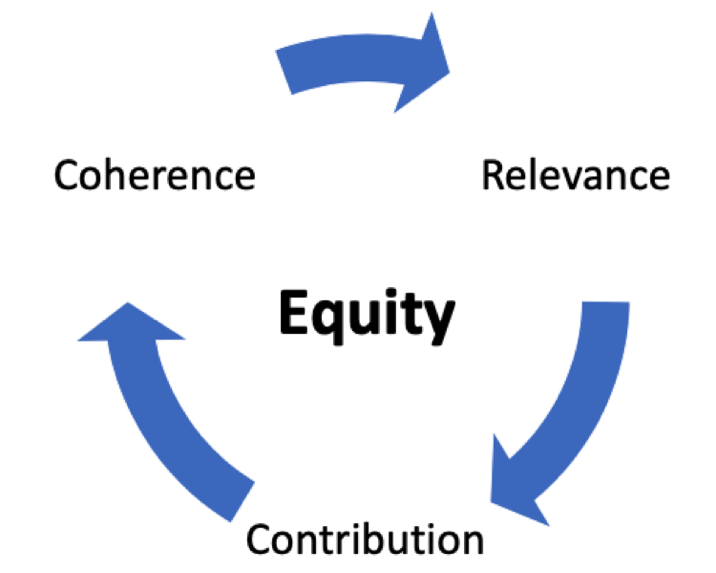

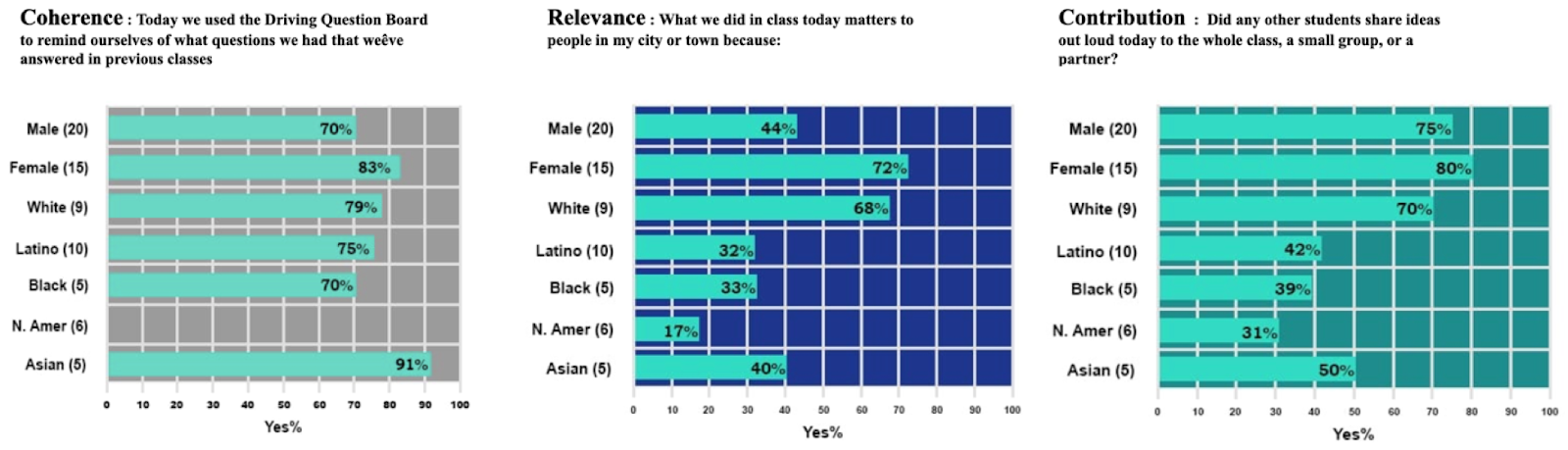

We argue that supporting equity involves understanding learners’ experiences based on three constructs: coherence, relevance, and contribution (Penuel et al., 2018). To this end, we have created an assessment premised on these constructs called the Student Electronic Exit Ticket (SEET). SEET data provide targeted information about learners’ experiences within a particular academic unit and classroom. Each construct comprises a unique set of questions. SEET questions related to coherence ask students whether they understand how current classroom activities contribute to the purpose of the larger investigations in which they are engaged. Coherent learning experiences appear connected from the students’ perspective, where the progression of learning experiences is driven by student questions, ideas, and investigations (Reiser et al., 2017). Questions related to relevance ask students to consider the degree to which lessons matter to the students themselves, to the class, and to the larger community (Penuel et al., 2018). For contribution, SEET questions ask students whether they shared their ideas in a group discussion, heard ideas shared by others, and whether others’ ideas impacted their thinking. The aim of using the SEET assessment in the classroom is not to judge teachers or identify students’ understanding of disciplinary content. Rather, as shown in Figure 1, the assessment is intended to help create an environment for improving teacher instruction and diminishing classroom inequity. Gathering and studying data based on students’ perceptions of coherence, relevance, and contribution can prompt teachers to minimize inequality and promote student agency.

Figure 1

Framework for Supporting Equity in Classrooms Using Three Constructs: Coherence, Relevance, and Contribution

In this section, we describe the design of the SEETs as a visual analytics tool. Teachers first gather student data using SEETs toward the end of a lesson or curricular unit. They then view patterns within and across their classrooms, looking at student data disaggregated by gender and race. Teachers can also visually track their students’ experience over time and examine the degree to which changes occurred.
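To make the idea of disaggregation concrete, the following minimal sketch groups survey responses by construct and by a demographic field and reports the mean score for each group. It is an illustration only and does not describe the SEET implementation: the record fields, the 1-5 agreement scale, the group labels, and the sample values are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical exit-ticket responses: one record per student answer.
# Field names, group labels, and the 1-5 scale are assumptions for illustration.
responses = [
    {"gender": "female", "race": "Group A", "construct": "coherence", "score": 2},
    {"gender": "female", "race": "Group B", "construct": "coherence", "score": 3},
    {"gender": "male", "race": "Group A", "construct": "coherence", "score": 4},
    {"gender": "male", "race": "Group B", "construct": "coherence", "score": 5},
    {"gender": "female", "race": "Group A", "construct": "relevance", "score": 4},
    {"gender": "male", "race": "Group B", "construct": "relevance", "score": 2},
    {"gender": "female", "race": "Group B", "construct": "contribution", "score": 3},
    {"gender": "male", "race": "Group A", "construct": "contribution", "score": 3},
]


def disaggregate(records, group_field):
    """Return {construct: {group: mean score}} for one demographic field."""
    buckets = defaultdict(lambda: defaultdict(list))
    for record in records:
        buckets[record["construct"]][record[group_field]].append(record["score"])
    return {
        construct: {group: round(mean(scores), 2) for group, scores in groups.items()}
        for construct, groups in buckets.items()
    }


by_gender = disaggregate(responses, "gender")
by_race = disaggregate(responses, "race")

for construct, groups in by_gender.items():
    print(construct, groups)
```

In this made-up sample, the coherence means differ noticeably between the two gender groups, which is the kind of pattern a disaggregated display is meant to surface for a teacher.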

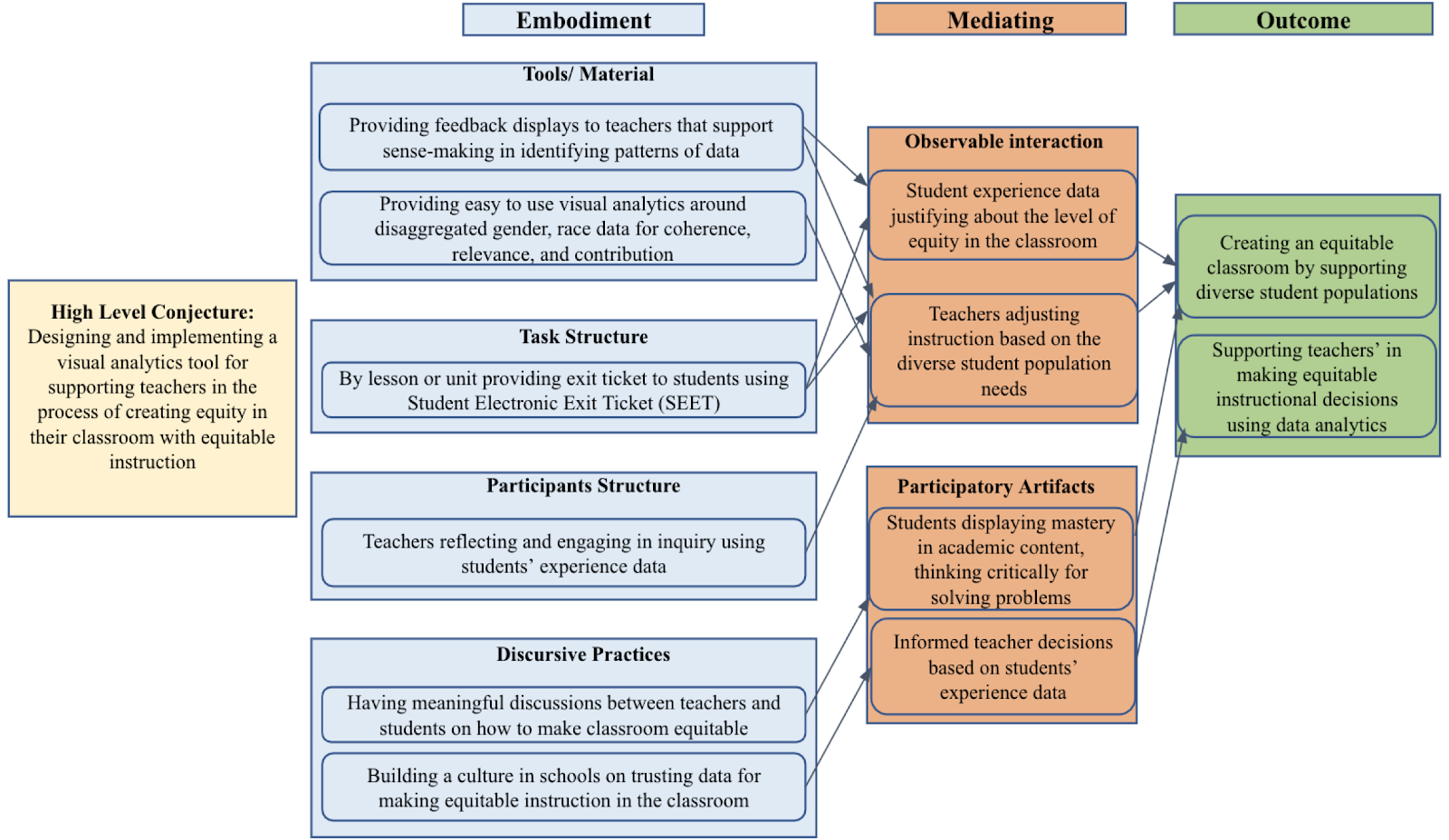

The conjecture map in Figure 2 illustrates our ideas about how visual analytics tools can support more equitable classrooms. In design-based research, a conjecture map is used as “a means of specifying theoretically salient features of a learning environment design and mapping out how they are predicted to work together to produce desired outcomes” (Sandoval, 2014, p. 19). By designing tools to meaningfully display student experience data, we conjecture that teachers can adjust their instructional decisions and actions to create an equitable environment.

Figure 2

Conjecture Map for Designing the System and Achieving Desired Outcomes

We present two use cases to illustrate how applying user experience methods impacted the tool’s design and development process to meet our objective of promoting equity from the lens of teachers. A use case is a methodology used by software development teams to guide their processes. The methodology involves determining the central requirements that will lead to the proposed solution or software components, drawing on advice and feedback from stakeholders or other relevant actors (Cockburn, 2000; Jacobson, 1993). Based on a research practice partnership between the University of Colorado Boulder and a large urban school district in Denver, Colorado, we describe two use cases that utilize different user experience methods in an effort to gather feedback on the design of the visual analytics tool addressing equity.

3.1. Use case 1: Using the think-aloud method

The think-aloud method is a way of understanding the cognitive processes of the participants when a stimulus is introduced during decision making (Kuusela & Pallab, 2000). The central goal of this method is to gather participants’ verbal reasoning as they search for information, evaluate their options, and make the best choice. We selected this user experience method for gathering feedback on the visual displays of the SEET data by focusing on the sense-making of the teachers. Our research team drafted thirty possible data visualizations based on representative student experience data in order to determine which visualizations were most useful to middle school teachers. We used these visualizations as a stimulus for gathering information related to the design of the dashboard. We also attended to the teachers’ data and visual literacy skills as they sought to make sense of the visualizations. With the growing emphasis on using data for decision making in schools and for improving instruction (Marsh et al., 2006; Marsh et al., 2016; Stecker et al., 2008), analytics tools need to be designed so that they match teachers’ data and visual literacy skills and are not subject to biased or ambiguous interpretations (Szafir, 2018).

All teachers who were included in the design process taught science at the middle school level and used a problem-based approach to teaching. Think-aloud and cognitive interviews were conducted simultaneously in two iterations (iteration 1: five science teachers; iteration 2: two science teachers) with seven randomly selected teachers (four males, three females). In our think-aloud studies, we showed the teachers different visualizations based on analytics from sample classroom data addressing students’ perceptions of coherence, relevance, and contribution. Teachers were asked to verbalize their thoughts as they sought to interpret these visualizations and answer questions posed by the research team about the data.

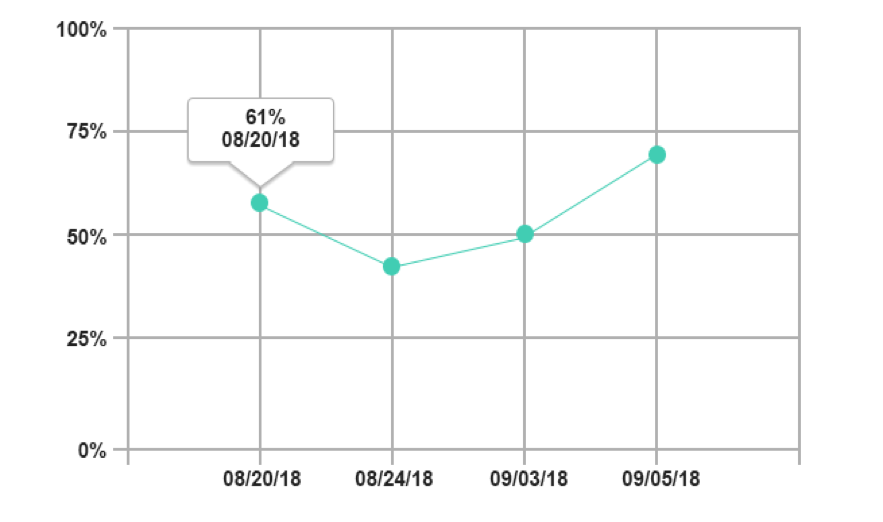

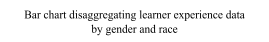

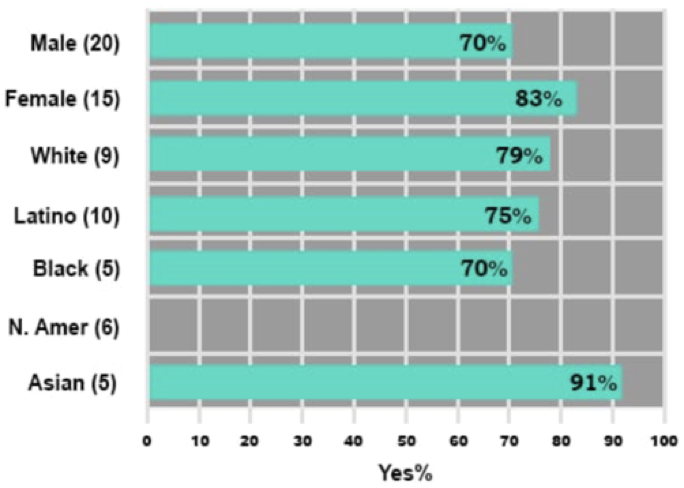

During iteration 1, the first three teachers selected two visualizations (horizontal stacked bar and connected scatterplot) that they felt best supported them in making sense of the data. Based on the feedback of the last two teachers in iteration 1, we switched to different visualizations; those two teachers nominated the following visualizations as the most useful: heat map, bubble chart, and line chart. In iteration 2, with two new science teachers, we included the preferred visualizations from the first iteration along with some new visualizations for the teachers to consider. The two teachers selected three visualizations as the most conducive to sense-making: horizontal bar chart, heat map, and connected scatterplot. Based on teachers’ suggestions, along with our observations of teachers’ visual and data literacy skills, we selected a horizontal bar chart, a heat map, and a connected scatterplot as the most appropriate visualizations of disaggregated learner experience data (see Figure 3). Most of the teachers indicated that these data displays were consistent with their abilities and expectations in interpreting data and provided them with an easy and unbiased understanding of the learners’ experiences.
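As a rough illustration of what a heat map of disaggregated data encodes, the sketch below bins group means into a small number of shade levels and lays them out as rows (constructs) by columns (student groups). It is a plain-text stand-in rather than the dashboard's rendering: the mean values, the 1-5 scale, the five shade characters, and the function names are assumptions made for this example.

```python
# Hypothetical mean scores (assumed 1-5 scale) per construct and student group.
means = {
    "coherence": {"female": 2.5, "male": 4.5},
    "relevance": {"female": 4.0, "male": 2.0},
    "contribution": {"female": 3.0, "male": 3.0},
}

# Five shade levels, from lowest to highest mean score.
SHADES = ".:+*#"


def shade(score, low=1.0, high=5.0):
    """Map a score in [low, high] to one of the shade characters."""
    fraction = (score - low) / (high - low)
    index = min(int(fraction * len(SHADES)), len(SHADES) - 1)
    return SHADES[index]


def heat_map_rows(table, groups):
    """Return one (construct, [shade per group]) row for each construct."""
    return [
        (construct, [shade(by_group[group]) for group in groups])
        for construct, by_group in table.items()
    ]


groups = ["female", "male"]
rows = heat_map_rows(means, groups)

# Print a small text heat map with one column per group.
print(f"{'':14}" + " ".join(f"{g:>6}" for g in groups))
for construct, cells in rows:
    print(f"{construct:14}" + " ".join(f"{c:>6}" for c in cells))
```

Binning continuous means into a few discrete shades trades precision for quick comparison across cells, which is the property the teachers valued when scanning for differences between groups.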

Figure 3

Selected Visualizations From Teachers Providing Feedback of Learner Experience

Teachers also provided design recommendations to make the visualizations more readily interpretable and to support a comparison of results within and across constructs. An example of such a recommendation is shown in Figure 4. In this case, teachers suggested using the same background color for questions belonging to a similar construct, to make it easier to view patterns within that construct.

Figure 4

Design Recommendation for Highlighting Questions From Same Construct

3.2. Use case 2: Using Cognitive Interview

After the think-aloud, teachers then participated in cognitive interviews (Fisher & Geiselman, 1992). Cognitive interviews allow for an in-depth analysis of the validity of verbal reports of the respondents’ thought process (Blair & Presser, 1993; Conrad & Blair, 1996). Our interviews focused on the target constructs and how visualizations can reveal equity concerns in classrooms. Using this UX method enabled us to gather justifications for the selection of particular visual displays and also provided an initial understanding of how teachers attend to equity using visual analytics. We adopted the grounded theory approach for the analysis of the verbal scripts from think aloud and cognitive interviews (Charmaz, 2014). A recurring pattern that emerged during the cognitive interviews was related to teachers’ thinking about how visualizations can promote new understandings and discussions around equity or inequity. There is general agreement that equity or inequity in the classroom can be created through the prevailing classroom discursive practices (Herbel-Eisenmann, 2011; Moschkovich, 2012). During the cognitive interviews, many teachers ideated on having discussions with their students based on the visualizations to further support equity and enhance the learners' classroom experience. As Joan[1] reflected during her interview when asked how these visualizations would help her understand the needs in her classroom:

I think it would definitely give me some empathy. Especially here, in coherence for females. I would definitely drive my instruction to help target this group. Clearly if this was my class with my data, I did not do my job enriching these female students. So, that would really cause me to go back and reflect and hopefully make some changes. This piece of data would allow me to just directly ask them: What did you need that I didn't give you? (Cognitive interview with Joan, November 2018)

Cognitive interviews helped us, as designers of the SEETs, validate our view of visual analytics as having the potential to serve as a scaffolding agent for teachers to consider inequity in their classrooms. For example, in her interview Maggie described what she took away from the bar chart visualization:

The instructional need would probably be making this culturally relevant to all students in the classroom, making sure that all students do find a connection to it, whatever they're learning. Because it's clear that all students aren't finding that connection from the lesson to what's important to them or their community, only some students are, and it's solely dependent on cultural background or ethnicity. (Cognitive interview with Maggie, December 2018)

The cognitive interviews enabled our research team to gather evidence on how cognizant teachers are about fostering equity in their classrooms. As part of our research practice partnership, the participating teachers were expected to play a significant role in the design and development process of various curricular and instructional products and to weigh in on the validity of assumptions made by the researchers. In this case, the teachers played a significant role in helping the research team to understand how the visualizations will be used and in improving the interpretability of the visualizations.

4. Conclusion

Learning experience design is an interdisciplinary and evolving field (Ahn, 2019). Prior research has identified many of the benefits and challenges of user-centered design when creating new technology (Abras et al., 2004; Greenberg & Buxton, 2008; Norman, 2005). Research within the learning sciences has a rich history of building on these ideals to promote learner-centered design (Soloway et al., 1994). Including teachers and learners in the design process of systems meant to impact teaching and learning can provide an in-depth understanding of their expectations and help to identify motivations and barriers to adoption and use. This chapter illustrated how user experience design methods can be applied to the design of learning analytics systems where designers must grapple with multiple interwoven concerns, such as what data should be collected, how it can be displayed to support appropriate interpretations, and how visualizations can be crafted to promote useful feedback and instructional change. Ultimately, our goal is to create systems that support educators to thoughtfully reflect on their classroom culture, by providing useful data on their students’ felt experiences and highlighting the critical role teachers play in shaping equitable classroom learning experiences.

References

Abras, C., Maloney-Krichmar, D., & Preece, J. (2004). User-centered design. In W. Bainbridge (Ed.), Berkshire encyclopedia of human-computer interaction, volume 2. (pp. 764-768). Berkshire Publishing Group.

Ahn, J. (2019). Drawing inspiration for learning experience design (LX) from diverse perspectives. The Emerging Learning Design Journal, 6(1). https://edtechbooks.org/-khkB

American Recovery and Reinvestment Act of 2009, Pub. L. No. 111-5, 123 Stat. 115 (2009). https://edtechbooks.org/-smKz

Baker, R., & Siemens, G. (2014). Educational data mining and learning analytics. In R. K. Sawyer (Ed.), Cambridge handbook of the learning sciences (2nd ed., pp. 253-274). Cambridge University Press.

Blair, J., & Presser, S. (1993). Survey procedures for conducting cognitive interviews to pretest questionnaires: A review of theory and practice. In D. A. Marker & W. A. Fuller (Eds.), Proceedings of the survey research methods section, American Statistical Association (pp. 370-375). American Statistical Association. https://edtechbooks.org/-Ucgt

Buckingham Shum, S., Ferguson, R., & Martinez-Maldonado, R. (2019). Human-centred learning analytics. Journal of Learning Analytics, 6(2), 1-9.

Charmaz, K. (2014). Constructing grounded theory (2nd ed.). SAGE Publications Ltd.

Chatti, M. A., Dyckhoff, A. L., Schroeder, U., & Thüs, H. (2013). A reference model for learning analytics. International Journal of Technology Enhanced Learning, 4(5-6), 318-331.

Cockburn, A. (2000). Writing effective use cases. Addison-Wesley Professional.

Conrad, F., & Blair, J. (1996). From impressions to data: Increasing the objectivity of cognitive interviews. In N. C. Tucker (Ed.), Proceedings of survey research methods section, American Statistical Association (pp. 1-9). American Statistical Association. https://edtechbooks.org/-Erq

Diana, N., Eagle, M., Stamper, J., Grover, S., Bienkowski, M., & Basu, S. (2017). An instructor dashboard for real-time analytics in interactive programming assignments. LAK '17 conference proceedings: The seventh international learning analytics & knowledge conference (pp. 272-279). ACM.

Di Mitri, D., Scheffel, M., Drachsler, H., Börner, D., Ternier, S., & Specht, M. (2017). Learning pulse: A machine learning approach for predicting performance in self-regulated learning using multimodal data. In A. Wise, P. H. Winne, G. Lynch, X. Ochoa, I. Molenaar, S. Dawson, & M. Hatala (Eds.), LAK '17 conference proceedings: The seventh international learning analytics & knowledge conference (pp. 188-197). ACM.

Dollinger, M., Liu, D., Arthars, N., & Lodge, J. (2019). Working together in learning analytics towards the co-creation of value. Journal of Learning Analytics, 6(2), 10-26.

Ez-Zaouia, M., & Lavoué, E. (2017, March). EMODA: A tutor oriented multimodal and contextual emotional dashboard. In A. Wise, P. H. Winne, G. Lynch, X. Ochoa, I. Molenaar, S. Dawson, & M. Hatala (Eds.), LAK '17 conference proceedings: The seventh international learning analytics & knowledge conference (pp. 429-438). ACM.

Fisher, R. P., & Geiselman, R. E. (1992). Memory enhancing techniques for investigative interviewing: The cognitive interview. Charles C Thomas Publisher.

González, N., Andrade, R., Civil, M., & Moll, L. (2001). Bridging funds of distributed knowledge: Creating zones of practices in mathematics. Journal of Education for Students Placed at Risk, 6(1-2), 115-132.

Greenberg, S., & Buxton, B. (2008). Usability evaluation considered harmful (some of the time). CHI '08: CHI conference on human factors in computing systems (pp. 111-120). ACM.

Hamilton, L., Halverson, R., Jackson, S. S., Mandinach, E., Supovitz, J. A., Wayman, J. C., Pickens, C., Martin, E. S., & Steele, J. L. (2009). Using student achievement data to support instructional decision making. Institute of Education Sciences. https://edtechbooks.org/-FkFS

Herbel-Eisenmann, B., Choppin, J., Wagner, D., & Pimm, D. (Eds.). (2011). Equity in discourse for mathematics education: Theories, practices, and policies (Vol. 55). Springer Science & Business Media.

Holley, L. C., & Steiner, S. (2005). Safe space: Student perspectives on classroom environment. Journal of Social Work Education, 41(1), 49-64.

Holstein, K., McLaren, B. M., & Aleven, V. (2017, March). Intelligent tutors as teachers' aides: Exploring teacher needs for real-time analytics in blended classrooms. LAK '17: Proceedings of the seventh international learning analytics & knowledge conference (pp. 257-266). ACM.

Horan, S. M., Chory, R. M., & Goodboy, A. K. (2010). Understanding students' classroom justice experiences and responses. Communication Education, 59(4), 453-474.

Hubbard, R., Sipolins, A., & Zhou, L. (2017). Enhancing learning through virtual reality and neurofeedback: A first step. LAK '17: Proceedings of the seventh international learning analytics & knowledge conference (pp. 398-403). ACM.

Jacobson, I. (1993). Object-oriented software engineering: A use case driven approach. Pearson Education India.

Jimerson, J. B., Cho, V., Scroggins, K. A., Balial, R., & Robinson, R. R. (2019). How and why teachers engage students with data. Educational Studies, 45(6), 677-691.

Jimerson, J. B., Cho, V., & Wayman, J. C. (2016). Student-involved data use: Teacher practices and considerations for professional learning. Teaching and Teacher Education, 60, 413-424.

Kennedy, B. L., & Datnow, A. (2011). Student involvement and data-driven decision making: Developing a new typology. Youth & Society, 43(4), 1246-1271.

Kennedy, M. M. (2011). Data use by teachers: Productive improvement or panacea? Working Paper #19. Education Policy Center at Michigan State University.

Kuusela, H., & Pallab, P. (2000). A comparison of concurrent and retrospective verbal protocol analysis. The American Journal of Psychology, 113(3), 387-404.

Langer-Osuna, J. M., & Nasir, N. I. S. (2016). Rehumanizing the “Other”: Race, culture, and identity in education research. Review of Research in Education, 40(1), 723-743.

Levin, B. (2000). Putting students at the centre in education reform. Journal of Educational Change, 1(2), 155-172.

Mandinach, E. B., Honey, M., & Light, D. (2006, April 9). A theoretical framework for data-driven decision making [Paper presentation]. Annual meeting of AERA, San Francisco, CA, United States.

Marsh, J. A., Farrell, C. C., & Bertrand, M. (2016). Trickle-down accountability: How middle school teachers engage students in data use. Educational Policy, 30(2), 243-280.

Marsh, J. A., Pane, J. F., & Hamilton, L. S. (2006). Making sense of data-driven decision making in education: Evidence from recent RAND research. RAND Corporation. https://edtechbooks.org/-pzzs

Michaels, S., O’Connor, C., Hall, M. W., & Resnick, L. B. (2010). Accountable talk sourcebook: For classroom conversation that works. University of Pittsburgh Institute for Learning. https://edtechbooks.org/-qmVi

Moschkovich, J. N. (2012). How equity concerns lead to attention to mathematical discourse. In B. Herbel-Eisenmann, J. Choppin, D. Wagner, & D. Pimm (Eds.), Equity in discourse for mathematics education (pp. 89-105). Springer.

No Child Left Behind Act of 2002, Pub. L. No. 107-110, 115 Stat. 1425 (2002). https://edtechbooks.org/-mPKq

Norman, D. A. (2005). Human-centered design considered harmful. Interactions, 12(4), 14-19.

Paris, D., & Alim, H. S. (Eds.). (2017). Culturally sustaining pedagogies: Teaching and learning for justice in a changing world. Teachers College Press.

Penuel, W. R., Van Horne, K., Jacobs, J., & Turner, M. (2018). Developing a validity argument for practical measures of student experience in project-based science classrooms [Paper presentation]. Annual Meeting of the American Educational Research Association, New York, NY, United States.

Penuel, W. R., Van Horne, K., Severance, S., Quigley, D., & Sumner, T. (2016). Students’ responses to curricular activities as indicator of coherence in project-based science. International Society of the Learning Sciences.

Penuel, W. R., & Watkins, D. A. (2019). Assessment to promote equity and epistemic justice: A use-case of a research-practice partnership in science education. The ANNALS of the American Academy of Political and Social Science, 683(1), 201-216.

Penuel, W. R., & Yarnall, L. (2005). Designing handheld software to support classroom assessment: An analysis of conditions for teacher adoption. Journal of Technology, Learning, and Assessment, 3(5).

Reiser, B. J., Novak, M., & McGill, T. A. (2017). Coherence from the students’ perspective: Why the vision of the framework for K-12 science requires more than simply “combining” three dimensions of science learning. Board on Science Education. https://edtechbooks.org/-qvoj

Roegman, R., Perkins-Williams, R., Maeda, Y., & Greenan, K. A. (2018). Developing data leadership: Contextual influences on administrators’ data use. Journal of Research on Leadership Education, 13(4), 348-374.

Rousseau, C., & Tate, W. F. (2003). No time like the present: Reflecting on equity in school mathematics. Theory into Practice, 42(3), 210-216.

Roy, A., Guay, F., & Valois, P. (2013). Teaching to address diverse learning needs: Development and validation of a differentiated instruction scale. International Journal of Inclusive Education, 17(11), 1186-1204.

Sandoval, W. (2014). Conjecture mapping: An approach to systematic educational design research. Journal of the Learning Sciences, 23(1), 18-36.

Schifter, C., Natarajan, U., Ketelhut, D. J., & Kirchgessner, A. (2014). Data-driven decision-making: Facilitating teacher use of student data to inform classroom instruction. Contemporary Issues in Technology and Teacher Education, 14(4), 419-432.

Schildkamp, K., & Kuiper, W. (2010). Data-informed curriculum reform: Which data, what purposes, and promoting and hindering factors. Teaching and Teacher Education, 26(3), 482-496.

Schildkamp, K., Rekers-Mombarg, L. T., & Harms, T. J. (2012). Student group differences in examination results and utilization for policy and school development. School Effectiveness and School Improvement, 23(2), 229-255.

Shah, N., & Lewis, C. M. (2019). Amplifying and attenuating inequity in collaborative learning: Toward an analytical framework. Cognition and Instruction, 37(2), 1-30.

Soloway, E., Guzdial, M., & Hay, K. (1994). Learner-centered design: The challenge for HCI in the 21st century. Interactions, 1(2), 36-48.

Staman, L., Visscher, A. J., & Luyten, H. (2014). The effects of professional development on the attitudes, knowledge and skills for data-driven decision making. Studies in Educational Evaluation, 42, 79-90.

Stecker, P. M., Lembke, E. S., & Foegen, A. (2008). Using progress-monitoring data to improve instructional decision making. Preventing School Failure: Alternative Education for Children and Youth, 52(2), 48-58.

Steinberg, L. D., Brown, B. B., & Dornbusch, S. M. (1996). Beyond the classroom: Why school reform has failed and what parents need to do. Gifted and Talented International, 11(2), 89-90.

Suresh, A., Sumner, T., Huang, I., Jacobs, J., Foland, B., & Ward, W. (2018). Using deep learning to automatically detect talk moves in teachers' mathematics lessons. 2018 IEEE International Conference on Big Data (Big Data) (pp. 5445-5447). IEEE.

Szafir, D. A. (2018). The good, the bad, and the biased: Five ways visualizations can mislead (and how to fix them). Interactions, 25(4), 26-33.

Tinto, V. (1997). Classrooms as communities: Exploring the educational character of student persistence. The Journal of Higher Education, 68(6), 599-623.

Valiandes, S. (2015). Evaluating the impact of differentiated instruction on literacy and reading in mixed ability classrooms: Quality and equity dimensions of education effectiveness. Studies in Educational Evaluation, 45, 17-26.

Van Geel, M., Keuning, T., Visscher, A. J., & Fox, J. P. (2016). Assessing the effects of a school-wide data-based decision-making intervention on student achievement growth in primary schools. American Educational Research Journal, 53(2), 360-394.

Wayman, J. C. (2005). Involving teachers in data-driven decision making: Using computer data systems to support teacher inquiry and reflection. Journal of Education for Students Placed at Risk, 10(3), 295-308.

Wayman, J. C., & Stringfield, S. (2006). Technology-supported involvement of entire faculties in examination of student data for instructional improvement. American Journal of Education, 112(4), 549-571.

Wayman, J. C., Stringfield, S., & Yakimowski, M. (2004). Software enabling school improvement through analysis of student data (Report No. 67). Center for Research on the Education of Students Placed at Risk (CRESPAR), Johns Hopkins University. https://edtechbooks.org/-VAo

Welton, A. D., Harris, T. O., Altamirano, K., & Williams, T. (2017). The politics of student voice: Conceptualizing a model for critical analysis. In M. Young & S. Diem (Eds.), Critical approaches to education policy analysis (pp. 83-109). Springer.

Xu, Z., & Woodruff, E. (2017). Person-centered approach to explore learner's emotionality in learning within a 3D narrative game. LAK '17: Proceedings of the seventh international learning analytics & knowledge conference (pp. 439-443). ACM.

Ysseldyke, J. E., & McLeod, S. (2007). Using technology tools to monitor response to intervention. In S. R. Jimerson, M. K. Burns, & A. M. VanDerHeyden (Eds.), Handbook of response to intervention (pp. 396-407). Springer.

Acknowledgements

We thank all the teachers who participated in this work, and we acknowledge the role of the reviewers in shaping it. This material is based upon work supported by the Spencer Foundation.

[1] All teacher names are pseudonyms.