This chapter introduces the use of Q methodology to evaluate instruction in the context of higher education. As an important component of instructional design, evaluation is essential to ensure the quality of a program or curriculum. Different data collection methods and analysis tools are needed to evaluate educational interventions (Frechtling, 2010; Saunders, 2011). Instructional design practitioners are required to be familiar with a range of quantitative and qualitative analytic methods to perform a variety of evaluations in different contexts. Q methodology is a unique mixed method that utilizes both quantitative and qualitative techniques to examine people’s subjective viewpoints (Brown, 1993). We propose that Q methodology should be included in the evaluator’s toolbox. We attempt to provide instructional design practitioners with some practical guidelines to apply Q methodology to evaluation based on a systematic overview of evaluation and Q methodology. Suggestions and limitations of using Q methodology for higher education evaluation are also discussed.

Definition and Benefits of Evaluation

Instructional design is an integrative and iterative process that involves analysis, design, development, implementation, and evaluation (ADDIE; Branch, 2009). As an integral element of the ADDIE paradigm, formative and summative evaluation function as quality control for instructional design projects. With a focus on future performance improvement, Guerra-López (2008) describes evaluation as the comparison between objectives and actual results in order to “produce action plans for improving the programs and solutions being evaluated so that expected performance is achieved or maintained and organizational objectives and contributions can be realized” (p. 6). Evaluation not only benefits performance improvement but also “provides information for communicating to a variety of stakeholders.… It also gives managers the data they need to report ‘up the line’, to inform senior decision-makers about the outcomes of their investments” (Frechtling, 2010, p. 4). In higher education settings, evaluation is critical for enhancing course design, refining curriculum, promoting student learning experiences, and ensuring program success (Brewer-Deluce et al., 2020). Evaluation also helps create faculty development opportunities to advance instructional design quality across courses and programs.

Q Methodology in Instructional Design

Q methodology (Q) is a unique approach to studying the target audience’s subjective perspectives on a certain topic (Brown, 1993). A concourse of statements representing all dimensions of the topic at issue is established first, and then participants are directed to sort a series of statements sampled from the concourse (usually 30 to 50) into a normally distributed grid (Watts & Stenner, 2012). The sorts are then statistically analyzed to identify both divergent and convergent viewpoints among participants. Surveys and interviews are necessary to facilitate interpretation and member-checking. Q has been widely applied in various disciplines such as public healthcare, marketing, and political science and has gained increasing attention in education (Rieber, 2020). However, Q has not become a popular methodology in educational studies (Rodl, Cruz, & Knollman, 2020). Rieber (2020) introduced Q to the field of instructional design and demonstrated how Q could be used for formative evaluation, needs assessment, and learner analysis. He maintained that Q was a powerful alternative to traditional approaches (such as surveys, interviews, and focus groups) for better understanding learners' needs and perspectives and for informing instructional design decision-making.

A Review of the Use of Q for Evaluation

In higher education, the limitations of traditional evaluation methods have become apparent, and a growing number of scholars have recognized the potential of Q (Brewer-Deluce et al., 2020; Collins & Angelova, 2015; Ramlo, 2015a, 2015b). Brewer-Deluce et al. (2020) argued that conventional Likert-scale surveys fail to capture the diverse viewpoints of students, and that qualitative data from open-ended questions or interviews are limited, fragmented, and difficult to analyze consistently. These traditional evaluation methods usually produce “minimal useful, actionable suggestions for course improvement” whereas Q can be helpful in “identifying areas for course strength and improvement that are aligned with student needs in an evidence-based way” (Brewer-Deluce et al., 2020, p. 146). Therefore, Q presents “a viable solution to ongoing course evaluation limitations” (Brewer-Deluce et al., 2020, p. 147).

Harris et al. (2019) incorporated Q into the framework of realist evaluation, a framework focused on determining “what works for whom, why and in what circumstances within programmes” (p. 432). They argued that Q and realist evaluation are compatible for three reasons: First, both Q and realist evaluation utilize mixed methods; second, context is key for both Q (where it lies in the development of the concourse) and realist evaluation; third, both adopt the logic of abduction in the process of interpretation to make sense of the data collected. Harris et al.’s (2019) work provides theoretical support for the use of Q for evaluation.

In evaluative practice in higher education, some scholars have successfully experimented with using Q in course evaluation, teacher evaluation, and program evaluation. For instance, Brewer-Deluce et al. (2020) stated that their well-established undergraduate anatomy course did not benefit much from regular course evaluations because they constantly received positive survey results and unhelpful responses to open-ended questions. Therefore, they employed Q in addition to the regular evaluation methods to gain more valuable insights. From the Q case study, Brewer-Deluce et al. (2020) discovered that students had different preferences for different components of the course. Students also made suggestions for course improvement based on these results. Collins and Angelova (2015) carried out a Q evaluation of the 35 learning activities in a graduate-level course for Teaching English to Speakers of Other Languages (TESOL) methods. Results showed three distinctive perspectives, i.e., better learning through group activities, a preference for independent work, and better learning from online activities. These perspectives are not as likely to be discovered from Likert surveys. Similarly, Ramlo (2015a) used Q to evaluate the effectiveness of her flipped learning intervention in a college physics course.

Besides these single-course evaluations, Jurczyk and Ramlo (2004) conducted a series of Q evaluations on several undergraduate Chemistry and Physics courses across multiple semesters using the same Q sort tool. This Q sort tool consisted of statements about the overall course structure, lecture quality, lab quality, lecture instructor, and lab instructor. Although their evaluations only involved limited numbers of participants in some classes, Jurczyk and Ramlo (2004) asserted the potential of standardized Q evaluation tools for cross-course evaluations (Brewer-Deluce et al., 2020).

Ramlo and Newman (2010) introduced Q to program evaluation with an example of evaluating an inquiry-based informatics course. They claimed that the results of Q could create more meaningful and useful stakeholder profiles for program effectiveness based on personal values, opinions, attitudes, and needs rather than demographic factors. Using the same approach, Ramlo (2015b) conducted a program evaluation study for a Construction Engineering Technology program with both faculty and students included as participants.

As Ramlo (2012) pointed out, Q can be a versatile tool for multiple purposes in higher education such as needs assessment and program evaluation when the viewpoints of faculty and/or students are important. However, the existing literature shows that Q has been used for evaluation mostly by researchers rather than instructional design practitioners. Therefore, the purpose of this chapter is to introduce Q to practitioners for evaluative practices in higher education contexts.

A Practical Guide on How to Use Q for Evaluation

All methods have their strengths, applicable contexts, and weaknesses. We propose that Q can serve as a supplement to current evaluation methodologies (Rieber, 2020) because (1) Q helps reveal nuances of participants’ viewpoints that might be ignored by traditional survey methods, and (2) the hands-on Q sorting activity is usually found to be engaging for participants and thus can generate more authentic results. Additionally, Q does not require a large number of participants as it does not aim to generalize results to a larger population (Brown, 1993).

Effective evaluation using Q requires practitioners to create a series of systematic evaluation statements, develop open-ended questions that align with the evaluation purposes, administer the Q-sort activity, analyze data, and interpret results. In the following section, we provide practitioners with a step-by-step guide on how to use Q for evaluation. Based on theories and practices of evaluation (Oliver, 2000) and procedures of Q (Rieber, 2020), a Q evaluation sequence normally contains the following five steps:

1. Identify stakeholders and evaluation objectives

2. Create concourse and draw sample statements

3. Construct and administer the Q-sort activity

4. Factor analysis and interpretations

5. Report and communicate results

Step 1: Identify stakeholders and evaluation objectives.

An evaluation framework can guide evaluation practices. The initial step is to create an evaluation framework, which requires practitioners to identify related stakeholders and evaluation objectives. Before creating evaluation protocols and measurement indicators, instructional designers need to identify the purpose of the evaluation. The evaluation objectives can be identified through a needs assessment involving all relevant stakeholders for the program. A needs assessment is an essential part of program evaluation (Spector & Yuen, 2016; Stefaniak et al., 2018; Tessmer et al., 1999) and can provide vital information for the development of evaluation objectives. Instructional designers can ask relevant stakeholders a series of needs assessment questions to identify where the needs are and how the evaluation can help address them. For instance, in a typical course evaluation, the stakeholders might be the students, faculty, teaching assistants, lab staff, and so on. The evaluation objectives can be aimed at investigating the effectiveness of the overall course, the instructional approaches, or a specific learning activity, depending on the contexts and purposes.

Step 2: Create concourse and draw sample statements.

A well-established concourse is the foundation for effective Q evaluation. This step requires practitioners to investigate all relevant contextual factors, concerns, or issues influencing the program (Harris et al., 2019) and develop a concourse based on systematic analysis. To evaluate the effectiveness of a group project in a course, for example, the concourse might include statements related to the learning task, instructor support, group member commitment, communication and collaboration, and quality of work. There are multiple ways to generate statements for the concourse. Evaluators can create statements based on existing literature and research, personal experiences and observations, or a combination of both (McKeown & Thomas, 2013). Although not required, it is recommended to refer to relevant literature to get inspiration from validated statements in evidence-based empirical studies or theoretical articles. This is sometimes helpful for the interpretation of results as well. More practically, the development of a concourse can make use of the results of the needs assessment mentioned in the first step, that is, survey responses or quotes from interviews. After the concourse is created, a series of statements should be sampled to be used for the Q-sort activity in the next step. A well-rounded concourse can have hundreds of statements, with the sampled statements (i.e., the Q set) ranging from 30 to 50 statements (Stephenson, 1985).
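As an illustration of drawing a Q set from a concourse, the following minimal sketch samples the same number of statements from each theme of the group-project example. Sampling evenly by theme is an assumption made here for illustration, not a requirement stated in the literature cited above; the function name `draw_q_set`, the placeholder statements, and the choice of seven statements per theme are all hypothetical.

```python
import random


def draw_q_set(concourse, per_theme, seed=0):
    """Draw `per_theme` statements at random from every theme in the concourse.

    `concourse` maps a theme name to a list of statements. Equal sampling per
    theme is an illustrative assumption, not a rule of Q methodology.
    """
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced
    q_set = []
    for theme, statements in sorted(concourse.items()):
        if len(statements) < per_theme:
            raise ValueError(f"theme {theme!r} has fewer than {per_theme} statements")
        q_set.extend((theme, s) for s in rng.sample(statements, per_theme))
    rng.shuffle(q_set)  # mix themes so cards are not presented theme by theme
    return q_set


# Hypothetical concourse: the five themes named in the text, 20 placeholder statements each.
themes = [
    "learning task",
    "instructor support",
    "group member commitment",
    "communication and collaboration",
    "quality of work",
]
concourse = {t: [f"{t}: statement {i}" for i in range(1, 21)] for t in themes}

q_set = draw_q_set(concourse, per_theme=7)
print(len(q_set))  # 35, which falls within the 30 to 50 range
```

In practice the statements would come from the literature, observations, and needs assessment responses, and evaluators may prefer to hand-pick statements within each theme rather than draw them at random.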

Step 3: Construct and administer the Q-sort activity.

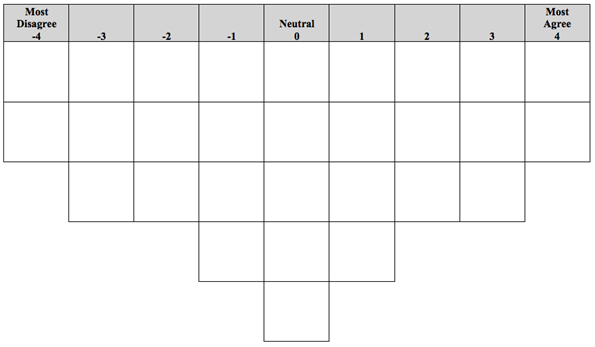

When the Q set is ready, evaluators need to decide the shape of the Q-sort grid. The Q-sort grid is an inverted normal distribution shape that ranges from most disagree to most agree (Watts & Stenner, 2012). Figure 1 shows a Q-sort grid for a Q set of 29 statements. There is normally no required shape for a Q-sort grid, so evaluators choose the shape at their discretion based on participants’ familiarity with the evaluation topic. When participants have less expertise on the topic, the bell curve tends to be designed to be flatter (Watts & Stenner, 2012).

Figure 1

An Exemplar Q-sort Grid for 29 Statements

A picture of a Q-sort Grid

To guide the sorting process, evaluators pose a major guiding question for sorters. Using the group project evaluation as an example again, the guiding question might be: What do you think of the group project in our course? The actual Q-sort process first requires participants to sort all statements into three general categories (disagree, neutral, and agree). After this, participants place the statements into the Q-sort grid based on the three categories, and then move statements around if changes are needed. After sorting, participants are often asked to fill out a follow-up survey to explain why they sorted certain statements (e.g., those in the extreme columns) the way they did. This step helps evaluators gain a deeper understanding of the sorters’ viewpoints. Depending on the objectives of the evaluation, sorters can also be invited to participate in post-sorting focus groups or individual interviews.
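The forced distribution of the grid can be expressed as a simple data structure, which also makes it possible to check that a completed sort fills every slot. The sketch below is illustrative only: the column counts in `GRID` are a hypothetical shape that sums to 29 statements and are not taken from Figure 1, and the function names are assumptions.

```python
from collections import Counter

# Hypothetical grid for 29 statements: column score -> number of slots.
# -4 is "most disagree" and +4 is "most agree".
GRID = {-4: 1, -3: 2, -2: 4, -1: 5, 0: 5, 1: 5, 2: 4, 3: 2, 4: 1}


def validate_sort(sort, grid=GRID):
    """Return a list of problems in a completed sort (empty list if valid).

    `sort` maps a statement id to the column score where it was placed.
    """
    problems = []
    total_slots = sum(grid.values())
    if len(sort) != total_slots:
        problems.append(f"expected {total_slots} statements, got {len(sort)}")
    used = Counter(sort.values())
    for column in sorted(set(grid) | set(used)):
        if used[column] != grid.get(column, 0):
            problems.append(
                f"column {column}: {used[column]} placed, {grid.get(column, 0)} slots"
            )
    return problems


def fill_in_order(grid):
    """Build a valid example sort by filling columns left to right."""
    sort, statement_id = {}, 1
    for column in sorted(grid):
        for _ in range(grid[column]):
            sort[statement_id] = column
            statement_id += 1
    return sort


complete_sort = fill_in_order(GRID)
print(validate_sort(complete_sort))  # []

# Moving one statement from the "most disagree" column to the middle breaks two columns.
broken_sort = dict(complete_sort)
broken_sort[1] = 0
print(validate_sort(broken_sort))
```

A flatter grid for participants with less expertise could be represented in the same way by using fewer columns with more slots in each.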

Traditionally, the Q-sort is administered with paper cards of statements in face-to-face settings. Many researchers still prefer this means of administration when possible. More recently, however, online Q distribution tools such as QSortware, HTMLQ, and Lloyd’s Q Sort Tool have been used for convenient administration and data collection.

Step 4: Factor analysis and interpretations.

After the Q-sort data are collected, the investigator can conduct corresponding factor analysis and make meaningful interpretations for evaluation purposes. PQMethod has been widely used by researchers as a data analysis tool. More information about Q-sort administration and analysis tools can be found on the Q Methodology official website (https://edtechbooks.org/-YRGh).

KenQ is recommended as a powerful tool that handles the tedious statistics through a user-friendly interface. In KenQ, evaluators upload the sorting data and then, with just a few clicks, work through the major steps of factor analysis: correlation matrix, factor extraction, factor rotation, and factor display. At the end of the factor analysis, evaluators can download the results as a .csv file. They can also save the visualization of the idealized Q-sort for each factor (participants who share similar viewpoints are grouped as a factor). An idealized Q-sort is considered to represent the perspective of an average person in that factor. By interpreting the viewpoints of all factors that emerge from the factor analysis, evaluators can identify successes and problems of a course or program.
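To make the first of these steps more concrete, the sketch below computes a by-person correlation matrix, in which participants (rather than statements) are correlated with one another. It is a minimal illustration under stated assumptions and does not reproduce how KenQ or PQMethod is implemented: the four participants and their seven-statement sorts are invented data, and factor extraction and rotation are left to the dedicated tools.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equally long lists of grid scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def person_correlations(sorts):
    """Correlate every participant's sort with every other participant's sort.

    `sorts` maps a participant label to the list of scores given to
    statements 1..n, always in the same statement order.
    """
    people = list(sorts)
    return {(p, q): pearson(sorts[p], sorts[q]) for p in people for q in people}


# Invented data: four participants sorting seven statements on a -2..+2 grid.
sorts = {
    "P1": [-2, -1, -1, 0, 1, 1, 2],
    "P2": [-2, -1, 0, -1, 1, 2, 1],
    "P3": [1, -1, -1, 2, -2, 0, 1],
    "P4": [1, -1, -2, 2, -1, 0, 1],
}

corr = person_correlations(sorts)
print(round(corr[("P1", "P2")], 2))  # 0.83: P1 and P2 sorted similarly
print(round(corr[("P3", "P4")], 2))  # 0.92: P3 and P4 sorted similarly
print(round(corr[("P1", "P3")], 2))  # 0.0: P1 and P3 hold unrelated views
```

In this invented example, the high correlations within the pairs P1/P2 and P3/P4 are the kind of pattern that factor extraction would summarize as two factors, that is, two shared viewpoints.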

Step 5: Report and communicate the results.

After the Q-sort analysis, a written report communicates the evaluation activity and results. The written report normally contains both qualitative analysis results (participants’ responses to the open-ended questions and interviews) and quantitative analysis results (factor analysis of the Q-sorts). The target audience for the evaluation report includes decision-makers, key stakeholders, and policymakers. The report should contain all relevant information, including the evaluation objective, the evaluation protocol, major findings, and an action plan with recommended solution strategies.

Limitations and Implications

Despite its advantages, Q also has clear limitations. As Brewer-Deluce et al. (2020) mention, this approach can be time-consuming. It also presents challenges in Q-sort administration and requires some level of data analysis expertise. There will be a learning curve for those who are new to Q. Another limitation of Q is the issue of generalizability (Brewer-Deluce et al., 2020). Although it is possible to create standardized Q-sorts to apply in different contexts, factors emerging from one evaluation are not expected to generalize to other contexts or populations. This suggests that evaluators need to adapt and leverage different resources to create specific Q-sorts appropriate for their evaluation projects and contexts.

However, online distribution tools and data analysis tools are becoming increasingly available to help evaluators conduct Q analysis easily. Using Brown’s (1993) car analogy, learning to use Q methodology functionally is like learning to drive a car (not to make a car from scratch). It does not require knowledge of all the mechanics; however, it requires practice to become proficient. Once mastered, Q methodology will be a useful tool in an evaluator’s toolbox.

Conclusion

This chapter discussed the use of Q as an alternative to conventional evaluation methods in higher education settings. Based on a systematic review of evaluation and Q, we proposed a series of practical guidelines for instructional design practitioners to conduct evaluation projects using Q. We also briefly discussed the benefits and limitations of Q. Context is central to the practices of evaluation (Vo & Christie, 2015); no single evaluation methodology can fit all contexts. It is the evaluator’s responsibility to decide what tool(s) to use for a specific evaluation project. More empirical studies using Q for evaluation purposes are needed to demonstrate the usefulness of Q. For specific examples that illustrate how to follow these guidelines, readers should refer to relevant research by Susan Ramlo (e.g., Ramlo & Newman, 2010; Ramlo, 2012, 2015a, 2015b), who has conducted multiple evaluation and assessment studies using Q, and to Watts and Stenner’s (2012) book, which provides a comprehensive description of Q theory, Q research, and Q data analysis and interpretation.

References

Branch, R. M. (2009). Instructional design: The ADDIE approach (Vol. 722). Springer Science & Business Media.

Brewer-Deluce, D., Sharma, B., Akhtar-Danesh, N., Jackson, T., & Wainman, B. C. (2020). Beyond average information: How Q‐methodology enhances course evaluations in anatomy. Anatomical Sciences Education, 13(2), 137-148. https://edtechbooks.org/-XPo

Brown, S. R. (1993). A primer on Q methodology. Operant Subjectivity, 16, 91-138. DOI:10.15133/j.os.1993.002.

Collins, L., & Angelova, M. (2015). What helps TESOL Methods students learn: Using Q methodology to investigate students' views of a graduate TESOL Methods class. International Journal of Teaching and Learning in Higher Education, 27(2), 247-260. https://edtechbooks.org/-AeCN

Frechtling, J. (2010). The 2010 user-friendly handbook for project evaluation. Arlington, VA: National Science Foundation. https://edtechbooks.org/-iuqQ

Guerra-López, I. J. (2008). Performance evaluation: Proven approaches for improving program and organizational performance (Vol. 21). John Wiley & Sons.

Hamilton, J., & Feldman, J. (2014). Planning a program evaluation: Matching methodology to program status. In Spector, J. M., Merrill, M. D., Elen, J., & Bishop, M. J. (Eds.), Handbook of research on educational communications and technology (pp. 249-256). Springer.

Harris, K., Henderson, S., & Wink, B. (2019). Mobilising Q methodology within a realist evaluation: Lessons from an empirical study. Evaluation, 25(4), 430-448.

Jurczyk, J., & Ramlo, S. E. (2004, September). A new approach to performing course evaluations: Using Q methodology to better understand student attitudes [Paper presentation]. International Q Methodology Conference, Athens, GA. https://edtechbooks.org/-QPAR

McKeown, B., & Thomas, D. B. (2013). Q methodology (Vol. 66). Sage Publications.

Oliver, M. (2000). An introduction to the evaluation of learning technology. Journal of Educational Technology & Society, 3(4), 20-30.

Ramlo, S. E., & Newman, I. (2010). Classifying individuals using Q methodology and Q factor analysis: Applications of two mixed methodologies for program evaluation. Journal of Research in Education, 21(2), 20-31. https://edtechbooks.org/-yUbJ

Ramlo, S. E. (2012). Determining faculty and student views: Applications of Q methodology in higher education. Journal of Research in Education, 22(1), 86-107. https://edtechbooks.org/-FAYN

Ramlo, S. E. (2015a). Student views about a flipped physics course: A tool for program evaluation and improvement. Research in the Schools, 22(1), 44–59. DOI: 10.31349/RevMexFisE.18.131

Ramlo, S. E. (2015b). Q Methodology as a tool for program assessment. Mid-Western Educational Researcher, 27(3), 207-223. https://edtechbooks.org/-cxdj

Rieber, L. P. (2020). Q methodology in learning, design, and technology: An introduction. Educational Technology Research and Development, 68(5), 2529-2549. https://edtechbooks.org/-HpHL

Rodl, J. E., Cruz, R. A., & Knollman, G. A. (2020). Applying Q methodology to teacher evaluation research. Studies in Educational Evaluation, 65, 100844. https://edtechbooks.org/-bvCq

Saunders, M. (2011). Capturing effects of interventions, policies and programmes in the European context: A social practice perspective. Evaluation, 17(1), 89-102. https://edtechbooks.org/-uVn

Spector, J. M., & Yuen, A. H. (2016). Educational technology program and project evaluation. Routledge.

Stefaniak, J., Baaki, J., Hoard, B., & Stapleton, L. (2018). The influence of perceived constraints during needs assessment on design conjecture. Journal of Computing in Higher Education, 30(1), 55-71. https://edtechbooks.org/-QzBe

Stefaniak, J. E. (2020). Needs assessment for learning and performance: Theory, process, and practice. Routledge.

Stephenson, W. (1985). Q-Methodology and English literature. In C. R. Cooper (Ed.), Researching response to literature and the teaching of literature: Points of departure (pp. 233–250). Ablex.

Tessmer, M., McCann, D., & Ludvigsen, M. (1999). Reassessing training programs: A model for identifying training excesses and deficiencies. Educational Technology Research and Development, 47(2), 86-99. https://edtechbooks.org/-qwt

Vo, A. T., & Christie, C. A. (2015). Advancing research on evaluation through the study of context. New Directions for Evaluation, 2015(148), 43-55. https://edtechbooks.org/-ECuZ

Watts, S., & Stenner, P. (2012). Doing Q methodological research: Theory, method, and interpretation. SAGE.