"Check out this study!" Your colleague excitedly smiles as she drops a dog-eared journal on your desk.

"This conclusively shows that learning styles don't exist! Finally, we can stop talking about them!"

Interested, you thumb to the marked article and begin reading.

The study is rigorous. Working from the proposed definitions of learning styles, the researchers had students self-report their preferred styles (visual, aural, kinesthetic). They then assigned each student homework materials that taught the lesson's content in that student's preferred style, tested all students' comprehension with a standardized test, and compared the results to those of a control group.

Analyzing the results, the researchers found no performance differences between the groups: though students believed they learned in a particular way, how the information was presented to them made no difference. The researchers concluded that though we might think we learn in a particular style, teaching to a preferred style has no impact on learning.

You close the journal and look back up at your colleague. What is your reaction?

Do you agree? "Yes, learning styles are definitely bunk." Maybe you jump onto Twitter and blast it to your like-minded colleagues. "Finally! Learning styles can die!"

Or do you disagree? "This doesn't completely disprove learning styles. I still think we do learn in different ways." Maybe you dig into the article further, nitpicking the researchers' methods and trying to find holes in their reasoning. "Maybe the homework didn't actually align with the students' styles. Or maybe people have difficulty determining their own styles. Or maybe the researchers have a conflict of interest in disproving learning styles." You grasp for whatever rationale you can. Learning styles are true, and it doesn't matter what this study says.

Whether you agreed or disagreed with your colleague in this fictional scenario, the most important question to ask is "why?" Why did you agree or disagree? Did you make your decision about learning styles based on your own experiences, on research evidence, on your own biases (e.g., aesthetic preference), or on what the common belief of your community happened to be?

Key Terms

- Abduction

- A logical inference of a conclusion (or special case of inductive reasoning) that likely follows (but doesn't necessarily follow) from the provided premises and provides a reasonable explanation of the mechanism by which the conclusion follows.

- Confirmation Bias

- A tendency to interpret new evidence as supporting or confirming existing theories and paradigms (often ignoring contradictory evidence or negative cases).

- Consensus Fallacy

- A logical fallacy in which it is assumed that something is true because a majority of people believe it.

- Degenerative Science

- A research programme that either does not make novel predictions or makes novel predictions that are systematically proven wrong (cf. Lakatos).

- Ontology

- The branch of knowledge that deals with being and reality.

- Scientific Progressivism

- The belief that applying the scientific method has historically yielded positive, fairly consistent improvement in human understanding and knowledge.

- Theory

- An explanation of the 'how' or 'why' of particular phenomena, typically referencing existing facts and laws.

I use this example because it is always polarizing among education professionals, and if you believe in learning styles, you are fairly unlikely to change your stance even after being presented with compelling contradictory evidence (Newton & Miah, 2017). Education researchers generally treat learning styles as a myth, because studies like the one described above have failed to support the theory again and again (e.g., Husmann & O'Loughlin, 2019; Kraemer, Rosenberg, & Thompson-Schill, 2009; Macdonald, Germine, Anderson, Christodoulou, & McGrath, 2017; Willingham, Hughes, & Dobolyi, 2015), and the most authoritative reviews of the evidence conclude that "there is no adequate evidence base to justify incorporating learning-styles assessments into general educational practice" (Pashler, McDaniel, Rohrer, & Bjork, 2008, p. 105) and that learning styles instruments "should not be used in education" (Coffield, Moseley, Hall, & Ecclestone, 2004, p. 118). Practitioners such as teachers, on the other hand, generally believe in learning styles and even build lessons and entire courses around serving the needs of specific learners as defined by their preferred styles. Teacher educators, caught in between, are more of a mixed bag: some side with the research, and others side with the received wisdom of classroom teachers.

This type of scenario unfolds daily and is not limited to learning styles. Nor is it merely a theory-practice divide, because many fields have competing theories that researchers gravitate toward, bolster, and defend, much like political ideologies and parties. This happens because we all sometimes struggle to know (a) when to believe a theory, (b) how far to apply a theory, and (c) how to deal with evidence that conflicts with our accepted theories.

I previously introduced the concept of a theory as a narrative, story, or model that researchers use expansively to explain what they observe and experience. Theories are necessary because they allow us to make sense of a messy, irrational, chaotic world. As such, they serve as a kind of interpreter for our brains, or a lens for seeing the world in understandable ways, signaling to us what to pay attention to and what to ignore, what to emphasize and what to deemphasize.

Our brains need ways to organize information, make meaning, and guide judgments. Consider a painting. A digital camera can copy a painting into a two-dimensional series of colored pixels, but being able to represent the painting digitally is not the same as being able to interpret it (to identify what is in the painting). Similarly, our bodies and brains can experience phenomena, but making any kind of sense of these phenomena requires something to compare them to or some organizing structure (or schema) to place them in. Today, an artificial intelligence can take a series of colored pixels created by a digital camera and identify faces, because it can compare them against massive databases of images that humans have labeled as faces. In this way, the artificial intelligence uses a schema that allows it to make meaning of what it is seeing by comparing it to what it has seen before and by applying categories or methods of analysis provided to it. Without a schema, the digital representation of the painting would only ever be a series of colors. With a schema of what a face is, though, humans and artificial intelligence alike can begin to see faces in the colors, making meaning of the meaningless.

Theories provide these schemas. They give us meaningful structures and outlines to attach ideas, observations, and experiences to. Without them, we would just have decontextualized and meaningless bits of information, such as an infinite number of pixel colors, but with them, we can organize information in meaningful ways. This understanding is similar to constructivist learning theories, which pushed back against behaviorist and tabula rasa views of learning to recognize that any new learning will be dependent upon what we have already learned, influenced by our histories, beliefs, and attitudes toward what we are learning.

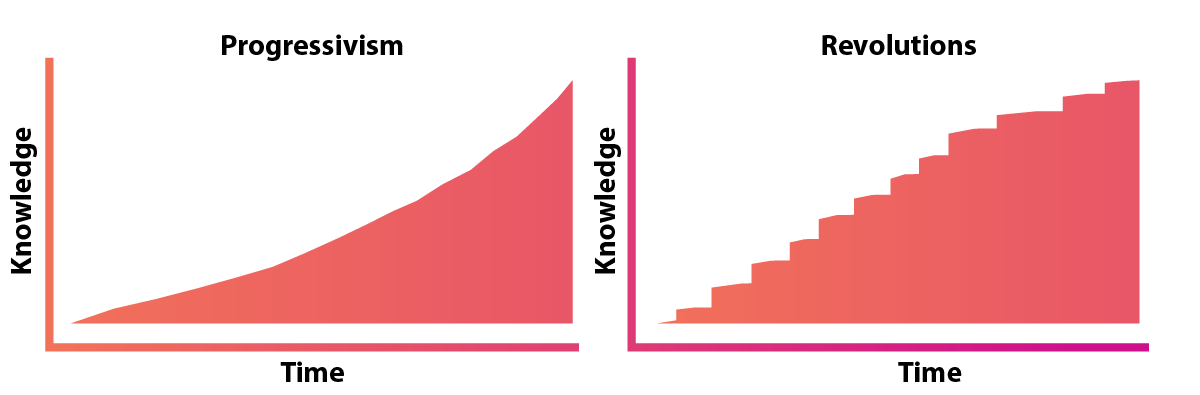

In addition, schemas and theories are not static but are expected to adapt, evolve, and grow to accommodate new information and new types of informational relationships to better and more fully understand the world. Science generally operates on this assumption of scientific progressivism, wherein we believe that through observation we can come to progressively know more and more about the world around us, and by applying the scientific method, we will gradually make our theories and schemas grow and expand, ostensibly until they encompass all truth.

As researchers apply the scientific method, theories can be tested to see how well they perform, and through subsequent testing, theories can evolve to be more accurate or useful. Thus, scientists typically "view theories as provisional" and as progressing through failure, wherein "the best theory we have now" is expected to "fail in some way and that a superior theory will eventually be proposed" (Willingham, 2012, p. 86). In this view, scientific progress is steady and linear, with subsequent discoveries building upon previous discoveries, ever moving upward and onward to more accurate understandings of the world. "Good science is cumulative; … [and] science is always supposed to move forward" (Willingham, 2012, p. 95).

Theory Building and Revolutionary Crisis Cycles

However, the actual history of theory building in science, and the concomitant adoption and rejection of theories, paints a messier picture than scientific progressivism generally acknowledges.

For starters, new, important, earth-shattering theories are often created by accident or in bolt-out-of-the-blue moments of inspired abduction that come to scientists, often in mundane settings, while they are grappling with scientific problems. The story goes that Archimedes hit upon the principle of water displacement while taking a bath and noticing how much water his body displaced, at which he shouted "Eureka!" About 1,900 years later, according to a similarly popular (and likely embellished) story, Newton had his initial idea for the theory of gravity after watching an apple fall from a tree. In 1669, Hennig Brand accidentally discovered phosphorus, the first element isolated by a known individual, while boiling down tubs full of urine (in an attempt to create gold), thereby paving the way for the discrediting of transmutation alchemy (and his own research program). And Einstein's theories of relativity evolved from thought experiments he had about how light would bend and be perceived if rooms and flashlights were accelerated.

In all of these cases, laws and theories developed out of abductive reasoning, imagination, and chance rather than pure empirical observation or rational proof. In more recent years, researchers have tried to make theory building more systematic (such as Grounded Theory, cf. Glaser & Strauss, 2017), but the history of science is largely not a history of one observation rationally stacking onto another. Rather, it is a history of changes in understanding that came in unexpected, irrational leaps and jarring revolutions, not in calculated, rational steps.

Recognizing this, Thomas Kuhn (1996) famously argued that the history of science is best understood as a series of scientific revolutions between dominant paradigms or theories. (I will explore paradigms more deeply in subsequent chapters, but for simplicity, I will equate paradigm with theory here.) In progressivist views, science increases knowledge incrementally over time (cf. Fig. 1). In the revolutions view, knowledge may build slightly over time as scientists operate within current theories, but such progress is slow and necessarily comes to an end once the theory reaches its explanatory limit. At that point, a revolution in theory is required to move knowledge building forward.

Under this view, most of the day-to-day work of scientists operates firmly under the assumptions of preexisting, dominant theories, which Kuhn refers to as "normal science." But such work always has a ceiling it cannot pass, because the dominant theory prevents scientists from learning anything beyond what the theory already allows for. At that point, for progress to continue, a radically different theory must be proposed.

Fig. 1. A depiction of scientific knowledge-building over time through the lens of progressivism vs. revolutions

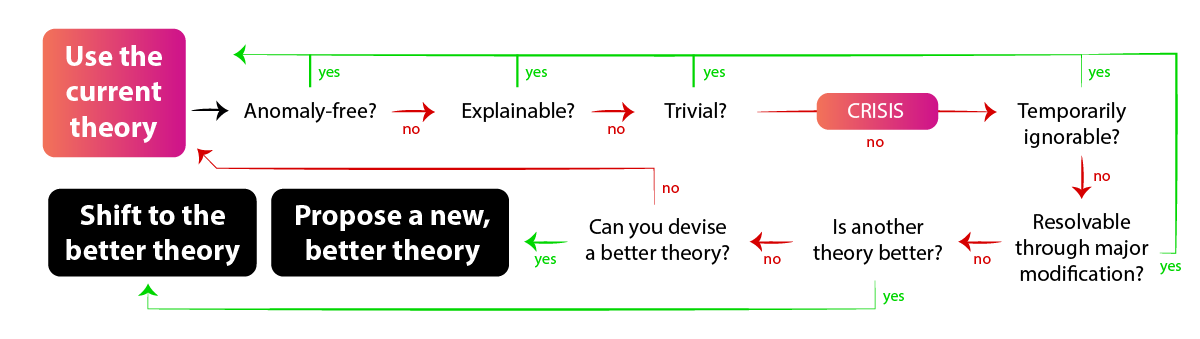

Though Kuhn has often been mischaracterized as a relativist, his account has a clear structure and pattern that scientists have historically followed, and it applies to education researchers as well. Essentially, researchers will persist in using an existing theory until it faces a crisis. If the crisis is serious enough, they will look for competing theories (or create new ones), but they will switch only if an alternative is better able to deal with the crisis than the current theory. Such "paradigm shifts" to new theories are painful but necessary for scientific progress to continue.

To illustrate how the crisis cycle works, Figure 2 walks you through the questions that a researcher would ask while using a theory in the day-to-day activities of normal science. All goes well until an anomaly arises. At that point, the researcher must consider whether the anomaly is explainable by the current theory; if so, she should stay with the current theory. If not, she should consider whether the anomaly is trivial or important; if it's trivial, she should stay with the current theory. If it's important, then a crisis has arisen, and the researcher must decide whether the crisis has to be dealt with now or whether it would be okay to postpone dealing with it until later (perhaps when better tools are available). If it must be dealt with now, she must consider whether the original theory could be modified to address the anomaly; if so, she should stay with the modified theory. If not, she should consider whether any other existing theories better explain the anomaly; if so, she should make a paradigm shift to the alternative theory. If not, she should get into the business of theory generation and decide whether she can abductively provide a better theory that accounts for the anomaly (and everything else that the current theory accounted for). If so, she should shift to her new theory; if not, she should stay with the current theory.
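Because the question sequence above is essentially a decision procedure, it can be sketched as a short Python function. This is a minimal illustration of our own, not Kuhn's formalism: the `Anomaly` class, its field names, and the returned labels are hypothetical. The text also does not say what happens after a crisis is postponed, so the sketch assumes the researcher simply stays with the current theory for now.

```python
from dataclasses import dataclass


@dataclass
class Anomaly:
    """Hypothetical answers a researcher gives about one anomaly."""
    explainable_by_current_theory: bool
    important: bool
    must_address_now: bool
    theory_can_be_modified: bool
    better_alternative_exists: bool
    can_abduce_better_theory: bool


def crisis_cycle_decision(a: Anomaly) -> str:
    """Ask the crisis-cycle questions in order and return the resulting choice."""
    if a.explainable_by_current_theory:
        return "stay with current theory"
    if not a.important:
        return "stay with current theory"  # trivial anomaly
    # An important anomaly the theory cannot explain means a crisis has arisen.
    if not a.must_address_now:
        return "stay with current theory for now"  # assumption: crisis postponed
    if a.theory_can_be_modified:
        return "stay with modified theory"
    if a.better_alternative_exists:
        return "shift to alternative theory"
    if a.can_abduce_better_theory:
        return "shift to newly generated theory"
    return "stay with current theory"  # no better option is available


if __name__ == "__main__":
    # Hypothetical case: an important, pressing anomaly that the theory cannot
    # absorb, with an existing rival theory that explains it.
    case = Anomaly(
        explainable_by_current_theory=False,
        important=True,
        must_address_now=True,
        theory_can_be_modified=False,
        better_alternative_exists=True,
        can_abduce_better_theory=False,
    )
    print(crisis_cycle_decision(case))  # -> shift to alternative theory
```

Because each question returns early, the function keeps some version of the current theory at five of its seven exits, mirroring the conservative default built into the cycle.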

Fig. 2. An application of Kuhn's historical crisis cycles to our own theory adoption, creation, and rejection

By going through this process, researchers will generally adopt a conservative approach that maintains the status quo of current theories and will shift to new theories only if there is a clear, present, and vital need to do so (prioritizing the avoidance of Type I errors over Type II errors, i.e., preferring to wrongly keep an old theory rather than wrongly adopt a new one). But this also means that new ideas and theories are (at least in theory) met with skepticism unless there is a clear need for them. After all, why propose a new theory of learning, identity, curriculum development, or social interaction if our current theories are working fine? And why reject our current theories if there are no better alternatives to move to?

Though the use of theory is necessary for doing research, the way we adopt and reject theories leads to a number of problems that education researchers need to be aware of, including the consensus fallacy, confirmation bias, degenerative science, the Barnum effect, and irrationality. After addressing each of these problems, I'll close the chapter by providing some guidance on how education researchers should responsibly adopt or reject theory and by highlighting what some key components of good theory might be.

Learning Check

The revolution view of scientific progress proposes which of the following:

- There is no such thing as scientific progress

- Science progresses over time

- Science progresses through steady, incremental improvements

- Science progresses through paradigm shifts

According to Kuhn, which of the following must happen before a theory is rejected?

- An anomaly (or conflicting evidence) to the theory must arise

- The theory must enter a state of crisis

- The scientific community must reach a consensus regarding the theory's ability to account for emerging evidence

- A better theory must be proposed

Consensus Fallacy

The consensus fallacy occurs when people mistakenly believe that a conclusion is true because it is popular, or that, given two options, we should go with the more popular one. The problem with the consensus fallacy is obvious: the truth of a claim has nothing to do with its popularity, and major advancements in science have historically happened when alternatives rose up in opposition to popular theories (such as Copernican heliocentrism challenging geocentrism).

Popularity itself is not evidence. But though this truism is obviously reasonable as a point of formal logic, in the messy, complex real world of research we often do rely upon consensus to move forward. For instance, many arguments in popular media supporting the theory of anthropogenic climate change use premises like "97% of climate scientists agree that human carbon production is causing glacial melting." Or, in the case of learning styles, "most teachers believe in learning styles, so they must exist." Such arguments assume that if most experts or practitioners agree on something, then it must be true. However, we wouldn't accept the same argument in other settings, such as "65% of Americans believe in the supernatural, so the supernatural must exist."

One rationale for this discrepancy is that in highly technical fields, popularity among experts serves as a proxy for evidence when non-experts might struggle to know and weigh the evidence themselves. Not everyone has access to all the data points, and not everyone has the training necessary to interpret the data in reasonable ways, which means that we may at times feel justified in relying on consensus among experts when a field is too esoteric (e.g., not everyone sees what goes on in a teacher's classroom) or complicated (e.g., not everyone can weigh historical temperature data points across the globe).

But this still isn't a completely satisfying answer, because we wouldn't consider every argument from expert consensus valid. "Most theologians believe in God, so God must exist" is as ridiculous as "Most mathematicians believe that trigonometry is essential for leading a fulfilled life, so all kids should be taught it." Another similarly absurd argument would be "Most evolutionary biologists do not believe in God, so God must not exist." If someone made these arguments to me, I'd probably respond with something like this: "Of course theologians believe in God, and mathematicians believe in the importance of math! That's their job! That's what they're paid to do!" That is, even in the case of expert opinion, we don't always believe that consensus is an appropriate proxy for evidence, especially when experts' positions or theoretical assumptions compromise their ability to give unbiased guidance on a particular topic or when experts overstep the limits of their expertise (such as evolutionary biologists making metaphysical claims or mathematicians making claims about social needs and wellbeing, cf. Feyerabend, 1975).

Thus, if we are relying upon consensus in research, we should at least ensure (1) that the experts we rely upon are qualified to draw the conclusions they are drawing (and are not overstepping their bounds), (2) that the theoretical assumptions, biases, and conflicts of interest they are subject to in their positions are not negatively influencing their ability to draw reasonable conclusions, and (3) that we only treat consensus as a shorthand proxy for evidence and not as evidence itself (meaning that counter-evidence should be carefully considered).

In practice, researchers rely upon the expertise of others to make complex projects possible. If, for instance, I am trying to make a smartphone app to teach kids how to read basic words, I should of necessity rely upon the expertise of early childhood literacy colleagues to inform its pedagogical design. However, I would not rely on their expertise to supplant my own in matters that draw upon my own realm of expertise, such as privacy, usability, database architecture, and so forth.

This all means that in our own fields of expertise, consensus should never be a determining factor of whether to accept or reject a theory, because (as experts) we should be aware of and be able to weigh evidence regarding the theories before us, and we should never treat any theory as being beyond critique merely because our colleagues believe it. Yet, we should also exercise some level of intellectual humility with regard to the limits of our own expertise and recognize that soliciting and relying upon consensus among experts in other fields may be a practical necessity.

Learning Check

Which of the following would be examples of the consensus fallacy?

- 98% of climate scientists agree that anthropogenic climate change is occurring, so global warming is real

- Most people believe in God, so God must exist

- Microbiologists study single-cell organisms and agree that organisms act for their own genetic survival, so all of human action, morality, and decision-making is the result of self-interest and genetic survival

- Most teachers believe in learning styles, so learning styles must be an accurate explanation of how people's brains work

If we are going to commit the consensus fallacy to accept a conclusion (without fully weighing and understanding the evidence ourselves), what should we ensure?

- That the conclusion agrees with our biases

- That the group making the consensus has legitimate expertise

- That the conclusion does not overstep the limits of the group's expertise

- That the conclusion is not merely an expression of a fundamental bias of the group

Confirmation Bias

Confirmation bias means that if we already believe a theory, then we will tend to collect and interpret evidence that agrees with the theory while ignoring evidence that contradicts it. Kuhn (1996) explained that scientists fall into this trap with theory when they "take the applications [of the theory] to be the evidence for the theory, the reasons why it ought to be believed" (p. 80). They do not recognize that what they observe will be dictated by what they are expecting to observe. This prevents the emergence of "alternative interpretations" or the discussion of "problems for which scientists have failed to produce theories," meaning that whole swathes of phenomena are ignored or pigeonholed in inappropriate ways (p. 81).

This has been a major and ongoing problem in theoretical psychology and has reverberations in the education literature, as experimental results that did not conform to behaviorist models were typically ignored, or tests were modified until results conformed with them (Greenwald, Pratkanis, Leippe, & Baumgardner, 1986; MacKay, 1988). In response, both psychology and education produced competing models (e.g., cognitivism, constructivism, social constructivism) to address important phenomena being ignored or inappropriately explained by behaviorism (e.g., higher-order reasoning, individual differences). This problem of confirmation bias inherent in operating from any dominant theory suggests that practitioners will only see in the world that which their theories allow them to see and also thereby suppress new ideas as being "necessarily subversive of [the accepted theory's] basic commitments" (Kuhn, 1996, p. 5).

To illustrate, let's circle back to learning styles. If I'm a teacher who believes in learning styles, I will begin to classify my students (at least in my mind) according to these styles and will use observations in my classroom to solidify both my classifications and the theory itself. If I notice that Juan does better on a test that has illustrations, I might think "hmm ... Juan must be a visual learner." If I then give a lecture and Juan falls asleep, I might think "hmm ... Juan is definitely a visual learner, because he just wasn't drawn into the aural modality of the lecture." If I show a video and he falls asleep again, then I'll definitely be convinced: "Yes, Juan is a visual learner. All the evidence proves it!"

If a colleague then shows me an article debunking learning styles, I'll protest the findings on the basis that I know learning styles exist, because Juan is an obvious example of a student with a clear learning style. Never mind that these observations could be explained by any number of other theories and facts, such as that Juan is still developing proficiency in the language of instruction, that he got less sleep than his classmates the night before, or that I'm simply a boring lecturer. I have seen a learning style manifested in Juan because that is what I was looking for. The theory has, therefore, manipulated my observations and confirmed itself through that very manipulation.

This phenomenon is not unique to learning styles or to education. We all generally tend to find examples of whatever it is we're looking for. If you believe in God, you'll see evidence for God. If you believe in phrenology, you'll see evidence of how people who have wronged you seem to have similarly shaped heads. If you believe in astrology, you'll witness how your zodiac sign affects your life (and ignore how it doesn't). If you believe the world should be just, you'll see evidence of injustice. If you believe the world is inequitable, you'll see evidence of inequity. In this way, theories are powerful lenses that let us find whatever it is we are looking for, just as a microscope allows us to find a bacterium or a telescope allows us to find a star.

It's not bad that theories allow us to see things; that's what they're supposed to do. What is problematic, though, is when we rely upon what the theory is showing us as the guiding evidence for why we should believe the theory. Theories that are self-confirming have a circular logic that doesn't make them amenable to outside verification or critique.

In response to this problem, Popper (1959) and other critical rationalist philosophers of science argued that the scientific enterprise must critically evaluate its relationship to the world and the limits of empirical observation alone. Turning science on its head, Popper argued that the testing of a theory can only occur after the theory has been accepted and that such testing must rely upon logical falsification rather than evidence-building.

Popper explained that, unlike non-scientific claims, scientific theories must be amenable to the rules of logic and that logical tests may be applied to specific statements derived from a theory to "falsify the whole system" (p. 56). In this way, singular anomalies contradicting a theory could be used to test the theory's strength and either disprove it or limit its universalizability. From this perspective, to be scientific, a theory must be testable (i.e., provide predictions that could be proven false), and the less testable a theory is, the less it should be trusted as a good scientific theory.

This process of falsification, Popper argued, is a surer way of determining the accuracy and value of a theory, because any theory can explain the world when it has the power to shape what we see through it. Good theories, though, make predictions that we can test to see whether the theory is false. Theories that don't make predictions, then, are non-scientific. Theories that make predictions that turn out to be true represent good science for the time being but may be proven false by a future prediction. But theories that make predictions that turn out to be false are rejected as untrue.

Thus, evidence in favor of a theory doesn't determine its scientific value. All that matters is whether the theory makes predictions that are proven false, and if it makes no predictions that could be proven false, then it is a non-scientific theory.

In the case of learning styles, we can make very clear predictions of what should happen if learning styles exist, which allows the theory to pass Popper's falsifiability criterion, making it a scientific theory. We could, for instance, expect that if learning styles exist, then teaching students to their preferred styles should produce learning improvements. If this prediction doesn't pan out in rigorous studies, though, then, based on the requirement of falsification, we would have a strong justification to reject the theory, because it has been proven inaccurate no matter how much confirmatory evidence we might have collected along the way.
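Popper's three-way classification, as summarized above, can be expressed as a small Python function. This is a hypothetical sketch, not anything Popper wrote: each prediction's outcome is recorded as `True` (held up), `False` (failed), or `None` (not yet tested), and the names `popper_verdict` and `PopperVerdict` are our own.

```python
from enum import Enum
from typing import Optional, Sequence


class PopperVerdict(Enum):
    NON_SCIENTIFIC = "non-scientific: makes no falsifiable predictions"
    NOT_YET_FALSIFIED = "good science for the time being"
    FALSIFIED = "rejected: at least one prediction proven false"


def popper_verdict(outcomes: Sequence[Optional[bool]]) -> PopperVerdict:
    """Classify a theory from the outcomes of its falsifiable predictions.

    Each entry is True (prediction held), False (prediction failed),
    or None (prediction not yet tested).
    """
    if len(outcomes) == 0:
        # No falsifiable predictions at all: outside science on Popper's criterion.
        return PopperVerdict.NON_SCIENTIFIC
    if any(outcome is False for outcome in outcomes):
        # A single failure is decisive; confirmations cannot outweigh it.
        return PopperVerdict.FALSIFIED
    return PopperVerdict.NOT_YET_FALSIFIED


if __name__ == "__main__":
    # Hypothetical record: 50 confirming results plus one failed prediction.
    print(popper_verdict([True] * 50 + [False]).value)
    # -> rejected: at least one prediction proven false
```

The number of confirmations is deliberately absent from the logic; that absence is the point of the criterion, and it is exactly what the critiques of Popper push back on.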

Learning Check

According to Popper, what is the central characteristic of "good" scientific theories?

- They can be proven true

- They can be proven false

- They can neither be proven true nor false

- They are found to be useful

Degenerative Science

The main critique of Popper's falsification criterion is that it sets "unrealistic standards for sound science" in many practical settings (Mercer, 2016, p. 1) and that he was making an argument for how science should be done in a purist form rather than how it is done in practice. Addressing the issue of falsification directly, Kuhn (1996) explained that "if any and every failure to fit [a falsification] were ground for theory rejection, all theories ought to be rejected at all times" (p. 146). Similarly, Mulkay and Gilbert (1981) explained that "Negative results ... may incline a scientist to abandon a hypothesis, but they will never require him to abandon it, on Popper's own admission. ... Thus the utter simplicity and clarity of Popper's logical point are lost as soon as he begins to take cognizance of some of the complexities of scientific practice and as soon as he makes the transition from his ideal scientific actor to real scientists engaged in research" (p. 391).

In other words, Popper idealistically gave a single negative result the power to topple an entire theory that might have mountains of confirmatory results, but in the real world, scientists balance falsifications and confirmations in a complex way to determine how long to responsibly stay with a theory that is starting to show some negative results.

Following a similar strand of reasoning, Lakatos (1974) argued that asking whether a theory is true or false is the wrong question. Rather, theories, like lenses, are tools for seeing novel things clearly and therefore can be only progressive (empowering us to learn and see more) or degenerative (failing to empower us to learn and see more). Falsification still plays a role in this classification but serves more as a holistic, continual check on the overall health of a theory.

To explain, one example Lakatos gave was a comparison of two prominent theories from divergent fields: Marxism and Newtonian physics. Once a young Communist leader himself, Lakatos had considered himself a disciple of Marx and zealously directed many Communist efforts in Hungary until he was forced to flee the country under Soviet threat. He took refuge in the United Kingdom, earning his doctorate at Cambridge and later becoming a professor at the London School of Economics and a prominent philosopher of science and mathematics. Revisiting Marxism later in life, Lakatos (1974) explained:

Has … Marxism ever predicted a stunning novel fact successfully? Never! It has some famous unsuccessful predictions. It predicted the absolute impoverishment of the working class. It predicted that the first socialist revolution would take place in the industrially most developed society. It predicted that socialist societies would be free of revolutions. It predicted that there will be no conflict of interests between socialist countries. Thus the early predictions of Marxism were bold and stunning but they failed. Marxists explained all their failures: they explained the rising living standards of the working class by devising a theory of imperialism; they even explained why the first socialist revolution occurred in industrially backward Russia. They "explained" Berlin 1953, Budapest 1956, Prague 1968. They "explained" the Russian-Chinese conflict. But their auxiliary hypotheses were all cooked up after the event to protect Marxian theory from the facts. The Newtonian programme led to novel facts; the Marxian lagged behind the facts and has been running fast to catch up with them.

Lakatos considered the Marxism of his day to be degenerative science because its novel predictions consistently failed to come about, and rather than rejecting the theory, adherents devised ad hoc explanations for each failure, essentially propping up the theory with makeshift addenda.

Newtonian physics, on the other hand, made novel factual predictions that could not have been arrived at without the aid of the theory, and those predictions were consistently confirmed, making it a progressive theory for scientific work.

To apply this same principle to education research, it may be too purist to follow Popper's falsification criterion strictly, discounting a theory simply because it produced one false prediction. However, as theories are applied over time, a pattern of falsification can emerge that should trouble researchers. For instance, if studies show that predictions made by learning styles theory are false, we might explain away one or two failed predictions on the basis of misapplication or extraneous factors ("auxiliary hypotheses," in Lakatos's terms), but if predictions continue to fail, we should question the value of adhering to a theory that is clearly degenerative in its predictive capabilities. This is especially true when alternative theories might exist that can explain results more accurately and consistently, such as multimodality, self-determination, and a host of others in the case of learning styles.

Learning Check

According to Lakatos, what is the central characteristic of degenerative scientific theories?

- They make a true prediction

- They make many true predictions

- They make a false prediction

- They make many false predictions

Either/Or Fallacy

Building on this last point, because the world is complex and messy, many different theories might be proposed to explain any given phenomenon. By focusing too heavily on a single theory and ignoring the alternatives, researchers can quickly fall into the either/or (or false dichotomy) fallacy, assuming that there are only two possible theoretical explanations for a given phenomenon.

This fallacy is sometimes less an honest scientific error than a persuasive tactic that education researchers use to bolster support for their own theoretical stances. In Kuhn's scenarios, there are always two competing theories (the dominant theory and the challenger), and the dominant theory will carry the day unless the challenger can show a capacity to outperform it in predicting and explaining the world. In education research, though, researchers are often hard-pressed to articulate which dominant theory they are actually responding to.

For this reason, you will rarely see critiques of formal theory in education research. Instead, you will see veiled references to "the status quo," "one-size-fits-all," "lecture-based," "pencil-and-paper," or the "industrial model," or a nondescript catch-all term like "traditional" serving as a strawman counterpoint to the researcher's proposition. Such framing is often used to carve out a place for new theoretical approaches without seriously considering the actual state of the field, thereby ignoring theoretical plurality and alternative explanations.

To return to learning styles, if you showed me study results falsifying the theory, I might respond incredulously with an either/or retort like "So, you think kids are all the same?!" or "You think we should just use one-size-fits-all curricula?!" Or I might ignore the results and heroically proclaim, "I don't care what the so-called studies say; I'm going to differentiate for my students' diverse learning needs anyway." Yet in doing so, I would be showing a commitment not to differentiation but merely to one limited theory of differentiation, and by raising only these either/or alternatives, I would be implying that any approach that ignores learning styles ignores the needs of students.

However, learning styles is not the only theory that empowers us to view students as unique individuals with unique needs. Consider three diverse students: Juan (a first-generation, Christian, undocumented English language learner from Guatemala), Suzy (a white, atheist, middle-class introvert), and Sofu (a physically active extrovert and child of Christian refugees from Nigeria). It would be absurd to assume that these three students have the same learning needs or that they will respond identically to the stories you read or the videos you watch in class. But it is equally absurd to assume that the biggest differences between them will be that one prefers pictures (visual), one prefers words (aural), and one prefers physical movement (kinesthetic). That is, learning styles theory is not flawed because it requires us to differentiate for student needs; rather, it is flawed because it does not require us to differentiate enough, in the ways that matter for our students. It ignores socioeconomic, historical, linguistic, and a variety of other factors that influence a student's ability to learn.

Yet whenever theory comes up, whether in research or in more general contexts, specific theories are typically compared only against vague, bogeyman-like alternatives. Perhaps this happens at times because we do not know what the alternatives actually are, but at other times such phantom comparisons seem to be made for simple persuasive effect.

Barnum Effect

Another important, related problem with theory adoption mirrors a common psychological phenomenon known as the Barnum Effect. Named after the famous showman P. T. Barnum, the effect describes a technique that charlatans, such as magicians, mediums, and crystal ball gazers, use to convince people that they have special powers and insight into strangers' lives. They do this, in part, by appearing to reveal "secrets" that only the stranger should know while actually making broad, generic statements about human nature and experience that could apply to anyone.

For instance, a psychic medium might tell a client that she "longs for freedom," "doesn't like to feel suffocated," and "is focused on success," and the client might believe that the medium has special powers of insight because she keenly feels these statements to be true about herself. She would likely leave her session feeling energized and convinced that "yes, I am a woman who longs for freedom," not realizing that each of these statements is true of just about anyone and that she is being duped into placing unjustified trust in the medium. In such situations, victims passionately believe the charlatan because the statements are universally true, generic, and obvious, and because they align with the victims' biases and assumptions about the world. And once victims believe that the charlatan has a gift of divination, they gradually become less and less critical of subsequent statements.

Theories in education research can be structured in this same fortune-cookie-like way. Broad theories can be proposed that are impossible to refute, not because they are strong and accurate but because they are fluid, generic, and untestable. In other words, vagueness creates a false perception of accuracy, and once we believe in the generalist theory, we begin to uncritically accept any addenda taught by its adherents as inspired and obviously true.

Learning styles is an interesting case of this because individual scholars who propose the theory can be quite descriptive and precise in their formulations (e.g., connecting specific styles to activity in particular areas of the brain), whereas companies that sell commercial products to schools for testing and addressing learning styles tend to articulate it in broad, unfalsifiable terms (e.g., "kids are different and have different needs"). Once such generalist theories are believed, all sorts of addenda can be appended without critical review. Learning style is piled upon learning style until people uncritically accept that some students are inherently "naturalistic," some "philosophical," and some "intrapersonal," ad infinitum, without any reasonable evidence beyond their passionate commitment to the base, generalist theory.

Proponents of many generalist theories, such as feminism, critical race theory, and Marxism, can fall into this same trap: adherents might believe in the obvious generalist theory (e.g., "that the sexes should be socially, economically, and politically equal") and then also uncritically believe more specific applications, manifestations, or addenda to the theory without reasonable confirmatory evidence or legitimate consideration of contradictions (e.g., "that girls' outperformance of boys in reading and writing must be a result of patriarchal oppression").

In contrast, Kuhn explained that theories must be limited in "both scope and precision" in order to be useful (Kuhn, 1996, p. 23). Applying this to education, Burkhardt and Schoenfeld (2003) argued that "most of the theories that have been applied to education are quite broad. They lack what might be called 'engineering power' ... [or] the specificity that helps to guide design, to take good ideas and make sure that they work in practice" (p. 10). For these reasons, "education lags far behind [other fields] in the range and reliability of its theories" (p. 10), because we have overestimated the strength of our theories and allowed them too broad a scope. This leads to a precarious situation in which we might uncritically believe any eventuality or formulation of a generalist theory simply because the base theory is so obviously true.

Learning Check

To avoid the Barnum Effect, theories in education research should be:

- Broad and generalist

- Focused and specific

- Useful and progressive

- Accurate and universal

Irrationality

Finally, we may be influenced to adopt or reject a theory for purely irrational reasons, such as intuition, hunches, opportunism, and aesthetics. Feyerabend (1975) argued that, far from being an aberration, this is actually the historical norm of science: revolutionary new theories have regularly been adopted and proliferated for no other reason than that they appealed to "valuable weaknesses of human thinking" (p. 126), requiring researchers to use "propaganda, emotion, ad hoc hypotheses, and appeal to prejudices of all kinds" to develop them and encourage their adoption (p. 119).

To illustrate this point, Feyerabend carefully chronicled Galileo's attempts to convince the religio-scientific community of his day that the Copernican heliocentric theory of the universe was true and that geocentric theories were false. Based on the evidence available at the time, there was no legitimate scientific reason to reject geocentric theories, especially because existing geocentric theories (like the Tychonic system) were more accurate, complete, and non-contradictory than the Copernican theory. For instance, if the Earth orbits the Sun, the nearer stars should appear to shift position over the course of a year (stellar parallax), yet no such shift could be observed. The main advantage Copernicanism had over the Tychonic system at the time was that it was simpler and more elegant, which seemed to outweigh the need for evidence and accuracy in Galileo's mind. Stellar parallax was not detected until nearly two centuries after Galileo's death, and without that key piece of evidence, Copernicanism was less empirically accurate than existing geocentric alternatives. Yet today we celebrate Galileo's commitment to Copernicanism not because it was the scientifically reasonable position to hold at the time but because he held to simplicity and elegance (and ended up being right) even when the evidence of the day pointed the other way.

Some philosophers of science have built upon this point and gone further, questioning the truth-value of theories altogether and making irrationality in theory adoption not only a historical reality but also a necessity. In psychology, for instance, Greenwald, Pratkanis, Leippe, and Baumgardner (1986) explained that "no theory can be proven true by empirical data" and that it is also "impossible to prove one false" (p. 226). This suggests that the quality of a theory may rest primarily on its value to humans via irrational factors rather than on its accuracy or inherent truth-value.

In the case of learning styles, this may explain why the theory has proliferated despite contradictory evidence and inconsistencies. It appeals to Feyerabend's "valuable weaknesses" or "noble prejudices" of humans by being intuitive and simple, qualities that adopters seem to value more than research evidence.

Similar dynamics surround many other theories. One powerful example occurred while I was attending an academic conference at Oxford University. I sat in a small audience while a presenter passionately shared his research study and then, preempting any critiques or questions, flatly explained: "I know that you may argue with my theoretical stance, but I've lived it, I've experienced it, so it doesn't matter what you think." And he was right. I couldn't argue with him, not because his rationale was strong, but because he had systematically rejected rationality as an expectation for theory adoption.

Though such irrational approaches to theory seem to be anti-scientific (or, at best, non-scientific), they are nonetheless prevalent in education research. A first-generation college graduate might adopt critical race theory to study a K-12 school because it resonates with his experiences as a minoritized child, not because it is the most effective way of addressing the problem he is seeking to solve. An educational technology researcher might adopt a particular technology integration model because it is depicted with simple, intuitive, and colorful graphics, not because it is logically or pragmatically superior to alternatives. Or a behavioral scientist might adopt a granting agency's preferred theory of student behavior to improve chances for funding, not because it is accurate, helpful, or meaningful for understanding student behavior.

Clearly, being human, education researchers are influenced and motivated by many irrational factors that lead them to adopt some theories (like those that appeal to their noble and ignoble prejudices) while rejecting others (like those presented with a variety of typos). As education researchers, though, we should consider to what extent we should allow irrationality to guide such decision making and to what extent we should strive to be more critical and rational.

Good Theory

Given all of these difficulties of theory adoption, education researchers might understandably lament, "What are we to do?" Building on Kuhn (1996), Rogers (2003), and others, I have previously outlined some considerations that may help education researchers identify "good" theory (Kimmons, Graham, & West, 2020; Kimmons & Hall, 2016). For our present purposes, the most important of these seem to be the following: (1) clarity, (2) compatibility, (3) fruitfulness, (4) scope, and (5) ability to disprove/reject.

First, good education research theories should be clear, meaning that they are "simple and easy to understand conceptually and in practice" (Kimmons & Hall, 2016), thereby "eschewing explanations and constructs that invite confusion, misinterpretation, and 'hidden complexity'" (Kimmons, Graham, & West, 2020). The world is messy, but theories only allow us to make sense of it and to progress as a field if they themselves are clear and rigid. Clear theories can be tested against emerging evidence, reevaluated, and revised or rejected as needed. They allow for falsifiability and resist degenerative science and non-science by giving us concrete expectations.

Unclear, messy, esoteric, and amorphous theories, on the other hand, cannot be nailed down to specifics (often in either their form or their eventualities), meaning that they cannot be tested for accuracy. A theory that can be adapted to explain anything can be confirmed by anything and contradicted by nothing. Such Procrustean obfuscation can appeal to researchers who want their theories to survive no matter what the evidence actually says, but it is ultimately detrimental to the field because it allows theories to persist far beyond their shelf life and prevents us from ever moving past the empirical ceiling the theory necessarily establishes.

Second, a good theory is compatible with good practice (Kimmons & Hall, 2016). The whole purpose of having a theory is to inform practice, but not all practice is based on theory. This means that we may have a variety of good practices in education and research that are not based on theory, but if we are ever considering a theory to inform our practice, we should at least begin by making sure that the new theory doesn't make our practice any worse. As Kuhn explained, theories are never adopted in a vacuum but are rather intentional shifts responding to emergent needs. Education theories come in all shapes and sizes; some are focused on design, others on understanding. Yet in all cases, theories should improve, rather than merely replace, existing ways of working. This means that theory adoption always involves comparison: we must ensure that any shift we make retains existing good practices and continues to make our craft better.

Third, Kuhn (1977) proposed that good scientific theories should be fruitful, meaning that they should "produce new findings and discoveries," thereby helping researchers "to reach solutions to research puzzles" and to solve problems (Ivani, 2019, p. 4). As stated earlier, even a theory that is not true (or cannot be proven true) may still be useful — Newton's laws are not always true, but they are extremely useful. One way that this happens is by helping us to make predictions that come true. Another way is by helping us to make progress in other fields or areas of inquiry that are not central to the theory. Without such fruitfulness, we run the risk of falling into Lakatos's depiction of degenerative science, where all of our work focuses on merely propping up the theory rather than learning from it.

Fourth, theories should be scoped properly to be useful. Broad, sprawling theories lack the engineered precision necessary to actually inform action, and they persist not because they are strong (having a solid, finite backbone) but because they are weak, oozing amorphously into vast realms of human experience so that we can see them everywhere without actually learning anything.

And finally, adopting a theory should never be a terminal decision. That is, we should always operate on the assumption that any theory might be wrong or that there might come a time when it is no longer the most useful way of approaching our problems. We should be willing to reject any theory, given sufficient evidence and alternative ways of approaching our problems, rather than holding onto it with cult-like fanaticism. Theories are supposed to be our tools, not our masters. They serve us; we do not serve them. A fanatic might point to their unwillingness to reject a broken or unhelpful theory as a sign of heroic conviction, but in actuality, such an approach only stymies our collective progress toward addressing education problems that we all want to solve.

References

Burkhardt, H., & Schoenfeld, A. H. (2003). Improving educational research: Toward a more useful, more influential, and better-funded enterprise. Educational Researcher, 32(9), 3-14.

Coffield, F., Moseley, D., Hall, E., & Ecclestone, K. (2004). Learning styles and pedagogy in post-16 learning: A systematic and critical review. London: Learning and Skills Research Centre.

Feyerabend, P. K. (1975). Against method: Outline of an anarchistic theory of knowledge. London: Verso.

Glaser, B. G., & Strauss, A. L. (2017). The discovery of grounded theory: Strategies for qualitative research. New York: Routledge.

Greenwald, A. G., Pratkanis, A. R., Leippe, M. R., & Baumgardner, M. H. (1986). Under what conditions does theory obstruct research progress? Psychological Review, 93(2), 216-229.

Husmann, P. R., & O'Loughlin, V. D. (2019). Another nail in the coffin for learning styles? Disparities among undergraduate anatomy students’ study strategies, class performance, and reported VARK learning styles. Anatomical Sciences Education, 12(1), 6-19.

Ivani, S. (2019). What we (should) talk about when we talk about fruitfulness. European Journal for Philosophy of Science, 9(1), 4.

Kimmons, R., & Hall, C. (2016). Emerging technology integration models. In G. Veletsianos (Ed.), Emergence and innovation in digital learning: Foundations and applications. Edmonton, AB: Athabasca University Press.

Kimmons, R., Graham, C., & West, R. (2020). The PICRAT model for technology integration in teacher preparation. Contemporary Issues in Technology and Teacher Education, 20(1).

Kraemer, D. J., Rosenberg, L. M., & Thompson-Schill, S. L. (2009). The neural correlates of visual and verbal cognitive styles. Journal of Neuroscience, 29(12), 3792-3798.

Kuhn, T. S. (1977). Objectivity, value judgment, and theory choice. In T. S. Kuhn, The essential tension: Selected studies in scientific tradition and change (pp. 320-339). Chicago: University of Chicago Press.

Kuhn, T. S. (1996). The structure of scientific revolutions (3rd ed.). Chicago, IL: The University of Chicago Press.

Lakatos, I. (1974). Science and pseudoscience. In G. Vesey (Ed.), Philosophy in the open. Open University Press.

MacKay, D. G. (1988). Under what conditions can theoretical psychology survive and prosper? Integrating the rational and empirical epistemologies. Psychological Review, 95(4), 559–565. https://doi.org/10.1037/0033-295X.95.4.559

Macdonald, K., Germine, L., Anderson, A., Christodoulou, J., & McGrath, L. M. (2017). Dispelling the myth: Training in education or neuroscience decreases but does not eliminate beliefs in neuromyths. Frontiers in Psychology, 8, 1314.

Mercer, D. (2016). Why Popper can’t resolve the debate over global warming: Problems with the uses of philosophy of science in the media and public framing of the science of global warming. Public Understanding of Science, 27(2). doi:10.1177/0963662516645040

Mulkay, M., & Gilbert, G. N. (1981). Putting philosophy to work: Karl Popper’s influence on scientific practice. Philosophy of the Social Sciences, 11(3).

Newton, P. M., & Miah, M. (2017). Evidence-based higher education — Is the learning styles 'myth' important? Frontiers in Psychology, 8, 444.

Pashler, H., McDaniel, M., Rohrer, D., & Bjork, R. (2008). Learning styles: Concepts and evidence. Psychological Science in the Public Interest, 9(3), 105-119.

Popper, K. (1959). The logic of scientific discovery. Routledge.

Rogers, E. M. (2003). Diffusion of innovations. New York, NY: Free Press.

Willingham, D. T. (2012). When can you trust the experts? How to tell good science from bad in education. San Francisco: Jossey-Bass.

Willingham, D. T., Hughes, E. M., & Dobolyi, D. G. (2015). The scientific status of learning styles theories. Teaching of Psychology, 42(3), 266-271.